Using a Secrets Manager in an AWS Lambda Function in a Private Network

ACM.317 Lack of sufficient logging and generic error messages makes troubleshooting Lambda timeouts complicated

Part of my series on Automating Cybersecurity Metrics. Lambda. Network Security. GitHub Security. Container Security. Deploying a Static Website. The Code.

In the last post I added a personal access token in GitHub and granted access from the NAT in my private network by adding the AWS Elastic IP Address for the NAT into the GitHub Organization security settings.

In this post I want to access Secrets Manager in an AWS Lambda function in a private network. Initially I thought I’d be retrieving the secret and cloning the repo all in one post, but as it turns out, AWS has made troubleshooting Lambda functions quite complicated due to a lack of sufficient error messages. I have some recommendations to fix those messages at the bottom, but in the meantime, you can see here how I resolved the problem.

I already added permission for the Lambda function execution role to access the secret.

Ensure the Lambda role includes permission to read the secret

Recall that I added permission to view a secret in the Lambda execution role, but only if a secret is required.

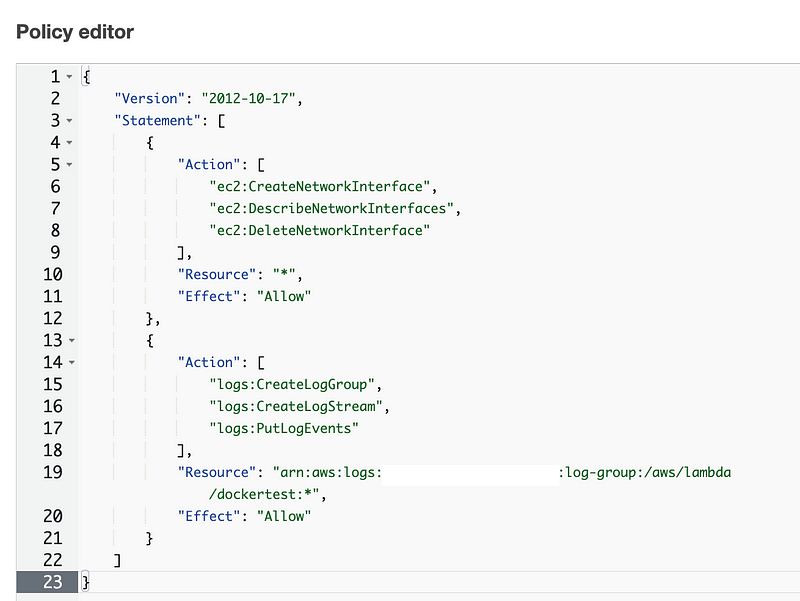

Take a look at the policy in the AWS console and it appears that the role did not get deployed with the optional secret permissions.

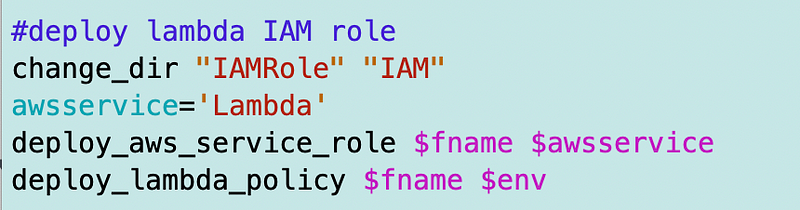

Recall that the deploy script for the Lambda function deploys the role:

SecurityMetricsAutomation/Apps/Lambda/stacks/functions/dockertest

But the role templates exist under IAM.

There’s a generic role policy for a service here:

SecurityMetricsAutomation/IAM/stacks/Role/cfn/AWSServiceRole.yaml

And there’s a Lambda role policy here:

SecurityMetricsAutomation/IAM/stacks/Role/cfn/Policy/LambdaPolicy.yaml

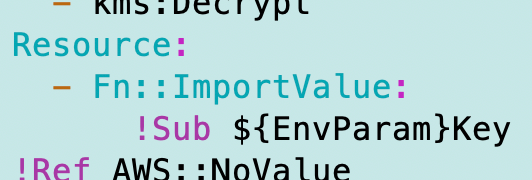

The Lambda role policy looks like this:

We need to set the HasSecretParam to true when we deploy the role.

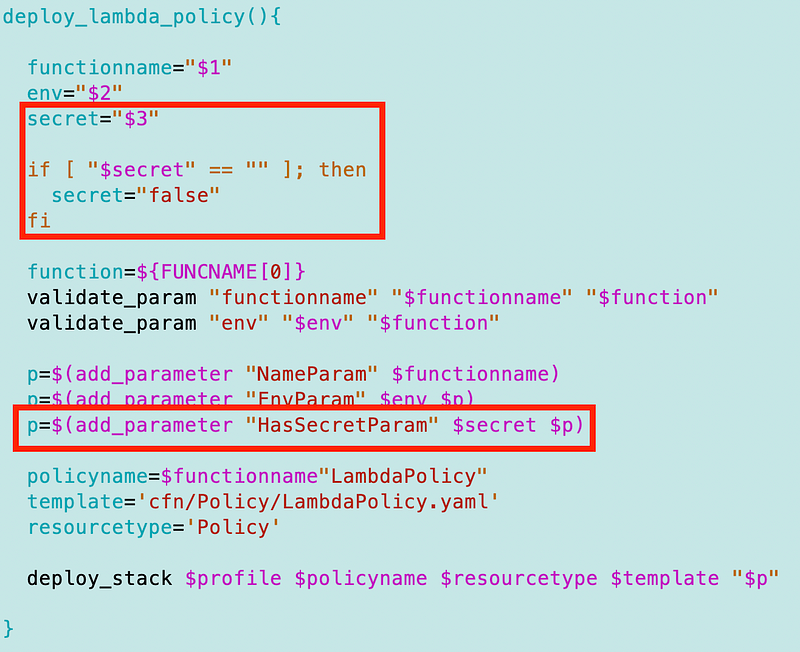

I alter the deploy_lambda_policy function to enable passing in true or false if the Lambda has an associated secret.

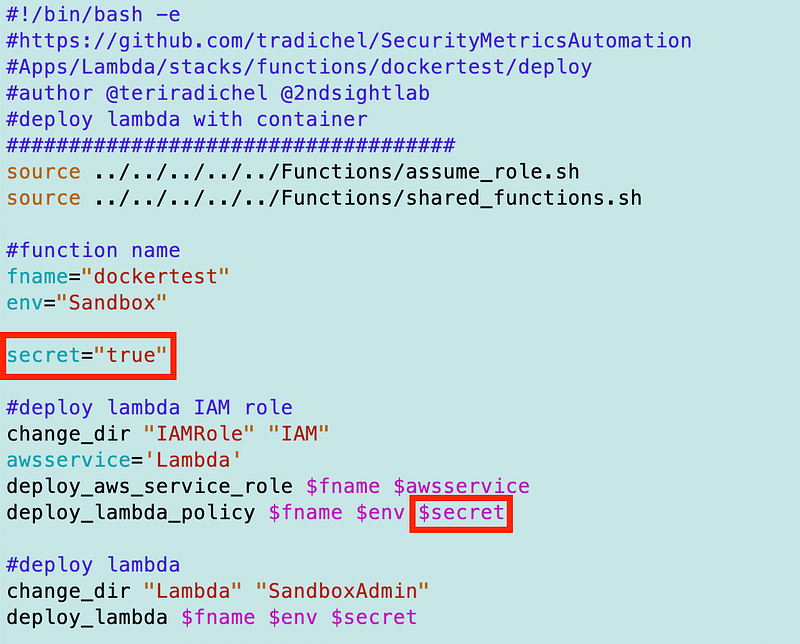

Back in my Lambda deploy script I move the secret variable above the policy deployment and pass it in.
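A minimal sketch of what passing that flag through could look like. Only the HasSecretParam parameter name and the deploy_lambda_policy function name come from this post. The function body, the stack name, and the POLICY_TEMPLATE variable are assumptions for illustration, and the real function in the repository may take different arguments.

```shell
# Hypothetical sketch: deploy the Lambda policy stack and tell the template
# whether the function has an associated secret.
# $1 = function name, $2 = "true" or "false" (defaults to false)
deploy_lambda_policy() {
  local function_name="$1"
  local has_secret="${2:-false}"

  # Reject anything other than true/false so a typo fails locally
  # instead of surfacing later as a vague CloudFormation error.
  case "$has_secret" in
    true|false) ;;
    *) echo "has_secret must be true or false, got: $has_secret" >&2
       return 1 ;;
  esac

  # Stack name and template path are placeholders, not the repository's values.
  aws cloudformation deploy \
    --stack-name "LambdaPolicy-${function_name}" \
    --template-file "${POLICY_TEMPLATE:-LambdaPolicy.yaml}" \
    --capabilities CAPABILITY_NAMED_IAM \
    --parameter-overrides "HasSecretParam=${has_secret}"
}
```

Validating the value before calling CloudFormation is a design choice in this sketch: a bad value is cheaper to catch in the script than in a failed stack update.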

Next I execute the deploy script for the Lambda function to redeploy the policy.

./deploy.sh

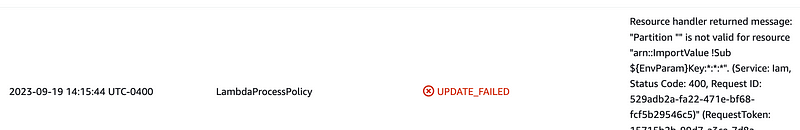

And I get the most unhelpful error message:

“ResourceStatusReason”: “The following resource(s) failed to update: [LambdaProcessPolicy].”

Apparently CloudFormation is not consistent in how it passes back errors so my generic error reporting function is not giving me the error that triggered the problem.

Looking at the CloudFormation dashboard I see this error:

That nonsensical error occurs because I forgot a colon (:) after my Fn::Import: statement. I fixed that and then the policy deployed.
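The per-resource reason that shows up in the CloudFormation dashboard can usually be pulled from the command line as well, which may be a useful fallback when a generic error function only reports the stack-level message. This is a sketch, not the error reporting function from my repository. The function name and the stack name you pass in are assumptions.

```shell
# Print timestamp, logical ID, status and reason for every failed
# resource event in a stack.
# $1 = stack name (hypothetical; use the name your deploy script creates)
show_stack_failures() {
  local stack_name="$1"
  aws cloudformation describe-stack-events \
    --stack-name "$stack_name" \
    --query "StackEvents[?contains(ResourceStatus, 'FAILED')].[Timestamp, LogicalResourceId, ResourceStatus, ResourceStatusReason]" \
    --output text
}
```

The stack-level event tends to say only that a resource failed to update. The event for the resource itself is where the more specific reason normally appears, if CloudFormation recorded one.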

Now our Lambda function should have permission to access its own secret. The next step is to test out our function to see if the Lambda function can access the secret.

Test retrieving our secret in a Lambda function locally

Ok now we can attempt to retrieve the secret in our docker container.

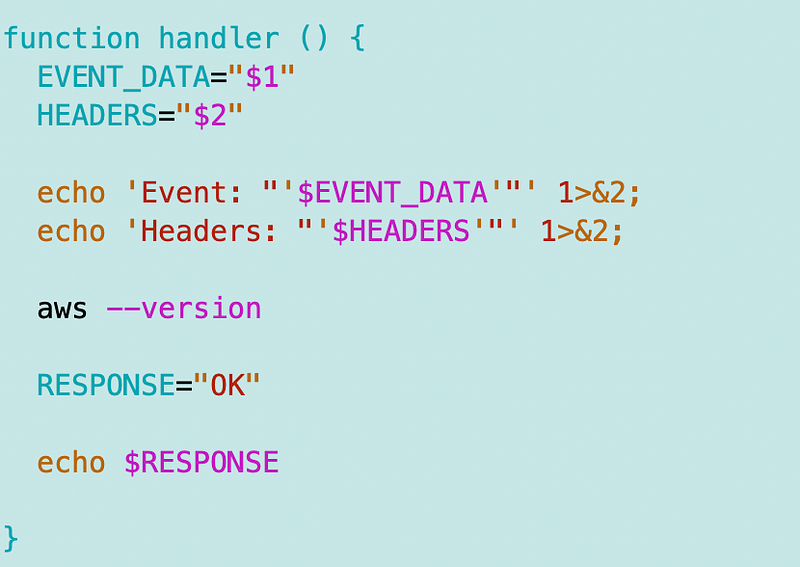

First I’m going to test to see if the AWS CLI is installed on this container that uses the AWS custom runtime container as a base.

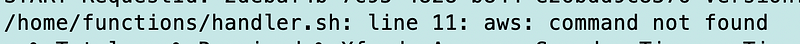

I add this command to my container:

aws --version

Here’s what my function/handler.sh file looks like at the moment:

I build and run the Lambda function locally as explained in prior posts:

./build.sh

./localtest.sh

I test it from a separate command line window.

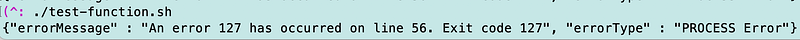

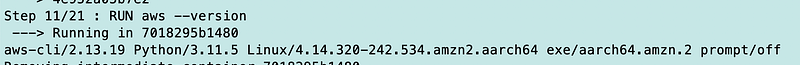

./test-function.sh

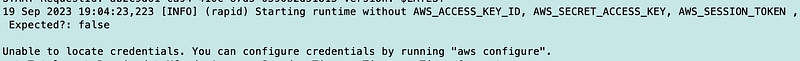

I get an error.

Now it’s at this point that I see that I need better error reporting.

Returning to the screen where the Lambda container is executing I see:

The AWS CLI is not installed in the container by default, so we’ll need to install it. More on handling this more efficiently and securely in a later post.
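One way to get a clearer message for this kind of failure is to check for required tools at the top of the handler. The helper below is a hypothetical addition and not part of my handler.sh. It relies only on the shell built-in `command -v`.

```shell
# Return 0 if the named command is on the PATH.
# Otherwise print a clear error to stderr and return 127.
require_command() {
  if command -v "$1" >/dev/null 2>&1; then
    return 0
  fi
  echo "ERROR: required command '$1' is not installed in this container" >&2
  return 127
}
```

Calling `require_command aws || exit 1` before the first AWS CLI call would put the missing dependency in the container output instead of a generic failure.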

In my Dockerfile I add the following, including a command to install unzip so we can unzip the AWS CLI, a command to check the AWS version, and a command to uninstall unzip.

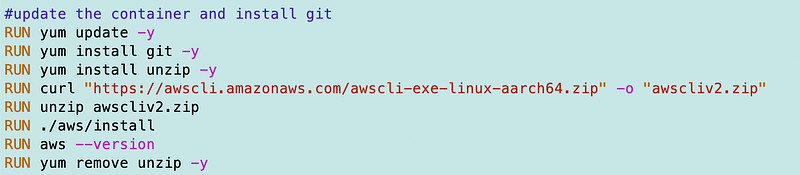

When I build the container it works and the AWS CLI is installed.

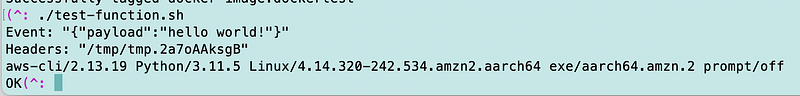

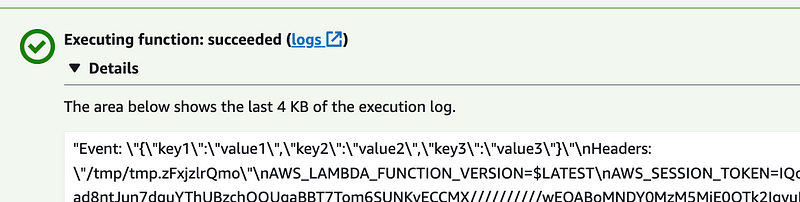

Now I re-run my function and it works:

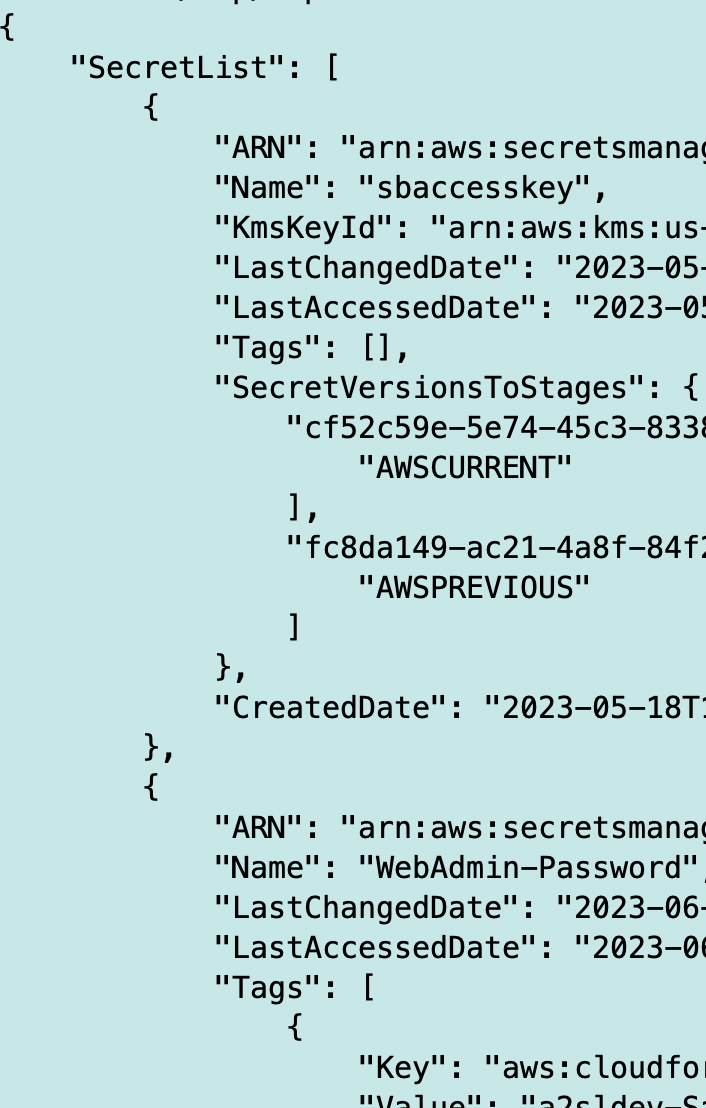

Next, I’ll add a command to list secrets.

aws secretsmanager list-secrets

Here’s the next problem. When we’re testing locally we don’t have the credentials that the Lambda execution role would have.

So what’s going on here? We don’t have valid credentials to run the commands required by our Lambda function. The credentials will be provided when we run within the Lambda environment. You can see those credentials if you add a command to your function to dump the environment variables (which we really should *not* be doing in general, but just for the purposes of troubleshooting I’m going to do this).

I added the env command to my handler and this is what I see:

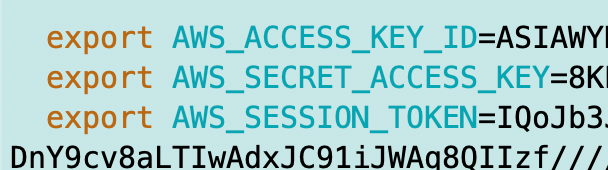

That session token is available because the function assumes the LambdaExecutionRole when running in the context of the Lambda service on AWS.
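If the goal is only to confirm that the credentials exist, a narrower check than dumping the whole environment may be enough. The sketch below reports whether each credential variable is set without printing its value. The function name is an assumption. The three variable names are the standard ones the AWS CLI reads.

```shell
# Print whether each credential variable is set, without printing its value.
report_credential_vars() {
  local name value
  for name in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN; do
    # Indirect lookup of the variable named by $name. The names are the
    # fixed list above, so eval is not processing untrusted input.
    eval "value=\${$name:-}"
    if [ -n "$value" ]; then
      echo "$name=set"
    else
      echo "$name=missing"
    fi
  done
}
```

This keeps the secret values out of the logs while still showing whether the execution role credentials reached the function.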

So maybe my function will run if I push the function to AWS because my function will have the necessary permissions.

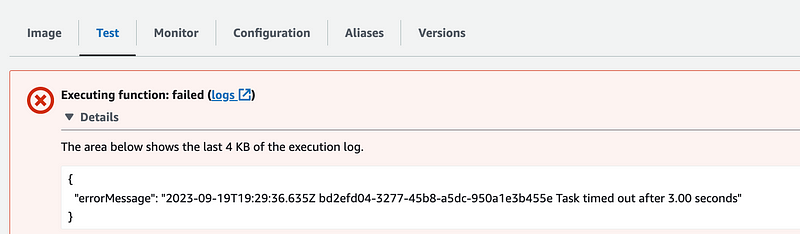

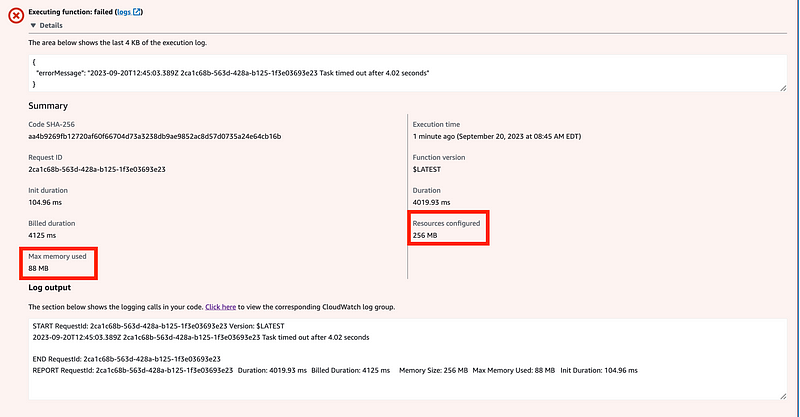

But when I do that, I get a timeout message and no other useful error message in the logs:

So the first thing to check in this case is to make sure the function has enough memory configured. It seems like AWS Lambda should throw a more specific error when this happens because I see a lot of people asking this question.

The output says that my function used 88 MB of memory and the Lambda configuration provides 256 MB, so that is not the problem.

I also tried increasing the timeout to 10 seconds. I figured that would be plenty of time but the function still failed.
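For reference, the limits can also be read and changed with the AWS CLI instead of the console. This is a sketch with hypothetical helper names. The function name you pass in is whatever your deployment created.

```shell
# Show the configured memory (MB), timeout (seconds) and ephemeral
# storage (MB) for a function.
# $1 = Lambda function name
show_lambda_limits() {
  aws lambda get-function-configuration \
    --function-name "$1" \
    --query '[MemorySize, Timeout, EphemeralStorage.Size]' \
    --output text
}

# Set the timeout. $1 = Lambda function name, $2 = timeout in seconds
set_lambda_timeout() {
  aws lambda update-function-configuration \
    --function-name "$1" \
    --timeout "$2"
}
```

For example, `set_lambda_timeout my-function 10` would apply the 10 second timeout I tried here. Changes made this way drift from the CloudFormation template, so they are best treated as temporary test settings.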

A timeout often occurs in relation to networking so let’s check that.

I explained how to check VPC Flow Logs when executing a Lambda function in this post.

But I can’t find any entries in VPC Flow Logs showing denied, or accepted, traffic. I looked through the logs for every ENI multiple times. It’s not there at the time of this writing. And that’s a problem. There should at least be an outbound request from the Lambda function, but that doesn’t get logged. Only the ACCEPT or REJECT on the destination network element gets logged. And in my case, that made troubleshooting more time consuming than it needed to be because I could not use logs to pinpoint my error.

I wrote about private IP access in certain logs, or lack thereof, in this post. This is another thing I think AWS should fix. Show the blocked traffic when a VPC Endpoint is involved. You should have some evidence somewhere in some log showing you the failed traffic.

Is the private traffic destined for AWS simply being swallowed up and not logged anywhere?

The new Resource Map feature also provides no assistance in this case. It doesn’t seem to include the paths for VPC Endpoints and it seems like it would need to allow you to choose the Lambda function and the service you are trying to reach and tell you what’s misconfigured.

I submitted some feedback for that on the ResourceMap page in the AWS Console.

So at this point I guess I am just supposed to know somehow that I can’t reach AWS Secrets Manager due to networking? I guess?

Anyway, let’s add a VPC Endpoint for Secrets Manager to our private Lambda network.

Adding a VPC Endpoint for Secrets Manager

So I copy and paste the code for deployment of a network interface and change the ARNs. The security groups deploy fine. Then I get this less than helpful error:

Resource handler returned message: “ (Service: Ec2, Status Code: 400,

As it turns out I spent far too long resolving the fact that I had this action:

SecretsManager.ListSecrets

Instead of this:

SecretsManager:ListSecrets

I really think that AWS CloudFormation or the underlying library could figure that out and provide a better message.
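Until the error message improves, a small local check can catch this class of typo before a template is deployed. The function below is a hypothetical helper and not something CloudFormation or my repository provides. It only checks the shape of an action string (service prefix, one colon, action name) and does not verify that the action actually exists.

```shell
# Return 0 when the argument is shaped like "service:Action",
# "service:Prefix*" or the bare wildcard "*". Return 1 otherwise.
is_valid_action() {
  case "$1" in
    "*")               return 0 ;;  # bare wildcard is a valid action
    *:*:*)             return 1 ;;  # more than one colon
    *[!A-Za-z0-9:*-]*) return 1 ;;  # disallowed character, such as "."
    ?*:?*)             return 0 ;;  # non-empty service and action
    *)                 return 1 ;;  # no colon, or an empty side
  esac
}
```

With this in place, `is_valid_action "SecretsManager.ListSecrets"` fails because of the period, while `is_valid_action "SecretsManager:ListSecrets"` passes.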

Problem solved. Retest the Lambda function with VPC Endpoint in place.

I still get a timeout.

Verifying permissions are not the problem

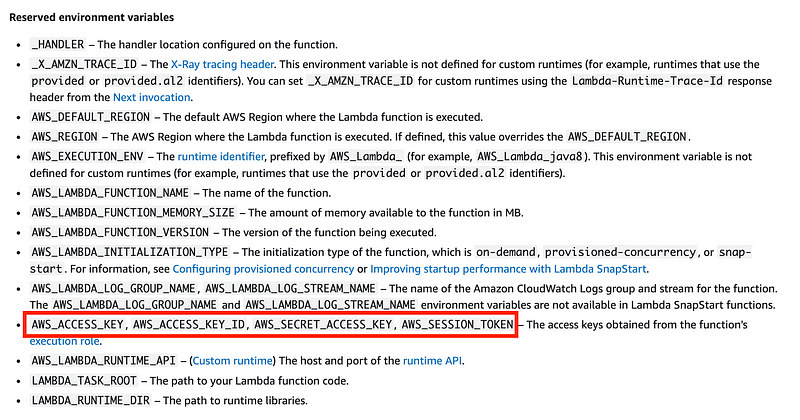

Ok let’s do this. I’m going to output some variables when I deploy to Lambda. There are some defined environment variables here and I want to snag the credentials for testing:

As it turns out, AWS_ACCESS_KEY doesn’t actually exist.

But if I grab these three variables from my Lambda function running on AWS and set them in my function running in my local container:

I can successfully list the secrets when I use those credentials in a function I test in my EC2 instance outside of Lambda.

That tells me that all my permissions are set up correctly for my Lambda function outside of networking. Recall that my local test is in an EC2 instance in a different network.
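A sketch of how that test could be scoped so the borrowed credentials do not stay in the shell afterwards. The function name is an assumption. The three variables are the standard ones the AWS CLI reads. These are short-lived credentials but should still be handled as secrets, for example by reading them from a prompt instead of typing them into shell history.

```shell
# Run a command with temporary credentials. The body runs in a subshell
# (note the parentheses), so the exports vanish when the command finishes.
# $1 = access key ID, $2 = secret access key, $3 = session token,
# remaining arguments = the command to run
run_with_credentials() (
  export AWS_ACCESS_KEY_ID="$1"
  export AWS_SECRET_ACCESS_KEY="$2"
  export AWS_SESSION_TOKEN="$3"
  shift 3
  "$@"
)
```

Usage would look like `run_with_credentials "$KEY_ID" "$SECRET" "$TOKEN" aws secretsmanager list-secrets`, where the three variables are placeholders you populate yourself.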

Checking our Networking More Closely

Let’s check the networking in detail, methodically, step by step.

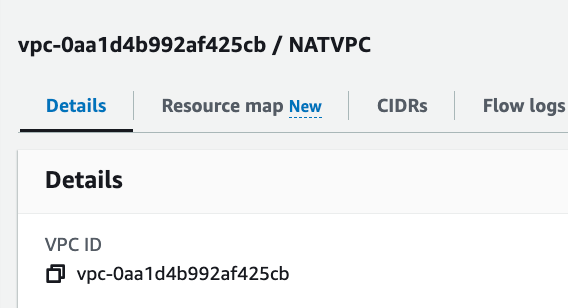

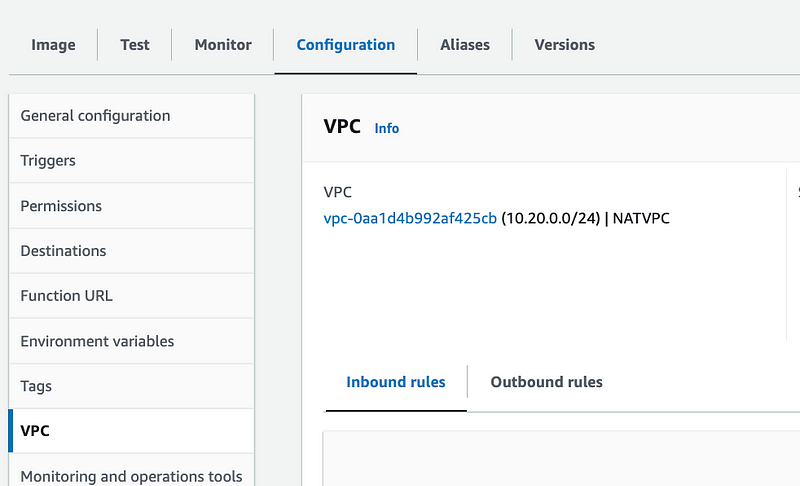

The Lambda function is in a private VPC. Get the VPC ID:

Check the Lambda function to make sure that the correct VPC exists in the configuration.

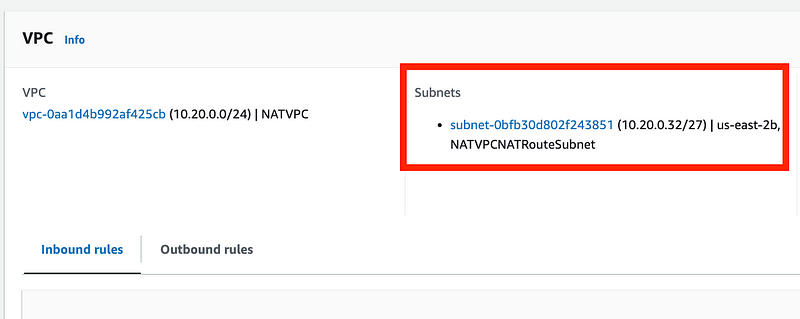

Get the subnet that the Lambda function exists in.

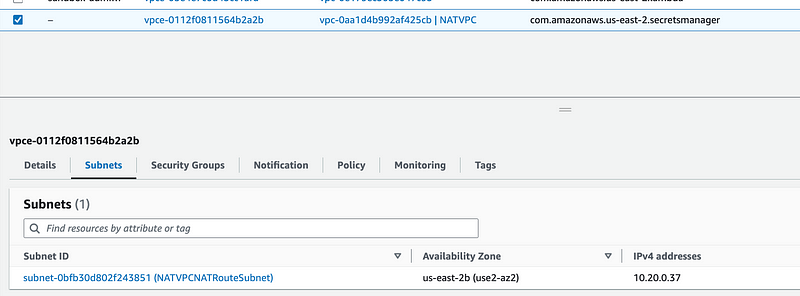

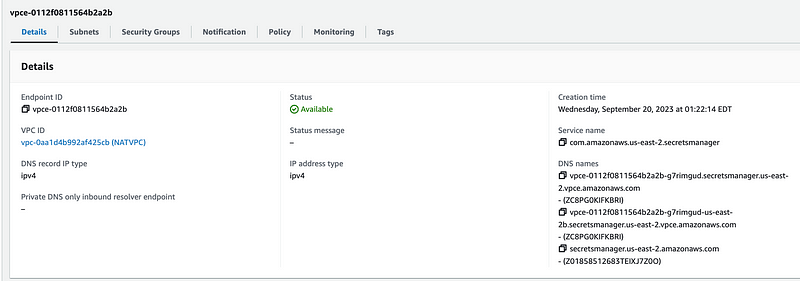

Take a look at the new Endpoint we created for Secrets Manager and make sure it is in that subnet.

This is where I found an error. I had deployed the VPC Endpoint to the wrong subnet due to a copy and paste typo.

So if you do that — you’ll apparently get no network logs indicating what the problem is. Good luck.
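Since no log points at this mistake, the comparison I did by hand in the console could be scripted. The sketch below uses hypothetical function names and takes the Lambda function name and VPC endpoint ID as inputs you supply. It implements only the check described above: which Lambda subnets have no endpoint network interface in them. It does not prove that a same-subnet endpoint is strictly required in every VPC layout.

```shell
# Print each Lambda subnet that the endpoint is NOT deployed in.
# $1 = whitespace-separated Lambda subnet IDs
# $2 = whitespace-separated endpoint subnet IDs
missing_endpoint_subnets() {
  local lambda_subnets="$1" endpoint_subnets="$2" subnet
  for subnet in $lambda_subnets; do
    # echo without quotes collapses tabs and newlines to single spaces
    case " $(echo $endpoint_subnets) " in
      *" $subnet "*) ;;          # the endpoint covers this subnet
      *) echo "$subnet" ;;       # no endpoint interface in this subnet
    esac
  done
}

# Look both lists up with the AWS CLI and compare them.
# $1 = Lambda function name, $2 = VPC endpoint ID
check_endpoint_subnets() {
  local lambda_subnets endpoint_subnets
  lambda_subnets="$(aws lambda get-function-configuration \
    --function-name "$1" \
    --query 'VpcConfig.SubnetIds' --output text)"
  endpoint_subnets="$(aws ec2 describe-vpc-endpoints \
    --vpc-endpoint-ids "$2" \
    --query 'VpcEndpoints[0].SubnetIds' --output text)"
  missing_endpoint_subnets "$lambda_subnets" "$endpoint_subnets"
}
```

Empty output means every Lambda subnet also holds the endpoint. Any subnet ID printed is a candidate for the copy and paste mistake I made.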

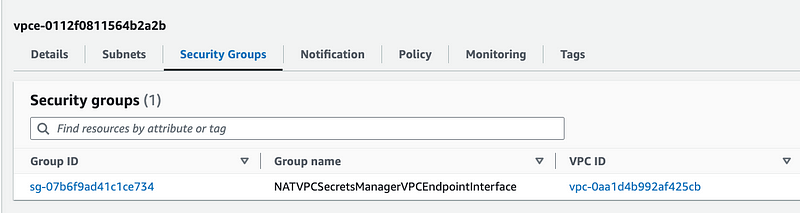

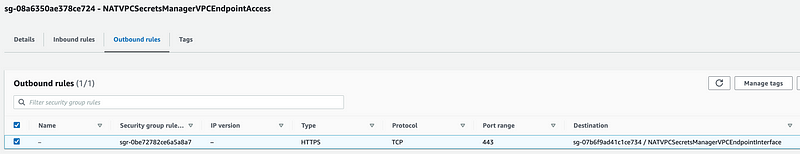

Click on the endpoint Security Groups.

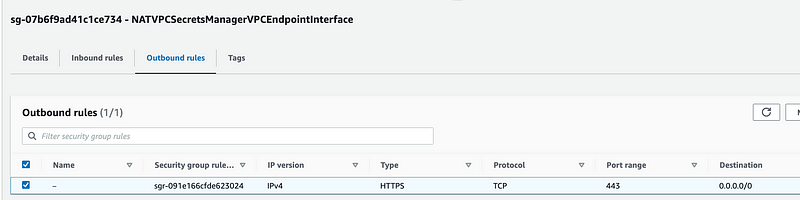

Click on the Endpoint interface security group. That’s the security group applied to the endpoint. It needs to allow outbound access to AWS services on port 443.

Click on Outbound rules. Make sure TCP 443 is allowed.

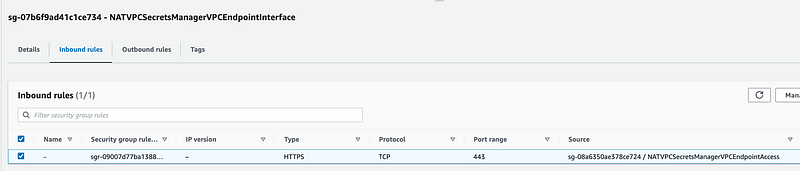

Click on the Inbound rules. Make sure the Endpoint Access Security Group we deployed is allowed to send traffic in on port 443. This allows any resources assigned to the endpoint access security group to access the endpoint.

Now one thing I notice above is that the IP version is not specified while I specified IPv4 in my outbound rule. What if the Lambda function is trying to send in IPv6?

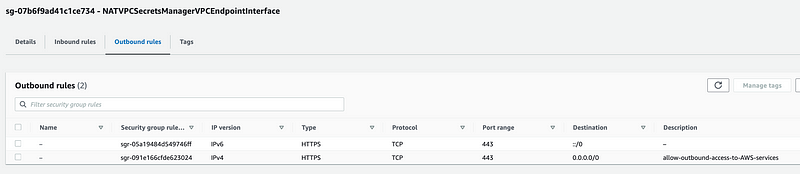

What if I edit my outbound rule to allow IPv4 and IPv6?

To test that theory I added IPv6 outbound even though I don’t want to use that in my network.

That did not solve the problem. I wouldn’t expect it to because I specify IPv4 DNS for the endpoint. On that note, my networking for a private network is configured to use private DNS and return private IP addresses. As mentioned in a prior post, the requests might still end up going out over the NAT because the DNS for the service will resolve to the public instead of the private IP. In that case, you would see the traffic traversing the NAT in VPC Flow Logs. I’m not seeing that.
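To check which kind of address the service name resolves to, the resolved IPs can be classified as private or public. This is a sketch with hypothetical function names. It covers only the RFC 1918 IPv4 ranges and leaves the DNS lookup itself to whatever resolver tool exists in your environment.

```shell
# Return 0 if the IPv4 address is in an RFC 1918 private range.
is_private_ipv4() {
  case "$1" in
    10.*|192.168.*)                        return 0 ;;
    172.1[6-9].*|172.2[0-9].*|172.3[01].*) return 0 ;;
    *)                                     return 1 ;;
  esac
}

# Read one IP address per line on stdin and label each one.
label_ips() {
  local ip
  while read -r ip; do
    [ -n "$ip" ] || continue
    if is_private_ipv4 "$ip"; then
      echo "$ip private"
    else
      echo "$ip public"
    fi
  done
}
```

Piping the addresses returned for the Secrets Manager hostname in your region into `label_ips` would show whether private DNS for the endpoint is taking effect. A public result suggests the request would head for the NAT instead.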

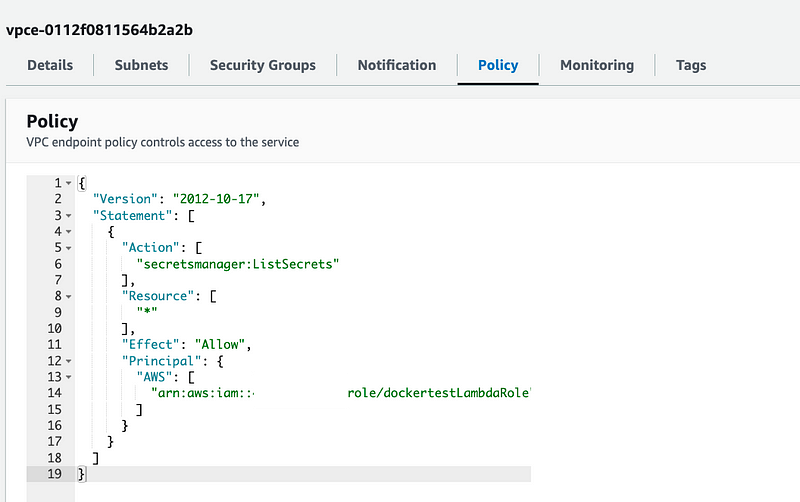

Check the policy:

It seems OK.

Check the outbound rules for the endpoint access security group to make sure it is allowed to send outbound traffic to the endpoint interface.

One thing I forgot to do earlier was add the VPC Endpoint Access Security Group to my Lambda function. I added that manually for testing purposes.

Now all the networking looks correct. The Lambda function should be able to access the VPC Endpoint for Secrets Manager.

One other thing we could check would be the Subnet NACLs. Head over to the subnet.

I have wide open NACLs on the subnet at the moment. However, I’m not yet seeing that any traffic is hitting those NACLs via VPC flow logs.

I test again. Timeout.

Well, this is all less than helpful. There are no logs anywhere that tell me what’s causing my Lambda function to fail in terms of networking and I think my networking is correct.

X-Ray

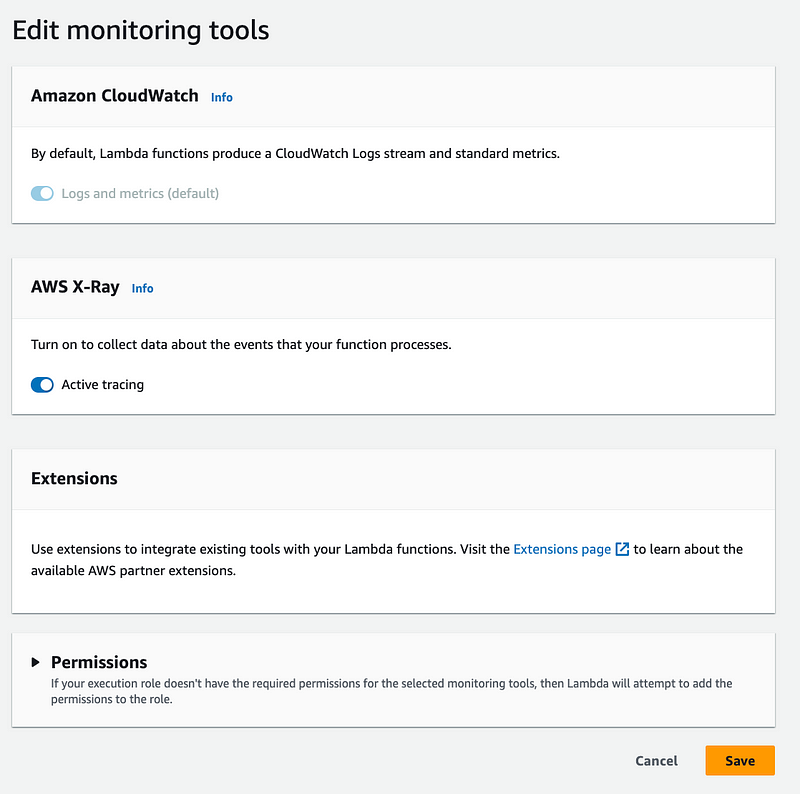

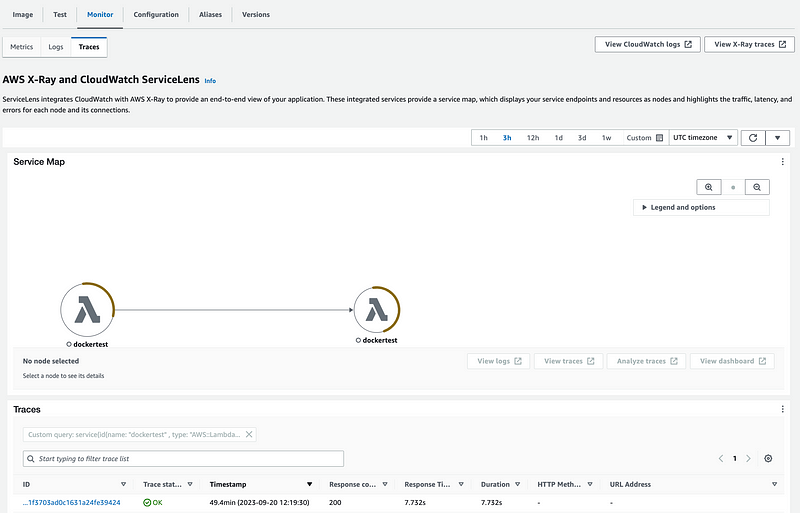

There’s one other thing we can try and that is to enable X-Ray. Maybe it will give us more information.

I’m going to manually enable X-Ray to see what we get.

You can find this toggle under Monitoring tools in your Lambda configuration.

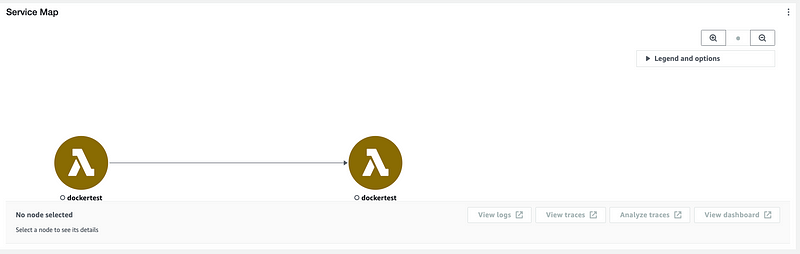

I tested the function a couple of times since X-Ray uses sampling and the logs showed me the exact same thing I’m already seeing.

The interesting thing is that this diagram makes it look like the Lambda function is calling the Lambda function itself. But in any case there’s no evidence that an AWS Secrets Manager call was performed here.

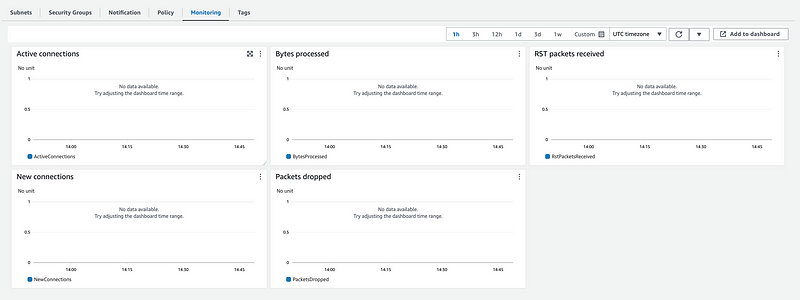

The other thing is that if you look at the monitoring tab on the VPC Secrets Manager endpoint there is zero traffic. Nothing ever hits it.

So at this point I’m wondering what traffic is occurring in my Lambda function at all.

I made a weak attempt to install tcpdump before doing the following.

I added a KMS VPC Endpoint as I suspect that will be required to fetch the actual secrets but I’m not obtaining a secret yet, only listing them. I don’t think that should require KMS?

When all else fails…

Here’s where the misconfigurations that come about in cloud environments come into play, and the insufficient logging and error messages described above contribute to their existence. I’m going to do something I don’t recommend. However, I’m only doing it in a private VPC, which is less risky than a public VPC, but not without risk.

I created a test security group to allow any and all traffic and applied it to the Lambda and the VPC Endpoints. That way I can verify once and for all that networking is or is not the problem.

Still no luck. The function does not work.

Now, I had done this before as noted above and it did not fix the problem, but I increased the timeout to 10 seconds. I also increased my ephemeral storage to 1000 MB.

Somehow, after all that, the function runs. Hallelujah.

That took way too long.

Reverse engineering the configuration back to what we actually want

Ok but I’m not satisfied that the function just runs. I changed the time to 10 seconds before and it didn’t work. I want to validate that this is what really solved the problem. Also, do not be that person who causes a data breach with something you were just testing and failed to clean up.

I’m going to change the ephemeral storage, usage of which is not reported on the test screen, back to 512 just to see what happens. Why is this use of storage not reported anywhere? Maybe it is and I’m not seeing it?

The function still works.

Now I’m going to remove the security group from the KMS VPC Endpoint. I didn’t think “list-secrets” would require kms but let’s find out.

Still works.

Now let’s remove the all for test security group from the VPC Endpoint for Secrets Manager.

And finally I’m going to remove the All for test security group from the Secrets Manager Endpoint.

Annnnd it works.

Hypothesis of the entire scenario that led to this problem

So here’s what I think happened, but without enough logging from the Lambda service it’s hard to tell and I’m not going to repeat all of this.

- When I initially increased the Lambda timeout to 10 seconds, I had installed the VPC endpoint in the wrong subnet. The function still failed with a timeout error because there was no path to the VPC endpoint in my networking.

- When I tested locally outside Lambda with the credentials from the Lambda function it worked in less than three seconds. But I increased the timeout to 4 seconds “just to be safe.” Yeah right. The function takes 7–8 seconds to run just to list secrets in AWS Secrets Manager and probably more on initial run.

- It takes over double the time it takes to run that on an EC2 instance that I have in a VPC without all the private networking. Is that something AWS can fix?

- Then I reinstalled the VPC endpoint into the correct subnet.

- I also had to apply the security group that gives access to the endpoint to the Lambda function.

- After all that it still failed — with the same error.

- I didn’t think the timeout was the issue since I had already tested that but after wasting too much time I tried it again. Lesson learned.
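One way to avoid guessing at a timeout value is to measure the call in the environment where it will run. The helper below is a hypothetical sketch that reports elapsed whole seconds for any command, using only `date`.

```shell
# Run a command and print how many whole seconds it took.
# The command's own output is discarded and its exit status is returned.
time_command() {
  local start end status=0
  start="$(date +%s)"
  "$@" >/dev/null 2>&1 || status=$?
  end="$(date +%s)"
  echo "$((end - start))"
  return "$status"
}
```

Running `time_command aws secretsmanager list-secrets` inside the handler and logging the result would have shown me the 7 to 8 seconds directly, instead of relying on the sub-three-second number from my EC2 test.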

Here’s the big problem:

- There is no distinction when you get a timeout as to whether it is a memory error, a network or permission problem, or a true timeout.

- There are no network logs indicating that the networking is the problem when you increase the timeout and the VPC Endpoint doesn’t exist in the proper subnet.
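A partial workaround, sketched here under assumptions, is to make the client fail before Lambda does. The AWS CLI has global `--cli-connect-timeout` and `--cli-read-timeout` options (both default to 60 seconds), and the `AWS_MAX_ATTEMPTS` environment variable limits retries. The function name and the specific values below are illustrative. I have not verified how this behaves in every failure mode, such as a DNS failure.

```shell
# List secret names with short client-side timeouts and a single attempt,
# so a missing network path should surface as a CLI connection error
# instead of running until Lambda stops the function.
list_secrets_fail_fast() {
  AWS_MAX_ATTEMPTS=1 aws secretsmanager list-secrets \
    --cli-connect-timeout 3 \
    --cli-read-timeout 5 \
    --query 'SecretList[].Name' \
    --output text
}
```

The idea is that a connection error from the CLI within a few seconds points at networking, while a call that connects but runs long points at a true timeout. The Lambda timeout still needs to be longer than these values plus the CLI start-up time.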

These things make it very difficult to troubleshoot effectively. However, by going through the steps above you will figure it out…eventually.

Note that this solves problems if you’re using a VPC with one subnet. But if you have a VPC with multiple subnets, read this:

Here’s what I am adding to my AWS Wishlist:

Report Memory Errors More Explicitly: AWS can figure out that your function ran out of memory and show you that error message in the console and when you run the function. They have the values there so obviously they can do the math and report the error.

Report No Access to Service Errors More Explicitly: Report back to the user when there is no network path to reach an AWS Service. Obviously you can figure that out on the back end.

Permissions errors: I did not have a permission error in this case. I presume if I did it would not be reported as a timeout error and it should not be. This includes a misconfiguration of the VPC Endpoint policy or some other policy.

Report back explicitly which policy or network configuration is preventing access so the user doesn’t have to go through all these hoops to add all access and then remove different access step by step to figure out what the problem is. In this case, the main problem is that the same error gets reported for a true timeout and lack of network access. Those need to be different.

By the way, this is what X-Ray looks like after success and it’s not that much different. It wasn’t very helpful in solving this particular problem. Maybe it would be if it showed you here that access to a particular AWS service got blocked and by what network resource.

OK, we can access AWS Secrets Manager. I have some templates to fix and I’m guessing we’ll need an endpoint for KMS as well, unless that happens behind the scenes within the AWS Network. More testing to be done.

I’ve explained a number of times why you need a VPC. Yes, this is painful, but the results if you don’t use one are likely worse.

At one point I explained why you need a VPC for Lambda but I didn’t even take into account the configuration to call AWS networking.

But as we were discussing on Twitter, Ben Kehoe said, “Yes, but AWS should make it easier.” I agree. Lack of sufficient logs and generic error messages made this way too time consuming to troubleshoot. Hopefully AWS will be able to fix it with better error messages and the improvements they are working on for the VPC Resource Map.

On to getting and using a secret.

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2023
