Handling Credentials Generically in a Custom Lambda Runtime

ACM.320 Also, threat modeling the use of local credentials in relation to the LastPass breach

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

⚙️ Check out my series on Automating Cybersecurity Metrics | Code.

🔒 Related Stories: Lambda | Container Security | Application Security

💻 Free Content on Jobs in Cybersecurity | ✉️ Sign up for the Email List

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

In the last post I showed you how to create a generic error handler for a Bash Lambda runtime, one that actually works, unlike my first rough cut. That was not as simple as the resulting code looks (see the TL;DR at the top of that post). It still needs more testing and I have a couple of planned improvements, but for now, it works.

Next I want to show you the generic code I wrote to handle credentials, which makes local testing simpler. But before I give you that code, I’m going to explain why it is risky and how you can reduce the risk associated with using it. If you use this code in a manner other than what I am explaining here, prepare to face the consequences.

Threat Modeling Attacks on Local Lambda Credentials

In this post, I’m going to consider an attack — the LastPass Breach — and what an attacker could do. Here’s the scenario:


- A developer working on a laptop has remote access to systems.

- They are developing code with whatever credentials and access you gave them.

- They install some software (in the LastPass case, software to listen to music).

- The software has a vulnerability that allows attackers to access the system.

- Attackers exploit the vulnerability and install malware on the developer’s laptop.

- The malware includes a keylogger.

- Any time the developer types a username and password, the attackers can get them.

- Any time the developer enters an MFA code, the attackers can get it.

- Any plain text file with credentials is accessible to the attackers.

- Any environment variable with credentials is accessible to the attackers.

- Anything the developer can execute, the attackers can execute too.

Thinking through what an attacker can do if they obtain access to systems or credentials is called threat modeling. You think like an attacker and try to come up with all the ways they could attack your implementation. If you need ideas, the MITRE ATT&CK framework provides a list of attack techniques. For each one, check whether your system is vulnerable.

I’m not going to go through every attack listed there. I’m going to focus on what happened in the LastPass breach because it’s a great scenario for considering various types of attacks, such as the different types of Man-in-the-Middle (MITM) attacks I covered in a prior post.

The keylogger makes this scenario especially tricky. I’m not going to solve all the problems in this post, but I’m going to show you how my method is less risky than some others and leads to more accurate testing. I hope to solve the remaining problems in future posts.

Making testing Lambda containers more accurate

The first thing I want to do is make sure my local testing is as accurate as possible. In order to do that, I need to get credentials and a session token for my Lambda execution role. That ensures that my local Lambda container that I’m testing has the exact same permissions that my Lambda function has when it runs.

If I don’t do that, I could get a false positive: my container appears to work only because my local credentials have more access than the Lambda execution role. I could also get a false negative: my local credentials lack permissions that the deployed function has, so a test fails that would succeed on Lambda. Part of that latter challenge also involves networking, since I’m using a separate test environment that may have different networking. I explained some networking issues I had, and how I fixed them, in a prior post. I still need to further automate that network environment, but for today we’ll focus on credential access.

Making testing easier

I already explained in a prior post how you can obtain credentials from a Lambda function. Lambda temporary credentials work for a set amount of time before they time out. You can change that session time if you want.

I’ve seen security people scoff because the session lasts something like 12 hours, but if you don’t like that, you can adjust it. For testing purposes that works fine for me, but it means I have to reset the credentials every day in my local test environment, so in this post I want to automate that process a bit more.

By having my Lambda function output the credentials in the format I need to set local environment variables in the container, I can more easily copy and paste Lambda credentials into my container code for local testing.

A longer term solution — even simpler but more risk

I would consider this step one of an even more automated solution. A more time-consuming fix that could save time in the long run would be a Lambda function that returns the credentials for a given Lambda execution role in its response. Your container could read that response and use the credentials in your local test environment.

Let’s consider what happens if you allow that. Instead of having to log into a website to retrieve the credentials, presumably with MFA and ideally with a hardware MFA token as explained in my post on Man-in-the-Middle attacks, the attacker can now just use the permissions of the local developer to grab the Lambda credentials and use them anywhere, unless you have other networking restrictions in place.

To limit that, we could restrict the function that returns the credentials, and the running containers it provides them to, to a specific AWS and network environment with no sensitive data. That’s all complicated and needs a lot more thought, so I’m not doing that just yet.

It would be nifty for testing: any running container in a test environment could reach out, get the credentials for the specified role, and set them in its environment variables. It would also be really nifty for attackers who can execute that Lambda function, because they could get credentials for any Lambda function running in that environment.

I’m getting more concerned as I write this, actually. If an attacker gets access to deploy things in your environment, they could deploy a container like that themselves. The role’s trust policy would have to allow Lambda to assume the role. I presume one Lambda function could assume the role of another Lambda function as long as Lambda is allowed to assume that role, but I’d have to test that out.

Yikes, if that’s possible.

Reducing the blast radius

What’s my alternative? For one thing, my credentials don’t have a lot of access. Right now my Lambda credentials only allow retrieving a token from Secrets Manager, and that token has read-only access to a public GitHub repository. Want the code for a development website that’s already public? Knock yourself out.

I only have one Lambda role right now, so I can think this over and create a more robust long-term solution as I work through my blog posts, before granting more permissions. We’ll test various access and do additional threat modeling along the way to make sure we have a well-thought-through solution for protecting the credentials.

We can create additional service control policies to limit the networks and environments where credentials may be used. And by the way…

This is not very secure (i.e., don’t do this in production)

This process for obtaining credentials is actually not secure. It’s for a test environment. Test environments should not have sensitive production data. In a production environment I want to turn this off.

For now, I’m going to add a DEBUG mode which gets disabled in production.

This is not very secure because anyone who gets access to my machine can read the credentials in any plain text files or environment variables.

Any request sent over HTTP instead of HTTPS can be read by anyone who can observe that network traffic. I’m using HTTP with my local RIE (Runtime Interface Emulator). Those requests all stay on the same machine, but any attacker on my machine could intercept and read them.

Anyone who can log into the AWS console and look at the logs for my Lambda function can see the credentials. They are not encrypted in the logs.

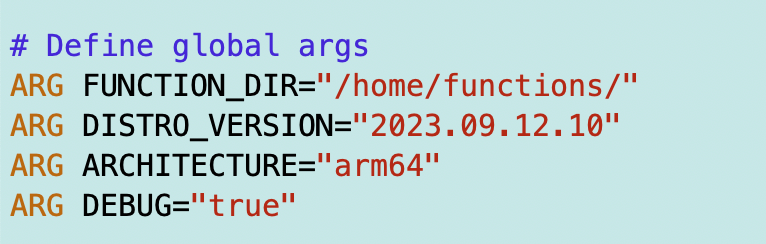

The other thing I add is a DEBUG argument in my Dockerfile.

I only copy the credentials for local testing if in DEBUG mode.

NOTE: When you remove the file and rebuild the container, the credentials may still persist in layers created before you removed it. This is where you need to think through your Docker build process a bit more and take action to remove the sensitive data from prior layers. I’ll cover that later.

I repeat: This is not a secure solution. This is only for my immediate testing.

Test and Verify this is disabled with QA and Code Reviews

You need to make sure that if you use anything remotely close to this, it is disabled when deploying to production. Also think through the complete threat model: what can someone do with these credentials, or with any credentials belonging to your developers?

What can AWS principals deploy where?

One of the whole points of what I am doing here is to get to a secure deployment pipeline. This isn’t it. This is for testing purposes and should not be anywhere near production or credentials that can be used in production.

This is one of those things your security scanners aren’t likely to uncover. That’s why you need to understand what code is doing and have reviews by people who understand the implications of configurations. You may be able to create some custom automated checks for this value, but human review is still a good idea.
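As a sketch of the kind of custom automated check mentioned above, a small Bash function could scan build files for a DEBUG flag set to true and fail the pipeline if it finds one. The function name and pattern are hypothetical: it assumes DEBUG is set with lines like `ARG DEBUG=true`, `ENV DEBUG=true`, or `export DEBUG=true`, so adjust it to however your own Dockerfile and scripts set the flag.

```shell
#!/usr/bin/env bash
# Hypothetical pre-deployment check: fail if any given file turns DEBUG on.
# Assumes DEBUG is set with lines such as "ARG DEBUG=true", "ENV DEBUG=true",
# "export DEBUG=true", or "DEBUG=true". Adjust the pattern to match your files.

check_debug_disabled() {
  local file found=0
  for file in "$@"; do
    if [ ! -r "$file" ]; then
      # Treat an unreadable file as a failure rather than a silent pass.
      echo "Cannot read $file" >&2
      found=1
      continue
    fi
    # -n: show line numbers, -E: extended regex, -i: ignore case
    if grep -nEi '^[[:space:]]*((ARG|ENV|export)[[:space:]]+)?DEBUG[[:space:]]*=[[:space:]]*"?true' "$file"; then
      echo "DEBUG appears to be enabled in $file" >&2
      found=1
    fi
  done
  # 0: no file enabled DEBUG; 1: at least one did (or could not be read).
  return "$found"
}
```

In a CI job you might run something like `check_debug_disabled Dockerfile functions/handler.sh || exit 1` against the files being promoted. A pattern match like this is easy to sidestep, which is why it supplements human review rather than replacing it.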

I recommend turning this off once your code reaches a QA environment. If your QA environment does not mirror production, add a separate, fully automated staging environment before releasing to production. Validate that what is deployed there has all debugging turned off; that deployment should mirror what will be deployed in production and should not change after that point.

QA professionals should test that it is turned off before it reaches production, in an environment that mirrors production and that people cannot manually alter or adjust.

Make sure this is turned off after an automated deployment that will be repeated the same way in the production environment. Verify the checksums of containers and code packages. Make sure your code cannot change during or after the production deployment.
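Checksum verification can be as simple as recording a SHA-256 hash when a code package is built and comparing it right before deployment. A minimal sketch, assuming GNU coreutils `sha256sum` is available; `verify_artifact` is a hypothetical helper, not part of any AWS tooling.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare a file's SHA-256 hash with the value recorded
# at build time. Returns 0 on match, 1 on mismatch, 2 if the file is missing.

verify_artifact() {
  local artifact="$1" expected actual
  # Normalize the expected hash to lowercase, which is what sha256sum prints.
  expected="$(printf '%s' "$2" | tr 'A-F' 'a-f')"
  if [ ! -f "$artifact" ]; then
    echo "Missing artifact: $artifact" >&2
    return 2
  fi
  # sha256sum prints "<hash>  <filename>"; keep only the hash.
  actual="$(sha256sum "$artifact" | cut -d ' ' -f 1)"
  if [ "$actual" != "$expected" ]; then
    echo "Checksum mismatch for $artifact" >&2
    echo "  expected: $expected" >&2
    echo "  actual:   $actual" >&2
    return 1
  fi
  echo "OK: $artifact"
}
```

For example, the build job could record `sha256sum function.zip` and the deploy job could call `verify_artifact function.zip "$EXPECTED_SHA256"` (both names hypothetical). For container images, the equivalent is deploying and comparing by image digest (`sha256:...`) rather than by a mutable tag.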

By the way, locking down deployments this way will help prevent a SolarWinds-type breach, the largest breach of US government systems up to that point.

Better than long-lived credentials, but maybe not much

Although this is not super secure, is using the temporary short-lived credentials for a Lambda function better than using your own long-lived credentials? Yes. If you have your long-lived credentials hardcoded on your local machine, then an attacker can get those and use them repeatedly with no timeout.

If you are using Lambda temporary credentials there are a couple of benefits:

- The attacker can only use the credentials until they time out.

- The attacker can only do what the Lambda function can do.

But what if an attacker obtains access to the developer’s laptop where they are executing Lambda functions and logging into the AWS console? An attacker can do anything a developer can do in that case. They can potentially read anything on the network, in storage, or in memory on that machine.

If there is a process the developer can use to obtain the short-lived credentials, and the attacker has access to a developer machine, then the attacker can trigger that process to get the short-lived credentials the same way the developer can.

Short-lived credentials, such as the short-lived OIDC credentials for GitHub that are all the rage right now, are not any better than long-lived credentials if an attacker gets access to the process for creating new short-lived credentials.

Defense in depth — multiple security controls to limit attack vectors

When I’m thinking about threat models and potential attacks, I like to ask the question — how many chances are you giving attackers to break in and steal your data? How can you reduce those chances?

Attack vectors are all the ways an attacker can breach your system. We want to continuously try to reduce the attack vectors. Having multiple security controls that reduce attack vectors and require attackers to bypass multiple layers of security controls (like MFA for your bank account) is what security professionals call Defense in depth.

Here’s how that applies to my process for getting short-lived credentials. Let’s say, as in the case of LastPass, an attacker gets access to my local laptop. I’ve got SSH access to an EC2 instance. Using a Service Control Policy, I’ve restricted the ability to invoke Lambda functions to the network where that EC2 instance is deployed. So in order to invoke Lambda functions in that OU, the attacker has to be in that network.

With access to my local laptop alone, the attacker cannot invoke Lambda functions. They would also need to get access to the EC2 instance or be able to deploy a new EC2 instance or resource that can trigger Lambda functions from within that network.

To get access to the EC2 instance, they would need to bypass all the network restrictions set up previously. If they are on my local laptop and can SSH into the EC2 instance, then they could get access to invoke Lambda functions. Depending on how my credentials are set up, they could potentially connect from my local laptop to the existing EC2 instance but they have to do it while I’m using it, because I shut it down when not in use.

It could be hard for me to tell from network traffic alone that something is wrong, because my IP is allowed through the network. You could restrict SSH access on the EC2 instance to only one connection, or use some other means to discover how many connections exist at any given time.
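One low-effort way to notice an extra session is to count established SSH connections from the instance itself. This sketch assumes sshd listens on port 22 and that a reasonably recent iproute2 `ss` (one that supports `-H` to suppress the header) is installed. `check_ssh_connections` is a hypothetical helper that reads one connection per line from stdin, so it can be tested without a live network.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read one established connection per line on stdin and
# warn if there are more than the allowed number (default 1).

check_ssh_connections() {
  local max="${1:-1}" count
  # grep -c . counts non-empty lines; it prints 0 and exits 1 on empty input,
  # so "|| true" keeps that case from looking like an error.
  count="$(grep -c . || true)"
  if [ "$count" -gt "$max" ]; then
    echo "WARNING: $count established SSH connections (expected at most $max)" >&2
    return 1
  fi
  echo "$count established SSH connection(s)"
}
```

On the instance, usage might look like `ss -Htn state established '( sport = :22 )' | check_ssh_connections 1`, run periodically with the warning sent somewhere you will actually see it.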

From the EC2 instance, they could try to obtain the credentials and use them in some other environment. Well, I could lock down other sensitive actions to my VPC the same way I did for invoking Lambda functions in a prior post.

What if we locked down access to Secrets Manager for Lambda secrets to the Lambda VPC? Then the attacker would need to execute a Lambda from my EC2 instance to get access to Secrets Manager values and retrieve the credentials that way.

Once the attacker gets the GitHub personal access token, what can they do with it?

For GitHub, recall that my GitHub account is locked down to certain IP ranges. The attacker would need to access GitHub from my EC2 instance or a Lambda function with access through the NAT.

We could also add additional restrictions as to which IPs and resources can access GitHub via the GitHub security group in a Service Control Policy.

Logging and Monitoring

Let’s say an attacker did get all the necessary access to steal the GitHub personal access token and now they are on the EC2 instance with access to GitHub or some other resource they deployed in that account.

Then the attacker could try to exfiltrate the data. Data exfiltration is a fancy way of saying an attacker figures out how to steal the data and transfer it from your systems to their own. Does your network allow outbound transfer of data, and in what circumstances? How can you spot that exfiltration? The attacker would need to be able to send data outbound, and you’d likely see a large outbound file transfer of some kind. You can monitor for that.
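Monitoring for a large outbound transfer can start with something as simple as summing bytes per external destination in VPC Flow Logs. This sketch assumes records in the default version 2 format (version, account-id, interface-id, srcaddr, dstaddr, srcport, dstport, protocol, packets, bytes, start, end, action, log-status) arrive on stdin, one per line. It treats "internal" addresses as anything starting with a string prefix such as `10.0.`, a simplification of real CIDR matching. The function name and threshold are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: sum bytes sent from internal addresses to each external destination
# and print destinations whose total exceeds a threshold.
# Field positions (default format): 4=srcaddr 5=dstaddr 10=bytes 13=action.
# Simplification: "internal" means the address starts with a string prefix.

flag_large_outbound() {
  local prefix="$1" limit="$2"
  awk -v prefix="$prefix" -v limit="$limit" '
    # Skip header lines and NODATA/SKIPDATA records, which use "-" for bytes.
    $1 != "version" && $10 ~ /^[0-9]+$/ && $13 == "ACCEPT" &&
    index($4, prefix) == 1 && index($5, prefix) != 1 {
      sent[$5] += $10
    }
    END {
      for (dst in sent)
        if (sent[dst] > limit)
          printf "%s %.0f\n", dst, sent[dst]
    }
  '
}
```

In practice you would run something like this over logs exported from CloudWatch Logs or S3 and tune the threshold to your normal traffic. The hard part, as the next example shows, is separating a real alert from the noise.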

In fact, in the Capital One breach I was told that someone in the Security Operations Center (SOC) did see a large outbound file transfer. But due to all the noise that SOCs have to deal with they presumed the transfer was OK and ignored the alert. How would you know the difference between a valid outbound file transfer and a malicious one?

What if we only allow very few people to execute functions that can transfer data from GitHub to AWS CodeCommit and send an alert to that group of people any time that function executes? They should be able to figure out who executed the function and if it is legitimate in an environment with sensitive data.

Attackers could also try to encrypt all your data so you cannot access it — which is what Ransomware does. Would you know if someone was encrypting all your data? Would you be able to recover it? Do you have a well segregated backup account?

Table Top Exercises for Threat Modeling

I could go on and on but those are some of the things you need to think about any time you are dealing with credentials. It’s a good idea to do what are called Table Top Exercises for threat modeling systems. You sit down with your team, hopefully including developers and QA professionals who built the system, and try to come up with ways the system may be attacked and how you will defend against those attacks.

As a single person doing this, I might miss something, so if you are going to use any of my code, it’s better to have your team do some threat modeling too. I’d be grateful if you contacted me on Twitter (X) or LinkedIn using the links below to let me know about any attack you thought of that isn’t mentioned here, and I’ll try to cover it in a future post.

Obtaining temporary credentials for Lambda testing purposes

The solution I’m using here to get credentials for testing is not incredibly secure but I have some additional controls in place and I’m working in an environment with very limited resources. Should something unexpected occur there’s a better chance I will notice it.

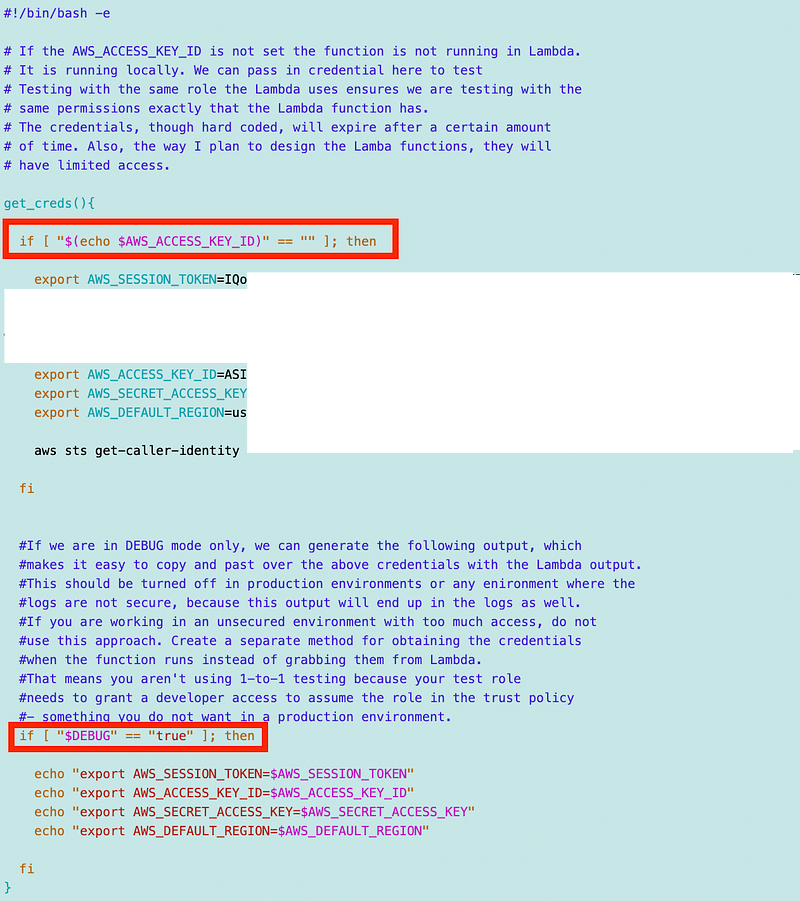

Note that my export commands need to run inside the container. What if I moved this code to the Dockerfile? Then the environment variables would be set to hardcoded values whether or not I was running on Lambda. Instead, we need to check at runtime whether the credential environment variables are already set and, if they are, not override them.

If the environment variables with credentials are set, then we don’t need to do anything. The container will use the credentials provided by the Lambda function from within AWS.

I also determined in the last post that the STS check I have here to validate that the credentials are working and using the correct principal was failing when the container runs on Lambda. I only run that if testing locally.
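The runtime logic described in the last few paragraphs could look something like the following sketch. It is not the code in the screenshots: `set_test_credentials`, `credentials_present`, `verify_credentials`, and the `./local-credentials.sh` file name are hypothetical. It assumes that if all three standard AWS credential variables are already set, Lambda (or your environment) provided them and they should not be overridden. The STS identity check only runs on the local path.

```shell
#!/usr/bin/env bash
# Sketch of the runtime credential logic (hypothetical names throughout).
# Assumption: if all three standard AWS credential variables are set, Lambda
# (or something else outside this script) already supplied them.

credentials_present() {
  [ -n "${AWS_ACCESS_KEY_ID:-}" ] &&
    [ -n "${AWS_SECRET_ACCESS_KEY:-}" ] &&
    [ -n "${AWS_SESSION_TOKEN:-}" ]
}

verify_credentials() {
  # Prints the ARN of the principal the current credentials belong to.
  aws sts get-caller-identity --query Arn --output text
}

set_test_credentials() {
  local cred_file="${1:-./local-credentials.sh}"
  if credentials_present; then
    # Already configured (for example, running on Lambda): don't override.
    return 0
  fi
  if [ ! -r "$cred_file" ]; then
    echo "No AWS credentials in the environment and cannot read $cred_file" >&2
    return 1
  fi
  # Load the export lines for local testing into the current shell.
  . "$cred_file"
  # Only the local path reaches this point, so only it runs the STS check.
  verify_credentials
}
```

Because `set_test_credentials` sources the file into the current shell, it needs to be called directly (not in a subshell or pipeline) for the exported values to stick, for example `set_test_credentials || exit 1` near the top of the handler.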

If we are running on Lambda and DEBUG is “true” then I will output the values in the format required so I can simply copy and paste the values to my Lambda container code. I think I added some quotes somewhere so now I have to remove the extraneous line break characters and manually add line breaks — maybe I’ll adjust that somehow later but this process is still easier than copying and pasting each value separately.
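One way to avoid hand-editing quotes and line breaks is Bash’s `printf %q`, which shell-quotes each value so the printed lines can be pasted back into a shell as-is. A minimal sketch, assuming the three standard credential variables and a hypothetical `print_credential_exports` function. As noted earlier, anything it prints ends up unencrypted in the function’s logs, so it only belongs in a test environment.

```shell
#!/usr/bin/env bash
# Sketch: in DEBUG mode, print the credential variables as paste-ready export
# lines. printf %q (a Bash feature, not POSIX sh) escapes each value so it
# can be pasted back into a shell without manual quoting or line-break fixes.
# Anything printed here lands unencrypted in the function's logs.

print_credential_exports() {
  # Do nothing unless DEBUG is exactly "true".
  [ "${DEBUG:-false}" = "true" ] || return 0
  local name
  for name in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN; do
    # ${!name} is Bash indirect expansion: the value of the variable named $name.
    printf 'export %s=%q\n' "$name" "${!name}"
  done
}
```

Called from the handler while running on Lambda with DEBUG set to "true", this prints exactly three `export` lines to the function’s logs, one per variable.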

Nothing is foolproof but I’ve taken steps to limit the risk, and will continue to do so as we progress. For example, what if I could require specific MFA credentials to obtain the GitHub secret? GitHub doesn’t have a way to provide programmatic MFA but we may be able to enforce it on the AWS side. But we can’t require MFA for the Lambda Execution Role. I’ll explore those ideas in an upcoming post.

For now, here’s the code I’m using to obtain the credentials for local Lambda testing.

I have a DEBUG credential and as noted I want to push and retrieve it from a temp file for the reasons explained in the prior post. I haven’t updated this code yet to do that but you can easily change that by mimicking what I did in the last post.

Here’s my function for getting the credentials.

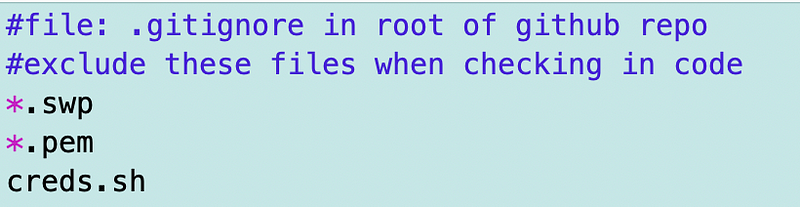

I made one other update after I initially wrote this post. I moved the credential export commands to a separate file and sourced it in the above file. That helps in two ways:

- It’s easier to delete and recreate a new credential file.

- I can add that credential file to my .gitignore file so I don’t accidentally check those credentials into GitHub.
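Creating the separate credential file and keeping it out of Git can both be scripted. In this sketch, `write_credential_file` and `ensure_gitignored` are hypothetical helpers, and the file name is whatever you choose. The file is created readable only by your user (mode 600), which limits exposure to other local accounts but, per the threat model above, not to an attacker running as you.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for a local credential file kept out of Git.

write_credential_file() {
  local file="$1"
  # Create the file in a subshell with umask 077 so it starts out as mode 600,
  # then chmod in case the file already existed with looser permissions.
  ( umask 077 && cat > "$file" ) && chmod 600 "$file"
}

ensure_gitignored() {
  local entry="$1" ignore_file="${2:-.gitignore}"
  touch "$ignore_file"
  # -q: quiet, -x: match the whole line, -F: treat the entry as a fixed string
  if grep -qxF "$entry" "$ignore_file"; then
    return 0
  fi
  # Add a newline first if the file doesn't already end with one.
  if [ -s "$ignore_file" ] && [ -n "$(tail -c 1 "$ignore_file")" ]; then
    printf '\n' >> "$ignore_file"
  fi
  printf '%s\n' "$entry" >> "$ignore_file"
}
```

From the repository root, you could pipe your export lines into `write_credential_file local-credentials.sh` and then run `ensure_gitignored local-credentials.sh`; running the second command again is harmless.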

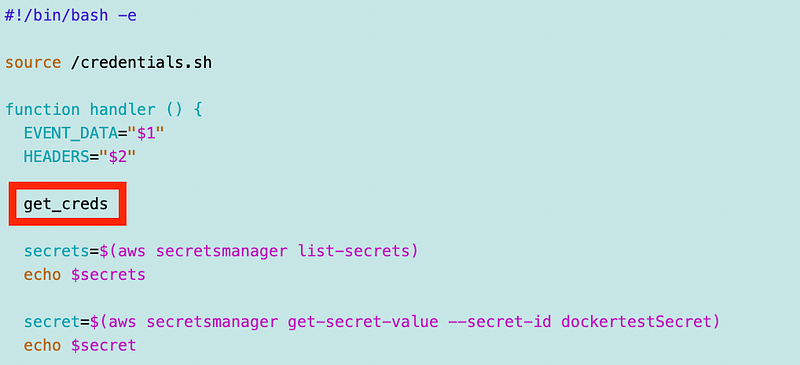

I can simply call this function at the top of my functions/handler.sh file to make sure the credentials are set when running my function.

You might even require developers to remove that line when deploying to production, just to be safe.

Now that I have a decently solid way to test my container we’ll proceed to copying code from GitHub to AWS CodeCommit and see what else breaks in the process. :-)

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2023

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab

Need Help With Cybersecurity, Cloud, or Application Security?

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

🔒 Request a penetration test or security assessment

🔒 Schedule a consulting call

🔒 Cybersecurity Speaker for Presentation

Follow for more stories like this:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

❤️ Sign up for my Medium email list

❤️ Twitter: @teriradichel

❤️ LinkedIn: https://www.linkedin.com/in/teriradichel

❤️ Mastodon: @teriradichel@infosec.exchange

❤️ Facebook: 2nd Sight Lab

❤️ YouTube: @2ndsightlab