Copy Files Between S3 Buckets In Different AWS Accounts

ACM.217 Simple method to transfer files (objects) between two AWS accounts

Part of my series on Automating Cybersecurity Metrics and stories on AWS S3 Buckets. The Code.

Free Content on Jobs in Cybersecurity | Sign up for the Email List

In the last post, I wrote about sharing an encrypted Amazon Machine Image (AMI) programmatically.

In this post I’m going to provide a script to transfer files between S3 buckets in the simplest form. As I wrote about in this post, there are many options — and security and cost considerations — for transferring files between S3 buckets.

I also covered the topics of objects versus files in this post:

There is a myriad of security, cost, and performance considerations, as I explained in the posts above. For the reasons I covered there, you might need a different option than the one I'm using here.

For my use case, with limited data and no compliance or high security requirements, I’m going to use a simple mechanism to get the job done.

I’m going to use the aws s3 sync CLI command.
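`aws s3 sync` takes a source and a destination and copies only objects that are new or changed at the destination. Here is a minimal sketch that assembles that command line in Python so it is easy to review before running. The bucket names, key ID, and profile name are hypothetical placeholders. The optional flags (`--sse aws:kms`, `--sse-kms-key-id`, `--profile`, `--dryrun`) are standard `aws s3 sync` options, but you may not need them. For example, if the destination bucket already has default KMS encryption, you can omit the encryption flags.

```python
from __future__ import annotations

import shlex
import subprocess
from typing import Optional


def build_sync_command(
    source: str,
    destination: str,
    kms_key_id: Optional[str] = None,
    profile: Optional[str] = None,
    dry_run: bool = False,
) -> list[str]:
    """Return the argument list for `aws s3 sync` without running it."""
    argv = ["aws", "s3", "sync", source, destination]
    if kms_key_id:
        # Ask S3 to encrypt the copied objects with this KMS key.
        argv += ["--sse", "aws:kms", "--sse-kms-key-id", kms_key_id]
    if profile:
        # Named CLI profile, e.g. one that assumes the cross-account role.
        argv += ["--profile", profile]
    if dry_run:
        # Only print what would be copied.
        argv.append("--dryrun")
    return argv


def run_sync(argv: list[str]) -> int:
    """Run the command and return the AWS CLI exit code."""
    print("Running:", shlex.join(argv))
    return subprocess.run(argv, check=False).returncode


if __name__ == "__main__":
    cmd = build_sync_command(
        "s3://example-source-bucket",       # hypothetical bucket names
        "s3://example-destination-bucket",
        dry_run=True,
    )
    print(shlex.join(cmd))
```

Running the dry-run version first shows what would be copied. Passing the list from `build_sync_command(...)` without `dry_run` to `run_sync` performs the actual copy.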

S3 bucket with a KMS Key

I’ve already shown you how to create an S3 bucket:

With a KMS Key:

Get the User ARN

In my case, I was using a cross-account role to access the account containing the files I wanted to copy to the new account. To get the correct ARN for my user, I ran this command:

    aws sts get-caller-identity

Grant the user access in the S3 bucket policy

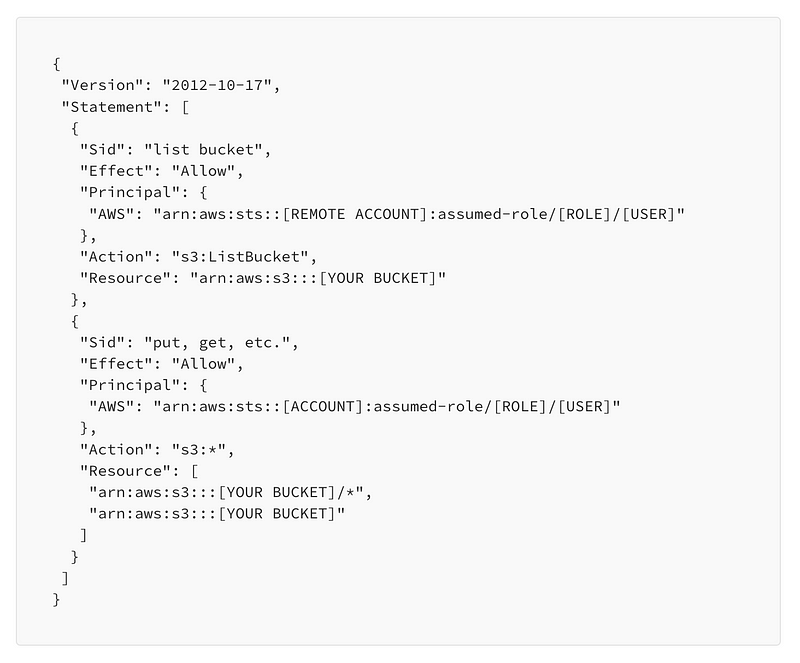
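The policy needs the principal ARN, which comes from the `Arn` field of the `aws sts get-caller-identity` output (`aws sts get-caller-identity --query Arn --output text` prints just that field). When you work through a cross-account role, that field is an STS session ARN of the form `arn:aws:sts::ACCOUNT:assumed-role/ROLE/SESSION`. A common choice is to reference the role's IAM ARN instead. The minimal sketch below extracts the ARN and, assuming the role has no path, converts a session ARN to the role ARN. The account number and role name are hypothetical.

```python
import json


def caller_arn(identity_json: str) -> str:
    """Return the Arn field from `aws sts get-caller-identity` JSON output."""
    return json.loads(identity_json)["Arn"]


def role_arn_from_session(arn: str) -> str:
    """Map an STS assumed-role session ARN to the underlying IAM role ARN.

    arn:PARTITION:sts::ACCOUNT:assumed-role/ROLE/SESSION
        -> arn:PARTITION:iam::ACCOUNT:role/ROLE
    Session ARNs omit any role path, so this assumes the role has none.
    Any other ARN (for example an IAM user) is returned unchanged.
    """
    parts = arn.split(":", 5)
    if len(parts) == 6 and parts[2] == "sts" and parts[5].startswith("assumed-role/"):
        partition, account = parts[1], parts[4]
        role_name = parts[5].split("/")[1]
        return f"arn:{partition}:iam::{account}:role/{role_name}"
    return arn


if __name__ == "__main__":
    # Hypothetical output captured from `aws sts get-caller-identity`.
    sample = json.dumps({
        "UserId": "AROAEXAMPLEID:my-session",
        "Account": "111122223333",
        "Arn": "arn:aws:sts::111122223333:assumed-role/CrossAccountRole/my-session",
    })
    # prints arn:aws:iam::111122223333:role/CrossAccountRole
    print(role_arn_from_session(caller_arn(sample)))
```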

Create an S3 bucket policy that allows the user to access the files in the bucket.

Note a couple of things below:

- Your User ARN may be different. Use the get-caller-identity command above to get the correct ARN.

- Note that there is no asterisk (*) on the bucket ARN for ListBucket, because ListBucket applies to the bucket itself, not to the objects in it.

- Add a slash and an asterisk (/*) for object-level actions like GetObject and PutObject.

- Note that I removed this policy as soon as I finished transferring the files. For a more permanent solution or a higher-security scenario, add only the specific actions you need where I have s3:* below.

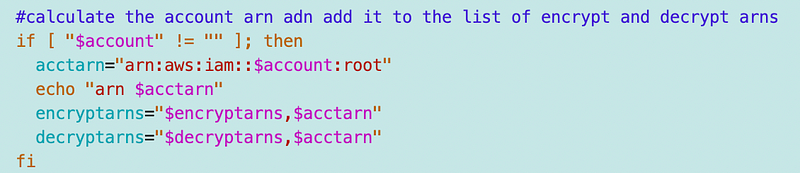

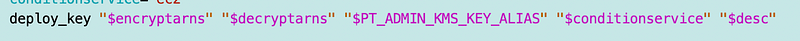

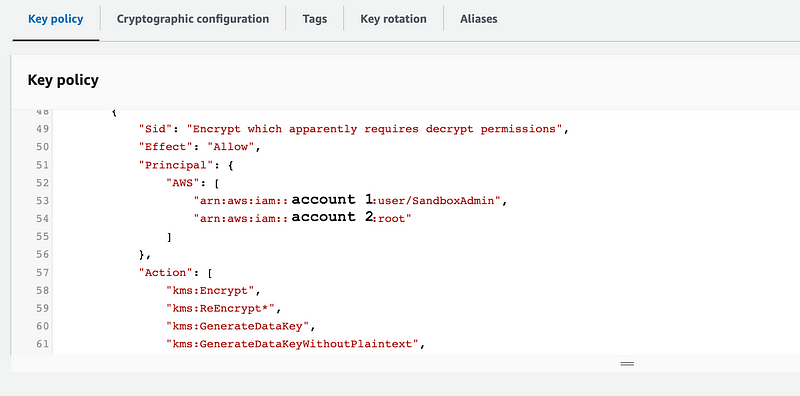
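If you prefer generating the policy over editing JSON by hand, here is a minimal sketch that follows the notes above: `s3:ListBucket` on the bucket ARN with no `/*`, and the temporary `s3:*` on the objects (bucket ARN plus `/*`). The bucket name and principal ARN are hypothetical, and the Sids are arbitrary.

```python
import json


def bucket_policy(bucket: str, principal_arn: str) -> dict:
    """Build a temporary bucket policy granting one remote principal access."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    principal = {"AWS": principal_arn}
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level action: resource is the bucket ARN, no /*.
                "Sid": "list",
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:ListBucket",
                "Resource": bucket_arn,
            },
            {
                # Object-level actions: resource is bucket ARN + /*.
                # s3:* is temporary; narrow it for anything long-lived.
                "Sid": "objects",
                "Effect": "Allow",
                "Principal": principal,
                "Action": "s3:*",
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


if __name__ == "__main__":
    policy = bucket_policy(
        "example-destination-bucket",                       # hypothetical
        "arn:aws:iam::111122223333:role/CrossAccountRole",  # hypothetical
    )
    print(json.dumps(policy, indent=2))
```

You could save the output to a file and apply it with `aws s3api put-bucket-policy --bucket <bucket> --policy file://policy.json`. `aws s3api delete-bucket-policy --bucket <bucket>` removes the entire policy afterward, so if the bucket has other statements you want to keep, edit the policy instead.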

Give the remote account access to the KMS key in the Key Policy

I explained how to grant a remote account access in a key policy in my post on sharing encrypted AMIs. We can pass the remote ARN to our generic key template in the list of encrypt and decrypt ARNs, and we're good to go.

Once again, your user ARN will differ from mine. If you are the one running the file transfer commands, use the aws sts get-caller-identity command above to get the ARN for your use case.

Read the prior post for details.
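For readers who don't use that template, here is a minimal sketch of an equivalent key policy statement. It assumes the usual S3 needs: `kms:GenerateDataKey*` to write SSE-KMS objects and `kms:Decrypt` to read them (multipart uploads also need Decrypt). The Sid and ARNs are hypothetical. Because `put-key-policy` replaces the whole policy, the helper appends to the existing statements rather than overwriting them, so the statement that keeps your own account in control of the key survives.

```python
from __future__ import annotations

import json


def remote_key_user_statement(principal_arns: list[str]) -> dict:
    """Key policy statement letting remote principals use the key."""
    return {
        "Sid": "AllowRemoteUseOfTheKey",  # hypothetical Sid
        "Effect": "Allow",
        "Principal": {"AWS": principal_arns},
        "Action": [
            "kms:Encrypt",
            "kms:Decrypt",
            "kms:ReEncrypt*",
            "kms:GenerateDataKey*",
            "kms:DescribeKey",
        ],
        # In a key policy, "*" means the key this policy is attached to.
        "Resource": "*",
    }


def add_statement(key_policy: dict, statement: dict) -> dict:
    """Return a copy of key_policy with statement appended.

    Existing statements are kept; one with the same Sid is replaced
    instead of duplicated.
    """
    sid = statement.get("Sid")
    kept = [s for s in key_policy.get("Statement", [])
            if sid is None or s.get("Sid") != sid]
    return {**key_policy, "Statement": kept + [statement]}


if __name__ == "__main__":
    # Shape of a default key policy; the account number is hypothetical.
    existing = {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "Enable IAM User Permissions",
            "Effect": "Allow",
            "Principal": {"AWS": "arn:aws:iam::444455556666:root"},
            "Action": "kms:*",
            "Resource": "*",
        }],
    }
    remote = ["arn:aws:iam::111122223333:role/CrossAccountRole"]
    print(json.dumps(add_statement(existing, remote_key_user_statement(remote)), indent=2))
```

The current policy is available from `aws kms get-key-policy --key-id <key-id> --policy-name default --query Policy --output text`, and the updated one can be applied with `aws kms put-key-policy --key-id <key-id> --policy-name default --policy file://key-policy.json`. For cross-account use, the IAM policy in the remote account generally also needs to allow these KMS actions on the key's ARN.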

Grant the user access to the bucket in the IAM policy

We also need to grant the remote user access in their own account's IAM role or policy. I was using a cross-account role, so I added a policy to that role. This is a temporary policy that I will remove after the transfer. For a more permanent solution, add only the actions you need. A backup user, for example, only needs to put objects in the bucket, not download or delete them.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "list",
          "Effect": "Allow",
          "Action": "s3:ListBucket",
          "Resource": [
            "arn:aws:s3:::[YOUR_BUCKET]"
          ]
        },
        {
          "Sid": "actions",
          "Effect": "Allow",
          "Action": "s3:*",
          "Resource": [
            "arn:aws:s3:::[YOUR_BUCKET]/*"
          ]
        }
      ]
    }

Too many requests

At some point, my AWS CloudShell session was blocked for making too many requests. I exited, refreshed the page to start a new session, and ran the command again.

In other words, I had to kill my session and start over.
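Starting over works because `aws s3 sync` copies only objects that are missing or differ (by size or timestamp) at the destination, so a rerun resumes instead of recopying everything. If you'd rather not restart by hand, here is a minimal sketch that reruns the command with a growing pause between attempts. The attempt count and delays are arbitrary assumptions. If the block comes from CloudShell itself rather than the AWS APIs, you may still need a fresh session. The CLI's own retries can also be tuned with the `AWS_RETRY_MODE` and `AWS_MAX_ATTEMPTS` environment variables, and `aws configure set default.s3.max_concurrent_requests <n>` lowers how many requests sync sends at once.

```python
from __future__ import annotations

import subprocess
import time
from typing import Callable, Sequence


def _run_cli(argv: Sequence[str]) -> int:
    """Run the command and return its exit code."""
    return subprocess.run(list(argv), check=False).returncode


def sync_until_done(
    argv: Sequence[str],
    attempts: int = 3,
    delay_seconds: float = 30.0,
    run: Callable[[Sequence[str]], int] = _run_cli,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Re-run a sync command until it exits 0 or attempts run out.

    The wait doubles after each failed attempt: delay, 2*delay, 4*delay, ...
    Returns the last exit code (0 on success).
    """
    code = 1
    for attempt in range(1, attempts + 1):
        code = run(argv)
        if code == 0:
            return 0
        if attempt < attempts:
            sleep(delay_seconds * 2 ** (attempt - 1))
    return code
```

For example, `sync_until_done(["aws", "s3", "sync", "s3://example-source-bucket", "s3://example-destination-bucket"])` (hypothetical bucket names) makes up to three attempts, waiting 30 and then 60 seconds between them.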

That’s it! It didn’t take long for the number of files I had.

You can use similar commands for backups. There are many other ways to do backups, and you might also want AWS Direct Connect, a VPN, or AWS PrivateLink, as well as a bucket policy that limits access to a VPC endpoint or to specific IP addresses. I would *not* use IP restrictions alone. Use them with encryption (KMS) and identity controls (IAM and bucket policies). In my case, the allowed role also requires MFA.
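To illustrate the VPC endpoint option, here is a minimal sketch of a bucket policy statement that denies S3 actions on the bucket unless the request arrives through a given endpoint, using the `aws:SourceVpce` condition key. The bucket name and endpoint ID are hypothetical. An explicit deny like this applies to everyone, including administrators and console users outside the endpoint, so test it carefully. As noted above, treat it as one layer alongside KMS and IAM controls, not a replacement for them.

```python
import json


def deny_outside_vpce_statement(bucket: str, vpce_id: str) -> dict:
    """Deny all S3 actions on the bucket and its objects unless the
    request comes through the given VPC endpoint."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Sid": "DenyOutsideVpcEndpoint",  # hypothetical Sid
        "Effect": "Deny",
        "Principal": "*",
        "Action": "s3:*",
        "Resource": [bucket_arn, f"{bucket_arn}/*"],
        "Condition": {"StringNotEquals": {"aws:SourceVpce": vpce_id}},
    }


if __name__ == "__main__":
    stmt = deny_outside_vpce_statement("example-backup-bucket", "vpce-0example")
    print(json.dumps(stmt, indent=2))
```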

You can check out my posts for MFA with AWS IAM roles and the CLI here:

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2023

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab

Need Help With Cybersecurity, Cloud, or Application Security?

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

🔒 Request a penetration test or security assessment

🔒 Schedule a consulting call

🔒 Cybersecurity Speaker for Presentation

Follow for more stories like this:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

❤️ Sign up for my Medium email list

❤️ Twitter: @teriradichel

❤️ LinkedIn: https://www.linkedin.com/in/teriradichel

❤️ Mastodon: @teriradichel@infosec.exchange

❤️ Facebook: 2nd Sight Lab

❤️ YouTube: @2ndsightlab