AWS Service Endpoints and VPC Endpoints

ACM.379 Fixing the STS network problem blocking STS assume-role in Lambda and containers

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

⚙️ Check out my series on Automating Cybersecurity Metrics | Code.

🔒 Related Stories: AWS Security | Secure Code | Network Security

💻 Free Content on Jobs in Cybersecurity | ✉️ Sign up for the Email List

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

It seems like every time I go down a particular path to try something out, there’s some kind of roadblock. In the last post I just wanted to run a quick test of whether AWS Marketplace would work in my scenario, but as it turns out I would have been setting up something in the wrong account that I can’t change later, so I had to pause those efforts. I missed something in the directions, but that’s because I wanted to test without setting up a public profile, so I skipped over those steps. I guess that’s not possible. I’m waiting to hear back from AWS to get what I started closed down, since I don’t see a way to do that myself, and I’m regrouping.

I could continue down the path of setting up the AWS Seller Account, but I need a new account for that. Even if I do set that up, I still want to resolve whatever is causing the STS errors that say a region is disabled when it’s not.

When I tested that code outside my private network, it worked fine. That got me thinking about a prior problem I had with Lambda that may be related: the error told me my Lambda role did not have permission when it did.

What if my Lambda function was also just a networking issue because STS is using an endpoint from another region or some IP range I’ve inadvertently blocked?

I’m on a mission to solve this problem today, if I can. I know that when I ran the same code outside of this network, it worked both in a plain container and in a container using the Lambda Runtime Interface Emulator. I thought the STS restriction was due to something in Lambda itself, but now I’m thinking it might have been my network.

So here’s what I want to figure out: how to assume a role in a container in a private network with VPC Endpoints. Then I’ll see if that resolves the Lambda issue.

And AWS really needs to correct the error messages in both the above scenarios to be accurate. #awswishlist

First, the container alone, since that’s what I’ve been working with most recently and have handy. Once we resolve the issue that blocked me at the end of this post, I can test running the script in the container I specify to make an API call in a remote account that requires role assumption with MFA.

AWS Service Endpoints

Here’s my first hypothesis. Is the AWS CLI calling an AWS service endpoint that is getting blocked?

When tools like the AWS CLI and SDKs call AWS to perform actions on its cloud platform, they send those calls to AWS service endpoints. These are essentially domain names that point to the specific infrastructure that handles your API requests.

AWS has regional, global, and some other cloud- and stack-specific endpoints, which you can read about in the AWS documentation.

What I started to wonder is if my call is going to an endpoint that is not allowed by my particular network configuration. I am especially aware of this issue because when Capital One first started to try to use AWS, none of the commands the developers tried to run worked.

The problem was that every time a developer made a call it would try to hit one of these endpoints and the proxy was blocking the connection based on the domain name. The proxy team had to explicitly open up any APIs the developers were allowed to access.

I also had this issue when teaching classes for an organization whose developers use remote VMs to reach cloud systems. I had to explain to them a number of times that wherever the students were using the VMs, the network had to allow access to the cloud. I don’t think the particular people I was explaining this to were technical enough to understand what I meant.

So I was surprised when we went to teach a team at the FBI and I was told there was no problem: they would have cloud access from an FBI facility. I would think the FBI would have strict network connectivity requirements. Also, we didn’t test our labs in GovCloud, so if that was what they were allowed to access, our labs might break.

Thankfully before class someone pointed out that, no, you can’t randomly make any network connection you want to cloud APIs in an FBI network. I don’t know all the details, but at the last minute the class got moved to a different location where the students had networking access.

That is also why, when I ran my own classes, students used VMs in their own non-production cloud account, preferably a free trial account set up specifically for class if advanced features were not required. I knew the network would not block their API calls. (Azure doesn’t give you access to all features in its free trial accounts, so some things simply did not work when students tried them, but I digress.)

So what could my STS problem be? Potentially, the call is making its way to some endpoint other than the ones I have allowed access to via VPC Endpoints. What if the call is getting directed to a global endpoint and it needs to go to a regional endpoint? I’m not sure exactly how STS works behind the scenes or which service endpoints it uses. Let’s check it out.

STS Service Endpoints

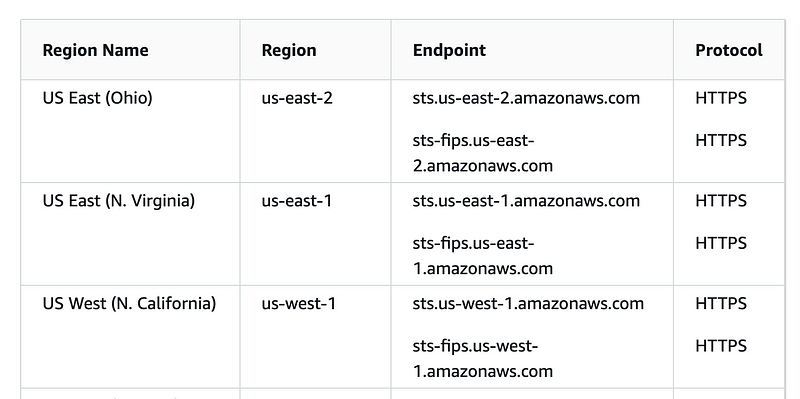

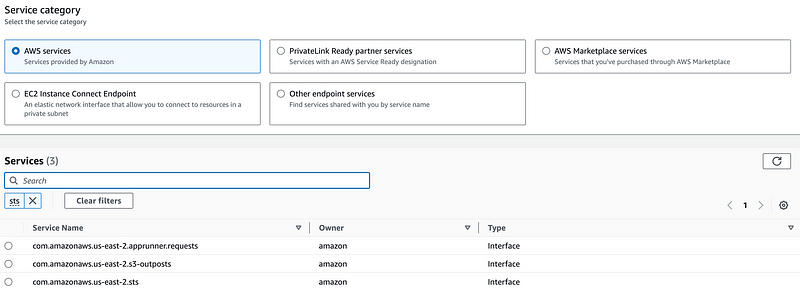

Here are the service endpoints for STS:

The documentation says:

By default, the AWS Security Token Service (AWS STS) is available as a global service, and all STS requests go to a single endpoint at https://sts.amazonaws.com.

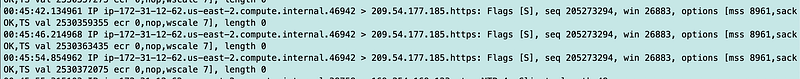

Checking DNS resolution

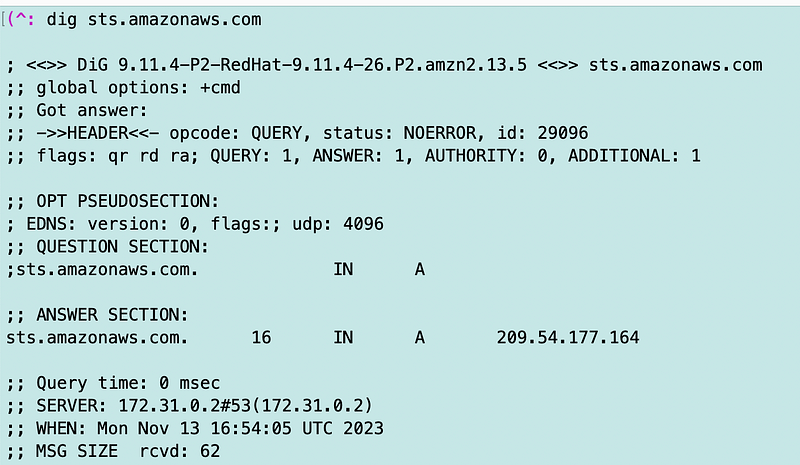

The first thing I can do is run dig in my VM to see what IP addresses I’m getting back for that global endpoint.
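A minimal sketch of that check in bash, assuming `dig` is installed (it comes from the bind-utils package on Amazon Linux). The `is_rfc1918` and `classify_host` helpers are my own illustrative names, not AWS tools; `is_rfc1918` simply tests for the three RFC 1918 private ranges (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16).

```shell
#!/usr/bin/env bash
# Sketch: resolve a hostname and label each IPv4 answer as private or public.

# Succeed if $1 is an IPv4 address in one of the RFC 1918 private ranges.
is_rfc1918() {
  local a b
  IFS=. read -r a b _ <<< "$1"
  [[ "$a" =~ ^[0-9]+$ && "$b" =~ ^[0-9]+$ ]] || return 1
  (( a == 10 )) && return 0
  (( a == 172 && b >= 16 && b <= 31 )) && return 0
  (( a == 192 && b == 168 )) && return 0
  return 1
}

# Print "IP private" or "IP public" for each A record returned for $1.
classify_host() {
  local ip
  # dig +short also prints CNAME targets, so keep only dotted-quad lines.
  dig +short A "$1" | grep -E '^[0-9]+(\.[0-9]+){3}$' | while read -r ip; do
    if is_rfc1918 "$ip"; then
      echo "$ip private"
    else
      echo "$ip public"
    fi
  done
}

# Usage (run on the instance inside the VPC):
#   classify_host sts.amazonaws.com
#   classify_host sts.us-east-2.amazonaws.com
```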

That is not a private IP address. It is also an interesting IP address I don’t recall seeing before:

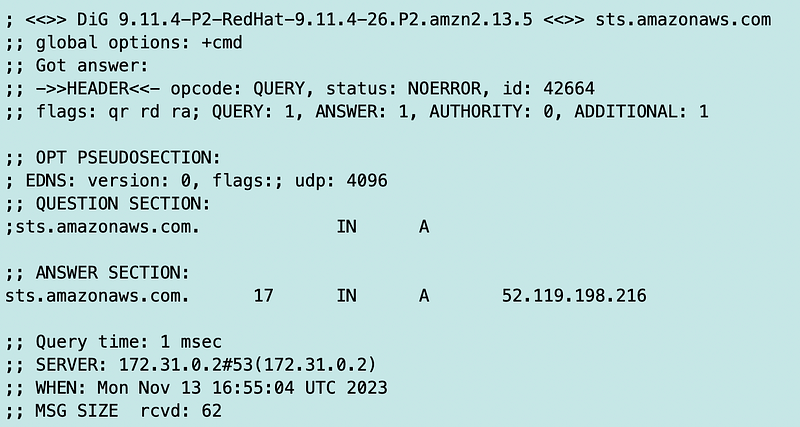

I run the dig command again and this time I get the following:

I run the command a bunch more times and I keep getting the 209 address.

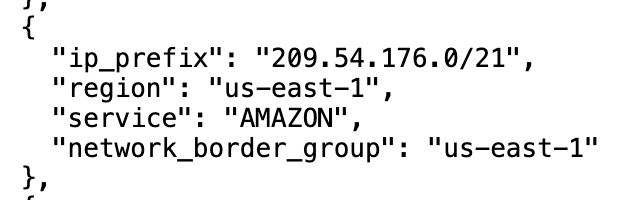

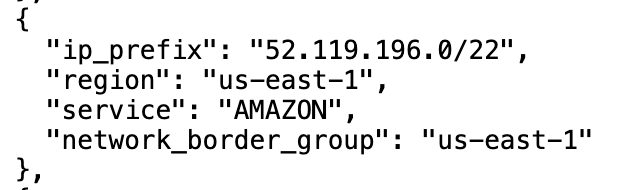

Just out of curiosity I look the IP up in the JSON IP Ranges published by AWS that define which IP ranges relate to which services.
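A rough way to do the same lookup from the command line, assuming `curl` and `jq` are available. AWS publishes the list at https://ip-ranges.amazonaws.com/ip-ranges.json, with a `prefixes` array whose entries carry `ip_prefix`, `region`, and `service` fields. The helper functions are hypothetical names for this sketch, and the pure-bash CIDR math is slow over thousands of prefixes, but fine for a one-off check.

```shell
#!/usr/bin/env bash
# Sketch: find which published AWS IPv4 prefixes contain a given address.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IPv4 address $1 falls inside CIDR block $2.
ip_in_cidr() {
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits="${2#*/}"
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# Read ip-ranges.json on stdin; print "prefix region service" for each
# IPv4 prefix that contains the address in $1. Requires jq.
lookup_aws_ip() {
  local target="$1" prefix region service
  jq -r '.prefixes[] | "\(.ip_prefix) \(.region) \(.service)"' |
    while read -r prefix region service; do
      if ip_in_cidr "$target" "$prefix"; then
        echo "$prefix $region $service"
      fi
    done
}

# Usage:
#   curl -s https://ip-ranges.amazonaws.com/ip-ranges.json | lookup_aws_ip 52.94.1.1
```

An address will often match both a generic AMAZON entry and a narrower service-specific one, so expect more than one line of output.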

The AWS IP range list is not always that helpful because it doesn’t always say which service uses a given IP range. In this case the entry generically says “AMAZON,” as many of them do. This could be better, but I’m sure it’s difficult to manage a network at AWS’s scale.

I suspected the other address would be labeled just as generically. Yes.

Well, at least we know the IP range is in us-east-1. I can also see that these names resolve to public IPs, not private ones, even though I have an STS VPC Endpoint.

If you’re not familiar with the above concepts, I’ve written about all of them in prior posts.

So first of all, let me double check that I have set up private DNS on my STS endpoint.
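One way to confirm this from the CLI instead of the console, sketched below. The VPC ID in the usage comment is a placeholder, and `show_sts_endpoint` is a hypothetical helper; it relies on the standard interface endpoint service name pattern `com.amazonaws.<region>.sts` and the `PrivateDnsEnabled` field that `aws ec2 describe-vpc-endpoints` returns.

```shell
#!/usr/bin/env bash
# Sketch: confirm an STS interface endpoint exists in a VPC and has private DNS on.

# Service name AWS uses for the regional STS interface endpoint.
sts_service_name() {
  echo "com.amazonaws.$1.sts"
}

# Print endpoint ID, state, and PrivateDnsEnabled for STS endpoints in a VPC.
show_sts_endpoint() {
  local vpc_id="$1" region="$2"
  aws ec2 describe-vpc-endpoints \
    --region "$region" \
    --filters "Name=vpc-id,Values=$vpc_id" \
              "Name=service-name,Values=$(sts_service_name "$region")" \
    --query 'VpcEndpoints[].[VpcEndpointId,State,PrivateDnsEnabled]' \
    --output text
}

# Usage:
#   show_sts_endpoint vpc-0123456789abcdef0 us-east-2
```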

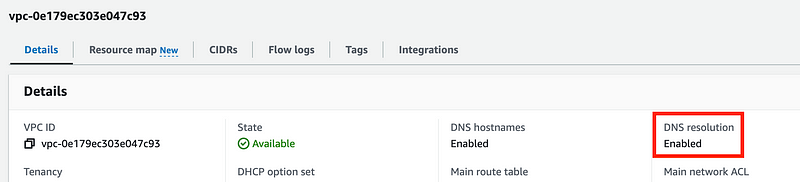

DNS resolution is enabled:

I would expect to be getting back a private IP here, which would work for my networking rules. However, in some cases, AWS does return a public IP that remains on the AWS network. I presume they have this configured correctly. People with higher concerns than me may want to verify this further with AWS.

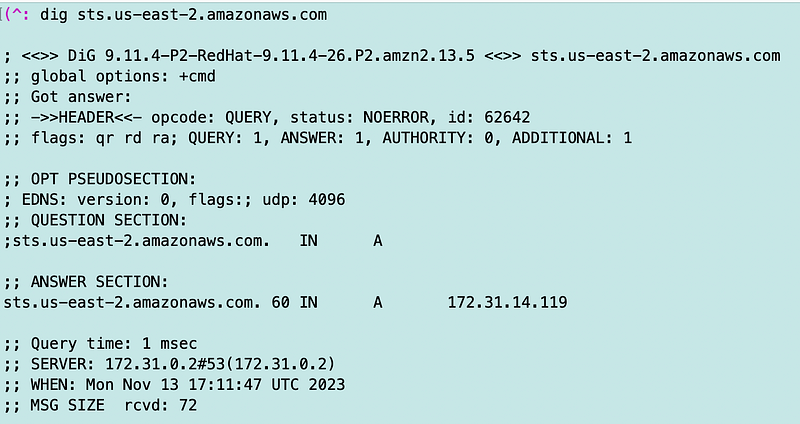

What if I try DNS resolution on the regional endpoint?

Find the regional endpoint for your region from the list above.

Out of curiosity again I’m going to try the first two.

Aha! A local IP address! That’s what we wanted in the first place.

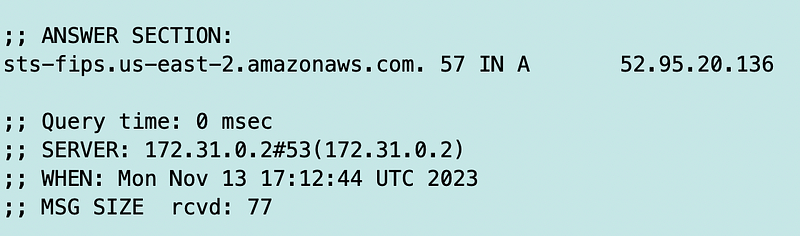

Let’s try the FIPS endpoint.

For reasons I don’t understand, this returns a public IP address. It seems like if you are using FIPS for improved security, you would want a private IP address.

That’s something you may want to explore if using FIPS but not the point of this post. I am not using FIPS and I want to see if a local IP address solves my problem. I want to keep the traffic on local IP addresses which is why I set up VPC Endpoints in the first place.

Changing the code to use a regional endpoint

Further reviewing the documentation:

All new SDK major versions releasing after July 2022 will default to regional. New SDK major versions might remove this setting and use regional behavior. To reduce future impact regarding this change, we recommend you start using regional in your application when possible.
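The setting that quote refers to can be applied a few ways. Below is a sketch of three, assuming a Linux shell: the `AWS_STS_REGIONAL_ENDPOINTS` environment variable, the `sts_regional_endpoints` key in the shared config file, or an explicit `--endpoint-url` on a single call. The function names are illustrative, and the URL helper only covers the standard `aws` partition (not GovCloud or China).

```shell
#!/usr/bin/env bash
# Sketch: three ways to keep AWS CLI calls to STS on a regional endpoint.

# Regional STS endpoint URL for a region in the standard "aws" partition only.
sts_regional_url() {
  echo "https://sts.$1.amazonaws.com"
}

# Option 1: environment variable read by the AWS CLI and SDKs (current shell).
use_regional_sts_env() {
  export AWS_STS_REGIONAL_ENDPOINTS=regional
}

# Option 2: persist the same setting for a named profile in ~/.aws/config.
use_regional_sts_config() {
  aws configure set sts_regional_endpoints regional --profile "$1"
}

# Option 3: pin one call to an explicit endpoint URL.
caller_identity_regional() {
  aws sts get-caller-identity --region "$1" --endpoint-url "$(sts_regional_url "$1")"
}

# Usage:
#   use_regional_sts_env
#   caller_identity_regional us-east-2
```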

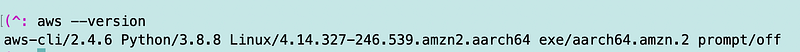

That’s odd. I thought my AWS CLI was the latest version. Let’s double check.

What is the latest version of the AWS CLI?

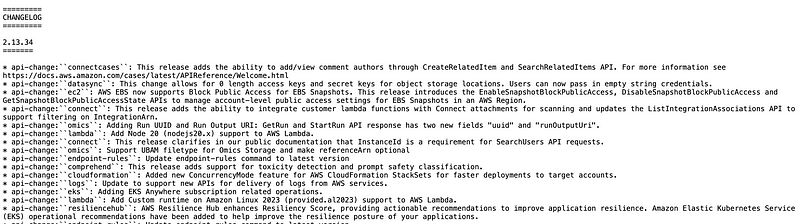

I click the changelog and determine that somehow my CLI version is not up to date. That’s odd and really not good. I’m going to fix it!

https://raw.githubusercontent.com/aws/aws-cli/v2/CHANGELOG.rst
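A small sketch for spotting this drift automatically. It assumes each release in that CHANGELOG.rst appears as a bare version number on its own line (check the file if the format differs), that `aws --version` prints something like `aws-cli/2.x.y Python/...`, and that `sort -V` is available (GNU coreutils). All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compare the installed AWS CLI v2 version with the newest changelog entry.
CHANGELOG_URL="https://raw.githubusercontent.com/aws/aws-cli/v2/CHANGELOG.rst"

# Print the first stdin line that is a bare X.Y.Z version number.
# Assumption: release headings in CHANGELOG.rst are "2.x.y" on their own line.
latest_from_changelog() {
  grep -m1 -E '^[0-9]+\.[0-9]+\.[0-9]+$'
}

# Extract X.Y.Z from "aws --version" output such as "aws-cli/2.x.y Python/...".
installed_cli_version() {
  sed -n 's|^aws-cli/\([0-9.]*\).*|\1|p'
}

# Succeed if version $1 sorts strictly before version $2.
version_lt() {
  [ "$1" != "$2" ] && [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$1" ]
}

# Usage:
#   latest=$(curl -s "$CHANGELOG_URL" | latest_from_changelog)
#   installed=$(aws --version 2>&1 | installed_cli_version)
#   version_lt "$installed" "$latest" && echo "AWS CLI $installed is older than $latest"
```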

I have a process for building secure VMs that rebuilds and updates all software which I’ll hopefully explain later in a blog post. I used it to build VMs for every class and I use it to build a new VM for every penetration test to make sure my code is up to date. I guess I haven’t rebuilt it in a while and also — the following command does not update the AWS CLI apparently. Or at least it’s not working for me. Keep reading.

sudo yum update

Also, as I’ve written about many times before, in my testing that command doesn’t update everything if you restrict your system to a private network, on either Amazon Linux 2 or the newer Amazon Linux 2023. I wish AWS would fix this so you can get all updates over a private network and private IP addresses when you are using VPC endpoints and the update is coming from AWS.

I spent some time updating my AWS CLI, which I moved into a separate post.

Wow, this is a winding blog post, as all of this is interrelated.

Testing the container with an updated AMI

Ok now let’s see what happens when I run my container. Does STS work? Does it use the local endpoint?

No. I still get the same error, which is incorrect.

An error occurred (RegionDisabledException) when calling the AssumeRole operation: STS is not activated in this region for account:xxxxxxxxxxxx. Your account administrator can activate STS in this region using the IAM Console.

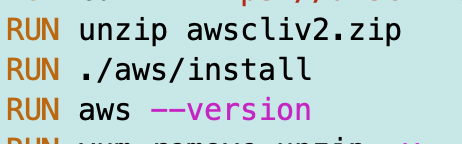

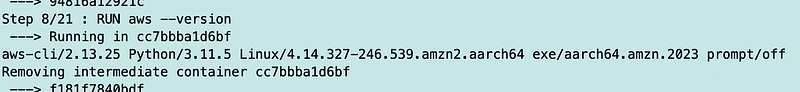

But wait. Which AWS CLI version is being used? It’s in the container, and I’m installing it in the Dockerfile. Let’s add a version check.
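A sketch of that check from the host side, assuming Docker and an image tag of your own (the `my-sts-test:latest` tag below is hypothetical). Overriding the entrypoint with `--entrypoint aws` bypasses whatever the image normally runs, such as a Lambda runtime entrypoint, so the container just prints its CLI version. Inside the Dockerfile, a `RUN aws --version` line after the install step would also record the version in the build output.

```shell
#!/usr/bin/env bash
# Sketch: print the AWS CLI version on the host and inside a container image.

# Run "aws --version" inside image $1, bypassing the image's own entrypoint.
container_cli_version() {
  docker run --rm --entrypoint aws "$1" --version
}

# Show both versions side by side; 2>&1 covers CLIs that print to stderr.
compare_cli_versions() {
  echo "host: $(aws --version 2>&1)"
  echo "container: $(container_cli_version "$1" 2>&1)"
}

# Usage:
#   compare_cli_versions my-sts-test:latest
```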

Well, we had the correct version in the container the whole time.

But I still needed to update the AWS CLI on my local host.

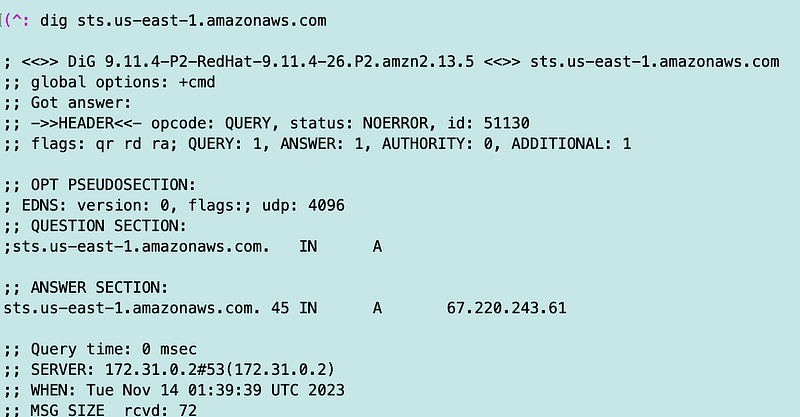

Checking DNS again and VPC Flow Logs

I check dig again to see what IP I’m getting back for the endpoints. The results have not changed.

I check VPC Flow Logs to see what’s getting blocked.
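If the flow logs use the default format, rejected traffic is easy to filter with `awk`. This sketch assumes the default field order, in which `action` is the 13th field; a custom log format moves the columns. The log group name in the usage comment is a placeholder, and the functions are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: pull REJECT records out of VPC Flow Log lines in the default format:
#   version account-id interface-id srcaddr dstaddr srcport dstport protocol
#   packets bytes start end action log-status

# Print "srcaddr:srcport -> dstaddr:dstport" for rejected flows read from stdin.
rejected_flows() {
  awk '$13 == "REJECT" { print $4 ":" $6 " -> " $5 ":" $7 }'
}

# Same, limited to flows where the source or destination equals address $1.
rejected_flows_for() {
  awk -v ip="$1" '$13 == "REJECT" && ($4 == ip || $5 == ip) { print $4 ":" $6 " -> " $5 ":" $7 }'
}

# Usage with flow logs delivered to CloudWatch Logs:
#   aws logs filter-log-events --log-group-name my-flow-log-group \
#     --filter-pattern REJECT --query 'events[].[message]' --output text | rejected_flows
```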

I didn’t find what I was looking for — my IP address trying to reach one of those STS endpoints. But I did find this interesting entry.

Well, whoever is on the Linode network trying to masquerade as a local IP address in the 172.X range on port 4444 (the default Metasploit port) can just stop now. Do not allow 172.0.0.0/8 into your network from the Internet on the assumption that it is private: only 172.16.0.0/12 is private address space (RFC 1918), and the rest of that range is public.

I can’t find any rejected traffic in my VPC flow logs for the two addresses above used by the STS global endpoint. So I presume that traffic is headed out over the private endpoint to STS and getting blocked within the AWS network somewhere.

Also, is the CLI actually using the regional STS endpoint? How can we tell?

What if I run tcpdump and look for those IP addresses when I run my container command?

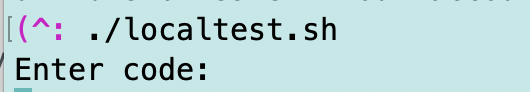

I run my code up to this point:

Then I run tcpdump in another window excluding SSH traffic.

sudo tcpdump port not 22

I stop tcpdump after I get the error.

Here I can confirm that STS is correctly trying to reach the regional domain:

20:24:40.757708 IP ip-172-31-12-62.us-east-2.compute.internal.46877 > ip-172-31-0-2.us-east-2.compute.internal.domain: 54238+ A? sts.us-east-2.amazonaws.com. (45)
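To pull just the DNS lookups out of a capture like the one above, here is a sketch that captures only port 53 and extracts the name after `A?` or `AAAA?`. The `-l` flag line-buffers tcpdump output so it can be piped, and `-n` skips reverse lookups, so addresses appear as numbers rather than `ip-...compute.internal` names. `dns_queries` is a hypothetical helper, and the regular expression assumes tcpdump’s usual one-line DNS query format shown above.

```shell
#!/usr/bin/env bash
# Sketch: list the hostnames being looked up in tcpdump DNS output.
# Capture in one window while the container command runs in another:
#   sudo tcpdump -l -n port 53 | dns_queries

# Print the queried name from each A or AAAA request line read from stdin.
# Response lines ("A 1.2.3.4") have no "?" after the type, so they are skipped.
dns_queries() {
  sed -n -E 's/.* (A|AAAA)\? ([^ ]+) .*/\2/p'
}
```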

I also can’t really see anything blocked in the traffic from my side, unless I’m missing something. So I can only speculate that the request travels across the network inside AWS, gets blocked somewhere I don’t control, and at that point some software returns these seemingly inaccurate error messages.

Testing from a wide-open network

I already basically tested that theory when I ran the code in AWS CloudShell and it worked.

But to fully test this theory, I can create a new wide open subnet with no VPC Endpoints and see if I can get my code to work that way.

I create a new test VPC, a subnet, an Internet Gateway, and a route that sends traffic for the entire Internet (0.0.0.0/0) to the IGW.
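The same setup from the CLI, as a sketch. The CIDR blocks are arbitrary test values, the function name is hypothetical, and it assumes default credentials and region are already configured. The last call turns on automatic public IPv4 addresses for instances launched in the subnet. Delete these resources after testing.

```shell
#!/usr/bin/env bash
# Sketch: throwaway VPC with one public subnet routed to an Internet gateway.
# Prints the new subnet ID.

create_open_test_network() {
  local vpc_cidr="${1:-10.99.0.0/16}" subnet_cidr="${2:-10.99.1.0/24}"
  local vpc subnet igw rtb

  vpc=$(aws ec2 create-vpc --cidr-block "$vpc_cidr" --query Vpc.VpcId --output text)
  subnet=$(aws ec2 create-subnet --vpc-id "$vpc" --cidr-block "$subnet_cidr" \
    --query Subnet.SubnetId --output text)
  igw=$(aws ec2 create-internet-gateway \
    --query InternetGateway.InternetGatewayId --output text)
  aws ec2 attach-internet-gateway --internet-gateway-id "$igw" --vpc-id "$vpc" > /dev/null

  # Send everything not local to the VPC out through the Internet gateway.
  rtb=$(aws ec2 create-route-table --vpc-id "$vpc" --query RouteTable.RouteTableId --output text)
  aws ec2 create-route --route-table-id "$rtb" --destination-cidr-block 0.0.0.0/0 \
    --gateway-id "$igw" > /dev/null
  aws ec2 associate-route-table --route-table-id "$rtb" --subnet-id "$subnet" > /dev/null

  # Give instances launched in this subnet a public IPv4 address.
  aws ec2 modify-subnet-attribute --subnet-id "$subnet" --map-public-ip-on-launch > /dev/null

  echo "$subnet"
}
```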

I launch an instance from the image I created above, so I’m using the exact same code.

Yes. I am correct.

When I run this code in a completely wide-open network it works.

When I run the code in a private network with an STS private endpoint it does not work.

But wait. Why was I able to assume a role with MFA from the AWS CLI configured on an EC2 instance earlier? That EC2 instance was running in the same private network.

Here’s why — I think.

In that case, I was trying to call STS to assume a role within the same account.

In the second case, I’m trying to call a cross-account role to run the assume role in an external account.

So this all has something to do with cross-account roles.

I’m wondering if it has to do with the restriction that prevents transitive routing on peered networks.

A VPC peering connection is a one to one relationship between two VPCs. You can create multiple VPC peering connections for each VPC that you own, but transitive peering relationships are not supported. You do not have any peering relationship with VPCs that your VPC is not directly peered with.

When the traffic leaves the VPC and hits the STS VPC Endpoint then it’s trying to jump over to some third VPC or the Internet and that’s not allowed?

Either that or it’s just some restriction to prevent unauthorized cross-account networking. But then why would the Lambda function be blocked when performing this same action in a single account?

Well, I sort of solved the problem, but not how I wanted to. I mean, it works, but it’s not really a correct solution for a private network. If I am in a private network, I want to stay in a private network. But apparently I cannot do that and call cross-account roles.

It kind of makes sense. You don’t want your developers calling cross-account roles and exfiltrating data when you think everything is in a private network, right?

What if you peer the local and remote networks? Would that work? I don’t know. But how can you peer networks if someone is using CloudShell? You don’t control that network.

What if I test my code on Lambda in a public network? Would that work as well? I don’t know. That would be interesting to try later, but it’s not the solution I want. I want things running in a private network.

The main problem that caused me a ton of lost time here was inaccurate error messages. I really wish AWS would fix the error messages for both of these scenarios to accurately state the problem and how to fix it (if it is fixable).

Also, I really need the Lambda solution to work in a private network.

For my current testing, I guess I can just test in a public network for any cross-account access and eventually get back to hosting a container in the AWS Marketplace later.

This is enough testing that took me way off track and it’s time to walk the dog!

Update:

Oh hang on…..

I was testing the next blog post when I realized that I had the region hardcoded in the STS call, which forces the request to use the regional endpoint, or so I thought. But maybe I had a bug I didn’t notice, where I was forcing the use of the wrong region in the wrong account.

I was using the local region where I’m testing in that call instead of the remote region where the role is located. That was working before I went to walk the dog…now it is not.

What happened

Anyway, I adjusted that code to use the correct region with the correct credentials — in other words:

Remote role — us-east-1

Local role — us-east-2
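One way to keep each set of credentials tied to the right region is a named profile per role. This sketch writes a cross-account profile with `aws configure set`; the profile names, role ARN, and MFA device ARN are hypothetical placeholders, and `source_profile` defaults to `default` here as an assumption. With `mfa_serial` set, the CLI prompts for the MFA code when the profile is used.

```shell
#!/usr/bin/env bash
# Sketch: a named profile for the cross-account role that carries its own
# region, so commands run with it don't inherit the local region.

configure_remote_profile() {
  local profile="$1" role_arn="$2" mfa_arn="$3" region="$4" source="${5:-default}"
  aws configure set role_arn "$role_arn" --profile "$profile"
  aws configure set source_profile "$source" --profile "$profile"
  aws configure set mfa_serial "$mfa_arn" --profile "$profile"
  aws configure set region "$region" --profile "$profile"
}

# Usage (remote role in us-east-1, local credentials in us-east-2):
#   configure_remote_profile remoterole \
#     arn:aws:iam::111111111111:role/example-remote-role \
#     arn:aws:iam::222222222222:mfa/example-user \
#     us-east-1
#   aws sts get-caller-identity --profile remoterole
```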

Now I can make the call again in a public network. What about a private network?

AHAHAHAHHAHA!! Yay!!!!

This does actually work in a private network. Or does it?

Things work, then they don’t, and it all feels very random. As soon as this was working, it started not working again.

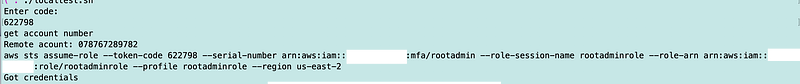

Then I realized, it’s just going very slowly when running this command:

account=$(aws sts get-caller-identity --query Account --output text --profile "$remoterolename" --region "$remoteregion")

I can see attempts to access the STS endpoint public IP for us-east-2 in a private network. What?

Then it times out on:

Connect timeout on endpoint URL: "https://sts.us-east-1.amazonaws.com/"
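When the endpoint is unreachable, the CLI waits out its connect timeout and may retry before failing. A sketch that fails faster, using the AWS CLI global options `--cli-connect-timeout` and `--cli-read-timeout` (both in seconds) plus the `AWS_MAX_ATTEMPTS` environment variable to limit retries. The specific values are arbitrary, and `remote_account_id` is a hypothetical wrapper around the command above.

```shell
#!/usr/bin/env bash
# Sketch: the get-caller-identity call from above, tuned to fail fast.

remote_account_id() {
  local profile="$1" region="$2"
  # AWS_MAX_ATTEMPTS=1 allows a single attempt with no retries.
  AWS_MAX_ATTEMPTS=1 aws sts get-caller-identity \
    --query Account --output text \
    --profile "$profile" --region "$region" \
    --cli-connect-timeout 5 --cli-read-timeout 10
}

# Usage, with the same variables as the original command:
#   account=$(remote_account_id "$remoterolename" "$remoteregion") ||
#     echo "STS call to $remoteregion failed or timed out" >&2
```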

So I thought that was because the remote role I’m using is in us-east-1 and the local instance is in us-east-2. But the remote role needs to take actions in us-east-1. Does it matter which endpoint it calls if it specifies the region in the request? I presume it does.
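As I read the AWS STS documentation, session tokens from regional STS endpoints are valid in all Regions, so one option to try is assuming the remote role through the endpoint the VPC can reach privately (us-east-2 here) and then using those credentials for API calls in us-east-1. A sketch under that assumption; the ARNs, session name, and MFA code are placeholders, and the follow-up API call still needs its own network path to the us-east-1 service endpoint.

```shell
#!/usr/bin/env bash
# Sketch: assume the cross-account role through one regional STS endpoint,
# then use the temporary credentials for API calls in another region.
# Assumption: the credentials are valid in the target region (check the STS
# documentation for opt-in Region rules).

# Print AccessKeyId, SecretAccessKey, SessionToken (tab-separated) for the role.
assume_via_region() {
  local role_arn="$1" sts_region="$2" mfa_arn="$3" token="$4"
  aws sts assume-role \
    --role-arn "$role_arn" \
    --role-session-name sts-region-test \
    --serial-number "$mfa_arn" --token-code "$token" \
    --region "$sts_region" \
    --query 'Credentials.[AccessKeyId,SecretAccessKey,SessionToken]' \
    --output text
}

# Export the three values printed by assume_via_region into this shell.
export_credentials() {
  read -r AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN <<< "$1"
  export AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN
}

# Usage (the ARNs and MFA code are placeholders):
#   creds=$(assume_via_region arn:aws:iam::111111111111:role/example-remote-role \
#     us-east-2 arn:aws:iam::222222222222:mfa/example-user 123456)
#   export_credentials "$creds"
#   aws ec2 describe-vpcs --region us-east-1
```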

Hmm. If I change the get account request to us-east-2, the commands run quickly. But I am not deploying anything; I’m only getting an account number and assuming a role.

But now my request to assume credentials in us-east-1 in the remote account is taking forever. So I change that to us-east-2 and that runs quickly.

But what happens when I need to perform operations in us-east-2 in the remote account? We’ll find out.

Ok, wait a minute. When I change the STS call back to us-east-2, I’m getting this error again after it runs successfully a few times. What is going on??

An error occurred (RegionDisabledException) when calling the AssumeRole operation: STS is not activated in this region for account:xxxxxxxxxxxx. Your account administrator can activate STS in this region using the IAM Console.

Argh.

I finally think I nailed this down.

If you use a VPC Endpoint and specify private DNS, you only get a private IP address for the specific endpoint address you add to your VPC. In my case I added sts.us-east-2.amazonaws.com to my VPC.

Now I need to connect to an endpoint in another account in a different region than my endpoint so I’m getting back a public IP address.

Since I don’t allow that public IP address in my NACLs this is taking forever to resolve. I suppose at some point I added one of the necessary IP addresses to be allowed in my NACL so that is working? Or does it eventually use the private IP address?

No idea. I didn’t wait that long.

What if I add a VPC Endpoint for another region to my subnet?

Oh look. I can’t.

So this is a problem for customers who want to maintain cross-region traffic on private networks.

Even if you could add a second VPC Endpoint, these things already cost so much.

Oh well, I kind of figured out a bit more about this problem. Maybe AWS will just fix the DNS problem and make life easier.

Update: So I researched this further, and according to this blog post you can set up peering to get private DNS responses and send the traffic through a peering connection to a VPC Endpoint in the desired region.

But like I said, this is going to be even more expensive since you’re paying for peering and additional VPC endpoints. I started setting up a transit gateway and in my particular environment my costs went up over 2000%. So, I’m skipping that option for now and working in my local network and account for the moment.

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2023

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab

Need Help With Cybersecurity, Cloud, or Application Security?

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

🔒 Request a penetration test or security assessment

🔒 Schedule a consulting call

🔒 Cybersecurity Speaker for Presentation

Follow for more stories like this:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

❤️ Sign Up my Medium Email List

❤️ Twitter: @teriradichel

❤️ LinkedIn: https://www.linkedin.com/in/teriradichel

❤️ Mastodon: @teriradichel@infosec.exchange

❤️ Facebook: 2nd Sight Lab

❤️ YouTube: @2ndsightlab