A *Working* Container That Requires an MFA Code on Every Invocation and Runs A Script

ACM.385 Getting the container working with the new directory structure in a local network to deploy resources on AWS


In the last few posts I wrote about restricting the encryption algorithm used when creating EC2 key pairs to reduce the chance of an attack on SSH due to a recently announced vulnerability.

Prior to that I was trying to get my container that requires MFA for deployments to work in a private network that assumed a role in an account in another organization in a different region. The results were very confusing at first due to inaccurate error messages so I had to perform some reverse engineering to figure out what was really going on. I sort of got it working but not fully or ideally.

In this post, I want to get my deployment container completely working and run scripts without delays or public IP addresses.

Re-organizing and re-running the root scripts in Cloud Shell

I’m going to run the root script I was testing earlier in Cloud Shell in the remote account, but this time in my local account using the root user. I need to make sure I have everything deployed for the rootadmin user in this account before proceeding.

There’s one change I want to make while going through this.

- I only want to deploy the rootadmin user and policy scripts with the root user.

- I’m going to deploy the organization with the rootadmin user.

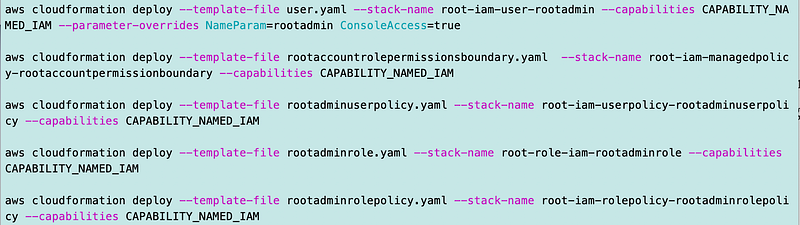

I split that code into two scripts and I now have this much simpler script in the root folder:

deploy/root/deploy_rootadmin.sh

I moved all the code to deploy the organization to:

deploy/rootadmin/deploy_organization.sh

I’m going to test my container with that deploy_organization.sh script.

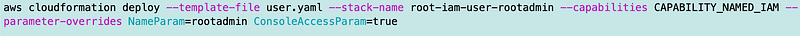

Next I uploaded the necessary files to run the root script in AWS Cloud Shell. I ran the deploy_rootadmin.sh script to deploy all the root admin requirements.

Once that completed I had the rootadmin user in that account that I can use to test my container.

Add MFA and store the rootadmin credentials in the AWS Secret

Recall that when I create a user the initial password is stored in Secrets Manager. This is a temporary password that the user will reset. Someone would have to get that to the user in a secure manner (i.e., not email).

So I go to get the password for the rootadmin user but it’s not there. I hit another glitch in CloudFormation while fixing this.

I fixed that and re-deployed my user and now I can see the password in Secrets Manager.

I use the password to login as the rootadmin user in an incognito window.

I change the password and add hardware MFA. Recall that you have to log out and back in to create the virtual MFA device, and that we are restricting the device name to the current username since there’s no way to write a policy that restricts users to managing only their own virtual MFA devices. Hopefully AWS will fix that. They need to add the user as a resource for the CreateVirtualMFADevice action.

Next I create a secret key and access key. I need those to assume a role with MFA for my container credentials.

Recall that the container looks for those credentials in a secret. The secret has to be in the account where a local profile running the script can access it. This seems silly because to retrieve the credentials I could create an AWS CLI profile using the credentials I am trying to retrieve. But ultimately, the role on an EC2 instance will have access to retrieve the credentials so that’s why I’m testing it this way.

For this test, my existing OrgRoot user will be the local admin and has access to the secret. I’m thinking about an alternate solution so I’m not going to dive into this issue too much here.

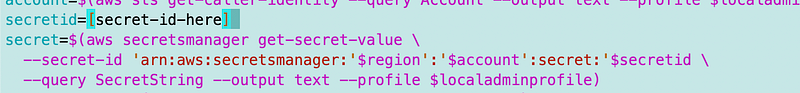

I was testing the role assumption in this file:

/awsdeploy/localtest.sh

I’m just changing the hardcoded secret id for this test.

Recall that the secret ID is in the ARN and does not match the secret name. AWS appends a hyphen and six random characters to the end of the secret name to come up with the secret ID.
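To make the name-versus-ID difference concrete, here is a minimal sketch that recovers the secret name from an ARN by stripping that random suffix. The function name, account ID, and suffix are made up for illustration; this is not code from my scripts.

```shell
# Hypothetical helper: derive the secret name from a Secrets Manager ARN.
secret_name_from_arn() {
  local arn="$1"
  # Everything after ":secret:" is the secret name plus the random suffix.
  local id="${arn##*:secret:}"
  # Strip the trailing hyphen and six characters that AWS appended.
  echo "${id%-??????}"
}

# Example with a made-up account ID and suffix:
secret_name_from_arn "arn:aws:secretsmanager:us-east-2:111122223333:secret:rootadmin-a1b2c3"
```

Because the pattern removes only the final hyphen plus six characters, a secret name that itself contains hyphens is left intact.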

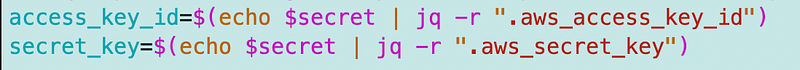

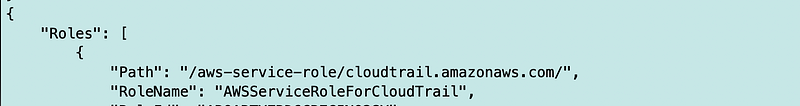

The test script is looking for these two values in the secret so I populate these with my credentials:

Recall that if I have designed my policies correctly these credentials can do almost nothing without MFA. The credentials are used to assume a role with MFA and then the role can take a limited set of actions.
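As a rough illustration of that role assumption step, the sketch below wraps `aws sts assume-role` with an MFA serial number and token code. The function name, the default session name, the `DRY_RUN` switch, and the example ARNs are assumptions for this example, not what my container actually runs.

```shell
# Hypothetical wrapper around "aws sts assume-role" that requires an MFA code.
# Set DRY_RUN=1 to print the command instead of calling AWS.
assume_role_with_mfa() {
  local role_arn="$1" mfa_serial="$2" token_code="$3"
  local session="${4:-deploy-session}"

  # Reject anything that is not a six-digit code before calling AWS.
  case "$token_code" in
    [0-9][0-9][0-9][0-9][0-9][0-9]) ;;
    *) echo "MFA code must be six digits" >&2; return 1 ;;
  esac

  ${DRY_RUN:+echo} aws sts assume-role \
    --role-arn "$role_arn" \
    --role-session-name "$session" \
    --serial-number "$mfa_serial" \
    --token-code "$token_code"
}

# Dry run with made-up ARNs and a made-up code:
DRY_RUN=1 assume_role_with_mfa \
  "arn:aws:iam::111122223333:role/rootadminrole" \
  "arn:aws:iam::111122223333:mfa/rootadmin" \
  123456
```

Without `DRY_RUN`, the call returns temporary credentials for the role, and it only succeeds if the long-lived credentials and the MFA code are both valid.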

So I run the script and it’s still running slowly on role assumption. I think that’s because I forgot to change my remote region back to us-east-2 to match my local environment. (This is one of the things I had to reverse-engineer in prior posts.)

Once the commands are configured to run in us-east-2, the region of the EC2 instance I am using to run them, the commands in my localtest.sh script run quickly, even though I’m running the commands in a completely different account from where they are executing.

The next problem is that the OrgAdmin user does not have permission to pull the container from the ECR repository. This is not my long term solution, so for the purposes of this test, I change the user to the SandboxAdmin user for those particular commands.

So my container runs to the end but it hits an error on the script path. It’s looking for a script in the rootadminrole directory to match the profile and role name. Well, that’s actually more accurate so I rename the directory, build and push the container and try again.
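The lookup convention described above, where the script lives in a directory named after the role, might be sketched like this. The function name and the base directory argument are hypothetical; only the deploy/rolename/script layout comes from my directory structure.

```shell
# Hypothetical helper: find a script under deploy/<role name>/ and fail
# with a clear message when the directory name does not match the role.
resolve_script_path() {
  local base="$1" role="$2" script="$3"
  local path="${base%/}/deploy/${role}/${script}"
  if [ ! -f "$path" ]; then
    echo "script not found: $path" >&2
    return 1
  fi
  echo "$path"
}

# Usage (the base directory is an assumption):
#   resolve_script_path /awsdeploy rootadminrole listroles.sh
```

A check like this surfaces a mismatched directory name right away instead of at the end of a container run.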

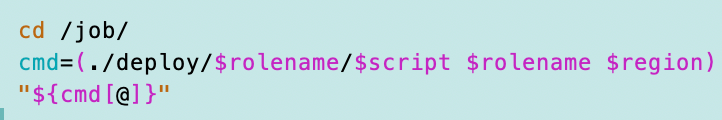

Then one last thing I realize is that I have to pass the rolename to the script.

I was also having some issues getting the command to execute properly. I ended up using an approach mentioned in this blog post:

And it works!

I still have a lot of cleanup and testing to do but now I can run my rootadmin scripts in the container or outside the container while testing them as demonstrated in this post:

Here’s my code for now:

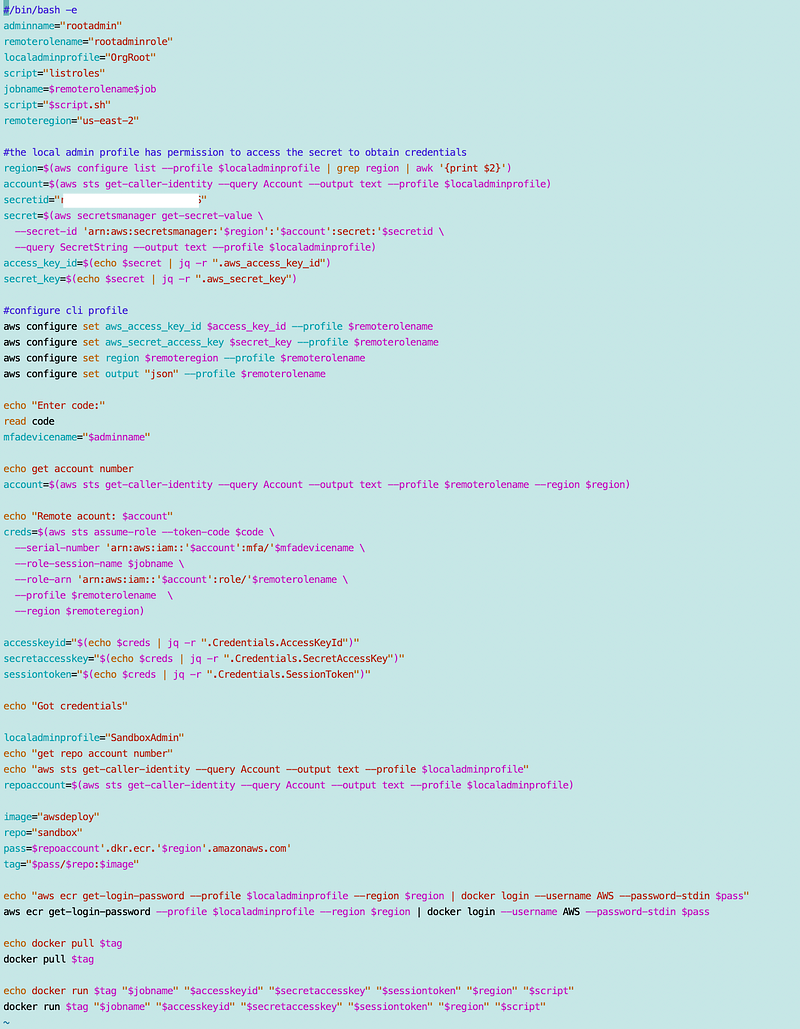

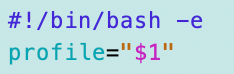

localtest.sh

job/run.sh

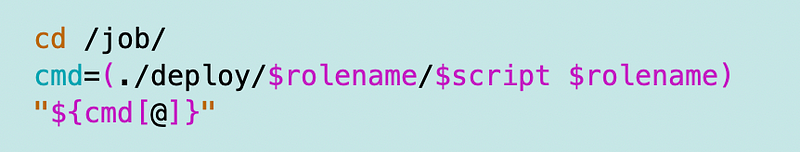

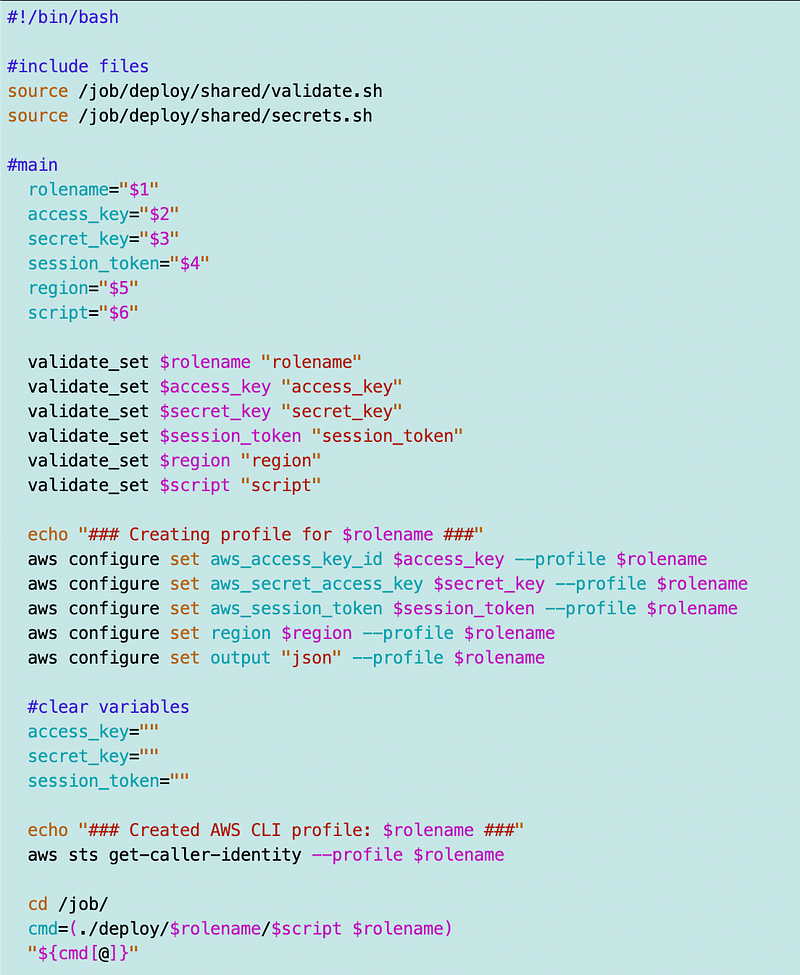

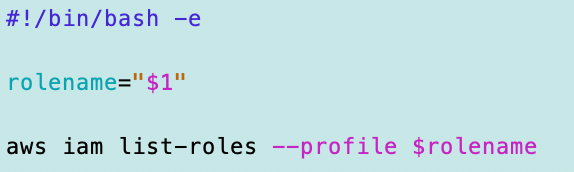

deploy/rootadminrole/listroles.sh

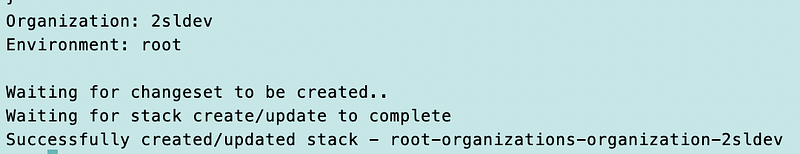

Deploying my organization with the container

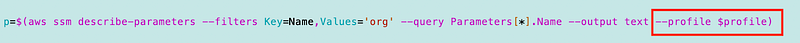

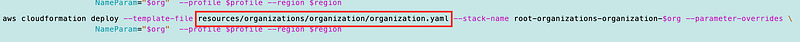

What if I want to run the script to deploy my organization?

First I have to add the AWS CLI profile as an argument.

Then I need to add the profile to all the commands since we’re not running the script in Cloud Shell anymore.

I need to align my template paths with the directory structure.

Now here again for some reason the script is taking a very long time…perhaps something that the script is doing is trying to reach a different region?

What if I hardcode the region in every AWS CLI call to the region I’m working in? I pass the region into the script and use it to set the region on all my AWS CLI commands.
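Passing the profile and region into a script and applying them to every AWS CLI call could look roughly like the sketch below. The wrapper name `aws_cmd`, the default values, and the `DRY_RUN` switch are assumptions for illustration.

```shell
# Hypothetical: the profile and region arrive as the first two script arguments.
PROFILE="${1:-rootadminrole}"
REGION="${2:-us-east-2}"

# Route every AWS CLI call through one wrapper so that no command can
# silently fall back to a different default profile or region.
# Set DRY_RUN=1 to print the command instead of running it.
aws_cmd() {
  ${DRY_RUN:+echo} aws "$@" --profile "$PROFILE" --region "$REGION"
}

# Dry run of the organization lookup:
DRY_RUN=1 aws_cmd organizations describe-organization
```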

That didn’t help. The command to obtain the organization information is still taking an excessively long time. In fact, it is taking even longer now.

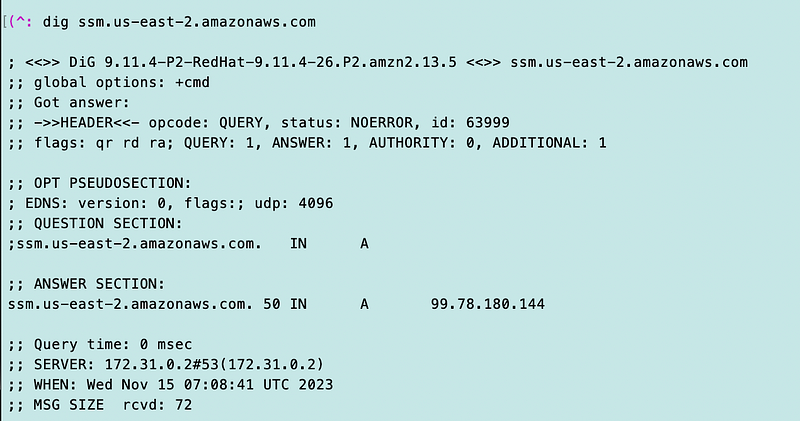

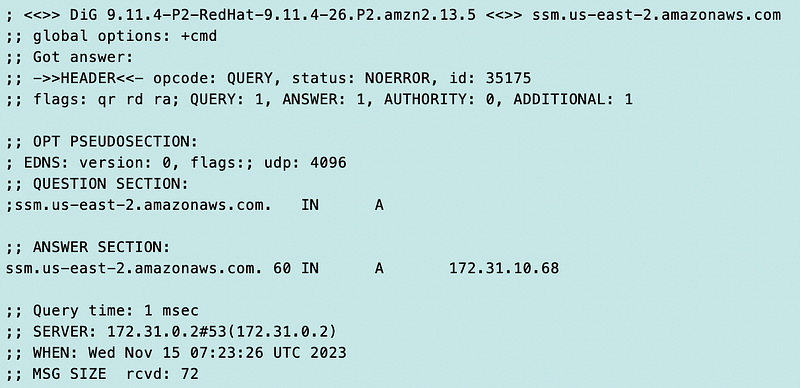

Well, here’s why. Something is wrong. I’m in a private network with a VPC endpoint for SSM and DNS should be returning a private IP address. But it’s not.

Here’s what else I realized. I had spun up a new EC2 instance and I forgot to add the Security Group for the VPC Endpoint. I really need to automate that. This was not the problem in my earlier tests. I add the Security Group.

And this is when it hits me that there is no IAM VPC Endpoint option and my last call to list roles worked without a VPC Endpoint for that service. Was it using a public or private IP? Something to check later. I add the security group to my VM where I’m running the commands.

This is also the point where I realize that it’s not STS; it’s SSM causing the problem this time. I don’t have an SSM Endpoint. Aha.

And once again, I’m thinking there must be a better way to configure a private network for all services without the 2500% cost increase. Anyway I add the endpoint.

Was I looking at this wrong yesterday too? Like a phishing attack — only different. I went back and checked my screen shots and no, yesterday’s problems were all about STS.

I add the endpoint and configure private DNS.

Now I have a private IP.
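A quick way to automate that check is sketched below: resolve a service hostname and test whether the first IPv4 address falls in a private (RFC 1918) range. The function names are hypothetical, and the lookup assumes a glibc-based Linux host where `getent ahostsv4` is available.

```shell
# Return success if the address is in an RFC 1918 private IPv4 range.
is_private_ipv4() {
  case "$1" in
    10.*|192.168.*) return 0 ;;
    172.1[6-9].*|172.2[0-9].*|172.3[01].*) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical check: resolve a hostname and report whether it is private.
check_endpoint_is_private() {
  local host="$1" ip
  ip="$(getent ahostsv4 "$host" | awk '{print $1; exit}')"
  if [ -z "$ip" ]; then
    echo "$host: no IPv4 address returned" >&2
    return 2
  fi
  if is_private_ipv4 "$ip"; then
    echo "$host -> $ip (private)"
  else
    echo "$host -> $ip (PUBLIC)"
    return 1
  fi
}

# Usage from the instance that runs the container:
#   check_endpoint_is_private ssm.us-east-2.amazonaws.com
```

Running a check like this before a deployment would have caught the missing endpoint without waiting for slow commands.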

So the moral is — if you are using VPC Endpoints, you might always want to specify the region in your AWS CLI commands and block public IPs so you don’t make mistakes. If you don’t have a public route (Internet Gateway or similar) then you might also be safe. But if you have a public route, you might make a mistake and send something over the public Internet which defeats the purpose of configuring and paying for a VPC Endpoint.

Finally. It works.

Now I need to go back and test this in a new organization at some point. But I’m going to wait until I have some other resources I want to set up.

So what have I done? To summarize:

- I created a container to deploy — anything.

- The container has all the CloudFormation scripts in the /resources folder generated from the AWS documentation.

- The folder structure matches the Category::Type in an AWS CloudFormation template.

- The deploy folder has the names of users and roles that can execute scripts.

- The scripts each user or role can execute are in their corresponding folder.

- The container takes a few generic parameters to run any script including a unique MFA token to assume the specified role.

- Presuming you have configured your roles to require MFA for assumption in your trust policies, this container requires a different MFA code each time it executes.

- The developer secret key and access key are not in the code, nor are they stored on the machine of the developer who is executing the code.
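The MFA requirement in the summary depends on the role's trust policy. As a minimal sketch, the function below prints a trust policy that only allows a named user to assume the role when MFA is present. The function name, account ID, and user name are placeholders; my real policies are deployed with CloudFormation and may differ.

```shell
# Hypothetical helper: print a trust policy that requires MFA for sts:AssumeRole.
mfa_trust_policy() {
  local account_id="$1" user_name="$2"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "AWS": "arn:aws:iam::${account_id}:user/${user_name}" },
      "Action": "sts:AssumeRole",
      "Condition": { "Bool": { "aws:MultiFactorAuthPresent": "true" } }
    }
  ]
}
EOF
}

# Example with a made-up account ID:
mfa_trust_policy 111122223333 rootadmin
```

With a condition like this, an assume-role call that omits the serial number and token code is denied, which is what forces a fresh MFA code on every container run.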

Nice!

But I still have a lot, lot, lot more work to do to secure all these resources and migrate over all my code. And there are more resources to deploy.

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2023
