Creating a Generic Job Execution Container

ACM.432 Reducing complexity and creating a flexible container that can run any type of job, not just deployment jobs

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

⚙️ Part of my series on Automating Cybersecurity Metrics. The Code.

🔒 Related Stories: AWS Security | Application Security | Abstraction

💻 Free Content on Jobs in Cybersecurity | ✉️ Sign up for the Email List

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

In the last post, I defined a job configuration, stored in an AWS SSM Parameter Store parameter, for a job that runs on AWS in my container, which requires an MFA token to execute jobs. I also listed the available jobs.

In this post, I want to execute the job defined in that parameter. But this turned out to be a whole lot more complex than I initially thought. Although my thought process and changes are more complex, the resulting code is much simpler and can execute any type of job using a job configured in an SSM parameter — not just a deployment job.

Test Script

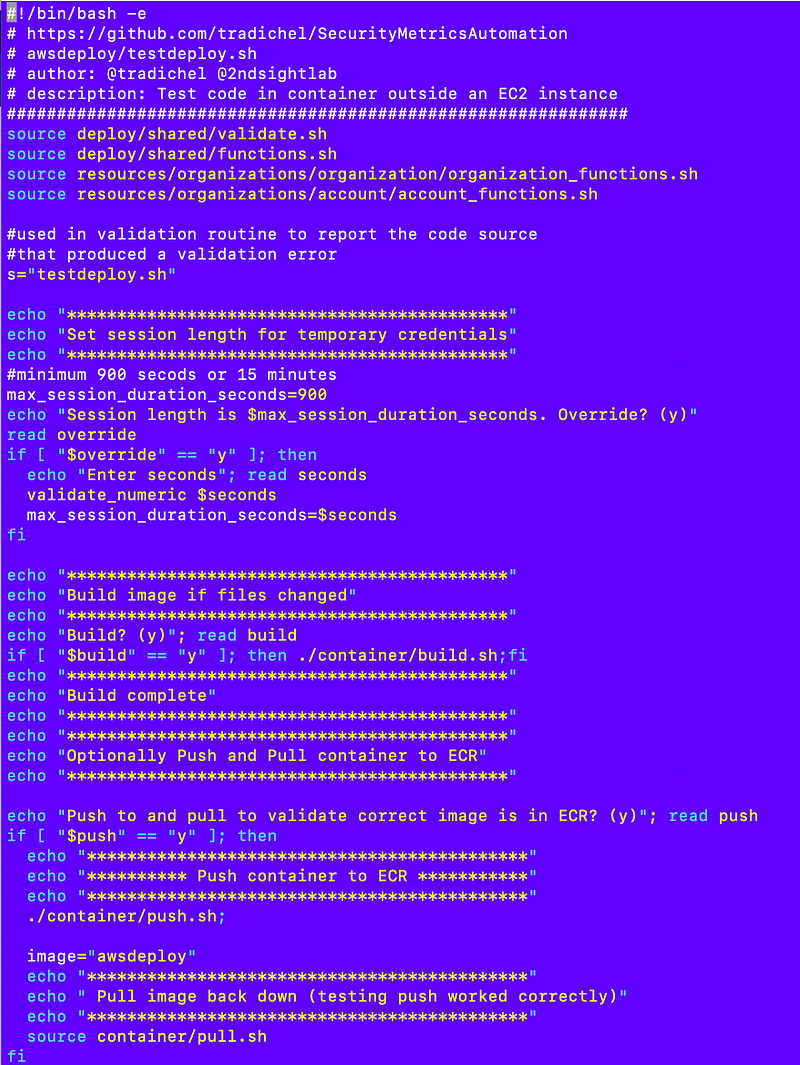

This is not the order in which I modified the code but it is the order which makes most sense to explain the changes. I wanted to make a change that required modifications to my test script. As I made those changes I realized I could make things more generic to support any type of job and minimize changes to critical code used by every job.

First of all, I renamed my test script so I would have a backup of the original. In the end I changed localtest.sh to testexecute.sh.

I started looking through the code and wanted to simplify it while introducing the concept of SSM Parameters for job configuration.

Here’s what I ended up with for now. Maybe I can simplify it even more later → without breaking the trust boundaries between the control plane and the data plane.

Sure I can have one role do everything but that misses the point of separation of duties between accessing and using the credentials and requiring a new MFA token each time a job gets executed.

You can choose not to take that step obviously. Or you can choose to ensure your deployments are authorized by the appropriate user with an MFA token. You can also allow users to write job execution code that cannot obtain the credentials used to run the job.

These architectural details are not going to prevent all data breaches, but can prevent a lot of unwanted actions. If your container is compromised and cannot access the host, then it cannot obtain the credentials used to execute the job. On top of that, the attacker would need to obtain the MFA token. They might be able to use the job session but it is very short-lived the way I’ve written the code — as short as allowed on AWS unless we actually need a longer session to run a job.

I wrote about all that in prior posts. On to the changes.

Changes to simplify and clarify the script:

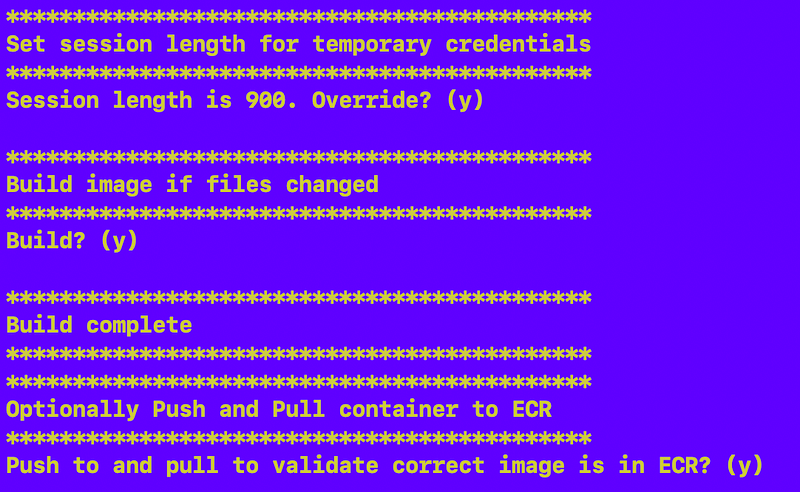

The whole top section remains unchanged — setting the session length to something other than the minimum if required, building the container image and optionally pushing it to ECR and retrieving it again to test that the correct container image is coming from ECR.

Here’s what I rearranged or changed.

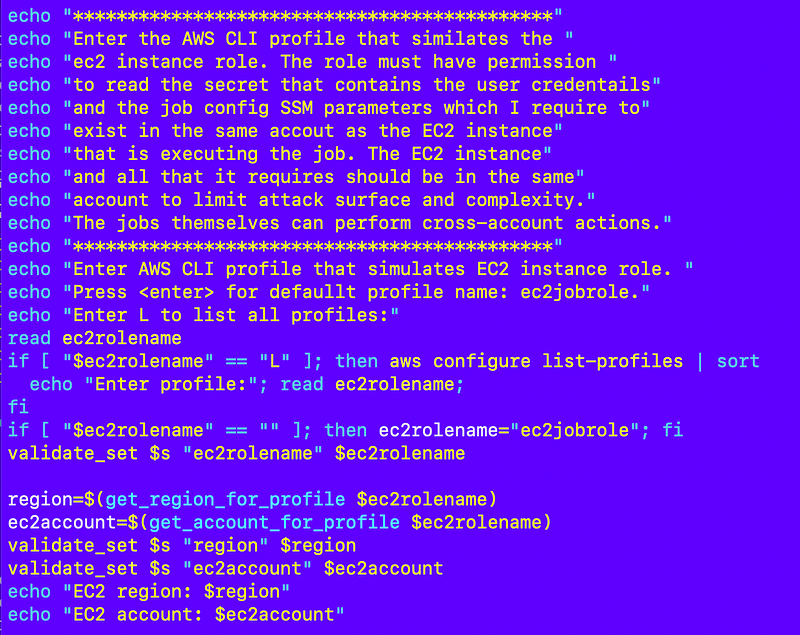

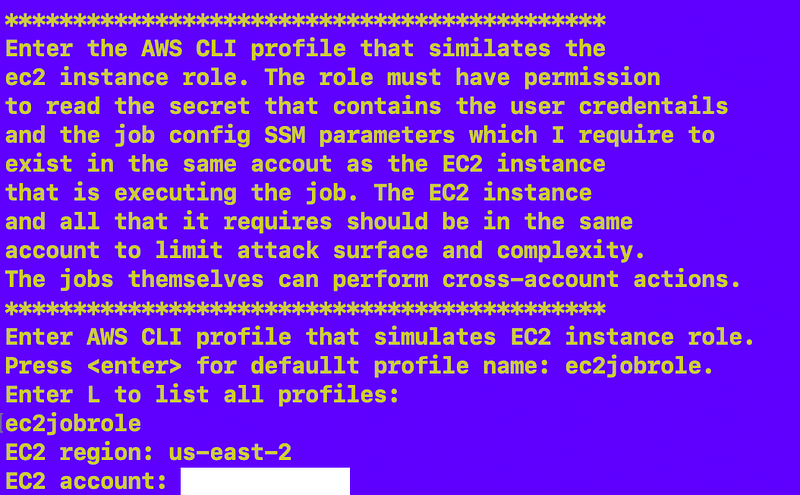

EC2 Role

A role simulates the EC2 instance. It gets two things from the account in which it is running and needs the corresponding permissions:

- A list of jobs and the job configuration in the SSM Parameter.

- The secret that contains the user credentials used to assume the job role.

My default EC2 Role is ec2jobrole. If you create a role with that name you can just hit enter for the first question. If you want to see all the AWS CLI profiles configured on the current host that you can use for this test, type L. Otherwise type in the role name you want to use to simulate the EC2 instance that has the above permissions.
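The prompt described above can be sketched roughly as follows. This is only an illustration, not the code from the test script: the function name choose_ec2_role and the variable DEFAULT_EC2_ROLE are hypothetical, and it assumes AWS CLI v2, which provides `aws configure list-profiles`.

```shell
# Sketch only: choose_ec2_role and DEFAULT_EC2_ROLE are hypothetical names.
DEFAULT_EC2_ROLE="ec2jobrole"

choose_ec2_role() {
  local answer
  while true; do
    # The prompt goes to stderr so that stdout carries only the chosen name.
    printf 'EC2 role profile [%s] (L to list profiles): ' "$DEFAULT_EC2_ROLE" >&2
    read -r answer || true
    case "$answer" in
      "")  echo "$DEFAULT_EC2_ROLE"; return 0 ;;   # Enter accepts the default
      L|l) aws configure list-profiles >&2 ;;      # show profiles, then ask again
      *)   echo "$answer"; return 0 ;;
    esac
  done
}

# Usage (not run here): ec2_profile="$(choose_ec2_role)"
```

Writing the prompt and the profile list to stderr lets the caller capture just the selected name with command substitution.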

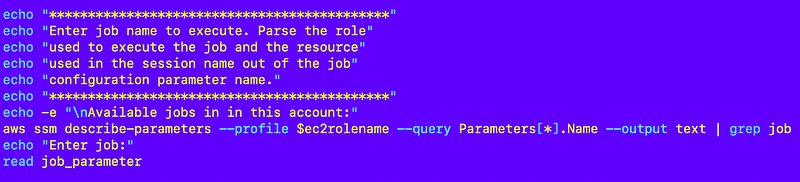

In this script, the EC2 instance role lists the available jobs in the EC2 account that the user can run. This could come from some other user interface later. In this test script I just list them, and the user can copy and paste the job they want to run. That means the EC2 role needs permission to list SSM parameters in the account in which it exists.

Job Name

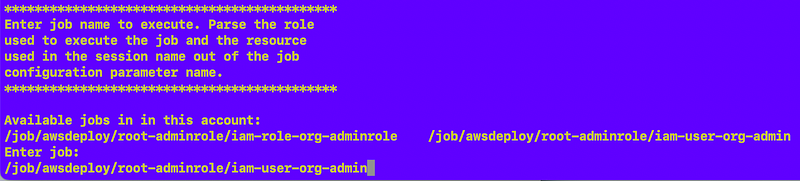

I list the SSM parameters that contain jobs as explained in a prior post.

The user copies and pastes the name of the job they want to execute. Obviously this could be fancier but works for now.
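As a rough sketch of this list-and-paste step, assuming the job parameters share a common name prefix: the prefix /job/ and both function names below are assumptions for illustration, and the real naming convention is the one from the prior post. The `aws ssm describe-parameters` call requires the ssm:DescribeParameters permission on the EC2 role.

```shell
# Hypothetical prefix; substitute the naming convention your jobs actually use.
JOB_PARAM_PREFIX="/job/"

# Print one job parameter name per line using the given AWS CLI profile.
list_job_parameters() {
  local profile="$1"
  aws ssm describe-parameters \
    --profile "$profile" \
    --parameter-filters "Key=Name,Option=BeginsWith,Values=${JOB_PARAM_PREFIX}" \
    --query 'Parameters[].Name' \
    --output text | tr '\t' '\n'
}

# Show the list on stderr, then read the pasted job name and echo it on stdout.
prompt_for_job() {
  local profile="$1" job_name
  list_job_parameters "$profile" >&2
  printf 'Job to run: ' >&2
  read -r job_name || true
  [ -n "$job_name" ] || { echo "No job name entered" >&2; return 1; }
  echo "$job_name"
}

# Usage (not run here): job_name="$(prompt_for_job "$ec2_profile")"
```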

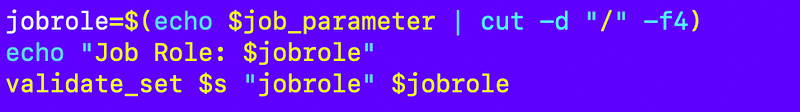

Job Role

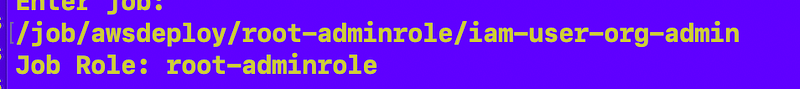

I parse the job role out of the job parameter name.
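The exact parameter naming scheme is defined in the earlier post and is not repeated here, so the following is only a sketch under an assumed layout of /job/<role>/<job-name>. The function name job_role_from_parameter is hypothetical. It uses plain bash parameter expansion, so nothing from the name is ever evaluated.

```shell
# Assumed layout (hypothetical): /job/<role>/<job-name>
job_role_from_parameter() {
  local name="$1" rest role
  case "$name" in
    /job/*/*) ;;  # looks like the assumed layout
    *) echo "Unexpected parameter name: $name" >&2; return 1 ;;
  esac
  rest="${name#/job/}"   # strip the leading prefix
  role="${rest%%/*}"     # keep everything before the next slash
  [ -n "$role" ] || { echo "Empty role in: $name" >&2; return 1; }
  echo "$role"
}

# Usage: job_role="$(job_role_from_parameter "/job/deployrole/deploy-s3")"
```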

The script prints out the job role:

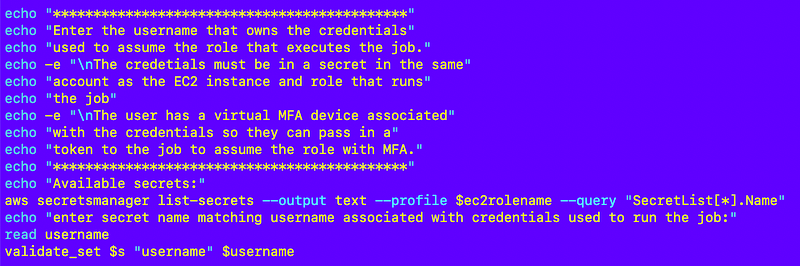

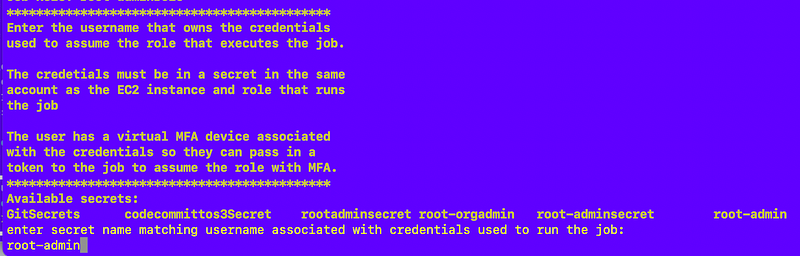

List secrets and select secret for user that has permission to assume the job role

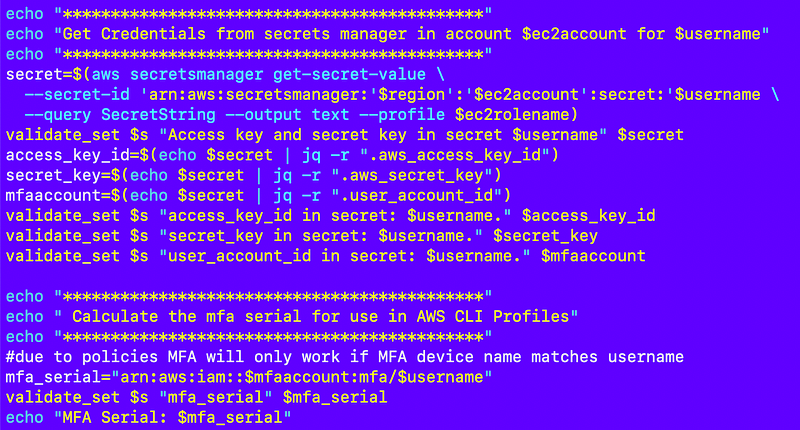

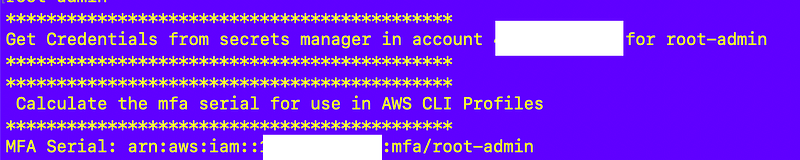

Next, I get the secret with the user credentials, obtain them, and calculate the ARN of the MFA device:

The script prints available secrets.

Get credentials and calculate MFA ARN from secret values

After entering a secret the script parses out the required values.
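Calculating the MFA ARN can be sketched as below, assuming the secret yields an account ID and a user name and that the MFA device is named after the user, as the SCP mentioned in this post requires. The function name mfa_arn_for_user is hypothetical. The ARN layout arn:aws:iam::<account-id>:mfa/<device-name> is the standard one for virtual MFA devices.

```shell
# Build the MFA device ARN from the account ID and user name.
mfa_arn_for_user() {
  local account_id="$1" user_name="$2"
  # AWS account IDs are exactly 12 digits.
  [[ "$account_id" =~ ^[0-9]{12}$ ]] || { echo "Invalid account ID" >&2; return 1; }
  [ -n "$user_name" ] || { echo "Missing user name" >&2; return 1; }
  echo "arn:aws:iam::${account_id}:mfa/${user_name}"
}

# Usage: mfa_arn="$(mfa_arn_for_user "123456789012" "alice")"
```

Because the ARN is derived from the user name rather than stored, a secret cannot pair one user's credentials with a different user's device.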

AWS CLI Profile for User on EC2 Instance

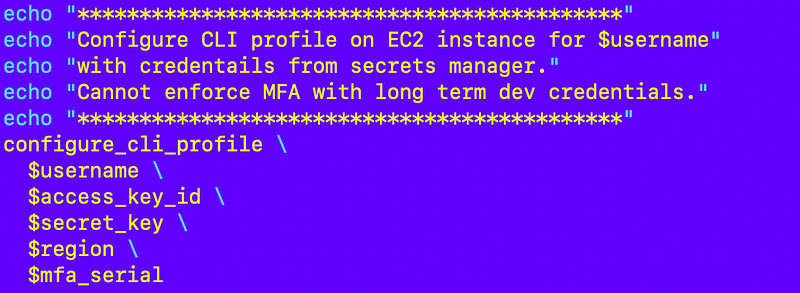

Configure an AWS CLI profile on the EC2 instance using the user credentials. The MFA device is somewhat extraneous here, but leaving it out triggers a bash issue that I still need to fix. I might remove it later.

Recall that we cannot enforce MFA when long term credentials are used with the AWS CLI. But we can enforce MFA at the point those credentials get used to assume a role defined in a separate AWS CLI profile.
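A minimal sketch of configuring such a profile with `aws configure set` and then checking it with `sts get-caller-identity` follows. The function name sketch_configure_profile and its argument order are hypothetical and this is not the configure_cli_profile function from the repository. It also leaves out the MFA device setting discussed above.

```shell
# Sketch only. Note that values passed as command arguments can be visible
# in the process list while the command runs.
sketch_configure_profile() {
  local profile="$1" access_key_id="$2" secret_access_key="$3" region="$4"
  aws configure set aws_access_key_id "$access_key_id" --profile "$profile"
  aws configure set aws_secret_access_key "$secret_access_key" --profile "$profile"
  aws configure set region "$region" --profile "$profile"
  aws configure set output json --profile "$profile"
  # Print the ARN of the identity behind the profile to confirm it works.
  aws sts get-caller-identity --profile "$profile" --query Arn --output text
}

# Usage (not run here):
# sketch_configure_profile "jobuser" "$access_key_id" "$secret_access_key" "us-east-1"
```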

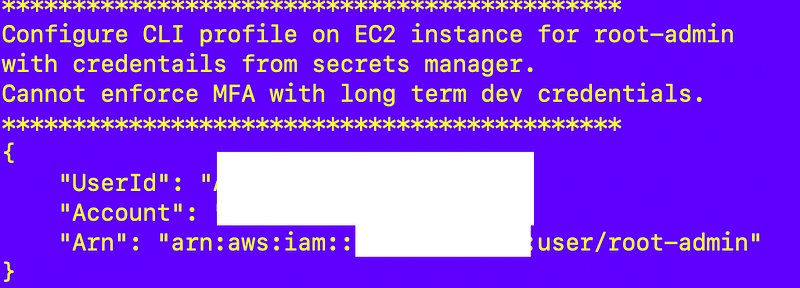

The configure_cli_profile function calls sts get-caller-identity to verify the profile is correctly configured:

Assume the Job Role with MFA and get Temporary Credentials

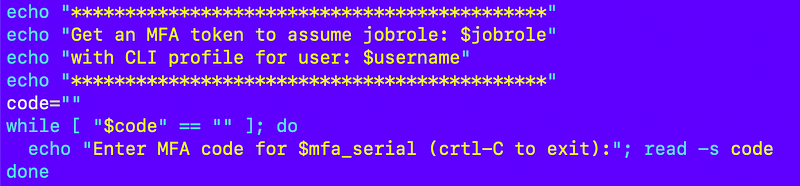

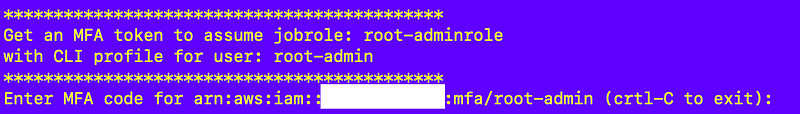

Next, the EC2 instance uses the AWS CLI profile created for the user with long-term credentials to assume, with MFA, the role used to run the job, and gets the temporary credentials assigned to that role.

Request an MFA token:

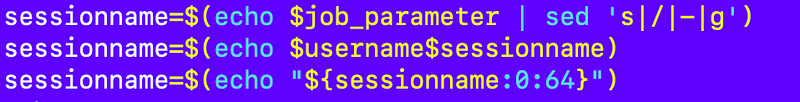

Calculate the session name that appears in CloudTrail:

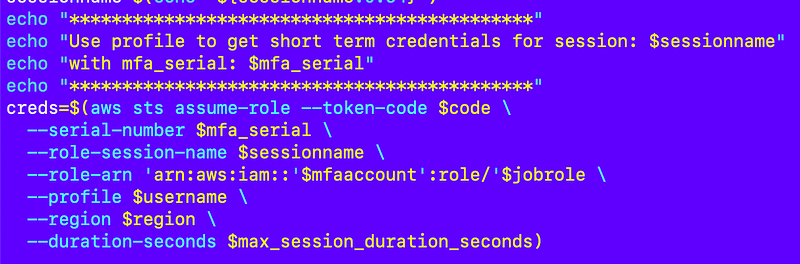

Assume the role:

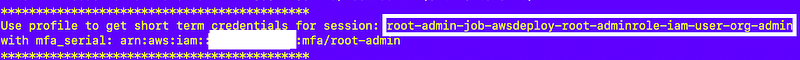

Note the session name in the output that will appear in CloudTrail for any actions taken by this job:

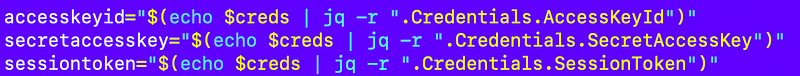

Obtain the temporary credentials from the role assumption:
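The token, session name, and role assumption steps can be sketched together as follows. The function names and the session name convention (user name and job name joined by a hyphen) are assumptions for illustration, not the repository's code. Two AWS facts the sketch relies on: the minimum AssumeRole session duration is 900 seconds, and a role session name may be at most 64 characters drawn from letters, digits, and `_+=,.@-`.

```shell
# Default to the shortest session AWS allows unless a job needs longer.
SESSION_SECONDS="${SESSION_SECONDS:-900}"

# Hypothetical convention: <user>-<job>, with disallowed characters replaced.
session_name_for() {
  local raw="${1}-${2}"
  raw="${raw//[^A-Za-z0-9_+=,.@-]/-}"
  echo "${raw:0:64}"
}

# Print access key ID, secret access key, and session token, tab-separated.
assume_job_role() {
  local profile="$1" role_arn="$2" session_name="$3" mfa_arn="$4" token_code="$5"
  aws sts assume-role \
    --profile "$profile" \
    --role-arn "$role_arn" \
    --role-session-name "$session_name" \
    --serial-number "$mfa_arn" \
    --token-code "$token_code" \
    --duration-seconds "$SESSION_SECONDS" \
    --query 'Credentials.[AccessKeyId,SecretAccessKey,SessionToken]' \
    --output text
}

# Usage (not run here):
# read -r -p "MFA token: " token_code
# read -r access_key secret_key session_token \
#   <<< "$(assume_job_role jobuser "$role_arn" "$(session_name_for alice deploy)" "$mfa_arn" "$token_code")"
```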

Pass the role short term credentials to the container and execute the job

Pass the temporary credentials for the role that is executing the job to the container and execute the job.

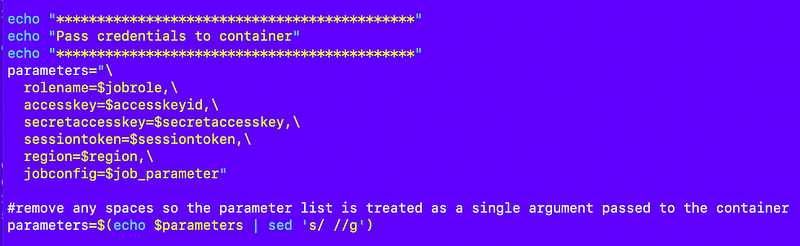

Formulate the parameters:

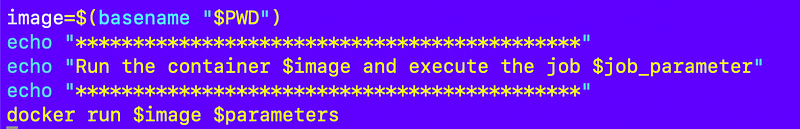

Execute the job:

Note that I no longer need to pass the job the script it will run, because the job always runs the same script now. The default job script parses the parameter to determine what the job executes. It does not accept scripts as input, which can lead to security problems. Instead, we will pass in non-executable parameters that drive the execution path.
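One way to hand the temporary credentials and the job name to a container is sketched below. This is an assumption for illustration and may differ from how the test script formulates its parameters. The function name build_docker_args, the array DOCKER_ARGS, and the variable JOB_PARAMETER are hypothetical. It relies on documented Docker behavior: `-e NAME` with no value copies NAME from the caller's environment, which keeps the secret values out of the command line.

```shell
# Populate the global array DOCKER_ARGS with arguments for "docker".
build_docker_args() {
  local image="$1" job_parameter_name="$2"
  DOCKER_ARGS=(
    run --rm
    -e AWS_ACCESS_KEY_ID       # value is taken from the caller's environment
    -e AWS_SECRET_ACCESS_KEY
    -e AWS_SESSION_TOKEN
    -e "JOB_PARAMETER=${job_parameter_name}"  # a name only, never a script
    "$image"
  )
}

# Usage (not run here):
# export AWS_ACCESS_KEY_ID="$access_key" AWS_SECRET_ACCESS_KEY="$secret_key" AWS_SESSION_TOKEN="$session_token"
# build_docker_args "job-image" "$job_name"
# docker "${DOCKER_ARGS[@]}"
```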

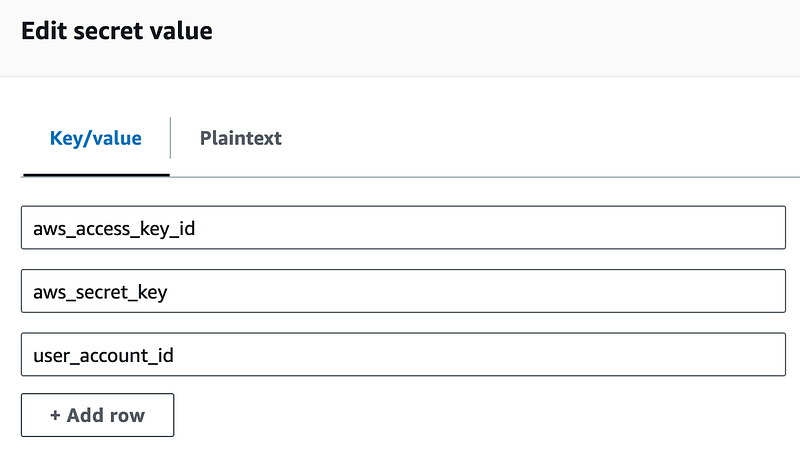

Add user account — *not MFA device ARN* — to secret value

I resolved one issue I punted on until later in a previous iteration. I am now storing the account ID where the user MFA device exists in the secret so I don’t have to give permission to my EC2 execution roles to use AWS Organizations to look up account IDs for an account name or use some other role for that.

The user or role that configures the job in AWS SSM Parameter store already likely has access to either AWS Organizations or knows which account the user is in when they configure this secret as they need to obtain the credentials from that account. That’s why I moved the specification of the account that has the MFA device to this secret.

Notice I am not adding the MFA ARN here. I started to do that but then realized that I could verify that the user and ARN device name match more easily by calculating the ARN name in my test script.

Recall that I have an SCP that requires MFA for non-role actions and will block use of MFA devices where the name of the MFA device does not match the user name. Why? I went into that in detail in a prior post where I demonstrated the available resources in an action when creating conditions to require MFA.

Run script in the container

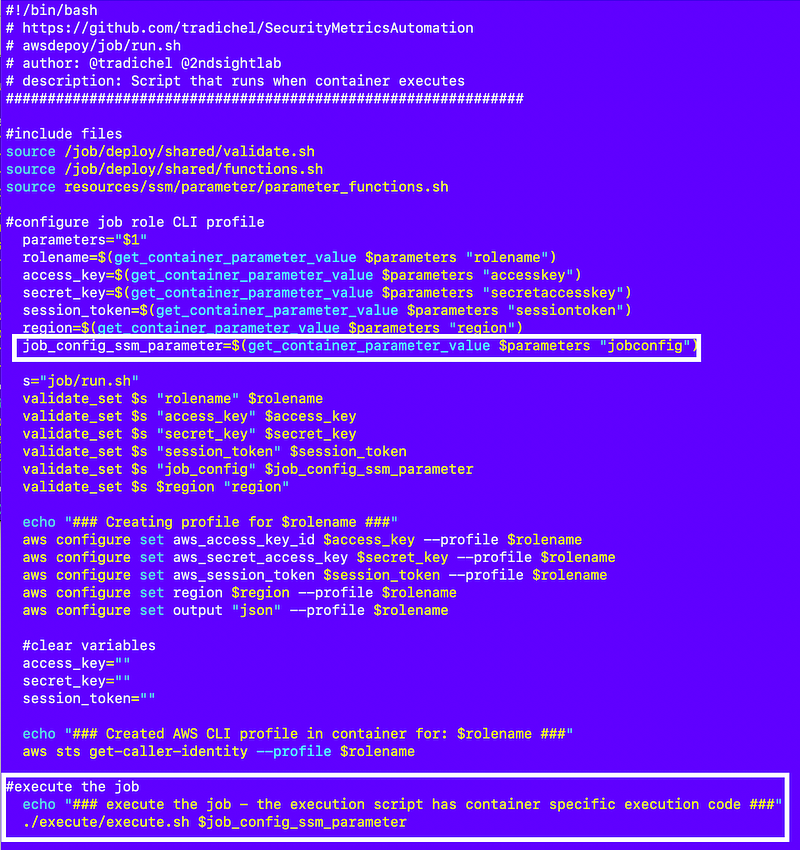

I needed to make some modifications to the run script that executes when the container starts.

Instead of passing in the script to run, now I pass in a job configuration. The job configuration is always processed by a generic script called execute.sh now. That execute.sh script may be different in each different type of container.

Security considerations and improvements:

- The run.sh script that receives the credentials and assumes the role is generic and should not need to be changed from one container to the next.

- The container run script no longer uses the parameters passed into the command line to prevent a whole range of potential security issues stemming from variable parameters.

- The parameters will all come from the job configuration in SSM Parameter store and get parsed within the container and be limited to the specific role used by the container.

- The role(s) on the container should not have access to the credentials in AWS Secrets Manager.

- The role can also be limited to only the job configuration parameters for the jobs it is allowed to run.

- The job configuration parameter values should never contain executable code.
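To illustrate the last point, here is a minimal sketch of reading a value from a job configuration without ever evaluating it. The key=value line format, the allow-list of characters, and the function names are all assumptions made for this example. The actual configuration format is whatever the job parameter defines.

```shell
# Accept only a conservative set of characters: no spaces, quotes, $, or parentheses.
is_safe_value() {
  local re='^[A-Za-z0-9_./:@=,-]*$'
  [[ "$1" =~ $re ]]
}

# Print the value for a key from "key=value" lines. Never uses eval or source.
config_value() {
  local config="$1" wanted="$2" key value
  while IFS='=' read -r key value; do
    if [ "$key" = "$wanted" ]; then
      is_safe_value "$value" || { echo "Rejected value for $wanted" >&2; return 1; }
      printf '%s\n' "$value"
      return 0
    fi
  done <<< "$config"
  echo "Key not found: $wanted" >&2
  return 1
}

# Usage: template="$(config_value "$job_config" "template")"
```

The value is only ever compared and printed as data, so a configuration containing something like a command substitution is rejected rather than executed.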

Here are the code revisions in job/run.sh.

I removed extraneous parameters.

I added the job configuration parameter.

I’m not passing in a script but rather calling a generic execution script which will parse the job configuration and execute the job-specific actions.

Note that I’ve renamed “deploy” to “execute” so this common execute.sh script works for any type of job, not just deployment jobs. I think I can get rid of most of what’s in the deploy directory besides my shared functions but I’m keeping that code around until I can completely replace all the functionality in those scripts with job configurations.

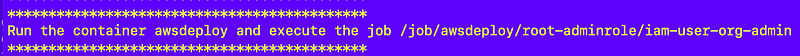

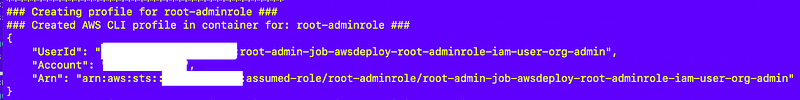

You can see the output of the above script when I execute my test script. It assumes the role used to execute the job and calls sts get-caller-identity to show that the role assumption succeeded.

Separation of concerns and duties

This is an important point. I’ve often designed systems and segregated out code people should not be touching from the code they are actually supposed to be modifying. You can even put the code people should not touch that is error prone, complex, or related to security in a separate repository from the code people should be changing.

The trick is that the system needs to remain flexible enough to allow developers to do what they need to do — and that’s where a lot of systems break down.

That’s why I’m trying to make sure this system is safe and prevents errors while still allowing the developers who use it, including me, to do pretty much anything they need to do!

Standard job execution code

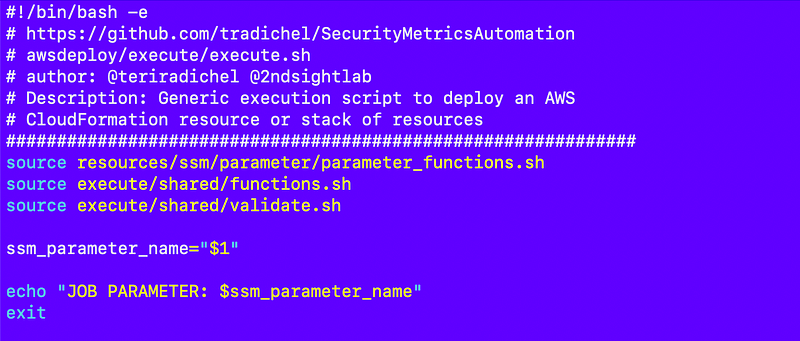

Next I need to create my execute.sh file. Initially I simply want to make sure this file gets called correctly as it can work for any type of job or container.

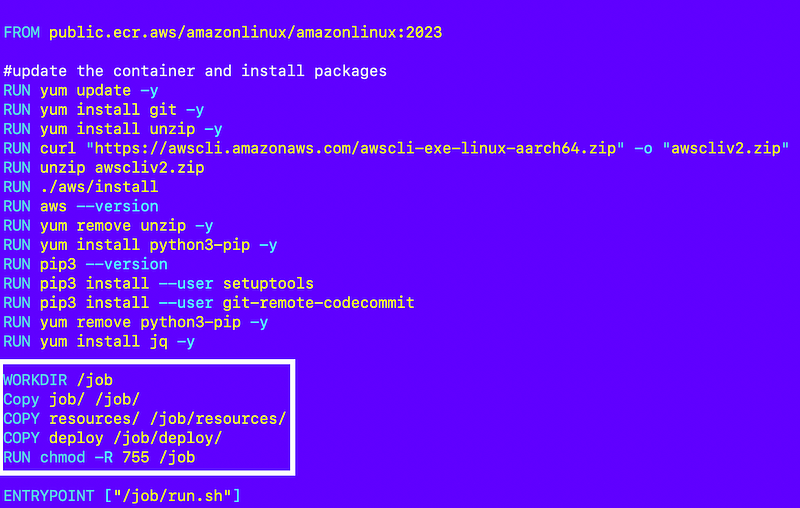

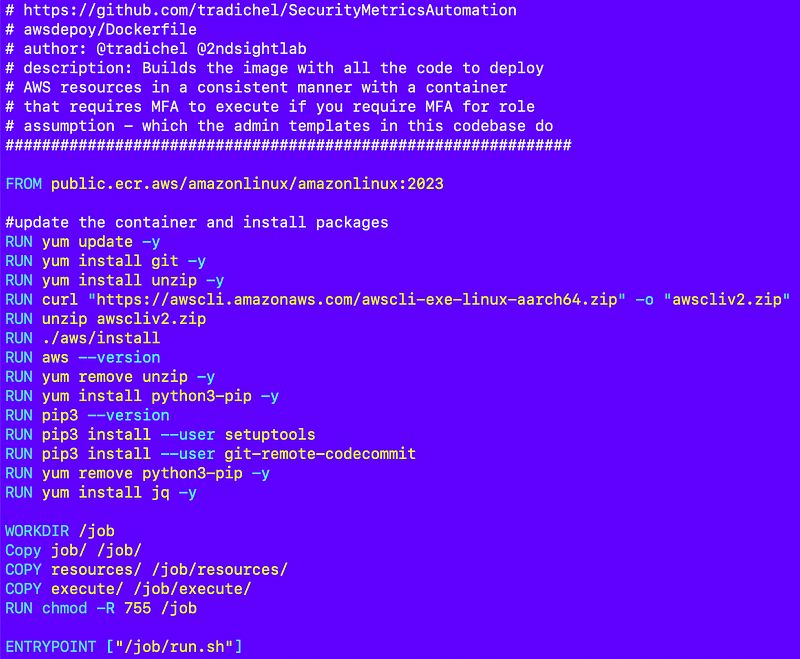

I need to modify my container to include the execute directory instead of the deploy directory.

Like this:

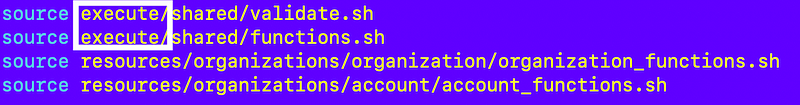

Along with renaming my deploy directory to execute, I will need to change all the files that reference the shared files to reference the renamed directory.

I wrote about global replacement of values in directories in a prior post.

I can use this command to fix all the file references:

find . -type f -exec sed -i 's|deploy/shared|execute/shared|g' {} +

Separate Job Configuration from Job Execution

Also, the job configuration really shouldn’t be in the container the more I think about it.

I move the awsdeploy directory to:

ssm_job_config/awsdeploy/...

I’ll have to adjust the script that deploys parameters to work from that location.

Initial execution test

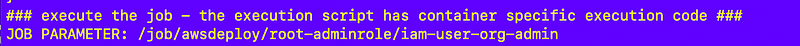

Initially I simply print out the name of the execution file and exit to test that when I run my local test all my variables are set correctly and the role assumption works.

When I execute my test script I can verify that the role assumptions work based on the above output and that my code in this file gets executed correctly after I rebuild the container image to pick up the changes.

Phew! I initially thought I was just going to modify that file 👆. Instead I just got to the point of executing it.

As it turns out, I cleaned up a lot of code and simplified it. I fixed some security issues (still more to do) and made it easier to execute jobs and create new containers that execute jobs.

On to the generic deployment function in the execute.sh file.

I think I can eliminate a lot more code to simplify new deployments.

Update: I did! Keep reading the next few posts. It’s pretty cool.

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2024

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab

Need Help With Cybersecurity, Cloud, or Application Security?

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

🔒 Request a penetration test or security assessment

🔒 Schedule a consulting call

🔒 Cybersecurity Speaker for Presentation

Follow for more stories like this:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

❤️ Sign Up for my Medium Email List

❤️ Twitter: @teriradichel

❤️ LinkedIn: https://www.linkedin.com/in/teriradichel

❤️ Mastodon: @teriradichel@infosec.exchange

❤️ Facebook: 2nd Sight Lab

❤️ YouTube: @2ndsightlab