Create a Batch Job Trigger Role

ACM.21 Creating a role to use for triggering batch jobs


In the last post, we created an IAM role for a batch job that will be allowed to encrypt a secret using a KMS key. I explained the catch-22 with IAM and KMS key policies in that post as well.

In this post we’re going to create a role that can decrypt using the KMS key, but we’ll add the IAM policy later. Once we have the roles created, we can create our KMS key and the KMS key policy. Once we’ve created the KMS and Secrets Manager resources, we can add IAM policies that restrict the roles to only the actions they need to take and the resources (which currently don’t exist) they can access.

Batch Job Trigger Role Policy

As described previously, in order to require MFA, we ultimately need an AWS identity that has MFA associated with it to provide an MFA code. When we have some actions that require MFA, like our sts:AssumeRole action in this architecture, and other actions that don’t, we could write policies with some tricky logic to try to handle all use cases.

However, as I explained in this post on IAM conditions, creating tricky logic with if-then statements, double negatives, and blacklists can lead to odd edge cases that need to be tested to make sure they work as expected.

I’d rather simply use a white list and allow only what is supposed to be allowed.

If you’re not familiar with the concept of black lists and white lists in security policies, I wrote about that in my book at the bottom of this post.

We will definitely be creating a stand-alone policy for this role.

Relationship of our credential decryption role to batch jobs

As explained in the last post, we can easily create a new role for our batch job using our generic role creation template. Do we want to use that template to create the role that will decrypt the credentials used to assume our batch job roles?

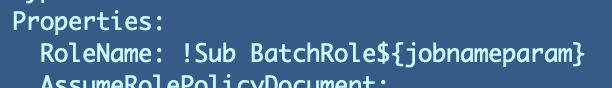

If you recall, that role’s CloudFormation template requires a job name.

If we use that CloudFormation template we would need to create a separate role to retrieve the credentials required to run a batch job for every single job.

Do we want to do that? Do we need that?

If you have the following requirements, then yes:

- A different set of AWS access keys for each batch job, stored in a separate Secrets Manager secret.

- A separate KMS key to encrypt each set of credentials.

- An IAM policy for each role that allows it to access only the Secrets Manager secret, encrypted with a specific KMS key, that holds the single set of credentials for that specific batch job.
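If you did have those requirements, the per-job policy might look something like the following. This is only a sketch: the parameter names, the role naming convention, and the policy name are hypothetical, and the secret and KMS key ARNs are passed in because those resources don’t exist yet at this point in the series.

```yaml
# Sketch only. All parameter and resource names are hypothetical.
Parameters:
  JobNameParam:
    Type: String
  SecretArnParam:
    Type: String
  KmsKeyArnParam:
    Type: String

Resources:
  JobCredentialDecryptPolicy:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: !Sub "${JobNameParam}CredentialDecryptPolicy"
      Roles:
        # Assumes a role with this name was already deployed for the job.
        - !Sub "${JobNameParam}CredentialDecryptRole"
      PolicyDocument:
        Version: "2012-10-17"
        Statement:
          # Read exactly one secret: the credentials for this job.
          - Sid: ReadOneSecret
            Effect: Allow
            Action: secretsmanager:GetSecretValue
            Resource: !Ref SecretArnParam
          # Decrypt with exactly one KMS key.
          - Sid: DecryptWithOneKey
            Effect: Allow
            Action: kms:Decrypt
            Resource: !Ref KmsKeyArnParam
```

Every batch job would need its own copy of this stack, which is the overhead the questions above are weighing.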

However, the access key is associated with a user so we can require MFA. We likely don’t have a separate user administering each batch job. This relationship doesn’t make sense for my current use case. It may for yours.

I explained in prior posts why we are using a separate role to decrypt the credentials from the one that runs the job itself. There’s another reason we can’t use the common role template without modifying it further. We would need different role names for the role used to execute the job and the role used to decrypt the secrets.

I could modify the template for that scenario with a new parameter or an if/then statement, but I can already envision bugs and problems with that approach that I won’t get into here. At that point, I’d still create two separate templates to avoid bugs and prevent errors. These are two discrete use cases.

For my planned architecture and use case, I don’t think I need separate credentials and KMS keys for the portion of the architecture that triggers the batch jobs. So I’ll keep the code for triggering the job in a separate folder, and I’ll put my CloudFormation template to trigger a job in this folder.

trigger_batch_job

Relationship of our credential decryption role to user credentials

Another scenario, and one I could have in the future, is that you have multiple batch job administrators who all need to be allowed to execute a single batch job. An MFA device should always be associated with a single user. It identifies that user.

Note that you could also design a process where you pass the MFA device to whoever is on call, in which case you need a rock-solid method of determining who had that device for any given period of time. I’d keep it simple and give each administrator their own MFA device and ensure they understand the implications of sharing it with anyone. Their name will be in the logs for any action taken with that device.

So let’s say we need to give multiple sets of credentials permission to execute batch jobs, and each user has their own MFA device. In this scenario you have multiple sets of credentials associated with a single batch job or group of batch jobs. Do you need a separate role to decrypt each set of credentials?

That depends on your architecture and your objectives.

- Are you going to create separate keys and secrets for each set of credentials?

- Are you going to create separate processes with separate permissions to access each set of credentials?

If you are using the same keys and secrets to protect all the credentials, creating separate roles and processes doesn’t make sense because they can all access the secrets anyway.

If you are going to create one process and give it access to all the keys and credentials anyway, you’re just creating more work for yourself and more expense to pay for separate resources.

If you truly want to limit the blast radius with separate credentials, you would need to restrict the resources and processes that use and protect those credentials as well. You’d probably want a separate process for each set of credentials, with a separate role that has a policy that restricts it to a specific secret and KMS encryption key.

Here’s how I’m planning to implement my architecture at the moment and why I don’t think I need that level of complexity. I think it’s ok to allow a single role to access multiple secrets containing each set of credentials encrypted with the same key:

- The only thing these credentials can do is assume a role. That role assumption requires MFA. Our role used to decrypt the credentials does not have MFA associated with it and cannot do anything with these credentials.

- The users who own the credentials and can provide an MFA code to assume a role will not ever see the credentials when they are created (as long as you adhere to the architecture I will provide in future blog posts).

- Anyone who should be able to retrieve credentials associated with this decryption process should not have access to the MFA code. They will not have the virtual MFA device. I am writing a separate process to handle retrieval of the MFA code.

- If you are using code similar to this in your organization you could delegate separate teams to manage these two parts of the system.

- You can also lock down this code in production so no one can tamper with it and access the credentials or code in that environment because the whole process will be automated.
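The first point in the list above depends on the trust policy of the role being assumed. As a rough illustration, a trust policy that only lets a specific user assume a role when MFA is present could look like the sketch below. The user name parameter and the role name are hypothetical and are not taken from the templates in this series; the `aws:MultiFactorAuthPresent` condition key is the standard IAM key for this check.

```yaml
# Sketch only. The parameter and role names are hypothetical.
Parameters:
  BatchJobAdminUserParam:
    Type: String

Resources:
  ExampleBatchJobRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: ExampleBatchJobRole
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              AWS: !Sub "arn:aws:iam::${AWS::AccountId}:user/${BatchJobAdminUserParam}"
            Action: sts:AssumeRole
            # Without an MFA code in the request, the access keys
            # alone cannot assume this role.
            Condition:
              Bool:
                "aws:MultiFactorAuthPresent": "true"
```

With a condition like this in place, a process that can read the stored access keys but has no access to the MFA device still cannot assume the role.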

Of course, I will test this out and keep thinking about ways I could break it, like I do on penetration tests and assessments. The design can change if I think of any gaps later. This all depends on the entirety of my architecture, which I will explain as we implement it. As I warned in the last post, changing any one part of the architecture incorrectly could break the integrity of the design and lead to a security incident or data breach.

Everything has to be architected to work together, and that is why security architecture is not a checklist.

Architecting policies to prevent data breaches

As you can see, when you design security policies, you need to understand the relationship between the different system components. I’m attempting to architect the system to minimize complexity, but still maintain zero-trust policies.

In some cases, when people get frustrated with creating separate roles and policies for every instance of a common system component, they complain. They want to just create a single policy that allows every role, user, or application to access everything without this complication or restriction.

And that, my friends, may have been one of the underlying causes of one of the most impactful cloud data breaches to date. Executives also need to understand the reasoning behind policies they choose to cast aside, and that is one of the reasons I wrote my book at the end of this post.

Consider where it is appropriate to abstract away complexity with a single role and policy, and where it is appropriate to maintain separation to protect data appropriately.

CloudFormation Template for CredentialDecryptionRole

I’m going to create my standard directory and deployment script for the batch job trigger process in the directory I mentioned above:

trigger_batch_job_role

/cfn

deploy.sh

I can copy my existing, working template and modify it. Navigate to the cfn directory and type:

cp ../../batch_job_role/cfn/role_batch_job.yaml .

I’m not sure yet whether I will test this role with Lambda or an EC2 instance, but for now I’ll edit the AssumeRolePolicyDocument to remove the AWS user and add the ec2 service:

Principal:
  Service: "ec2.amazonaws.com"

If I use EC2 I’ll need an instance profile for the role, but I’ll decide that later.

I’m going to remove the MFA requirement for the moment since this is not an assume role action and will likely be triggered by an automated event. More thought on that as I consider use cases later.

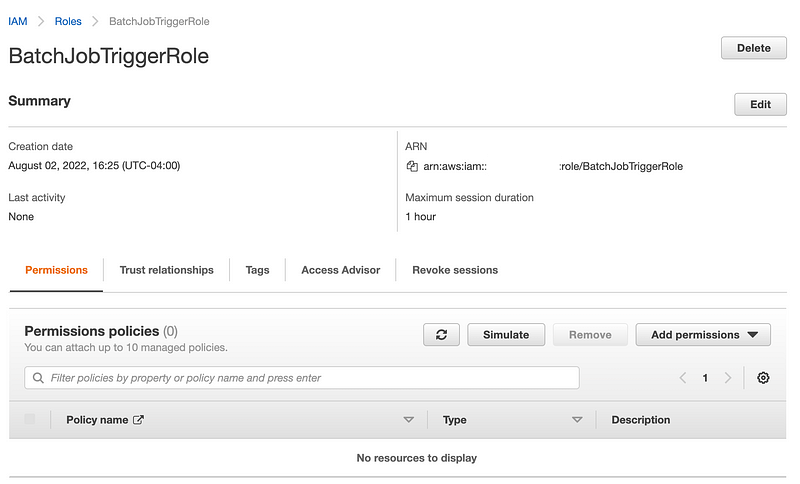

As discussed, this role is not associated with a single batch job, so we need to change the name. For now we’ll name it BatchJobTriggerRole.
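Putting the changes described so far together, the role resource might end up looking roughly like this. It is a sketch reconstructed from the description in this post, not a copy of the actual template: the logical resource ID and the output are assumptions, and no permissions policy is attached because that is added separately later.

```yaml
# Sketch only. The logical ID and the output name are assumptions.
Resources:
  BatchJobTriggerRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: BatchJobTriggerRole
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          # The EC2 service, rather than an AWS user, may assume this role.
          # No MFA condition here for now, as explained above.
          - Effect: Allow
            Principal:
              Service: "ec2.amazonaws.com"
            Action: sts:AssumeRole

Outputs:
  BatchJobTriggerRoleArn:
    # Exposes the ARN so a later KMS key policy or IAM policy can refer to it.
    Value: !GetAtt BatchJobTriggerRole.Arn
```

If the role ends up being tested with Lambda instead, the service principal would change to `lambda.amazonaws.com`.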

I will still create a separate policy document so I can alter and deploy that separately from my role as explained in the previous post and limit actions to specific resources after those resources have been created.

I renamed the template file:

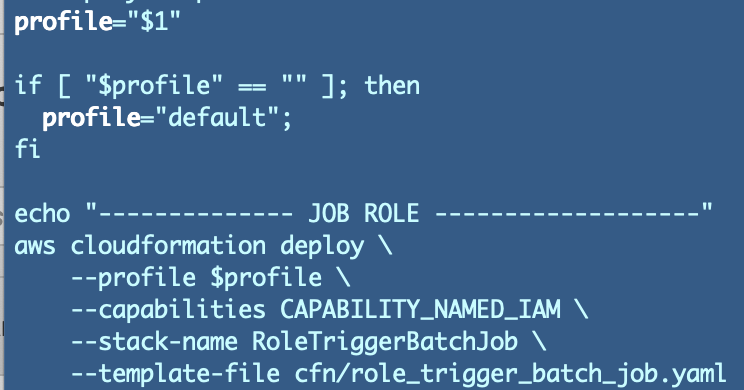

role_trigger_batch_job.yaml

I copied my existing role deploy script and modified it to help me avoid too many typos. Remove the job name parameter and change profile to reference the first argument passed into the script.

Now deploy this role and verify that it was, in fact, successfully deployed.

Now that we have a role that will be allowed to encrypt credentials (last post) and decrypt credentials, we can create our KMS key with a policy that allows these roles to encrypt and decrypt with that key. We can also add policies to these IAM roles to allow them to perform actions with the new KMS key after we create it.

The code for this post exists in the following directory and may contain modifications made in subsequent posts.


Teri Radichel | © 2nd Sight Lab 2022

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab