Defining Requirements for KMS Encryption Key Policies

ACM.19 Determining who can encrypt and decrypt the credentials used by our batch job


In the last post we started working on a generic CloudFormation template we can use to deploy any KMS key. If you’re following along, you will see throughout these blog posts that the template changes as we learn about new requirements and have to alter it to support them. Now we are going to define requirements to determine how we want to use our KMS key.

If you’ve been following along, you understand some of the decisions up to this point, including leveraging user credentials instead of an IAM role so I can require MFA to kick off a batch job. I want to create an AWS KMS key to encrypt the AWS secret key and access key I’m using to assume the role used by a batch job. That role assumption will require MFA, and then subsequent actions by the batch job will not, for reasons previously explained.

I need to determine how I am going to create, store, and securely access these credentials. I don’t want them just hanging around in source control or a text file somewhere. I am thinking through how I can leverage separation of concerns between system components and development teams so that altering the system functionality requires more than one person’s access. There are a few complications I need to think through regarding how I will store and retrieve the credentials. Some of them I will address in this post and some I will address in later posts.

Secrets Manager versus AWS Systems Manager Parameter Store

I have a couple of options for storing encrypted secrets in AWS. I’m sticking with a cloud native option in this case because I don’t want to set up and run a third-party solution. I don’t want to have to worry about the credentials and infrastructure for all of that on top of everything else I already have to secure. With a third-party system I will have to run and secure some type of compute resource and consider how I am protecting access to retrieve secrets from that system. If I use AWS native secrets storage I have integrated, built-in protections that work with KMS, Amazon’s encryption key management service.

Should you use KMS? I get this question all the time. Although you are letting Amazon store your encryption keys, are you actually doing a better job yourself than Amazon is doing at segregation of duties, secure secrets storage, and all the other aspects of maintaining encryption keys securely? Maybe you are. Perhaps you are using something like Venafi, which seems like a pretty good solution. I haven’t personally tested it and I don’t recommend products without doing so, so I’m just mentioning it as an option. If you have your encryption keys in a spreadsheet, you are not doing a better job. Let Amazon store your keys.

If you want to know why I don’t recommend a product I have not personally had the chance to use and test I wrote about that here:

If you want to be really sure that you can trust Amazon, you should read the architectural white papers that explain how KMS works and, with the help of a lawyer, ensure your contract includes the appropriate protections in case of a compromise. For my purposes, KMS does a better job of handling my encryption keys than anything I could write myself, so I’ll use it. I also know some large banks use it. KMS itself has not, as of yet, been the source of a data breach (that I know of), though improper use of KMS, in the form of poorly architected use of encryption keys, has been. (These are things I discuss on calls with IANS Research customers.)

Two options for storing secrets in AWS that can be encrypted with KMS keys include AWS Systems Manager Parameter Store and AWS Secrets Manager.

I want to store the credentials in Secrets Manager initially for a couple of reasons. First of all, I’m interested in seeing if Secrets Manager credential rotation will work for our use case.

AWS Systems Manager Parameter Store does not support rotation, though you could obviously implement it yourself.

From the Systems Manager Parameter Store Documentation:

To implement password rotation lifecycles, use AWS Secrets Manager. You can rotate, manage, and retrieve database credentials, API keys, and other secrets throughout their lifecycle using Secrets Manager.

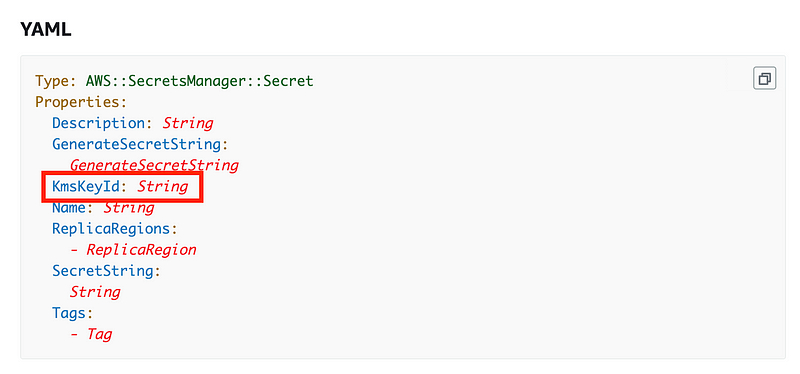

Secondly, we have some better options when creating secrets with CloudFormation when using Secrets Manager. You can assign a KMS key directly to a secret in CloudFormation with Secrets Manager.

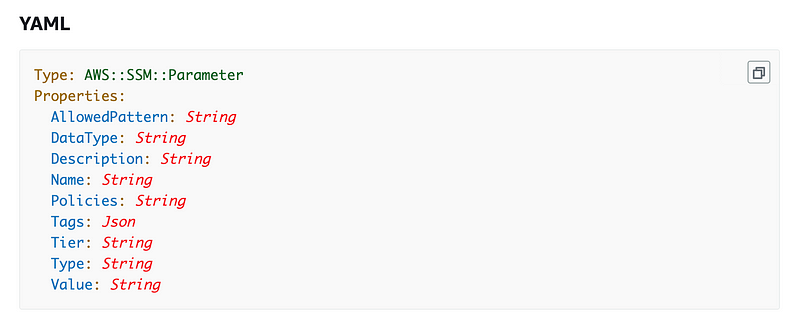
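To make the idea of assigning a KMS key to a secret concrete, here is a minimal sketch in Python that builds a CloudFormation template for a single secret. The `KmsKeyId` and `SecretString` properties belong to the `AWS::SecretsManager::Secret` resource type; the function name, the logical ID `BatchJobCredentials`, and the `SecretValue` parameter are hypothetical names chosen for illustration, not the template used in this series.

```python
import json


def secret_template(secret_name, kms_key_arn):
    """Return a CloudFormation template (as a dict) for one KMS-encrypted secret.

    All names here are illustrative assumptions.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {
            # NoEcho masks the value in the console and in describe calls.
            # It does not protect the value in shell history or other logs.
            "SecretValue": {"Type": "String", "NoEcho": True},
        },
        "Resources": {
            "BatchJobCredentials": {
                "Type": "AWS::SecretsManager::Secret",
                "Properties": {
                    "Name": secret_name,
                    # The customer managed key that encrypts this secret
                    "KmsKeyId": kms_key_arn,
                    "SecretString": {"Ref": "SecretValue"},
                },
            }
        },
    }


if __name__ == "__main__":
    template = secret_template(
        "batch-job-credentials",
        "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID",
    )
    print(json.dumps(template, indent=2))
```

The key ARN above is a placeholder. Passing the secret value as a template parameter is only one option and still needs the log review described next.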

I don’t see that option with SSM Parameters:

https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-resource-ssm-parameter.html

When you add secrets to a secret store, you’ll want to review every log where the secret values might end up and check whether or not they are encrypted there. Take a look at the parameters in the AWS console after you run your CloudFormation script when passing in secret values. Do you see your secret value in plain text in the Outputs, Parameters, Resources, or any other place in the console? Do you find your secret in plain text in any CloudFormation logs, application logs, source control, containers, environment variables, web request logs, or in your command line history?

Type this command to see your CLI history:

history

Can you think of any other places where your secret may end up after running your CloudFormation template, CLI commands, or SDK API Calls that someone could query later to view your secrets?
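One way to check some of those places is to scan text such as shell history or log output for strings that look like AWS access key IDs. The sketch below is a rough illustration, not a complete secret scanner. The regular expression is a commonly used approximation (a 20-character ID starting with `AKIA` or `ASIA`), and the function name is hypothetical. It will not find secret access keys, which have no fixed prefix.

```python
import re

# Approximate pattern for AWS access key IDs (an assumption, not an AWS spec):
# AKIA = long-term user keys, ASIA = temporary session keys.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_access_key_ids(text):
    """Return (line_number, masked_key_id) for each likely key ID in text."""
    hits = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for match in ACCESS_KEY_ID.finditer(line):
            key_id = match.group(0)
            # Mask the middle so the report itself does not leak the key ID
            hits.append((line_number, key_id[:4] + "..." + key_id[-4:]))
    return hits


if __name__ == "__main__":
    sample = "ls -la\nexport AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE\n"
    for line_number, masked in find_access_key_ids(sample):
        print(f"line {line_number}: {masked}")
```

You could feed this the contents of a shell history file or an exported log, assuming you have permission to read them.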

Complexity

Parameter Store is part of a larger service, AWS Systems Manager. If we use Systems Manager Parameter Store, we’ll need to ensure that none of our IAM policies that provide access to any part of AWS Systems Manager inadvertently give someone access to our credentials. If you are using Systems Manager in a complex development or production environment running many different operations, what are the chances someone could inadvertently over-provision permissions to SSM Parameter Store? AWS Secrets Manager, on the other hand, has a singular purpose.

There is one aspect of Parameter Store that makes it useful for certain configuration values. AWS Parameter Store is cheaper. In my case, I store multiple values in a single parameter for a system and parse them out to save money (fewer parameters). Here is the pricing of each service for comparison:

Additionally, Secrets Manager can be used across different accounts:

Parameter Store parameters cannot be, unless you provide access to the entire service. If we use parameters in Parameter Store and do not provide any cross-account access to the service itself, we can be relatively sure no one outside of the account can access the parameters. This is both a pro and a con depending on how you want to use Parameter Store. If you want to share parameters across accounts it’s not so great. You can’t define a granular policy on a single parameter.
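As an illustration of what a granular, per-secret policy could look like, here is a minimal Python sketch that builds a Secrets Manager resource policy granting one role in another account read access to a single secret. The function name and the role ARN are hypothetical. In a resource policy attached to a secret, `"Resource": "*"` refers to that secret only.

```python
import json


def cross_account_secret_policy(reader_role_arn):
    """Resource policy letting one external role read the secret it is attached to."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Hypothetical role in another AWS account
                "Principal": {"AWS": reader_role_arn},
                "Action": "secretsmanager:GetSecretValue",
                # Scoped to the secret this policy is attached to
                "Resource": "*",
            }
        ],
    }


if __name__ == "__main__":
    policy = cross_account_secret_policy(
        "arn:aws:iam::444455556666:role/ExampleReaderRole"
    )
    print(json.dumps(policy, indent=2))
```

The resource policy alone is not enough for cross-account access. The secret has to be encrypted with a customer managed KMS key whose key policy also allows that role to decrypt, and the role needs a matching IAM policy in its own account.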

Who will get encrypt and decrypt permissions in our key policy?

OK, so now we know that we will need to pass in an encryption key to our CloudFormation template to create our Secrets Manager secret to hold our credentials. We will need to create the key first. But along with that key we want to create a key policy that says who can use and administer the key. That means we need to think about who those users should be. It looks like we will have to think through our policies and potentially create some new roles before we can create the key, so we can assign those roles permissions in our key policy.

We’re creating a Secrets Manager secret that will enable our batch job to pull credentials out of it, decrypt them, and assume a role used for the batch job. The batch administrator that assumes the role used by the batch job owns the credentials, so they should be able to decrypt them, right? But the catch-22 is that without the credentials, the user has no permissions. Without any permissions, the owner of the credentials can’t make the call to obtain the encrypted credentials or decrypt them. So something besides the user who owns the credentials needs to decrypt them.

In the end, I’m hoping the batch jobs will get kicked off with some automated process, such as when a file is added to an S3 bucket. We can associate an AWS Lambda function with that event. The Lambda function can use a role that is allowed to decrypt the credentials. So we’ll need to create a role for a Lambda function that can retrieve the credentials from Secrets Manager. Once we have the credentials we can use those to assume a role.

What about the MFA token? We’ll need to get that from the user that owns those credentials. We’ll think about that more later. For now we need to store and retrieve the credentials. We can create a role that is allowed to retrieve the decrypted credentials from Secrets Manager and then we will trigger the rest of the process.
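The retrieval and role assumption steps described above could be sketched roughly as follows. This assumes the secret is stored as a JSON string with hypothetical field names `access_key_id` and `secret_access_key`, and that the clients passed in behave like boto3 `secretsmanager` and `sts` clients (`get_secret_value` and `assume_role` are real boto3 calls). How the MFA code reaches this code is left open, as it is in the post.

```python
import json


def load_batch_credentials(secrets_client, secret_id):
    """Fetch the stored access key pair; Secrets Manager decrypts it via KMS."""
    response = secrets_client.get_secret_value(SecretId=secret_id)
    secret = json.loads(response["SecretString"])
    # Field names are an assumption about how the secret was stored
    return secret["access_key_id"], secret["secret_access_key"]


def assume_batch_role(sts_client, role_arn, mfa_serial, mfa_code):
    """Assume the batch job role, supplying the owner's MFA device and code."""
    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName="batch-job",  # hypothetical session name
        SerialNumber=mfa_serial,
        TokenCode=mfa_code,
    )
    # Temporary credentials: AccessKeyId, SecretAccessKey, SessionToken
    return response["Credentials"]
```

In a real function, the `sts_client` would need to be created with the retrieved user credentials, for example `boto3.client("sts", aws_access_key_id=..., aws_secret_access_key=...)`, rather than with the Lambda role’s own credentials. Passing the clients in as arguments also makes the two steps easy to test with stand-ins.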

What about encrypting the credentials? We don’t really need the system that decrypts and uses the credentials to encrypt the credentials. The credentials need to be encrypted when they are stored in Secrets Manager. We’ll probably ultimately have a system component that creates the initial credentials and can store them in Secrets Manager, so we’ll create a role for that purpose as well.

After the point of creation, Secrets Manager should be able to handle rotation and encryption. Or perhaps Secrets Manager will leverage our creation and encryption process to rotate the credentials. TBD.
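To picture how these requirements might translate into a key policy, here is a minimal Python sketch that builds one with three separate principals: a key administrator that cannot use the key, a role that stores credentials, and a role that reads them. All role ARNs and the function name are hypothetical, and the action lists are assumptions to verify against the KMS and Secrets Manager documentation. In particular, Secrets Manager may require additional KMS actions for the role that writes secrets. The `kms:ViaService` condition key limits use of the key to requests made through Secrets Manager.

```python
import json


def key_policy(region, admin_role_arn, writer_role_arn, reader_role_arn):
    """Key policy sketch separating administration, encryption, and decryption."""
    via_secrets_manager = {
        "StringEquals": {
            "kms:ViaService": f"secretsmanager.{region}.amazonaws.com"
        }
    }
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "KeyAdministration",
                "Effect": "Allow",
                "Principal": {"AWS": admin_role_arn},
                # Manage the key, but no encrypt or decrypt actions
                "Action": [
                    "kms:Describe*", "kms:Enable*", "kms:Disable*",
                    "kms:Put*", "kms:Update*",
                    "kms:ScheduleKeyDeletion", "kms:CancelKeyDeletion",
                ],
                "Resource": "*",
            },
            {
                "Sid": "StoreCredentials",
                "Effect": "Allow",
                "Principal": {"AWS": writer_role_arn},
                # Assumption: check which actions Secrets Manager needs here
                "Action": ["kms:GenerateDataKey"],
                "Resource": "*",
                "Condition": via_secrets_manager,
            },
            {
                "Sid": "ReadCredentials",
                "Effect": "Allow",
                "Principal": {"AWS": reader_role_arn},
                "Action": ["kms:Decrypt"],
                "Resource": "*",
                "Condition": via_secrets_manager,
            },
        ],
    }


if __name__ == "__main__":
    account = "arn:aws:iam::111122223333:role/"
    print(json.dumps(key_policy(
        "us-east-1",
        account + "ExampleKeyAdmin",
        account + "ExampleCredentialWriter",
        account + "ExampleCredentialReader",
    ), indent=2))
```

Keeping each principal in its own statement makes it easier to review later whether someone has quietly merged the roles.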

By separating the components this way we have another benefit. An organization could assign the development and maintenance of these different components to different teams. The people who create the credentials cannot use the credentials. The component that gets the code from the user to kick off the batch job with MFA could also be a separate component managed by another team. Now it would require a three-party collusion to access the data.

If you ever took one of my cloud security classes, you probably heard me tell this story. Before recommending DocuSign to Capital One, I talked about their design with Vanessa Pegueros, then Deputy CISO and later CISO of DocuSign, who has since moved on. She explained the architecture of the DocuSign system (at the time), which used this concept of a three-party collusion to access data in the system. Hopefully the company has maintained the integrity of its original system design, which was part of the reason I recommended it.

Future system integrity

The decisions that go into designing who can encrypt and decrypt with your encryption key require some thought, as you can see from this blog post. If you set something like this up and the person who creates it leaves your organization, I can imagine it would be easy for someone after the fact to create some small flaw that provides a window for an attack. The person who takes over development for the system may not understand the initial system design. You will need people who clearly understand and maintain the integrity of the system and separation of duties.

Too many times I’ve worked for managers that don’t understand the implication of allowing a junior programmer or even a senior programmer in some cases to make system changes without understanding the consequences of their actions. Make sure you document your design decisions and explain them to everyone on the team — including managers — so they understand how and why the system is designed the way it is.

Here’s another common scenario. If one team is not as good as the other at quickly resolving problems, the better team will get frustrated and vie for position to take over the whole system. This is the point where the people involved need to understand the system design and why separate groups maintain different parts of the system for security reasons. If one person’s credentials get compromised, the attacker cannot compromise the entire system. Instead of throwing up their hands and letting the faster team take over, executives need to understand how and why things are the way they are and work towards solutions that maintain the integrity of system security architecture and processes.

If you can think through and build a core centralized system for accessing sensitive data and have all the systems you build use these core security components, you won’t have this complexity in every system you build. You can assign the core security architecture to a team well versed in both security and building user-friendly systems that still make it easy for developers to get their jobs done. On that note, I’ve also seen architects build core systems that become a bottleneck and frustration for the developers who use them. Building a core secure architecture that developers like to use is not an easy undertaking, but ultimately it is one of the best ways to get a handle on this complexity and maintain the security of your systems.

I’ve already explained that I’m trying to test out maintaining encryption keys in a single AWS account and creating a common template for KMS keys. We may need to adjust our prior policy a bit to account for the fact that we’ll be allowing AWS Secrets Manager to encrypt and decrypt keys, but we’ll abstract out what we can so we have a common template for future key deployments.

Initial KMS key policies and testing

Now that we understand our requirements, here’s what we will try to create for our initial testing purposes:

- A role that our Lambda function will ultimately use to retrieve the secrets from Secrets Manager.

- A role that will mimic what a deployment system would use. We’ll write some code to create the AWS developer keys for our batch job and store them in Secrets Manager.

- We can use these two roles on an EC2 instance to test out both creating the credentials and assuming a role.

- Later we could move these roles as is or with any necessary modifications into our final design.
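The two roles in the list above might be sketched as IAM policy documents like this. It is only an outline under assumptions: the function names and ARNs are hypothetical, the trust policy uses `ec2.amazonaws.com` for the initial EC2 testing (swapping in `lambda.amazonaws.com` later), and the exact actions each role ends up needing may change once the design is tested.

```python
import json


def trust_policy(service):
    """Trust policy letting an AWS service (EC2 now, Lambda later) assume a role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": service},
            "Action": "sts:AssumeRole",
        }],
    }


def reader_permissions(secret_arn):
    """Role that retrieves the stored credentials and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "secretsmanager:GetSecretValue",
            "Resource": secret_arn,
        }],
    }


def writer_permissions(secret_arn, batch_user_arn):
    """Role that creates the access key and stores it, but cannot read it back."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "iam:CreateAccessKey",
                "Resource": batch_user_arn,
            },
            {
                "Effect": "Allow",
                "Action": [
                    "secretsmanager:CreateSecret",
                    "secretsmanager:PutSecretValue",
                ],
                "Resource": secret_arn,
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(trust_policy("ec2.amazonaws.com"), indent=2))
```

Note that the writer role has no `secretsmanager:GetSecretValue` and the reader role has no write actions, which mirrors the separation described earlier.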

Of course, we may need to tweak things as we go if something doesn’t work out as we originally thought it would, but at least we’re putting some thought into it up front instead of building first and fixing security later.

These resources will be created in the upcoming posts and then we’ll test out our theories. Follow for updates.


Teri Radichel | © 2nd Sight Lab 2022
