An SCP with Multiple Conditions to Enforce MFA to Clone a GitHub Repository (In Theory)

ACM.328 How are multiple conditions evaluated in a service control policy — and, or — does requiring MFA even work on Lambda invoke?


In the last post I changed my git authentication method so as to not send credentials in a URL when cloning a GitHub repository.

In this post I want to enforce MFA to clone a GitHub repository. But you can’t do that! Right? Well, not via GitHub at the time of this writing. But we can require MFA when a person invokes the Lambda function, and we can make sure that only the Lambda function, or a secrets administrator using MFA in the AWS console, can use or access the GitHub personal access token.

Of course, you also have to secure the GitHub side by only allowing people in your organization to log in with MFA to access GitHub and use those privileges to generate a new access token. I wrote about GitHub security in other posts.

“Requires MFA?” you ask. But a Lambda Execution Role can’t provide MFA. That’s right. But one thing we can do is require MFA to execute a Lambda function.

The method I’ll show you in this post has some limitations. It still offers access to an active session. In fact, it flat out doesn’t work correctly on the command line as far as I can tell.

In the next post I’ll show you how to enforce MFA every time you invoke a Lambda function (hopefully).

I am finally getting to what I hinted at ages ago. A short batch job can run in Lambda. A long batch job can run in AWS Batch. Or anywhere else really if you design it as such. I want to enforce MFA to execute certain batch jobs. These next two posts explain how to do it.

I was thinking through a more extensive solution here:

But for now I’m just going to implement a simpler solution and consider the risks.

Adding an MFA requirement to our Service Control Policy

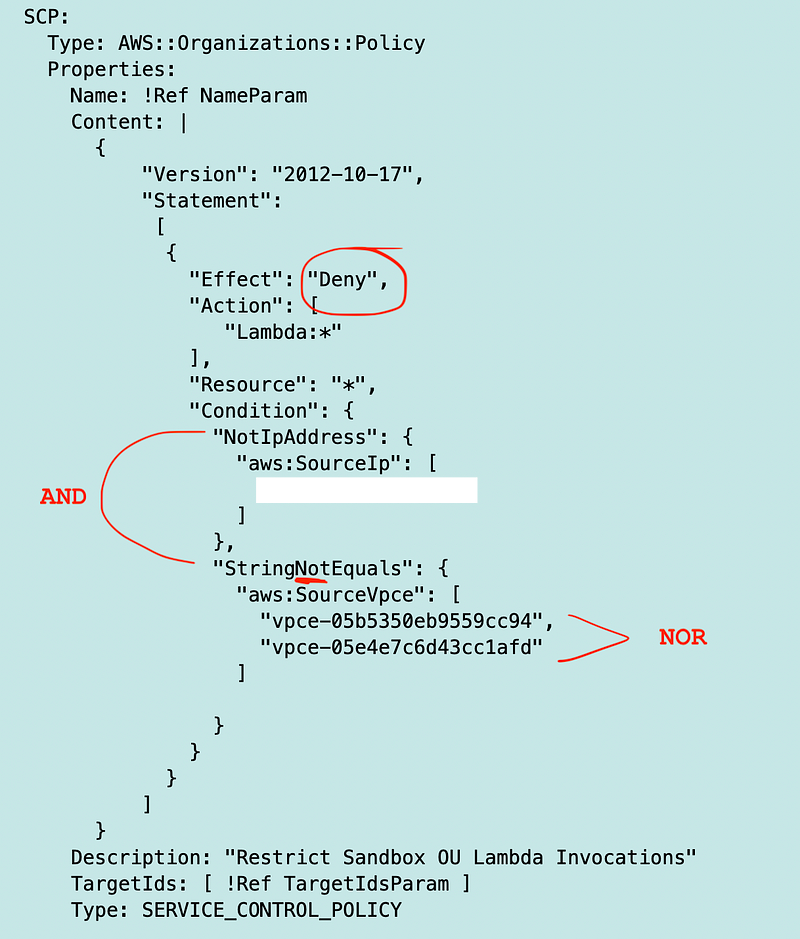

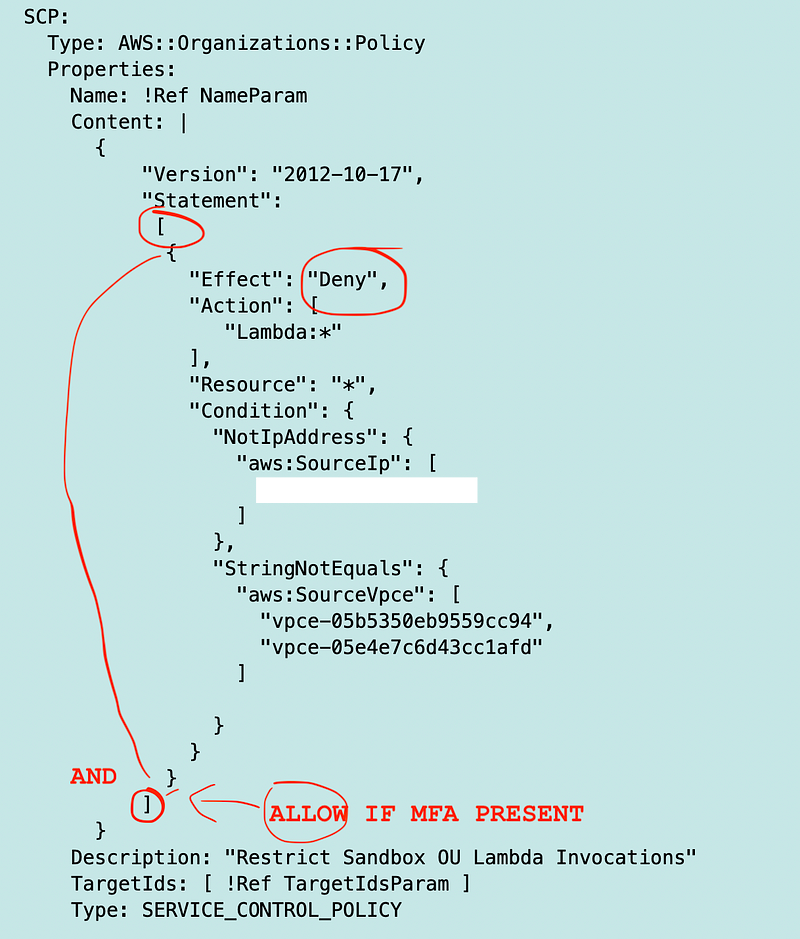

I already explained how to limit Lambda invocations for a particular environment to specific networks with this SCP (and some caveats about the IP address restriction when using VPC Endpoints):

Recall that this SCP is attached to our Sandbox environment for testing.

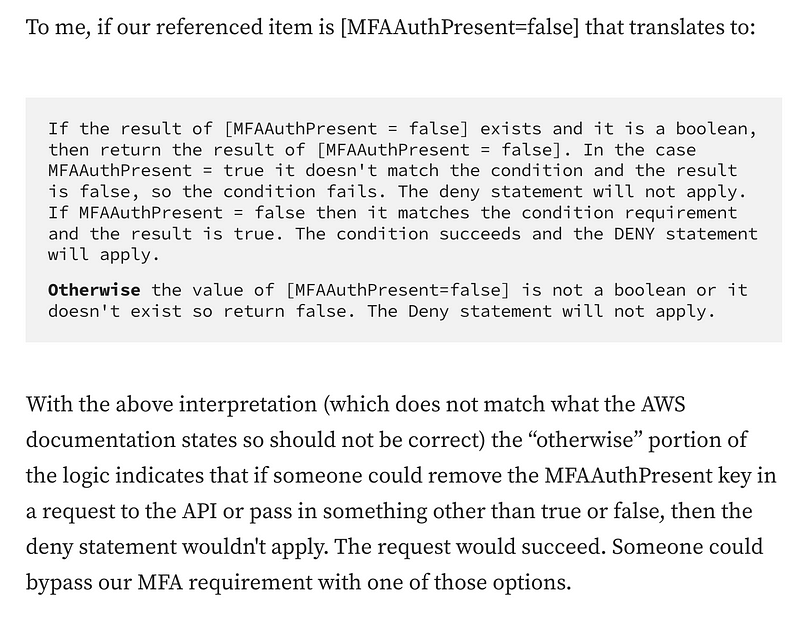

We can also add MFA as a requirement to invoke our functions in that Service Control Policy. Remember that there’s a right and a wrong way to do this if you want to actually enforce MFA every time — not only when MFA exists in the AWS API request.

From the above post:

So I am going to add this condition without the if exists option in the documentation, which limits my use cases but that is what I want. You won’t be able to use hard coded AWS credentials without MFA if you take this approach. Or at least you shouldn’t be able to according to the documentation I reviewed in that prior post…hold that thought.

Condition:

"Bool":

"aws:MultiFactorAuthPresent": "true"

One caveat about the above is that it allows any MFA device, not a specific MFA device for a specific user or AWS account. What if I want to allow only principals in the Sandbox OU to invoke Sandbox Lambda functions? We should be able to use these organizational conditions, though they haven’t always worked as expected for me in the past, so make sure you test them.

You can only allow certain principals in various parts of your organization to take certain actions:

You can limit principals to accessing certain resources.

I might write about that more later in a separate post. For now, I’ll just add MFA.

The other thing I have to worry about is getting the logic right.

I want to allow a specific IP address OR a private VPC endpoint

AND

I want to enforce MFA for every request

These two contents of an SCP statement are joined with an AND. The request has to meet all the criteria as a set.

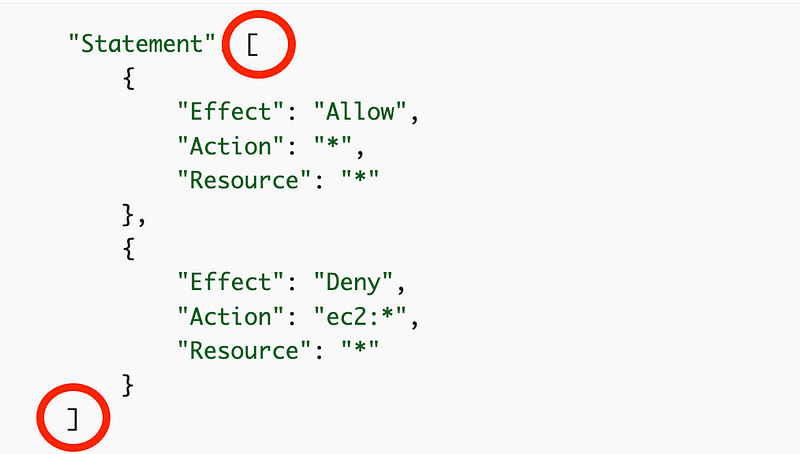

Any action is allowed AND EC2 is denied.

Which effectively means any action but EC2 is allowed.

Note that a simpler way to write this would be a single NotAction statement to deny EC2. It would also be easier for the next person to read and understand. But sometimes you need this combination to achieve a desired outcome.
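To make the "allow everything AND deny EC2" combination concrete, here is a minimal Python sketch of the logic. It is a simplified model and not how AWS implements evaluation: it assumes the two statements are an Allow on all actions and a Deny on `ec2:*` (the action patterns and the function names are my own placeholders), and it treats a request as permitted only when some Allow matches and no Deny matches.

```python
from fnmatch import fnmatchcase

# Hypothetical statements modeling "any action is allowed AND EC2 is denied".
STATEMENTS = [
    {"Effect": "Allow", "Action": "*"},
    {"Effect": "Deny", "Action": "ec2:*"},
]


def matches(pattern: str, action: str) -> bool:
    # Action names are compared case-insensitively; "*" is a wildcard.
    return fnmatchcase(action.lower(), pattern.lower())


def scp_permits(action: str, statements=STATEMENTS) -> bool:
    # Permitted only if at least one Allow matches and no Deny matches.
    allowed = any(
        s["Effect"] == "Allow" and matches(s["Action"], action) for s in statements
    )
    denied = any(
        s["Effect"] == "Deny" and matches(s["Action"], action) for s in statements
    )
    return allowed and not denied


for action in ("s3:GetObject", "lambda:InvokeFunction", "ec2:RunInstances"):
    print(action, "->", "permitted" if scp_permits(action) else "blocked")
```

An explicit Deny always wins in this model, which is why the pair of statements behaves like "everything except EC2".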

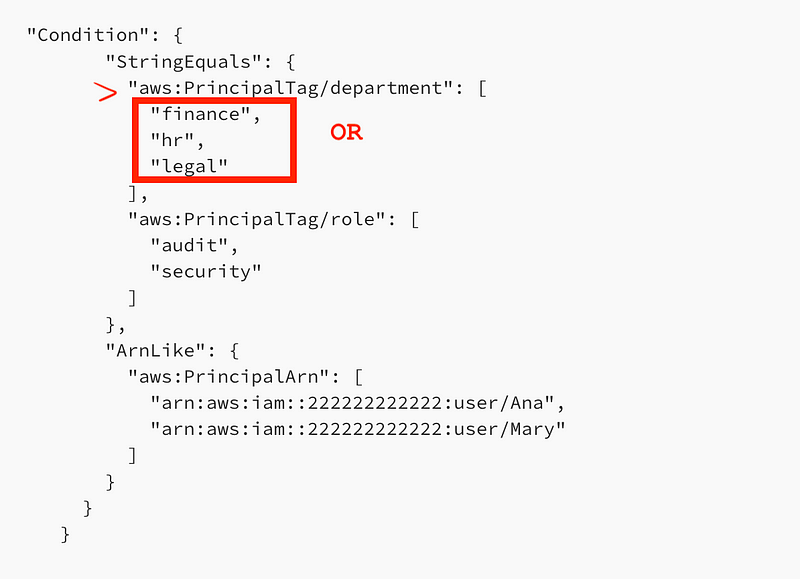

What about conditions? Here’s a condition from the AWS documentation in an ALLOW statement. Does this allow only cases where all the following values are true or any of the following values are true?

"Condition": {

"StringEquals": {

"aws:PrincipalTag/department": [

"finance",

"hr",

"legal"

],

"aws:PrincipalTag/role": [

"audit",

"security"

]

},

"ArnLike": {

"aws:PrincipalArn": [

"arn:aws:iam::222222222222:user/Ana",

"arn:aws:iam::222222222222:user/Mary"

]

}

}

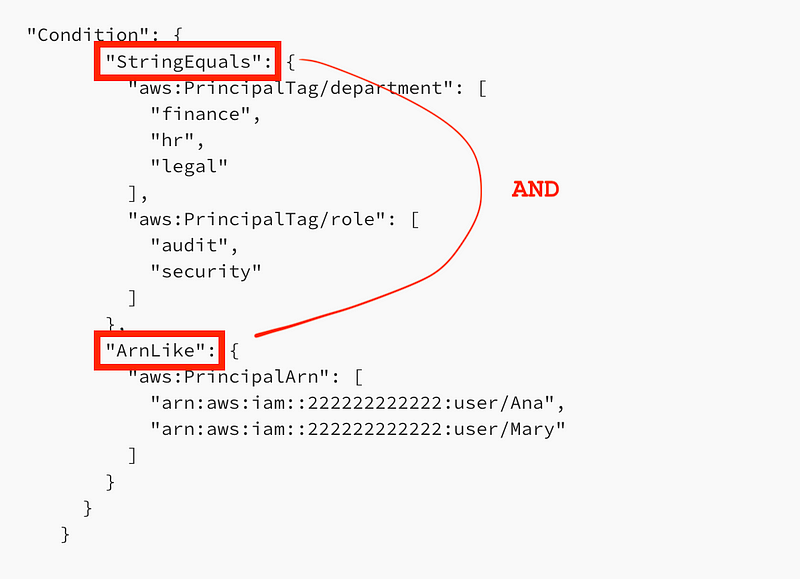

Here’s what the documentation says:

* Multiple condition operators are evaluated using a logical AND.

* Multiple context keys attached to a condition operator are evaluated as a logical AND.

* Multiple values for a single context key are evaluated as OR.

* Multiple values for a context key for a single negated condition operator are evaluated as NOR.

Condition operators are the values StringEquals and ArnLike in the above statement. They are the methods you can use to evaluate values in an AWS request. I wish AWS would provide a complete list of condition operators in one place, but I cannot seem to find one. Their implementation varies by service, also, so you have to look at each service you use to see if your condition is going to work, and what other conditions may be available to you. And test them, as we will see in this post.

Based on the above explanation of condition logic, the above policy will only allow the action if the “StringEquals” operator matches its specified values and the “ArnLike” operator matches its specified values.

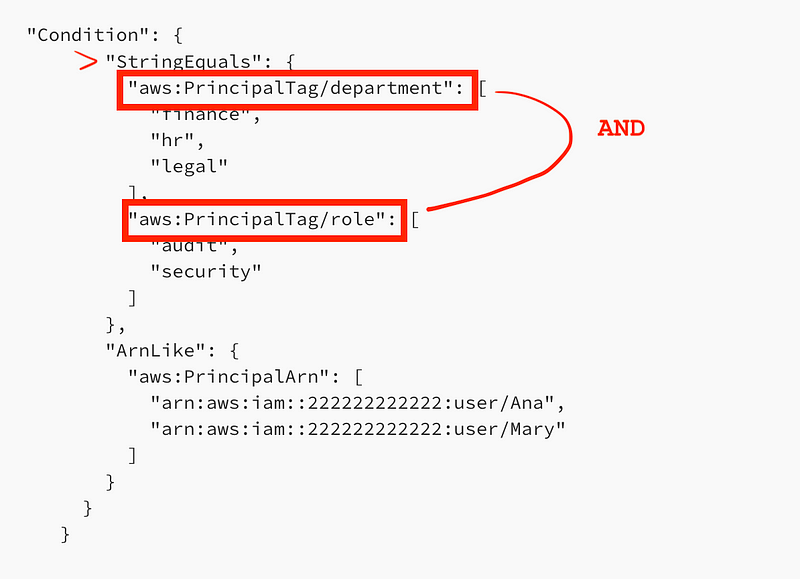

Next we have context keys. AWS doesn’t seem to provide a succinct definition of context keys. Essentially, a context key is the “key” in the “key-value” pair that you are evaluating in a condition.

In the policy above we are evaluating the values of the keys below for the StringEquals condition. The key is the department or role. The values are the specific allowed values for each of those keys.

Multiple keys for a single condition operator get evaluated with an AND.

Then we have the values for each key. The documentation above says that the values for a single key are evaluated as an OR. So any of those values will meet the conditional criteria.
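The three rules (AND across operators, AND across keys, OR across values) can be sketched in a few lines of Python against the example condition from the documentation. This is a simplified model under stated assumptions: the function names are mine, only `StringEquals` and `ArnLike` are implemented, and the `ArnLike` matching here treats the whole ARN as one string rather than comparing it segment by segment.

```python
import re

CONDITION = {
    "StringEquals": {
        "aws:PrincipalTag/department": ["finance", "hr", "legal"],
        "aws:PrincipalTag/role": ["audit", "security"],
    },
    "ArnLike": {
        "aws:PrincipalArn": [
            "arn:aws:iam::222222222222:user/Ana",
            "arn:aws:iam::222222222222:user/Mary",
        ]
    },
}


def arn_like(pattern: str, value: str) -> bool:
    # Simplification: translate "*" and "?" wildcards into a regular expression.
    regex = re.escape(pattern).replace(r"\*", ".*").replace(r"\?", ".")
    return re.fullmatch(regex, value) is not None


OPERATORS = {
    "StringEquals": lambda expected, actual: expected == actual,
    "ArnLike": arn_like,
}


def condition_matches(condition: dict, context: dict) -> bool:
    for operator, keys in condition.items():      # operators are ANDed
        test = OPERATORS[operator]
        for key, values in keys.items():          # keys are ANDed
            actual = context.get(key)
            if actual is None:
                return False                      # a missing key does not match
            if not any(test(v, actual) for v in values):  # values are ORed
                return False
    return True


request = {
    "aws:PrincipalTag/department": "hr",
    "aws:PrincipalTag/role": "audit",
    "aws:PrincipalArn": "arn:aws:iam::222222222222:user/Ana",
}
print(condition_matches(CONDITION, request))
```

Changing the role tag to a value outside the list, or the ARN to a different user, makes the whole condition fail even though the other keys still match.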

The last statement says “Multiple values for a context key for a single negated condition operator are evaluated as NOR.” Take a look at our Lambda SCP above.

We are denying the action if the source IP does not equal a specific IP address and the source VPC Endpoint is neither of the values.

How do we add “AND MFA is present” to the above?

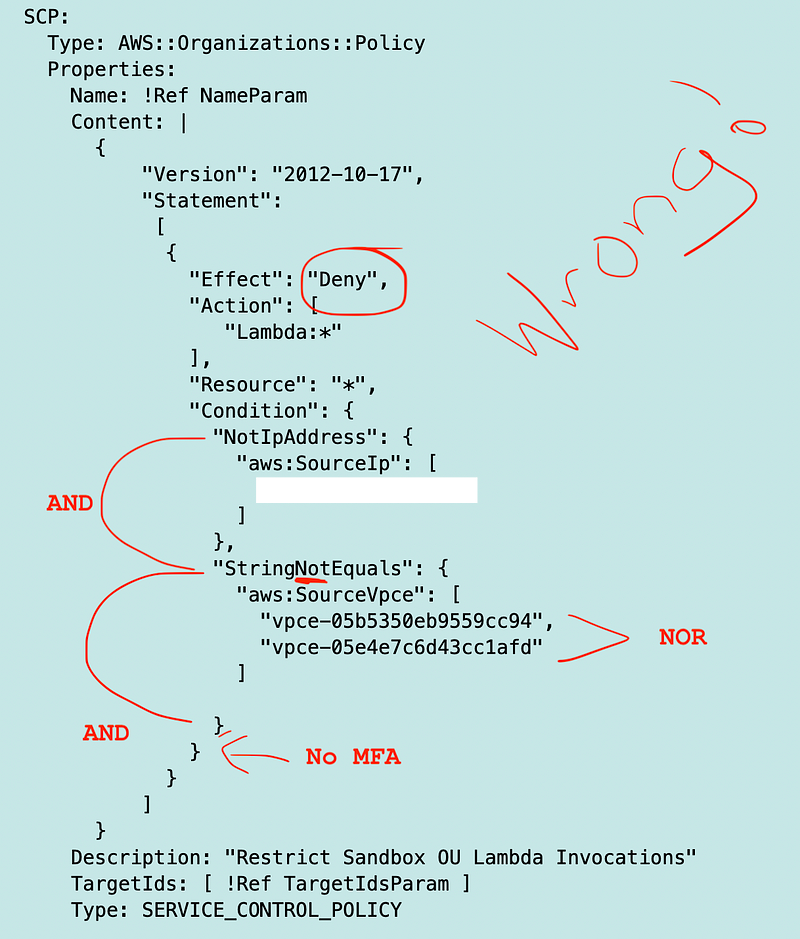

What if we did this:

Someone tries to invoke Lambda. They invoke the Lambda within the correct network but without MFA.

The policy is evaluating whether to deny the request. If all conditions are true, the deny action will be applied to the request.

If the person is not using the correct IP address then that condition evaluates to TRUE. However, the request is coming from the correct VPC endpoint, so the second condition evaluates to FALSE. So the request is not denied.

Let’s say the person tries to invoke Lambda from the wrong network WITH MFA.

The first and second conditions are true because the person is in the wrong network. The last condition is FALSE, because the person is using MFA. What happens? Any person using MFA from the wrong network can invoke the Lambda function.
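The two scenarios above can be checked with a small truth-table sketch. It assumes, based on the walkthrough, that the single Deny statement carries three conditions joined by AND: the IP does not match, the VPC endpoint does not match, and MFA is not present. The function names are hypothetical, and the model assumes some other policy already allows the invocation.

```python
def single_deny_applies(ip_ok: bool, vpce_ok: bool, mfa: bool) -> bool:
    # All three conditions must be true for the Deny to apply (logical AND).
    return (not ip_ok) and (not vpce_ok) and (not mfa)


def invoke_permitted(ip_ok: bool, vpce_ok: bool, mfa: bool) -> bool:
    # Assumes something else already allows the action; only the Deny can block it.
    return not single_deny_applies(ip_ok, vpce_ok, mfa)


scenarios = {
    "correct VPC endpoint, no MFA": (False, True, False),
    "wrong network, with MFA": (False, False, True),
    "wrong network, no MFA": (False, False, False),
}
for name, args in scenarios.items():
    print(f"{name}: {'permitted' if invoke_permitted(*args) else 'denied'}")
```

Only the last scenario is denied, which shows why putting all three conditions into one Deny statement does not enforce both requirements.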

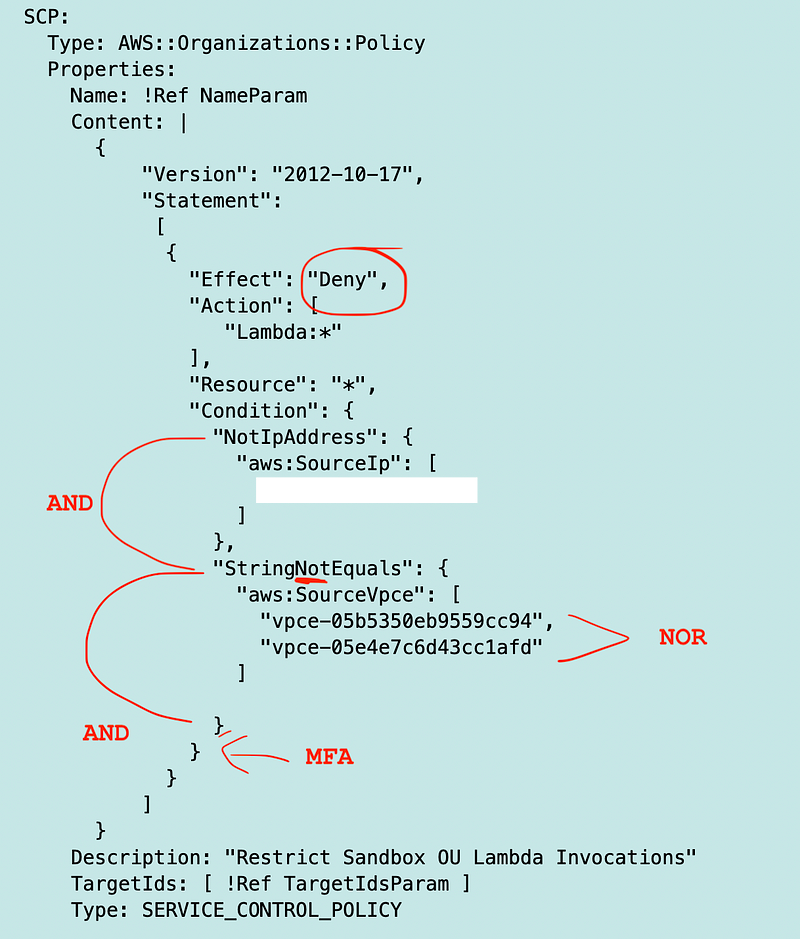

What if we change the condition as follows? The person is in the wrong network and MFA is present.

That’s not what we want either. What happens when someone is in the correct network and is using MFA? Well, one of the network options evaluates to false so MFA is irrelevant in that case. All the conditions must evaluate to true for the DENY action to apply to the request.

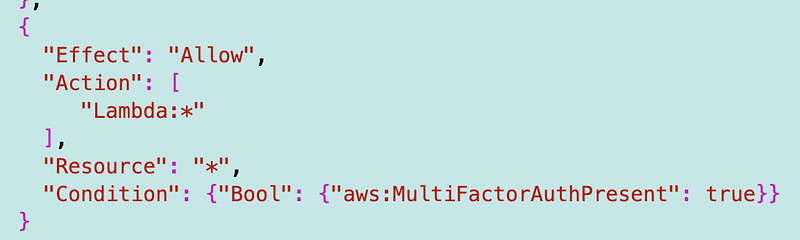

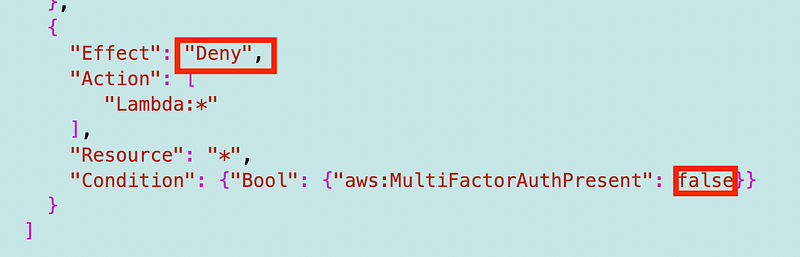

So how can we fix this? We can create a separate statement to allow Lambda if MFA is present.

In other words, DENY the request if it does not meet either network requirement AND ALLOW the request if it includes MFA.

Let’s go through the scenarios again.

The person is not in the correct network and is using MFA. The request will meet the DENY and the ALLOW criteria. DENY.

The person is in the correct network but is not using MFA. The request does not meet the DENY criteria so will not be denied, but does not meet the ALLOW criteria either. DENY.

The person is in the correct network and using MFA. The request does not meet the DENY criteria so will not be denied. The request does meet the MFA criteria in the ALLOW statement. ALLOW.
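Here is the same walkthrough as a short Python sketch. It looks at these two statements in isolation (no other Allow applies), and the function name is a placeholder of mine. It models the logic only and says nothing about whether AWS will accept such a policy.

```python
def outcome(in_network: bool, mfa: bool) -> str:
    deny = not in_network   # DENY statement: neither network requirement is met
    allow = mfa             # ALLOW statement: MFA is present
    # An explicit deny wins; otherwise the request needs a matching allow.
    return "ALLOW" if allow and not deny else "DENY"


for in_network, mfa in [(False, True), (True, False), (True, True)]:
    print(f"in_network={in_network}, mfa={mfa} -> {outcome(in_network, mfa)}")
```

The fourth combination, wrong network without MFA, is denied as well, since it fails both statements.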

But here’s the thing. Upon testing, the allow statement didn’t work. CloudFormation wouldn’t let me deploy it, saying it did not meet the criteria for that type of policy.

But the above won’t work either, because in my root OU I allow all actions. That overarching policy would override my attempt to only allow Lambda when MFA is present. Think about what would happen if the above did work. Would everything except Lambda then be denied? AWS won’t let you install that anyway. Let’s change it to a DENY.

Policies can be very tricky, and in all cases you should TEST THEM. Make sure that your logic holds up — and that AWS has not made a mistake.

If you work in QA (Quality Assurance) testing software, you need to understand all paths testing — and when you cannot test all paths you have to understand what you have and have not tested.

What should you test for the above policy?

- Test in the correct IP with MFA.

- Test in the correct IP without MFA.

- Test in the correct VPC with a VPC Endpoint with MFA.

- Test in the correct VPC with a VPC Endpoint without MFA.

- Test in the wrong network with MFA.

- Test in the wrong network without MFA.

We also need to test all the different scenarios for each mechanism by which you can invoke the action if working at AWS:

- Console

- AWS CLI

- Lambda Runtime Emulator

- AWS Java API Request

- All the other SDKs or any other method of invoking Lambda that might take a different execution path through the evaluation logic in the AWS policy code.
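One way to keep track of coverage is to generate the matrix of cases instead of listing them by hand. The sketch below is illustrative only: the labels are mine, the expected results encode the intent of the policy (allow only from an approved network with MFA), and it does not call AWS.

```python
from itertools import product

NETWORKS = ["allowed IP", "allowed VPC endpoint", "wrong network"]
MFA_OPTIONS = [True, False]
MECHANISMS = ["Console", "AWS CLI", "Lambda Runtime Emulator", "AWS Java API request"]


def expected(network: str, mfa: bool) -> str:
    # Intended behavior: allow only from an approved network with MFA.
    return "allow" if network != "wrong network" and mfa else "deny"


def build_test_matrix() -> list:
    return [
        {"mechanism": m, "network": n, "mfa": f, "expected": expected(n, f)}
        for m, n, f in product(MECHANISMS, NETWORKS, MFA_OPTIONS)
    ]


matrix = build_test_matrix()
print(len(matrix), "test cases")
for case in matrix[:6]:
    print(case)
```

Recording the actual result next to each expected value makes it obvious which paths were tested and which were not.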

Since we don’t have that code to evaluate we can’t know how many execution paths exist that would result in a variable result. From the outside, we would need to test all paths if we wanted to verify that the policy is evaluated correctly, but AWS should be doing that since it’s their code. Still, it’s a good idea to test the code paths you might be using.

If you’re a penetration tester, your boundaries are much broader. You’re trying to break things by completely abusing the system. In this case, we’re testing the system used as designed and all the logical paths for this specific use case.

However, just testing the valid use cases when it comes to policies and ensuring your test coverage for a policy is complete is a feat unto itself for most organizations. Testing IAM and related policies can be very tricky and that’s why I suggest having dedicated teams focused on this particular aspect of security.

Anyway, now I can try to deploy my policy changes.

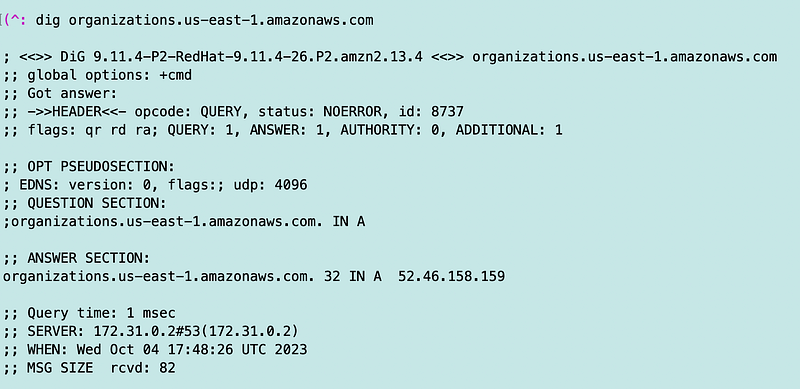

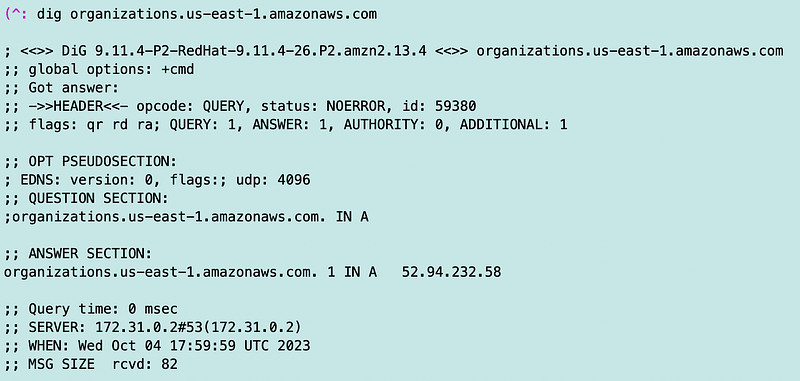

The next interesting point is that I cannot update my policy because I’m getting a connection timeout trying to reach an AWS Organizations endpoint:

Connect timeout on endpoint URL: "https://organizations.us-east-1.amazonaws.com/"

Recall that I have added VPC endpoints to my VPC for various services. Can we add a VPC endpoint for AWS Organizations?

Apparently not.

This is a problem.

Well, at this moment, that domain resolves to:

There are probably others but I will try allowing that…and it works.

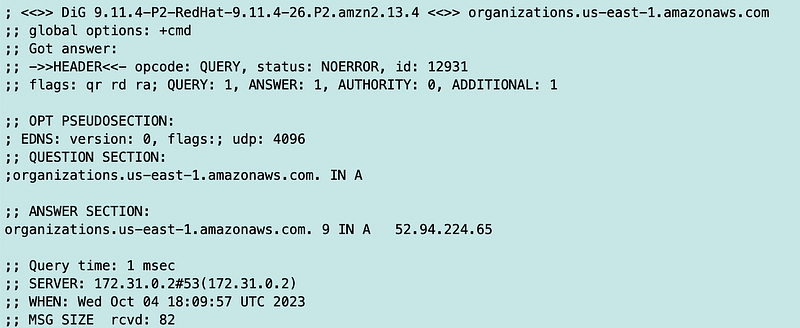

Sure enough, I had to fix a policy issue and my next request failed. Here’s another IP:

We can’t use prefix lists in a NACL even if one exists for AWS Organizations.

And another.

After I add those three IP addresses (which could change) I can deploy my policy update.
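Because those addresses can change, it may help to check what the endpoint currently resolves to before a deployment. The following is a minimal sketch using only the Python standard library. The helper names and the idea of comparing against a list of already-allowed addresses are my own assumptions, not part of the setup in this series.

```python
import socket

ENDPOINT = "organizations.us-east-1.amazonaws.com"


def resolve_ipv4(host: str, resolver=socket.getaddrinfo) -> list:
    # Return the distinct IPv4 addresses the host resolves to right now.
    infos = resolver(host, 443, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def missing_from_allowlist(resolved: list, allowed: list) -> list:
    # Addresses that resolve today but are not yet in the network rules.
    return sorted(set(resolved) - set(allowed))


if __name__ == "__main__":
    current = resolve_ipv4(ENDPOINT)
    print("Currently resolves to:", current)
```

DNS answers rotate, so a single lookup is only a snapshot and the result may differ on the next request.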

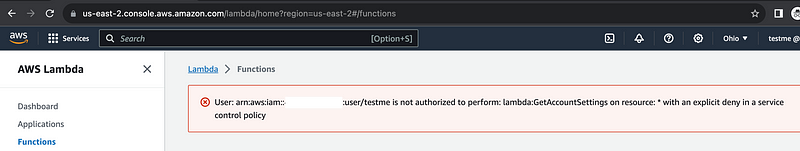

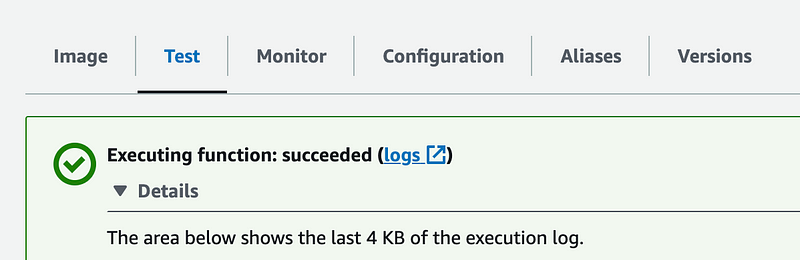

That works and I can test out all my scenarios above, including a user without MFA in the AWS console. I gave this user full Lambda access.

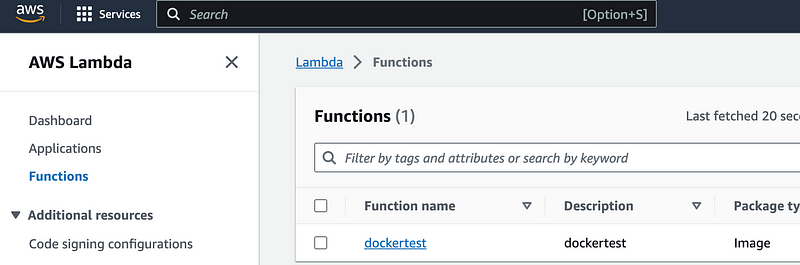

After adding MFA that user can access the Lambda console:

And execute the function:

Now, for executing the function on the command line, we’d have to use a user with an MFA device. We will not be able to use a role because a role doesn’t have an MFA device associated with it. That means a role cannot invoke our Lambda function (in theory). But a user with MFA can.

I explained all that in much more detail in prior posts:

But for now, let’s see if your Sandbox Admin with MFA can invoke the Lambda function.

Well, that depends. The way we’ve been running this function is by grabbing the credentials out of the function in a test environment. I’d need to have the ability to execute the function in the console via MFA to get those credentials, but once I have them, I can run any local test I want using that existing session:

That’s why I mentioned you don’t want to allow access to obtain those credentials in a production environment.

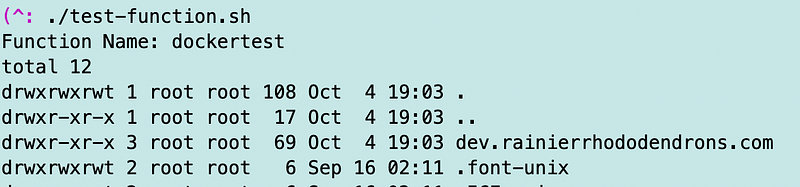

What about invoking the Lambda function in Lambda from the command line?

I have to base64 encode the parameters I’m passing in so I create a little script like this:
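For illustration only, and not the script used in this post, here is a Python sketch of the encoding step. The payload keys, the profile name, and the output file name are hypothetical. The function name `dockertest` comes from the CloudTrail record in this post. AWS CLI version 2 expects the `--payload` value to be base64 encoded by default, which is why the encoding is needed.

```python
import base64
import json


def encode_payload(params: dict) -> str:
    # Serialize the parameters to JSON, then base64 encode the bytes.
    raw = json.dumps(params, separators=(",", ":")).encode("utf-8")
    return base64.b64encode(raw).decode("ascii")


def build_invoke_command(function_name: str, params: dict, profile: str,
                         outfile: str = "response.json") -> list:
    # Build the argument list for "aws lambda invoke" without running it.
    return [
        "aws", "lambda", "invoke",
        "--function-name", function_name,
        "--payload", encode_payload(params),
        "--profile", profile,
        outfile,
    ]


# Hypothetical parameters and profile name, for illustration only.
command = build_invoke_command("dockertest", {"repo": "example"}, "SandboxAdmin")
print(" ".join(command))
```

Building the command as a list keeps the encoded payload intact if it is later passed to `subprocess.run`.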

I run that script, and it works. I never have to enter MFA credentials. Why?

Let’s take a look at the AWS request in CloudTrail. We have to look at the Lambda Data Events Log we enabled in an earlier post to see the invoke actions.

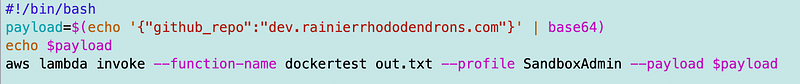

{"type":"IAMUser","principalId":"x","arn":"arn:aws:iam::xxx:user/SandboxAdmin","accountId":"xxxx","accessKeyId":"xxxx","userName":"SandboxAdmin"},"eventTime":"2023-10-04T19:22:14Z","eventSource":"lambda.amazonaws.com","eventName":"Invoke","awsRegion":"us-east-2","sourceIPAddress":"xxxx","userAgent":"aws-cli/2.4.6 Python/3.8.8 Linux/4.14.322-246.539.amzn2.aarch64 exe/aarch64.amzn.2 prompt/off command/lambda.invoke","requestParameters":null,"responseElements":null,"requestID":"a8897453-d60c-4cd2-b312-dcdaec91d2c1","eventID":"1bfd2200-bce7-44cd-b371-9534095155c1","readOnly":false,"resources":[{"accountId":"xxxx","type":"AWS::Lambda::Function","ARN":"arn:aws:lambda:us-east-2:xxxx:function:dockertest"}],"eventType":"AwsApiCall","managementEvent":false,"recipientAccountId":"xxxx","vpcEndpointId":"vpce-05e4e7c6d43cc1afd","eventCategory":"Data","tlsDetails":{"clientProvidedHostHeader":"lambda.us-east-2.amazonaws.com"}}

There is no MFA present in this request. Why is it allowed based on our SCP and returning the temp credentials for our Lambda function?
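If you want to check this programmatically rather than by eye, CloudTrail records MFA status for temporary credentials under `userIdentity.sessionContext.attributes.mfaAuthenticated`. The sketch below is a minimal helper of my own (the function name is hypothetical) applied to the identity fields from the record above, which has no `sessionContext` at all.

```python
def mfa_authenticated(user_identity: dict) -> bool:
    # CloudTrail stores the flag as the string "true" or "false" when present.
    attributes = user_identity.get("sessionContext", {}).get("attributes", {})
    return attributes.get("mfaAuthenticated") == "true"


# Identity fields as they appear in the record above (values redacted).
cli_identity = {
    "type": "IAMUser",
    "principalId": "x",
    "arn": "arn:aws:iam::xxx:user/SandboxAdmin",
    "accountId": "xxxx",
    "accessKeyId": "xxxx",
    "userName": "SandboxAdmin",
}
print(mfa_authenticated(cli_identity))
```

The helper returns `False` both when the flag is `"false"` and when it is missing, so it cannot tell those two cases apart.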

This request and allowed action is behaving as if I am checking whether MFA exists or not in my policy, but I am not doing that. If I were checking whether MFA exists or not in the request, I would expect this behavior, because MFA does not exist in the request. But my policy simply states that any Lambda invocation should be denied if MFA is not present.
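One possible explanation, offered as an assumption rather than a confirmed diagnosis: the IAM documentation describes the `aws:MultiFactorAuthPresent` key as absent from the request context when a call is made with long-term access keys, and a plain `Bool` operator does not match a key that is absent, while `BoolIfExists` does. The sketch below models that difference for a Deny statement. It assumes the Deny in my policy tests for the value `"false"`, and the function names are hypothetical.

```python
KEY = "aws:MultiFactorAuthPresent"


def bool_operator(context: dict, key: str, expected: str, if_exists: bool = False) -> bool:
    if key not in context:
        # Plain Bool: a missing key never matches. BoolIfExists: it counts as a match.
        return if_exists
    return str(context[key]).lower() == expected.lower()


def deny_applies(context: dict, operator: str) -> bool:
    # Models a Deny statement whose condition is <operator>: {KEY: "false"}.
    return bool_operator(context, KEY, "false", if_exists=(operator == "BoolIfExists"))


requests = {
    "long-term access key (key absent)": {},
    "temporary credentials without MFA": {KEY: "false"},
    "temporary credentials with MFA": {KEY: "true"},
}
for name, ctx in requests.items():
    print(name, "| Bool:", deny_applies(ctx, "Bool"),
          "| BoolIfExists:", deny_applies(ctx, "BoolIfExists"))
```

If this model matches what AWS does, the CLI call above falls into the first row: the key is absent, the plain `Bool` condition does not match, and the Deny never applies.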

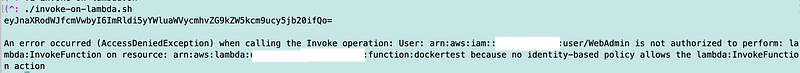

What if I use some other AWS CLI Profile that is valid but doesn’t have permission to invoke the Lambda function?

That works.

Well, clearly requiring MFA from the console is working so I don’t think this is a problem with my service control policy. I can’t find any documentation explaining why this works the way it does.

But I was going to show you an alternate approach anyway so I’m going to move on to that in the next post and see if we can get that working instead.

I do wish this worked properly. If I am missing something, it is not clear from the documentation. Customers should be able to enforce MFA for Lambda invocation from an SCP. #awswishlist.

If I find that I’ve made a mistake or new information comes to light, I’ll update this post. You could try checking the age of the MFA device instead and you can likely add MFA to every principal policy but that’s missing the point of a single point of governance. I am moving on for now.

Hopefully my alternate approach in the next post will work.

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2023
