A Docker Container In CloudShell

ACM.435 Building and running containers in AWS CloudShell

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

⚙️ Part of my series on Automating Cybersecurity Metrics. The Code.

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

In the last post I moved my files into separate repositories so it is easier to allow people to modify jobs without modifying the core execution framework. New jobs and job configurations can be independently and easily added and modified. Security, governance, flexibility, speed, and convenience all rolled into one if all goes according to plan.

In a prior post I came to the conclusion that I do not want to deploy the job framework in the root management account. I want to use a basic script to deploy a few initial components and limit what I deploy in that account to the minimum required.

I want to try to perform my initial deployment with a container. For now I’m going to pull the code into CloudShell, build the container and run it. This is not my final solution.

About a week or so ago, AWS announced support for Docker in CloudShell. Well, I was using containers in CloudShell months ago so I wonder if it just wasn’t announced or there’s really something new here.

At any rate, it’s officially supported now, but only in certain regions. That means you have to use one of the supported regions if you want to use my approach. I’m sure the list will expand over time.

A warning about AWS CloudShell and anything you run in a browser

Now mind you, I’m not a fan of CloudShell for regular use for security reasons. But when you first open an account it’s an easy way to get your initial resources in place, and then you can lock it down. Why am I not a fan of CloudShell in general?

- How do you run resources in a private network?

- How do you view network traffic for resources running in CloudShell? They aren’t in a VPC so no Flow Logs.

- It’s running in a browser — huge attack surface.

- What access do people at AWS or AWS systems have to the resources you deploy in CloudShell?

- Can you encrypt the data you put in CloudShell with a KMS key?

Those things alone are enough to make me not want to use CloudShell so I stopped there. But others have mentioned inability to audit it. How do you know what software components and versions are in CloudShell? How do you maintain access to and a history of actions taken in CloudShell at the OS level? I haven’t fully explored what you get and don’t get out of CloudTrail and any other missing logs you might need in the case of a related security incident.

I don’t use it for the most part, so I haven’t really thought through all the implications. It just feels like an insecure solution from the start, so I didn’t think about it too much and don’t use it in most cases.

But CloudShell has some good uses:

- It is good for the initial setup in your account because the account is new and there’s nothing to steal. The code I’m deploying is public. It’s used for a quick build of minimal resources which can be easily verified. CloudTrail logs can be reviewed. Then you can lock it down.

- CloudShell is good when you are learning AWS, or when you simply want to quickly test a command. It’s a handy resource when you don’t want to spin up a whole EC2 instance or environment to test out your syntax. Though mostly I can do anything I can do in CloudShell in an EC2 instance.

- You can verify the network is the problem if you run a command in CloudShell and from a private network (though you can also figure this out by looking at rejects in your logs which is the approach I typically take.)

Perhaps you have a sandbox account where people can use CloudShell which is completely segregated from your other resources and the Internet.

The main concern even with a sandbox account is if people are using the same browser for CloudShell, surfing the web, reading and clicking links and documents in email, and using that same browser to access other AWS environments. I tend to limit my use of the browser as much as possible and always use hardware MFA in browsers when I can.

An initial deployment container

I started to build out my initial deployment container in a new AWS account in the last post. I got the build working with the AWS Job Execution framework and the container specific code in separate repositories.

I need to finish a couple of things:

- Update the execute.sh file to ensure it has all the scripts I want the initial container to run.

- Write a script to clone the repos, build the container, and run it with CloudShell credentials.

Let’s do it.

Initial execute.sh update

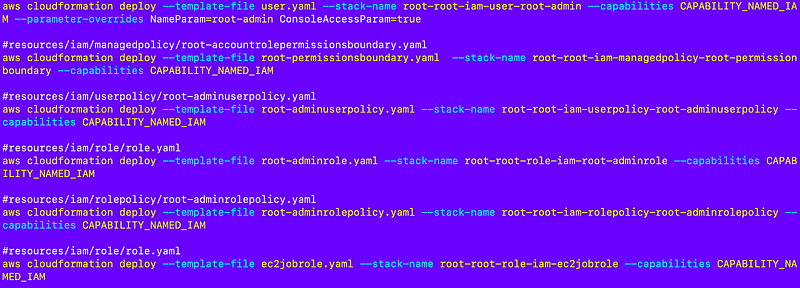

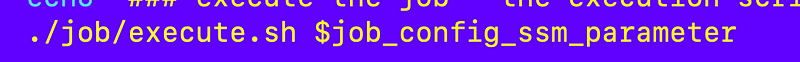

Initially I am going to copy over the command I was executing in CloudShell after manually copying in all the files the script needed.

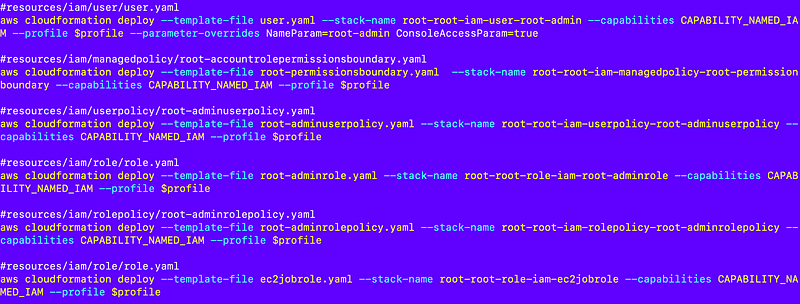

However, my container runs using a CLI profile configured in the container, so I’ll need to add the following to all the commands:

--profile $profile

The value of $profile is going to be set in run.sh.

Changes to run.sh

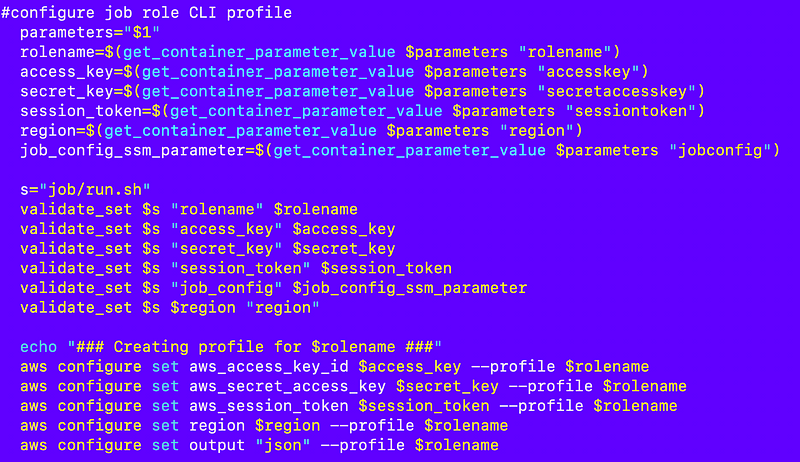

Recall what the run.sh script does when the container starts:

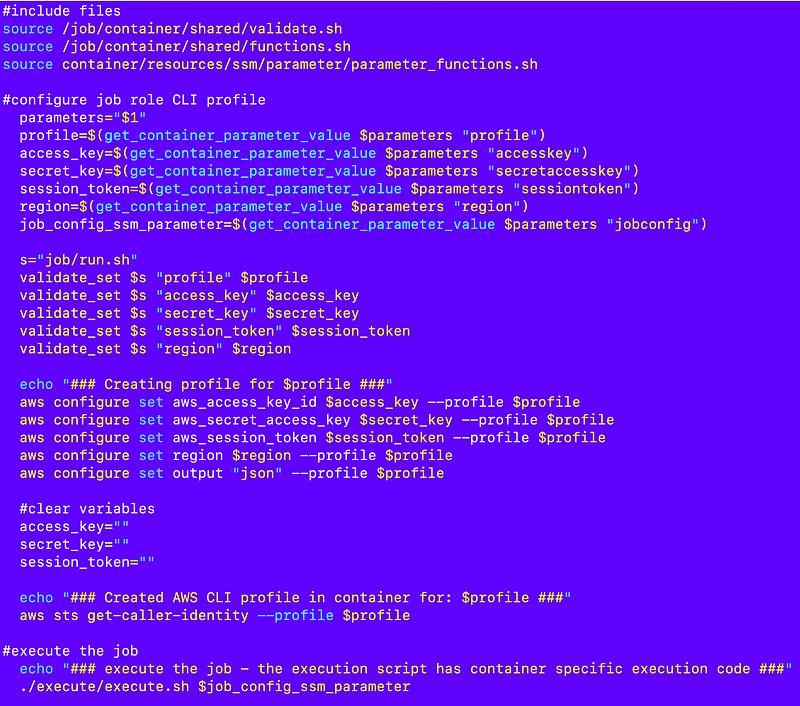

Well in this case, we don’t have a job configuration, so I’m going to make that an optional parameter. Perhaps some jobs have no job configuration and the script just does whatever it needs to do.

However, we need to pass in rolename, access_key, secret_key, session_token, and region. The role name is really an AWS CLI profile name in this case, not a role name. For clarity, I am going to change the rolename to profile name here because that’s really what it is. But whenever a profile is configured for a role, the role name and the profile name should be one and the same.

I can change that with the sed command that operates on files:

sed -i 's/rolename/profile/g' run.sh

Here’s the updated run.sh file with removal of the validation of the job configuration and rolename changed to profile everywhere. I also just noticed the validation of region was backwards for some reason so I rearranged the arguments.

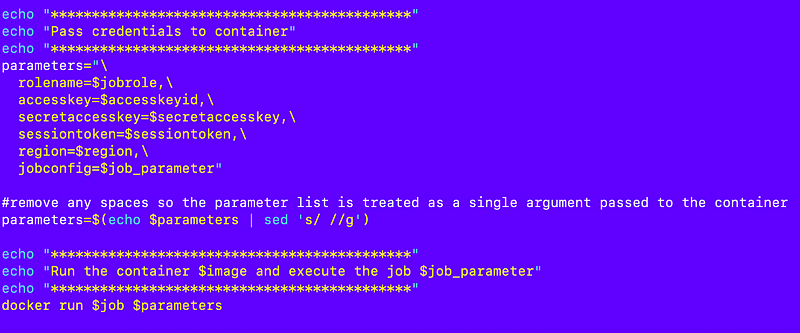
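The argument handling described above might look roughly like the following sketch. The parameter order, the function names `require`, `validate_args`, and `configure_profile`, and the error messages are assumptions for illustration and not the contents of the actual run.sh. The job configuration is treated as an optional sixth argument.

```shell
# Fail with a message when a required value is empty.
require() {
  if [ -z "$2" ]; then
    echo "error: $1 must not be empty" >&2
    return 1
  fi
}

# Assumed usage:
#   validate_args profile access_key secret_key session_token region [job_config]
validate_args() {
  if [ "$#" -lt 5 ]; then
    echo "usage: run.sh profile access_key secret_key session_token region [job_config]" >&2
    return 1
  fi
  require profile "$1" || return 1
  require access_key "$2" || return 1
  require secret_key "$3" || return 1
  require session_token "$4" || return 1
  require region "$5" || return 1
  profile="$1"; access_key="$2"; secret_key="$3"; session_token="$4"; region="$5"
  job_config="${6:-}"   # optional: some jobs need no configuration
}

# Store the short-term credentials in a named AWS CLI profile so later
# commands can be run with: aws ... --profile "$profile"
configure_profile() {
  aws configure set aws_access_key_id "$access_key" --profile "$profile"
  aws configure set aws_secret_access_key "$secret_key" --profile "$profile"
  aws configure set aws_session_token "$session_token" --profile "$profile"
  aws configure set region "$region" --profile "$profile"
}
```

A script built this way would call `validate_args "$@" || exit 1` first and `configure_profile` second, so a missing value stops the container before any AWS call is attempted.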

Passing credentials to the container

Recall that I have this code at the bottom of my testexecute.sh script:

I can use that in a script that runs in CloudShell to pass credentials into the container less the job config parameter. However I’ll need to get the credentials to pass to the container from CloudShell. How can I do that?

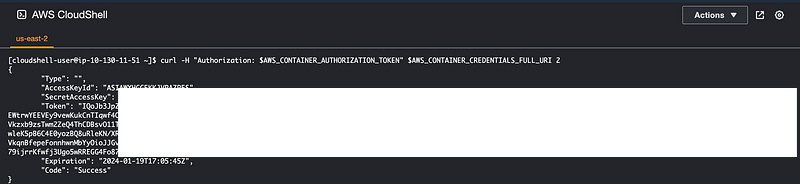

Open up CloudShell and run this command:

curl -H "Authorization: $AWS_CONTAINER_AUTHORIZATION_TOKEN" $AWS_CONTAINER_CREDENTIALS_FULL_URI 2>/dev/null

Well look at that. Short term credentials in CloudShell in the root account where SCPs are not enforced.

Can I use those credentials outside of CloudShell? Why not?
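To make the question concrete: the AWS CLI and SDKs read short-term credentials from the standard AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_SESSION_TOKEN environment variables, so anyone holding the three values from that JSON can present them this way. The sketch below assumes jq is installed; the function name `export_creds_from_json` is made up for illustration. Whether a given call succeeds still depends on the permissions and any conditions attached to the session.

```shell
# Export credentials from a CloudShell-style credentials JSON document
# as the standard AWS environment variables. Requires jq.
export_creds_from_json() {
  creds_json="$1"
  AWS_ACCESS_KEY_ID=$(printf '%s' "$creds_json" | jq -r '.AccessKeyId')
  AWS_SECRET_ACCESS_KEY=$(printf '%s' "$creds_json" | jq -r '.SecretAccessKey')
  AWS_SESSION_TOKEN=$(printf '%s' "$creds_json" | jq -r '.Token')
  export AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN
}

# In CloudShell (not executed here):
#   creds=$(curl -s -H "Authorization: $AWS_CONTAINER_AUTHORIZATION_TOKEN" \
#     "$AWS_CONTAINER_CREDENTIALS_FULL_URI")
#   export_creds_from_json "$creds"
# On any machine where those three variables are set:
#   aws sts get-caller-identity
```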

Do you see why running commands in CloudShell in a browser may be of concern to a security team? Those credentials may be exposed to:

- Code in the browser written by the company that provided your browser.

- Plug-ins you installed in your browser that may leverage a vulnerability somehow.

- Vulnerabilities on your system that allow injection of commands into CloudShell when you’re operating in it.

- Any sort of web flaw that can get cross tab access. Hopefully there are none but others have demonstrated flaws in CloudShells provided by cloud vendors in the past — and browsers and related extensions. Huge attack surface.

Just saw this browser vulnerability after writing this, for example:

I’d rather keep those credentials out of my browser, personally. You can’t really prevent use of CloudShell by the root account in your management account — which is why you should never use that user once you’ve set up other options. You can limit use of CloudShell in IAM policies and SCPs for all but the management account where SCPs don’t apply and you can’t put restrictions on the root user.
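As a rough sketch of the SCP side of that, the following writes a policy document that denies every CloudShell action and wraps the AWS Organizations call that would create it. The file name, policy name, description, and the function name `create_deny_cloudshell_scp` are placeholders, not something from this series.

```shell
# Write a service control policy that denies every CloudShell action.
cat > deny-cloudshell-scp.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyCloudShell",
      "Effect": "Deny",
      "Action": "cloudshell:*",
      "Resource": "*"
    }
  ]
}
EOF

# Create the policy in AWS Organizations. Not called automatically;
# run it with credentials that are allowed to manage organization policies.
create_deny_cloudshell_scp() {
  aws organizations create-policy \
    --name DenyCloudShell \
    --description "Deny all AWS CloudShell actions" \
    --type SERVICE_CONTROL_POLICY \
    --content file://deny-cloudshell-scp.json
}
```

The policy only takes effect once it is attached to an organizational unit or account with `aws organizations attach-policy`, and as noted above it would not restrict the management account.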

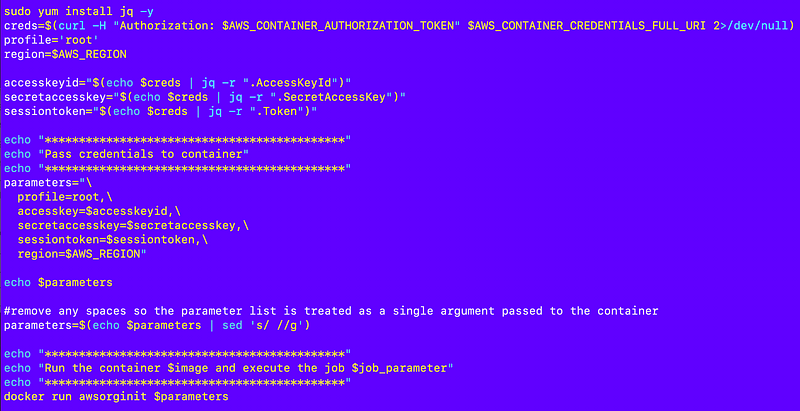

In any case I will use those short term credentials by passing them into my container running in CloudShell to execute my initial commands to create and initialize our organization. We have everything we need there to obtain the parameters we need to pass into the container.

I’m going to need to push my code to the repositories but once I do that I can execute a script something like this to get the code, build the container, obtain the tokens, and pass them to the container.

#the one parameter we need is the organization name

#that can be deployed to a parameter and used by the job

echo "Enter the organization name:"

read name

#deploy the job parameter with the organization name.

git clone https...AWSJobExecFramework

git clone https...job-awsorginit

cd AWSJobExecFramework

./scripts/build.sh awsorginit

#get the CloudShell credentials:

sudo yum install jq -y

creds=$(curl -H "Authorization: $AWS_CONTAINER_AUTHORIZATION_TOKEN" "$AWS_CONTAINER_CREDENTIALS_FULL_URI" 2>/dev/null)

ACCESS_KEY=$(echo "$creds" | jq -r .AccessKeyId)

SECRET_KEY=$(echo "$creds" | jq -r .SecretAccessKey)

SESSION_TOKEN=$(echo "$creds" | jq -r .Token)

profile='root'

parameters = [parameters list shown above]

docker run awsorginit $parameters

Create the repositories and push code to them

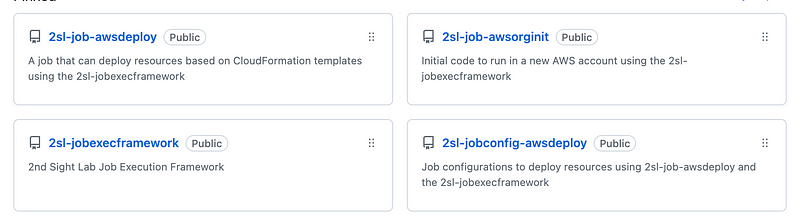

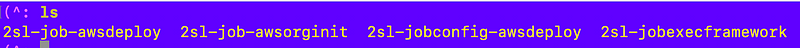

I created the new repositories on GitHub and checked in my code.

Note that I changed the names slightly. I include 2sl- in front to indicate this is a 2nd Sight Lab (no s) repository not from some third party I cloned or forked.

All the repositories start with “2sl-job” now so they will show in the same place in an alphanumeric sort of repositories or folders.

I took AWS out of the name of the jobexecution repository since jobs can do other things besides operate on AWS. For example, I can use a job to deploy a GitHub repository. I would need to make some adjustments but stay tuned for that.

By the way GitHub has this new nifty .gitignore template feature but unfortunately nothing for AWS. So I added my own .gitignore file and my license file.

I created a new folder on my system for this code named “job.”

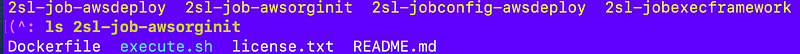

I clone those four directories into that folder:

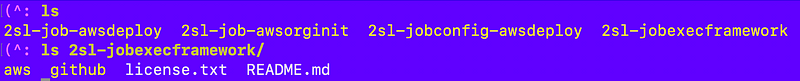

Now all my relative file paths should work — except that I am going to make changes yet again. Remember how I said I might have jobs for GitHub or AWS or something else? I’m going to modify the 2sl-jobexecframework folders to start with the service name at the root. The service name is the service that I need to authenticate to in order to perform the job actions. Each service is likely going to have a separate authentication mechanism.

Oh, and by the way, no matter how much people want to convince you that Terraform deploys anything anywhere with a single template — bear in mind that you still have to write custom code for each cloud provider. It’s not like you just plug in your user name and password and deploy the same template everywhere. Some of the code is the same, abstracted out as much as possible like mine, but some of it has to be vendor specific. It’s still a great tool, I’m just explaining that it’s not exactly the holy grail of single step cross-cloud deployments that some people think it is.

You’re also layering code on top of code so you’re increasing, not decreasing, the code used to deploy resources. That’s not necessarily bad if all the code is free from bugs and security problems and it reduces the time it takes humans to deploy things, which I think it does for some organizations. But each of those new lines of code and additional complexity can lead to more bugs or vulnerabilities, and you have to provide credentials to that tool to operate in your account. So review it carefully.

Like Terraform, I have subsets of code to authenticate to and deploy resources to different cloud providers. With this solution, you can also add your own jobs if you want to extend the platform. Just remember the code and concepts described here are for your own use, not to be embedded into a commercial product or service. If you would like to do that please contact me for a license.

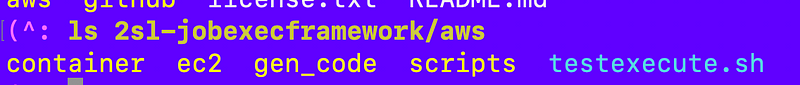

But anyway, for now, I have these folders in the job exec repository root:

The following files from prior posts are in the aws directory (note that I changed container to “job” later down the line):

And the following files are in the 2sl-job-awsorginit repository:

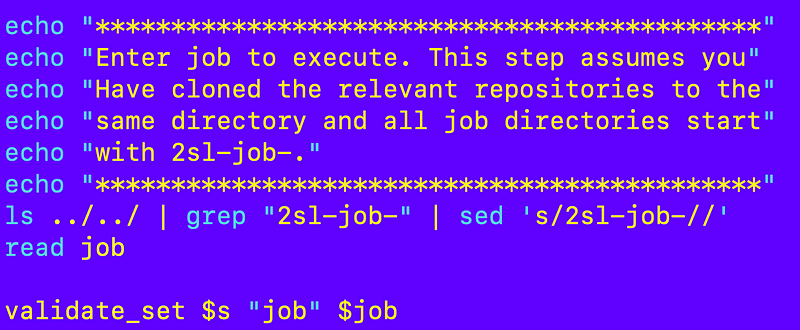

I need to change this code that lists the jobs to move up one directory and add 2sl.

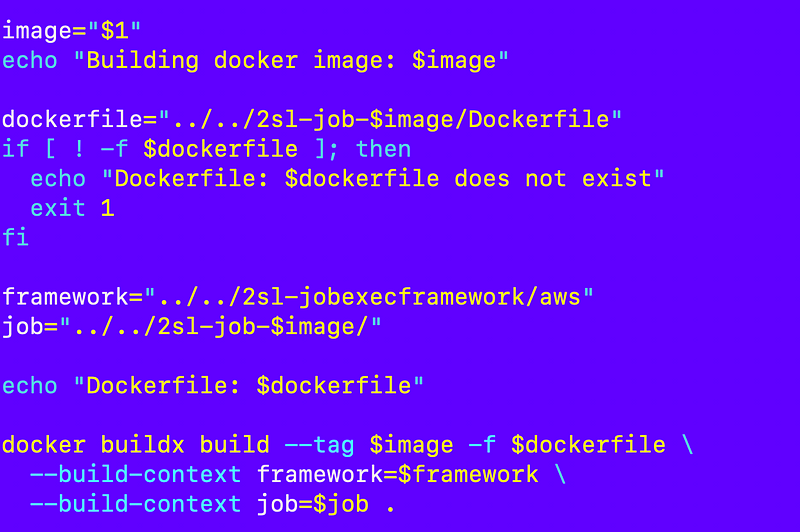
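One way to list jobs with this layout, sketched under the assumption that every job repository is cloned next to the framework and named 2sl-job-<name>. The function name `list_jobs` is hypothetical and this is not the code in the repository.

```shell
# List job names found in sibling directories named 2sl-job-<name>.
# base_dir is the folder that contains all the cloned repositories;
# it defaults to one directory up.
list_jobs() {
  base_dir="${1:-..}"
  for dir in "$base_dir"/2sl-job-*/; do
    [ -d "$dir" ] || continue      # the pattern matched nothing
    name=$(basename "$dir")
    echo "${name#2sl-job-}"        # strip the prefix, e.g. awsorginit
  done
}
```

Because the pattern includes the trailing hyphen, 2sl-jobexecframework itself is not reported as a job.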

And a few adjustments to my build script:

I test out my test script to make sure it can still build the 2sl-job-awsorginit container and it works.

Script to build and execute the container

Next I created a script I can run in CloudShell to do the following:

- Clone the repositories

- Build the container

- Get CloudShell credentials.

- Run the container and pass in the credentials.
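The first two steps could be sketched as below. REPO_BASE is a placeholder because the real repository URLs are not spelled out here, and the build script path and argument follow the earlier draft in this post, so they may differ after the folder restructuring. The `run` helper and the DRY_RUN switch are additions for previewing the commands, in keeping with testing one thing at a time.

```shell
# Placeholder base URL; replace with the real location of the repositories.
REPO_BASE="${REPO_BASE:-https://github.com/YOUR_ORG}"
REPOS="2sl-jobexecframework 2sl-job-awsorginit"

# Print the command instead of running it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Clone each repository, or update it if it was already cloned.
clone_repos() {
  for repo in $REPOS; do
    if [ -d "$repo/.git" ]; then
      run git -C "$repo" pull --ff-only
    else
      run git clone "$REPO_BASE/$repo.git"
    fi
  done
}

# Build the job container with the framework's build script
# (path and argument assumed from the draft script earlier in this post).
build_container() {
  run sh -c 'cd 2sl-jobexecframework && ./scripts/build.sh awsorginit'
}
```

Running `DRY_RUN=1 clone_repos` prints the git commands without touching the disk, which is handy when space is tight.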

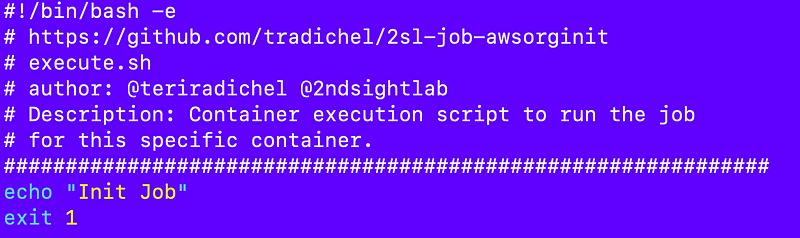

I want to test one thing at a time so I’m going to echo out a comment at the top of the execute script and exit. I’m not running this currently in the root account because I want to use those credentials as little as possible.

When I do test in a root account I can use a backup AWS account to reduce the exposure of my production account until I know the script works.

Next I add a new script called init.sh to the 2sl-job-awsorginit folder.

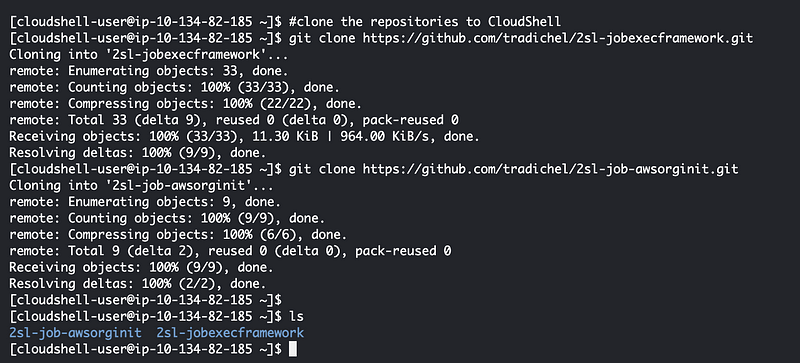

I test cloning the repos in CloudShell:

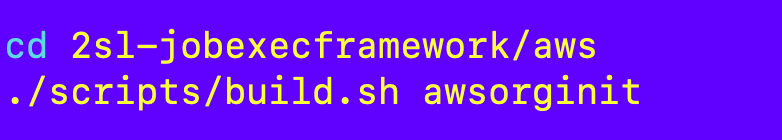

Next I add a couple of lines to test building the container.

Simple, right? This works on my EC2 instance so it should just work in CloudShell. But it doesn’t.

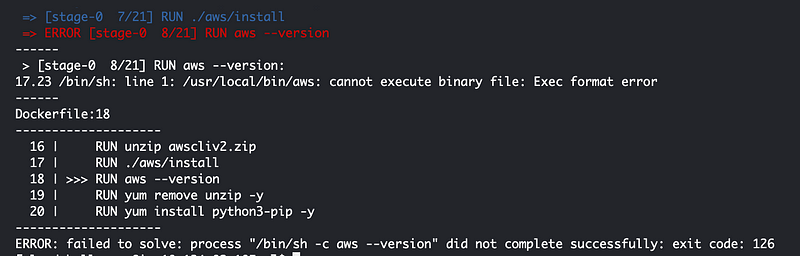

This is kind of a weird place to get an error:

Is it the architecture?

I wrote about architectures here:

and here:

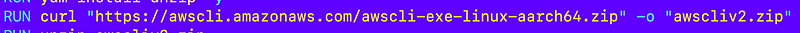

I am deploying the arm version of the AWS CLI:

The container is generically using an Amazon Linux Container:

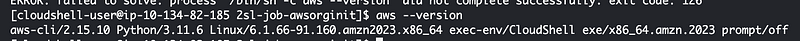

What is CloudShell running on? Let’s check the AWS CLI version:

Aha! Another good reason to use a separate container for this purpose. CloudShell is running on x86 and I suspect that we need to use that architecture for the AWS CLI since that is the underlying architecture of the host running the container.
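One way to avoid hard-coding the architecture is to choose the AWS CLI v2 download from what `uname -m` reports. This is a sketch rather than the change made to the container in this post, and the function name `awscli_url_for_arch` is made up.

```shell
# Map the machine architecture reported by `uname -m` to the matching
# AWS CLI v2 installer for Linux.
awscli_url_for_arch() {
  case "$1" in
    x86_64)
      echo "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" ;;
    aarch64|arm64)
      echo "https://awscli.amazonaws.com/awscli-exe-linux-aarch64.zip" ;;
    *)
      echo "unsupported architecture: $1" >&2
      return 1 ;;
  esac
}

# Example (not executed here): download the installer for this machine.
#   curl -o awscliv2.zip "$(awscli_url_for_arch "$(uname -m)")"
```

Because the installer file names match what `uname -m` prints on Linux, the same idea can be used in a Dockerfile so the image builds on either an ARM EC2 instance or x86 CloudShell.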

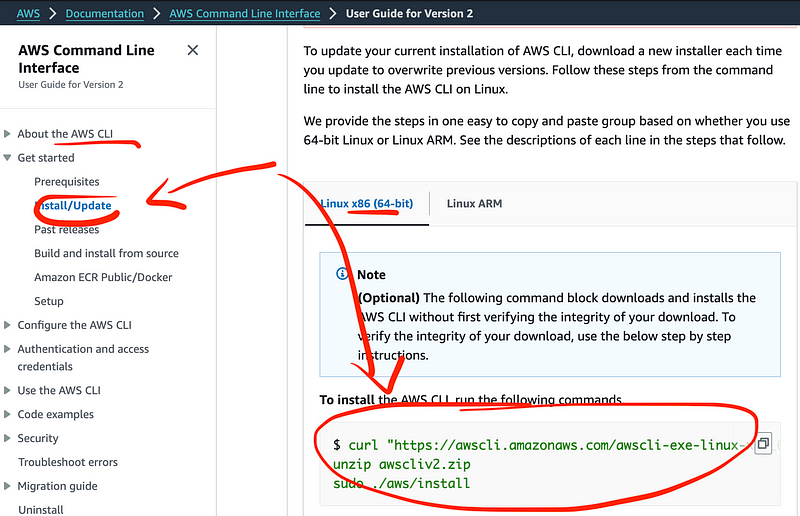

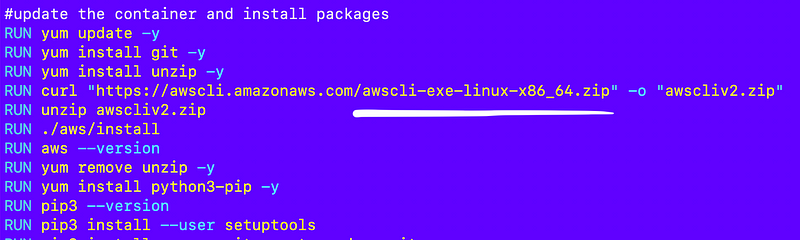

I obtain the link to download the necessary version of the AWS CLI:

I update the respective line in my container:

So that worked for that particular error. Very slowly…but it worked…I have thoughts on that issue but I’ll save them for later.

It worked up to the point where I try to install jq on the container.

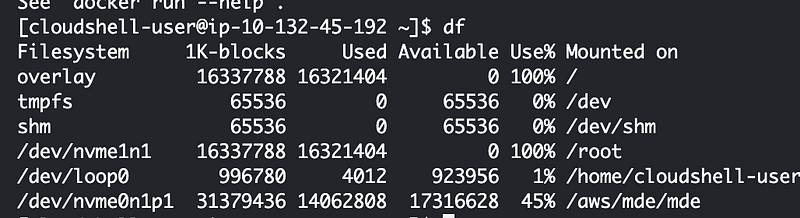

I removed some of the unnecessary components from my container thinking maybe if it is smaller that will work. But instead of that fixing the problem, I get this error that the “device” is out of space.

On an openssl library…??

Which device? My container or the disk for CloudShell?

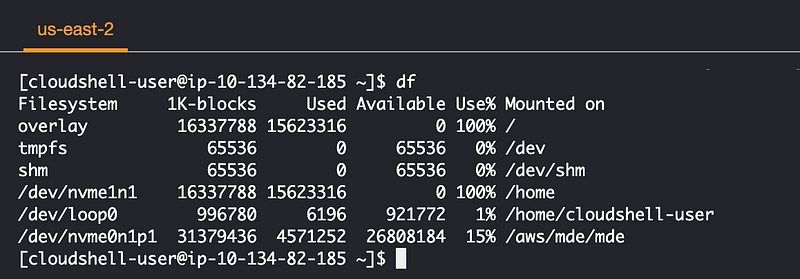

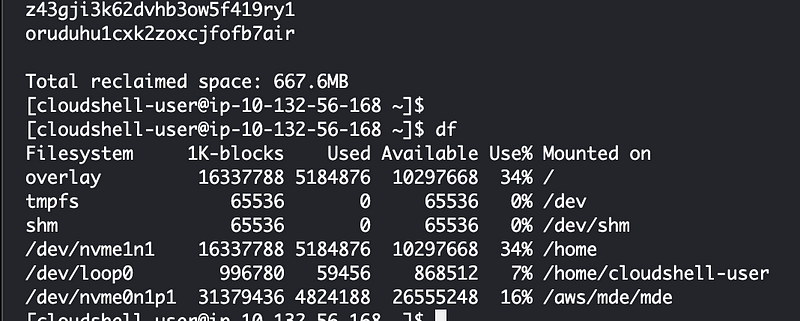

I run df:

I delete some files but that doesn’t clear up any space.
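To narrow down which filesystem is actually full, it can help to check free space per path instead of reading the whole df listing. A small sketch with hypothetical helper names:

```shell
# Print the free space, in kilobytes, of the filesystem that holds a path.
avail_kb() {
  df -Pk "${1:-.}" | awk 'NR == 2 { print $4 }'
}

# Succeed only if at least min_kb kilobytes are free on that filesystem.
has_space() {
  path="$1"; min_kb="$2"
  [ "$(avail_kb "$path")" -ge "$min_kb" ]
}

# Examples (not executed here):
#   has_space "$HOME" 1048576 || echo "less than 1 GiB free in home"
#   avail_kb "$(docker info --format '{{.DockerRootDir}}')"
#   docker system df    # space used by images, containers, and build cache
```

Deleting files from the home directory would not help if the full filesystem is the one that holds Docker's data, which is what the second and third examples are meant to check.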

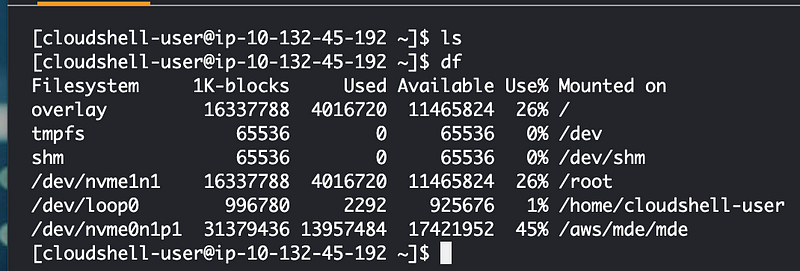

Out of curiosity I try a different region:

Let’s try building in this region with the smaller container.

Well that worked but it was very, very slow. So besides the reasons above related to security, CloudShell performance and space are limited. But it will work for certain appropriate use cases. Also, this is not my final solution, hopefully.

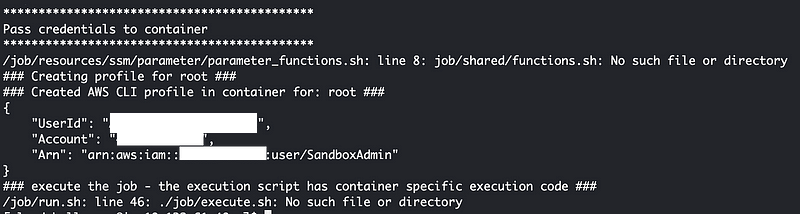

The next thing I need to do is parse out the CloudShell credentials and create my parameter list to pass in to the container.

I’m going to use the credential parsing code above.

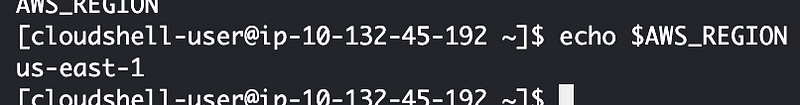

Luckily it’s easy to get the current region:

I test my code to verify that I’m parsing the parameters correctly and then I add the line to run the container:
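A sketch of what that step might look like, assuming the region is available in AWS_REGION or AWS_DEFAULT_REGION and that run.sh takes its arguments in the order profile, access key, secret key, session token, region. The order and the function name `run_job_container` are assumptions. The variable names match the credential parsing code earlier in this post.

```shell
# Run the job image, passing credentials as positional arguments in the
# assumed order: profile, access key, secret key, session token, region.
# DOCKER can be overridden (for example DOCKER=echo) to preview the command.
run_job_container() {
  image="$1"
  region="${AWS_REGION:-${AWS_DEFAULT_REGION:-}}"
  if [ -z "$region" ]; then
    echo "error: no region found in AWS_REGION or AWS_DEFAULT_REGION" >&2
    return 1
  fi
  "${DOCKER:-docker}" run --rm "$image" \
    "$profile" "$ACCESS_KEY" "$SECRET_KEY" "$SESSION_TOKEN" "$region"
}

# Example (not executed here):
#   profile='root'
#   run_job_container awsorginit
```

The `--rm` flag removes the container when it exits, which matters when disk space is this tight. Keep in mind that arguments passed this way are visible in the process list and in `docker inspect`, so it only suits a short-lived, single-user environment.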

One more thing — I have to update the path in run.sh to the correct path for execute.sh:

After fixing a few more bugs I’m back to this even though I’m not adding a lot more code — no space left on device:

Welp. Let’s head back over to the other region. Looks like we have some space here.

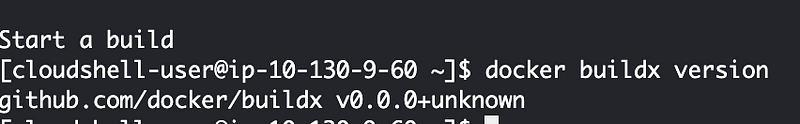

So I start testing again. This is when I realize files are not correctly copied to the container using the build contexts in the last post. I don’t know if that is because I have an old version of buildx in CloudShell or it never worked in the first place.

Well, I ran out of space again, but this time I had containers when I ran this command (I didn’t the first time I saw the error because the images and containers never got built):

docker ps -a

So I deleted them:

docker rm <id>

Deleting two containers freed up enough space.

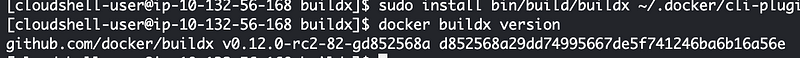

So I verified that the buildx command is doing pretty much nothing and the container doesn’t have the files at the specified contexts. Is this because it is an old version of buildx? Back to the last post to try to install buildx, if there is enough space for that.

I figured out that rebooting CloudShell after deleting files frees up space.

I attempt to build buildx in CloudShell again.

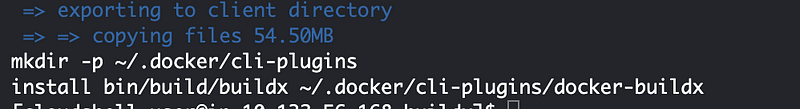

Luckily it executed successfully (took a long time):

I ran the last two commands there, using sudo for the last one as explained in the last post, and got the updated version:

Next I removed the buildx directory to clear up space and run my init script again. Wish me luck. 🤞

Seems to be working and I see the context copies getting highlighted more prominently now:

And….I’m almost to the end when I get an out of space error again.

I have no images and no containers so that is not the issue.

At this point I try the following command:

docker system prune

That seems to help.

I also run this command. It didn’t do much but it cleared up a bit of space:

sudo yum clean all

After repeatedly getting this error I resort to pruning and cleaning and restarting between each build.
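The prune-and-clean routine can be wrapped in one function so it is a single command between builds. This is a sketch: the function name and the DRY_RUN switch are additions, and the `-f` flag is there only to skip Docker's confirmation prompt. Restarting CloudShell is still a manual step.

```shell
# Free disk space between builds. Set DRY_RUN=1 to print the commands only.
cleanup_build_space() {
  for cmd in \
    "docker system prune -f" \
    "sudo yum clean all" \
    "df -h ."
  do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "+ $cmd"
    else
      # Unquoted on purpose so the string splits into command and arguments.
      $cmd || echo "warning: '$cmd' failed" >&2
    fi
  done
}
```

The final `df -h .` shows how much space the cleanup actually recovered before the next build starts.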

Then I realize I need to make a few more path changes to get everything working. You’ll see them in the Docker files and other files; I’m not going to bore you with them here because this all took far too long.

In fact, I didn’t resolve all the path issues yet but I spent way too long on this already so saving this for another post. I got the container running and I got the credentials passed to the container successfully.

No spell checking on this post because I feel like I didn’t even accomplish much. I spent the whole time troubleshooting and trying to clear up space for something that should be simple to run.

But then, likely you’ll want to pull containers from a repository in most cases. It’s just this initial case where I don’t yet have a repository that I want to build the container. I hope that AWS will update buildx soon as that would help. Other than that, reboot CloudShell a lot. 🫤

I finished this container in the next post:

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2024

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab