upload failed: ./[xyz] to s3://abc/xyz An error occurred (AccessDenied) when calling the PutObject operation: Access Denied

Error uploading a file to an S3 bucket

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

⚙️ Check out my series on Automating Cybersecurity Metrics | Code.

🔒 Related Stories: Bugs | AWS Security | Secure Code

💻 Free Content on Jobs in Cybersecurity | ✉️ Sign up for the Email List

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

How many years have I been writing S3 bucket policies and IAM policies? At least 10. I’d like to think I’m a halfway decent programmer, though I realize I’ve been doing this way too long and I’m not as quick as I used to be. But that’s where experience is supposed to kick in, right?

I still think programming is fun, but when I hit the same non-obvious error messages over and over, I think there must be a better way. I’ve often thought about writing a better IAM and resource policy generator, since AWS doesn’t have very good options. I’m using the IAM policy generator now, and it’s not working. I have standard bucket policies I’ve used for years, but I’m trying to do something different here, so I have been testing with the visual editor, with plans to automate further.

You know that if I’m still having problems every time I write an S3 bucket policy, beginners are really struggling. And this is why we have security problems and lax security when it comes to resource and IAM policies. It’s hard. Too hard. People can’t figure out what’s wrong, so they open up one thing after another and leave it that way.

For networking, I wrote a tool to help people figure out what ports their applications require. Cloud providers could provide better error messages and sample zero-trust policies to help people get things done more easily.

~~~~~~~~

But I digress.

~~~~~~~~

So I currently have a policy that grants a role PutObject permission on a specific bucket, and it is not working. Let’s figure this out. I did something wrong.

For testing purposes, I’m starting with IAM only and no bucket policy, so the IAM role is the only thing that should be causing my access to fail. Let’s take a look.

In my policy I have one S3 statement that allows all read and list actions on any bucket. I tried to restrict that with conditions such as MFA, IP address, and a specific OU, but as noted in a prior post, none of those are working, so they aren’t included here.

My policy should also allow all read and list access to local buckets, along with the cross-account buckets, which are working.

Next I added the PutObject permission to a specific bucket in my account. Welp. That’s not working. Let’s revisit that policy.

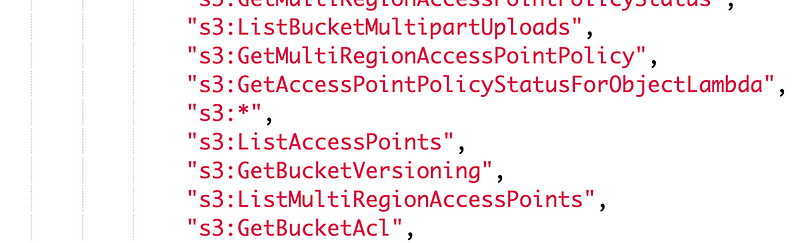

Oh, that’s right. I just clicked *all* write actions, since I already had all read and list actions for all S3 resources, and here’s what the visual editor created:

```json
{
  "Sid": "VisualEditor3",
  "Effect": "Allow",
  "Action": [
    "s3:PutAnalyticsConfiguration",
    "s3:PutAccelerateConfiguration",
    "s3:DeleteObjectVersion",
    "s3:RestoreObject",
    "s3:CreateBucket",
    "s3:ReplicateObject",
    "s3:PutEncryptionConfiguration",
    "s3:DeleteBucketWebsite",
    "s3:AbortMultipartUpload",
    "s3:PutLifecycleConfiguration",
    "s3:DeleteObject",
    "s3:DeleteBucket",
    "s3:PutBucketVersioning",
    "s3:PutIntelligentTieringConfiguration",
    "s3:PutMetricsConfiguration",
    "s3:PutBucketOwnershipControls",
    "s3:PutReplicationConfiguration",
    "s3:PutObjectLegalHold",
    "s3:InitiateReplication",
    "s3:PutBucketCORS",
    "s3:PutInventoryConfiguration",
    "s3:PutObject",
    "s3:PutBucketNotification",
    "s3:PutBucketWebsite",
    "s3:PutBucketRequestPayment",
    "s3:PutObjectRetention",
    "s3:PutBucketLogging",
    "s3:PutBucketObjectLockConfiguration",
    "s3:ReplicateDelete"
  ],
  "Resource": [
    "arn:aws:s3:::my.bucket/*",
    "arn:aws:s3:::my.bucket"
  ]
}
```

Note that I added both resource variations: the bucket itself and any object in the bucket. That’s because it’s annoying to try to remember which actions apply to a bucket and which apply to an object, and then write multiple hard-to-read, convoluted statements. Is that causing my problem? It shouldn’t be, because this statement should allow all write access to the bucket and the other statement should allow all read access.
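If the annoying part is remembering which actions take a bucket ARN and which take an object ARN, that is something a small script can carry. The sketch below assumes a hand-picked, partial mapping for illustration (the authoritative list is the Amazon S3 page of the AWS Service Authorization Reference); `split_statements` and the Sid names are hypothetical, not an AWS tool. It emits one statement per resource type and refuses actions it doesn’t know rather than guessing.

```python
import json

# Partial, hand-picked mapping for illustration only. Check the AWS Service
# Authorization Reference for the resource type of any action not listed here.
OBJECT_ACTIONS = {
    "s3:PutObject", "s3:DeleteObject", "s3:DeleteObjectVersion",
    "s3:RestoreObject", "s3:AbortMultipartUpload", "s3:PutObjectRetention",
    "s3:PutObjectLegalHold", "s3:ReplicateObject", "s3:ReplicateDelete",
}
BUCKET_ACTIONS = {
    "s3:CreateBucket", "s3:DeleteBucket", "s3:ListBucket",
    "s3:PutBucketVersioning", "s3:PutBucketCORS",
    "s3:PutEncryptionConfiguration", "s3:PutLifecycleConfiguration",
}


def split_statements(bucket: str, actions: list) -> list:
    """Build one statement per resource type instead of pairing every
    action with both the bucket ARN and the bucket/* ARN."""
    known = OBJECT_ACTIONS | BUCKET_ACTIONS
    unknown = [a for a in actions if a not in known]
    if unknown:
        raise ValueError(f"look up the resource type for: {unknown}")
    bucket_arn = f"arn:aws:s3:::{bucket}"
    object_actions = sorted(a for a in actions if a in OBJECT_ACTIONS)
    bucket_actions = sorted(a for a in actions if a in BUCKET_ACTIONS)
    statements = []
    if object_actions:
        statements.append({"Sid": "ObjectLevel", "Effect": "Allow",
                           "Action": object_actions, "Resource": f"{bucket_arn}/*"})
    if bucket_actions:
        statements.append({"Sid": "BucketLevel", "Effect": "Allow",
                           "Action": bucket_actions, "Resource": bucket_arn})
    return statements


if __name__ == "__main__":
    policy = {"Version": "2012-10-17",
              "Statement": split_statements("my.bucket", ["s3:PutObject", "s3:ListBucket"])}
    print(json.dumps(policy, indent=2))
```

Raising on unknown actions is deliberate: silently attaching both ARNs is exactly the habit the script is meant to replace.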

But just for sanity purposes, I’ll allow all actions on this bucket and see what happens. BTW, that’s not the real bucket name.

Alright, I give.

It still doesn’t work. I have s3:* on all resources (*).
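When even s3:* on * fails, it helps to remember what an identity policy can and can’t say. The following is a deliberately simplified model of IAM evaluation for a single identity policy: an explicit Deny wins, otherwise any matching Allow, otherwise an implicit deny. It ignores conditions, NotAction/NotResource, resource policies, KMS key policies, SCPs, permission boundaries, and session policies; `evaluate` is a hypothetical helper and the KMS key ARN is made up. What it shows is that s3:* only ever covers S3 actions, so a request that also depends on another service can still be denied.

```python
import re


def _wildcard_match(pattern: str, value: str, ignore_case: bool) -> bool:
    """IAM-style wildcards: '*' matches any run of characters, '?' matches one."""
    regex = "".join(
        ".*" if ch == "*" else "." if ch == "?" else re.escape(ch) for ch in pattern
    )
    return re.fullmatch(regex, value, re.IGNORECASE if ignore_case else 0) is not None


def _as_list(value):
    return value if isinstance(value, list) else [value]


def evaluate(statements: list, action: str, resource: str) -> str:
    """Simplified single-policy evaluation: explicit Deny > Allow > implicit deny.

    Ignores Condition, NotAction/NotResource, resource policies, KMS key
    policies, SCPs, permission boundaries, and session policies.
    """
    decision = "ImplicitDeny"
    for stmt in statements:
        # Action names are matched case-insensitively; ARNs are treated as case-sensitive here.
        action_hit = any(_wildcard_match(p, action, True) for p in _as_list(stmt["Action"]))
        resource_hit = any(_wildcard_match(p, resource, False) for p in _as_list(stmt["Resource"]))
        if action_hit and resource_hit:
            if stmt["Effect"] == "Deny":
                return "ExplicitDeny"
            decision = "Allow"
    return decision


if __name__ == "__main__":
    policy = [{"Effect": "Allow", "Action": "s3:*", "Resource": "*"}]
    print(evaluate(policy, "s3:PutObject", "arn:aws:s3:::my.bucket/report.csv"))  # Allow
    # Hypothetical key ARN: an upload to an SSE-KMS bucket also needs KMS permissions.
    print(evaluate(policy, "kms:GenerateDataKey",
                   "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE"))  # ImplicitDeny
```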

Conditions and MFA

As in my last post, I have MFA required on the KMS portion of the policy, and MFA does not show up for the assumed role in CloudTrail, so let’s remove that.

I’ve confirmed you can’t use those conditions with assumed roles.
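To see whether a session actually carried MFA, one place to look is the CloudTrail record itself: role sessions log userIdentity.sessionContext.attributes.mfaAuthenticated as the string "true" or "false". A minimal sketch, assuming the records have already been fetched and parsed into dicts; `mfa_authenticated` is a hypothetical helper and the sample record is made up and trimmed for illustration.

```python
from typing import Optional


def mfa_authenticated(record: dict) -> Optional[bool]:
    """Return whether a CloudTrail record's session was MFA-authenticated.

    Reads userIdentity.sessionContext.attributes.mfaAuthenticated, which
    CloudTrail logs as the string "true" or "false". Returns None when the
    record carries no such attribute.
    """
    attributes = (
        record.get("userIdentity", {})
        .get("sessionContext", {})
        .get("attributes", {})
    )
    value = attributes.get("mfaAuthenticated")
    if value is None:
        return None
    return str(value).lower() == "true"


if __name__ == "__main__":
    # Made-up, trimmed record for illustration only.
    sample = {
        "eventName": "PutObject",
        "userIdentity": {
            "type": "AssumedRole",
            "sessionContext": {"attributes": {"mfaAuthenticated": "false"}},
        },
    }
    print(mfa_authenticated(sample))  # False
```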

Encryption and KMS Keys

The only other thing I can think of is that the problem has to do with the KMS key assigned to the bucket. That’s not what the error message says, however. You would expect, or at least hope, that the error message would be more specific than this if it were a KMS key issue. I believe I granted access to the key, but let’s just remove encryption.

And finally, it works.

Now, this is *not* where I’m going to stop. If you’re a developer, don’t just stop when you get things working. Figure out why they weren’t working and fix it, and if it is a bug or feature request, shoot that over to AWS one way or another. You can tweet it with the hashtag #AWSWishList, and someone will probably get your request over to the appropriate AWS team.

In this case I know that my problem is related to one of the KMS error messages I wrote about before in this blog.

- Check the KMS Key policy and make sure my role is allowed to use the key.

- Check my IAM permissions and make sure the IAM role is allowed to use KMS for that key.
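Both checks come down to two pieces of policy. A minimal sketch, assuming the bucket uses SSE-KMS default encryption: uploads need kms:GenerateDataKey, and multipart uploads also need kms:Decrypt. The role and key ARNs are placeholders and `kms_statements` is a hypothetical helper. When the key and the role live in different accounts, both pieces are required: the key policy has to allow the role (or its account), and the role’s IAM policy has to allow the key.

```python
import json


def kms_statements(role_arn: str, key_arn: str) -> tuple:
    """Return (key_policy_statement, iam_policy_statement) letting role_arn
    use key_arn for S3 uploads to an SSE-KMS bucket."""
    # GenerateDataKey for uploads; Decrypt is also needed for multipart uploads.
    actions = ["kms:GenerateDataKey", "kms:Decrypt"]
    key_policy_statement = {
        "Sid": "AllowRoleToUseKeyForS3Uploads",
        "Effect": "Allow",
        "Principal": {"AWS": role_arn},
        "Action": list(actions),
        # In a key policy, "*" refers to the key the policy is attached to.
        "Resource": "*",
    }
    iam_policy_statement = {
        "Sid": "AllowKmsKeyForBucket",
        "Effect": "Allow",
        "Action": list(actions),
        "Resource": key_arn,
    }
    return key_policy_statement, iam_policy_statement


if __name__ == "__main__":
    # Placeholder ARNs for illustration only.
    key_stmt, iam_stmt = kms_statements(
        "arn:aws:iam::111122223333:role/report-builder",
        "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID",
    )
    print(json.dumps(key_stmt, indent=2))
    print(json.dumps(iam_stmt, indent=2))
```

The first statement goes into the key policy (the console’s “key users” section generates something similar, with more actions); the second goes into the role’s IAM policy.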

Oops. I forgot to add users to my KMS key policy. I could have sworn I did that, but like I said, I’ve been doing this far too long. That’s why I’m going to automate…everything. I’m just testing out the plan at the moment. For now, I’ll add the role to the key users list on the KMS key, re-enable encryption on the bucket, and I should be good to go.

Oh…hang on…I already allowed access for another account at the bottom of the key policy screen. Though I would prefer to add a specific role, I only have my report builder in that account, so I guess it’s OK.

Let’s check the IAM policy…

Here’s where I noticed the visual editor had stuck s3:* in the middle of all the other actions when I allowed it for my testing above.

And people wonder why I don’t generally like things that write code for you. I use them as a starting point, but never in production. Let’s clean that up because I really only need PutObject for the local bucket and read access to the remote buckets.

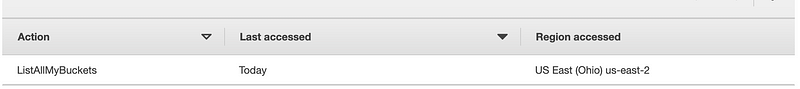

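When I navigate to Access Advisor in IAM for this role, even after a successful PutObject operation, I only see ListAllMyBuckets as the action. That is likely because IAM’s action-level last accessed information for S3 covers management actions, and PutObject is an object-level data action.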

I turned on server access logging on the S3 bucket and checked the CloudTrail logs to see what other bucket actions might be failing. It seems like there is some kind of delay between changing the policy and when the change actually takes effect: after I restricted my role back down, requests were failing, and then, while I was looking at the logs, it started working without any additional changes.
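IAM policy changes are eventually consistent, so a request made right after a change can still see the old permissions. If a script changes a policy and immediately relies on it, a bounded retry with exponential backoff is one way to ride out that window. This sketch assumes a hypothetical `AccessDenied` exception standing in for whatever your SDK raises; keep the attempt count small so a real misconfiguration still fails quickly instead of being hidden.

```python
import random
import time


class AccessDenied(Exception):
    """Hypothetical stand-in for the AccessDenied error your SDK raises."""


def retry_on_access_denied(operation, attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call operation(), retrying AccessDenied with exponential backoff.

    Intended only for the short window right after a policy change; the
    final AccessDenied is re-raised so real misconfigurations still surface.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except AccessDenied:
            if attempt == attempts - 1:
                raise
            # Waits base_delay * 1, 2, 4, ... plus random jitter up to base_delay.
            sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))
```

For example, `retry_on_access_denied(lambda: upload_report())`, where `upload_report` is your own (hypothetical) upload function. The `sleep` parameter exists so the helper can be tested without actually waiting.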

Here’s the policy I ended up with. It gives me read-only access to the remote buckets and PutObject on the local bucket (with no encryption currently).

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:ListAllMyBuckets",
        "s3:ListBucket"
      ],
      "Resource": "*"
    },
    {
      "Sid": "VisualEditor1",
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::my.bucket/*",
        "arn:aws:s3:::my.bucket"
      ]
    }
  ]
}
```

I wish I could further restrict access to remote buckets with a condition on the OU, but that doesn’t seem to work. Still, this role will only have access to buckets whose bucket policies explicitly allow cross-account access to this account, plus any local buckets. I’m trying to create a generic tool for certain projects in a specific OU, and I don’t want this tool reading buckets in any other account. I also only want it to be able to write to one local bucket. I think that part is OK.
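Since the goal is “read wherever this role is allowed, write to exactly one local bucket,” that rule can be checked mechanically before a policy ships. A minimal sketch, assuming any action that doesn’t start with s3:Get or s3:List counts as a write (so non-S3 actions get flagged for review too). It only checks *where* writes are allowed, not which write actions are granted, and it ignores Deny statements, NotAction/NotResource, and conditions. `unexpected_writes` is a hypothetical helper.

```python
def _as_list(value):
    return value if isinstance(value, list) else [value]


def unexpected_writes(policy: dict, allowed_write_resources: set) -> list:
    """List (action, resource) pairs where an Allow statement grants a
    non-read action on a resource outside allowed_write_resources.

    Assumes any action not starting with s3:Get or s3:List is a write.
    Ignores Deny statements, NotAction/NotResource, and Condition blocks.
    """
    findings = []
    for stmt in _as_list(policy["Statement"]):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action", [])):
            # IAM action names are case-insensitive, so compare lowercased.
            if action.lower().startswith(("s3:get", "s3:list")):
                continue
            for resource in _as_list(stmt.get("Resource", [])):
                if resource not in allowed_write_resources:
                    findings.append((action, resource))
    return findings
```

Run against the policy above with `{"arn:aws:s3:::my.bucket", "arn:aws:s3:::my.bucket/*"}` as the allowed set, it should report nothing; a stray s3:* on * would show up as a finding.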

Thoughts on my architecture: I could get around the problem of not being able to lock access down with OUs and MFA by copying the files this process needs to read from the external accounts into a specific bucket, instead of having this process reach out to buckets in other accounts. But then I have the reverse problem. Every account has write access to a single account, and that’s riskier than read access the other way around.

I could create a separate bucket per project in this account and limit each remote account to its own bucket in the central account, but then I risk a misconfiguration where one of those accounts can see everyone else’s data. I think managing the permissions for one single resource that has read-only access to all the other data is safer. But at some point I’ll probably have to write automation that only allows access to specific buckets, since the OU approach doesn’t seem to be working…or figure out what I’m doing wrong.

Now to figure out why KMS is denying access when I enable it…
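If the OU condition keeps failing, that automation could start as simply as generating the read statements from an explicit bucket list. A sketch under that assumption: the bucket names are hypothetical, `read_only_statements` is not an AWS API, ListBucket goes on bucket ARNs and GetObject on object ARNs, and wildcards are rejected so a typo can’t widen access to every bucket.

```python
import json


def read_only_statements(bucket_names: list) -> list:
    """Build least-privilege read statements for an explicit list of buckets.

    ListBucket applies to bucket ARNs and GetObject to object ARNs, so they
    go in separate statements. Wildcards are rejected so a typo can't widen
    access to every bucket.
    """
    names = sorted(set(bucket_names))
    if not names:
        raise ValueError("at least one bucket name is required")
    for name in names:
        if not name or "*" in name or "?" in name:
            raise ValueError(f"refusing wildcard or empty bucket name: {name!r}")
    bucket_arns = [f"arn:aws:s3:::{name}" for name in names]
    return [
        {"Sid": "ListProjectBuckets", "Effect": "Allow",
         "Action": "s3:ListBucket", "Resource": bucket_arns},
        {"Sid": "ReadProjectObjects", "Effect": "Allow",
         "Action": "s3:GetObject", "Resource": [f"{arn}/*" for arn in bucket_arns]},
    ]


if __name__ == "__main__":
    # Hypothetical bucket names for illustration.
    statements = read_only_statements(["project-a-reports", "project-b-reports"])
    print(json.dumps({"Version": "2012-10-17", "Statement": statements}, indent=2))
```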

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2022

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab

Need Help With Cybersecurity, Cloud, or Application Security?

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

🔒 Request a penetration test or security assessment

🔒 Schedule a consulting call

🔒 Cybersecurity Speaker for Presentation

Follow for more stories like this:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

❤️ Sign Up for my Medium Email List

❤️ Twitter: @teriradichel

❤️ LinkedIn: https://www.linkedin.com/in/teriradichel

❤️ Mastodon: @teriradichel@infosec.exchange

❤️ Facebook: 2nd Sight Lab

❤️ YouTube: @2ndsightlab