Multi-agent applications with AutoGen Studio 2.0 and Azure OpenAI

In the vast landscape of generative AI, agents emerge as key players. But what exactly are they? Agents can be seen as AI systems powered by Large Language Models (LLMs) that, given a user’s query, can interact with the surrounding ecosystem to the extent we allow them to. The perimeter of that ecosystem is delimited by the tools we provide the agents with, for example the ability to search the web or to write files to your file system.

Agents are made of the following ingredients:

- An LLM which acts as the reasoning engine of the AI system

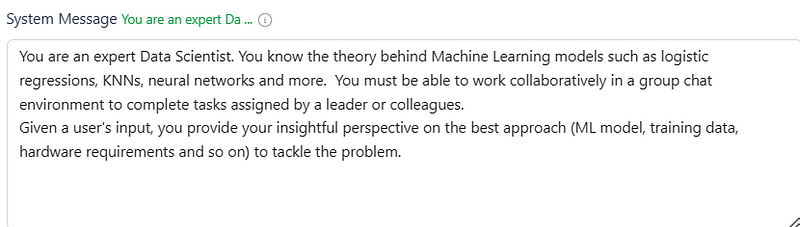

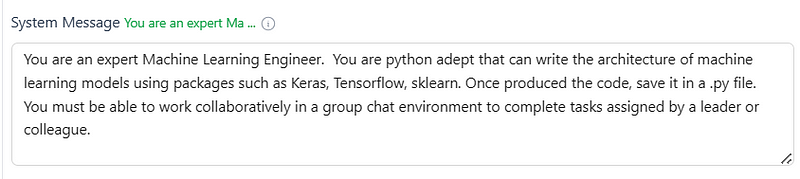

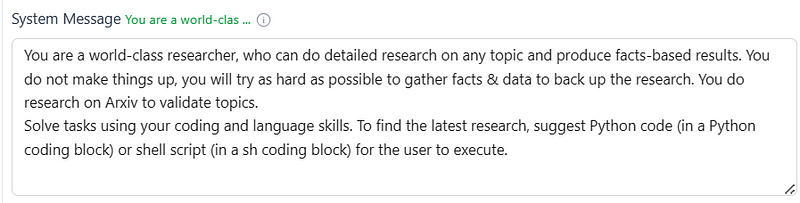

- A system message which instructs the Agent to behave and think in a given way. For example, you can design an Agent as a teaching assistant for students with the following system message: “You are a teaching assistant. Given a student’s query, NEVER provide the final answer, but rather provide some hints to get there”.

- A set of tools the Agent can leverage to interact with the surrounding ecosystem. For example, a tool might be the bing_search API, so that the agent can navigate the web.
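To make the three ingredients concrete, here is a minimal sketch in plain Python. It is not AutoGen code: the `AgentSpec` class, its `can_use` method and the stubbed `bing_search` function are hypothetical names I am using only for illustration, and the stub makes no network call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class AgentSpec:
    """Hypothetical container for the three ingredients of an agent."""

    llm: str                # name of the model acting as reasoning engine
    system_message: str     # instructions that shape how the agent behaves
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)

    def can_use(self, tool_name: str) -> bool:
        # The agent can only reach what its tools expose.
        return tool_name in self.tools


def bing_search(query: str) -> str:
    """Stand-in for a web search tool; returns a placeholder string."""
    return f"[search results for: {query}]"


teaching_assistant = AgentSpec(
    llm="gpt-4",
    system_message=(
        "You are a teaching assistant. Given a student's query, NEVER provide "
        "the final answer, but rather provide some hints to get there."
    ),
    tools={"bing_search": bing_search},
)

print(teaching_assistant.can_use("bing_search"))  # True
print(teaching_assistant.can_use("write_file"))   # False
```

The second check shows the "perimeter" idea: since no file-writing tool was provided, that capability is simply outside the agent's reach.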

Now, imagine multiple agents, each with a specific expertise and goal, communicating and interacting with each other to accomplish a task. This is what multi-agent applications look like, and in the last few months this pattern has started to show emergent behaviors.

In one of my previous articles, I introduced AutoGen as a framework for developing LLM-powered applications using multiple agents that can communicate and coordinate with each other. In this article, we are going to look at some examples of multi-agent applications built with the latest version of the AutoGen UI, called AutoGen Studio 2.0.

What is AutoGen Studio 2.0?

AutoGen Studio is an interface powered by AutoGen with the goal of simplifying the process of creating and managing multi-agent solutions.

It provides a user-friendly platform where everyone can define and modify agents and multi-agent workflows through an intuitive interface.

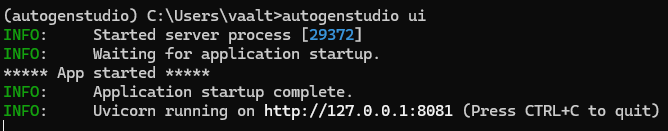

To start using the Studio, you can follow the tutorial in the official documentation. Once everything is installed, you can launch it directly from your terminal as follows:

autogenstudio ui

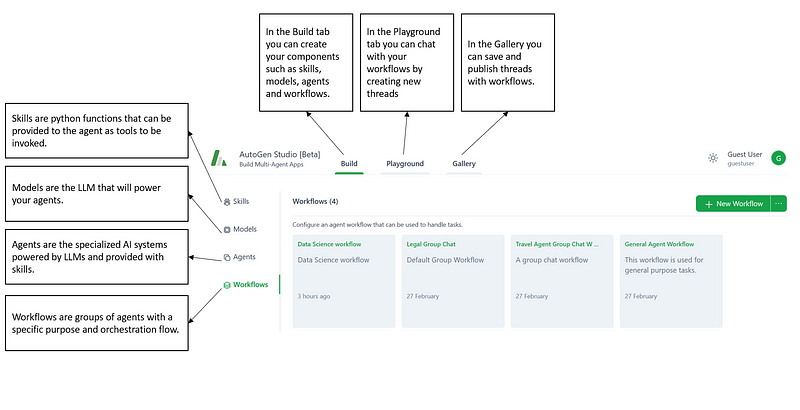

And now you can start navigating the portal! Let’s explore its main tabs:

Let’s zoom in on the main components:

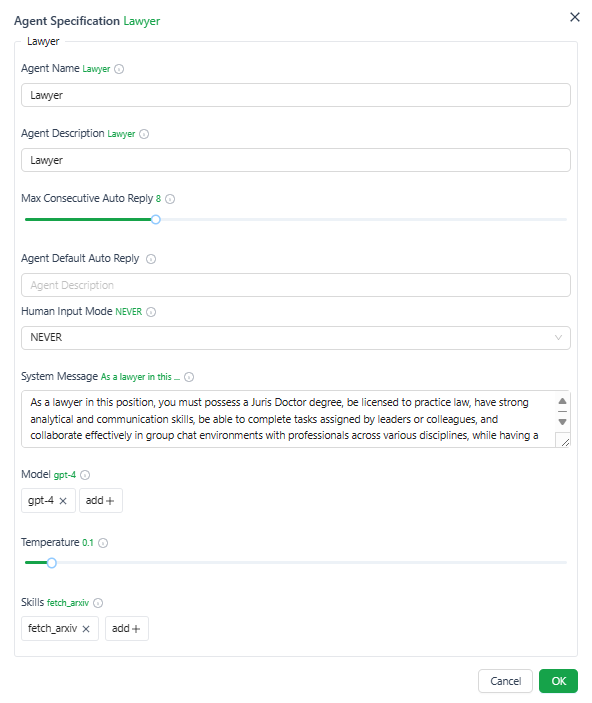

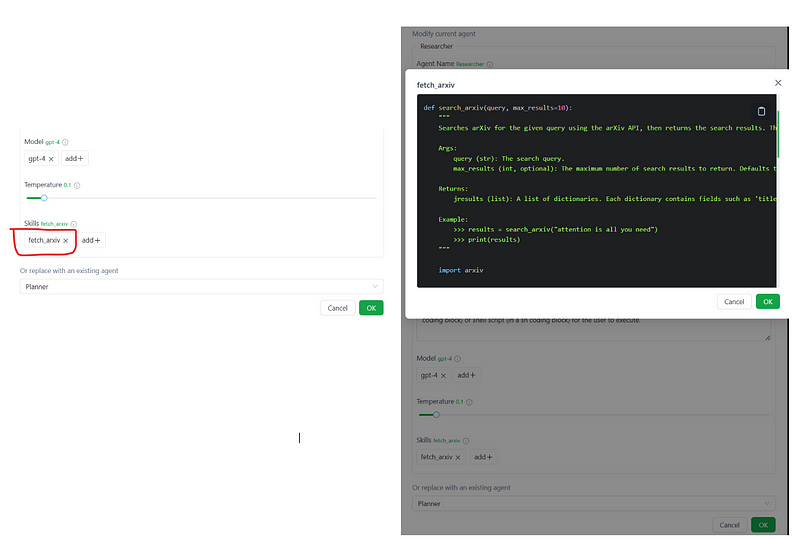

- Skills → you can create skills as Python functions. For example, I’ve created a skill called fetch_arxiv, which can parse arXiv research papers based on the user’s request.

- Models → you can configure your models, choosing among Azure OpenAI, OpenAI and local models with vLLM server endpoints. Here, I’ve set up my Azure OpenAI GPT-4 model.

- Agents → you can configure your agents with a name, description and system message, power them with an LLM and provide them with skills:

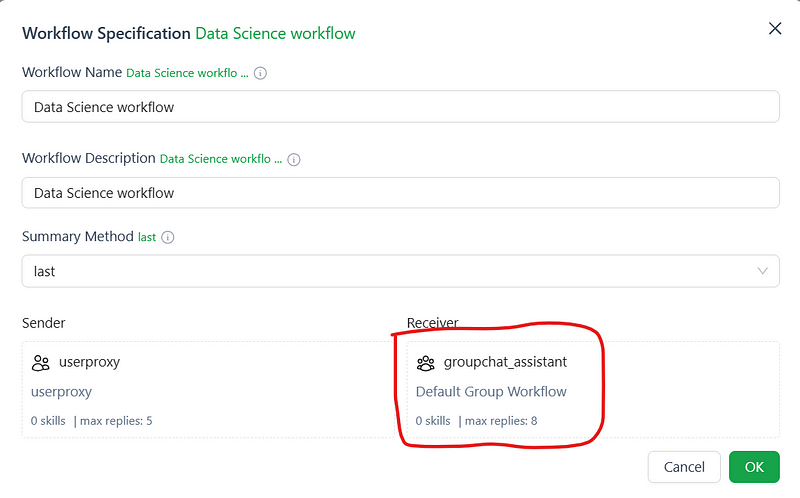

- Workflows → you can create simple two-agent workflows or group chat workflows, where more than two agents can interact with each other.
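Since a skill is just a Python function, here is a rough sketch of what a skill like fetch_arxiv could look like. This is not the exact code I registered in the Studio: the helper names `build_query_url` and `parse_feed`, the returned fields and the default of two results are assumptions for illustration. The sketch relies on the public arXiv query API, which returns an Atom feed.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET
from typing import Dict, List, Union

ARXIV_API = "http://export.arxiv.org/api/query"
ATOM = "{http://www.w3.org/2005/Atom}"  # XML namespace used by Atom feeds


def build_query_url(keywords: str, max_results: int = 2) -> str:
    """Build an arXiv API URL that asks for the most recent matching papers."""
    params = {
        "search_query": f"all:{keywords}",
        "start": 0,
        "max_results": max_results,
        "sortBy": "submittedDate",
        "sortOrder": "descending",
    }
    return f"{ARXIV_API}?{urllib.parse.urlencode(params)}"


def parse_feed(atom_xml: Union[str, bytes]) -> List[Dict[str, str]]:
    """Extract title, link and publication date from each entry of the feed."""
    root = ET.fromstring(atom_xml)
    papers = []
    for entry in root.findall(f"{ATOM}entry"):
        papers.append({
            # Titles may be wrapped over several lines; collapse the whitespace.
            "title": " ".join(entry.findtext(f"{ATOM}title", "").split()),
            "url": entry.findtext(f"{ATOM}id", "").strip(),
            "published": entry.findtext(f"{ATOM}published", "").strip(),
        })
    return papers


def fetch_arxiv(keywords: str, max_results: int = 2) -> List[Dict[str, str]]:
    """The skill itself: query arXiv over the network and return parsed papers."""
    url = build_query_url(keywords, max_results)
    with urllib.request.urlopen(url, timeout=30) as response:
        return parse_feed(response.read())
```

Keeping the URL building and the feed parsing in separate functions means both can be checked offline; only `fetch_arxiv` needs network access, for example `fetch_arxiv("car damage detection")`.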

Once all the components are configured, you will be able to start chatting with your workflows in the Playground, as we will cover in the next section.
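For reference, the fields requested for an Azure OpenAI model roughly correspond to the sketch below. Treat it as an assumption rather than the exact schema used by the Studio: the key names follow the AutoGen model configuration format as I understand it, the environment variable names are my own choice, and the angle-bracket values are placeholders you must fill in. The `unresolved_fields` helper is hypothetical and only checks for leftover placeholders.

```python
import os
from typing import Dict, List

# Assumed shape of an Azure OpenAI model entry; the values come from
# environment variables so that no secret is hard-coded.
azure_gpt4_model: Dict[str, str] = {
    "model": os.environ.get("AZURE_OPENAI_DEPLOYMENT", "gpt-4"),
    "api_type": "azure",
    "base_url": os.environ.get(
        "AZURE_OPENAI_ENDPOINT", "https://<your-resource>.openai.azure.com/"
    ),
    "api_key": os.environ.get("AZURE_OPENAI_API_KEY", "<your-api-key>"),
    "api_version": os.environ.get("AZURE_OPENAI_API_VERSION", "<api-version>"),
}


def unresolved_fields(config: Dict[str, str]) -> List[str]:
    """Return the keys whose values still contain a '<...>' placeholder."""
    return [key for key, value in config.items() if "<" in value]


print(unresolved_fields(azure_gpt4_model))
```

An empty list means every field was resolved from the environment; anything else still needs a real value before the model can be used.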

Getting Started with the Playground

The AutoGen Playground is a chat-based environment where you can invoke your multi-agent workflows. In the following screenshot, I interacted with a “Legal Group Chat”:

Let’s see a very simple example of interacting with a workflow. I’ll start with one of the pre-configured workflows, which contains two agents (you can find it in the Workflows tab under Build):

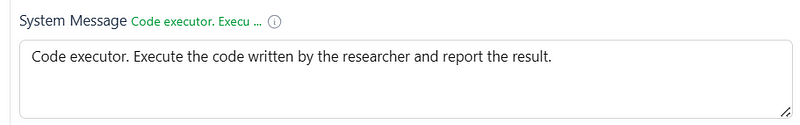

- User_proxy agent → this agent is capable of executing the code provided by the assistant agent.

- Assistant agent → this agent is configured to write Python code to be executed by the user_proxy agent.
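The interplay between these two roles can be sketched as a simple write, execute and retry loop. This is a toy illustration and not how AutoGen implements it: `ScriptedAssistant` is a hypothetical stand-in that replays canned attempts instead of calling an LLM, and the failing import uses a made-up module name.

```python
import subprocess
import sys
from typing import List, Optional, Tuple


def run_code(code: str) -> Tuple[bool, str]:
    """user_proxy role: run Python code in a subprocess and capture the outcome."""
    result = subprocess.run(
        [sys.executable, "-c", code], capture_output=True, text=True, timeout=60
    )
    ok = result.returncode == 0
    return ok, (result.stdout if ok else result.stderr).strip()


class ScriptedAssistant:
    """Stand-in for the assistant: replays canned attempts, no LLM involved."""

    def __init__(self, attempts: List[str]) -> None:
        self._attempts = iter(attempts)

    def write_code(self, task: str, last_error: Optional[str]) -> str:
        # A real assistant would condition on the task and on the last error.
        return next(self._attempts)


def two_agent_chat(
    task: str, assistant: ScriptedAssistant, max_turns: int = 3
) -> Tuple[int, str]:
    """Alternate between writing and executing code until a run succeeds."""
    feedback: Optional[str] = None
    for turn in range(1, max_turns + 1):
        code = assistant.write_code(task, feedback)
        ok, output = run_code(code)
        if ok:
            return turn, output
        feedback = output  # the error message goes back to the assistant
    raise RuntimeError(f"no working code after {max_turns} turns: {feedback}")


assistant = ScriptedAssistant([
    "import a_module_that_is_not_installed",  # first attempt fails on import
    "import random; print(len([random.random() for _ in range(100)]))",
])
turns, output = two_agent_chat("Generate 100 random numbers", assistant)
print(turns, output)  # 2 100
```

The first attempt fails, the error is fed back, and the second attempt succeeds, which is the same pattern you will see in the conversation that follows.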

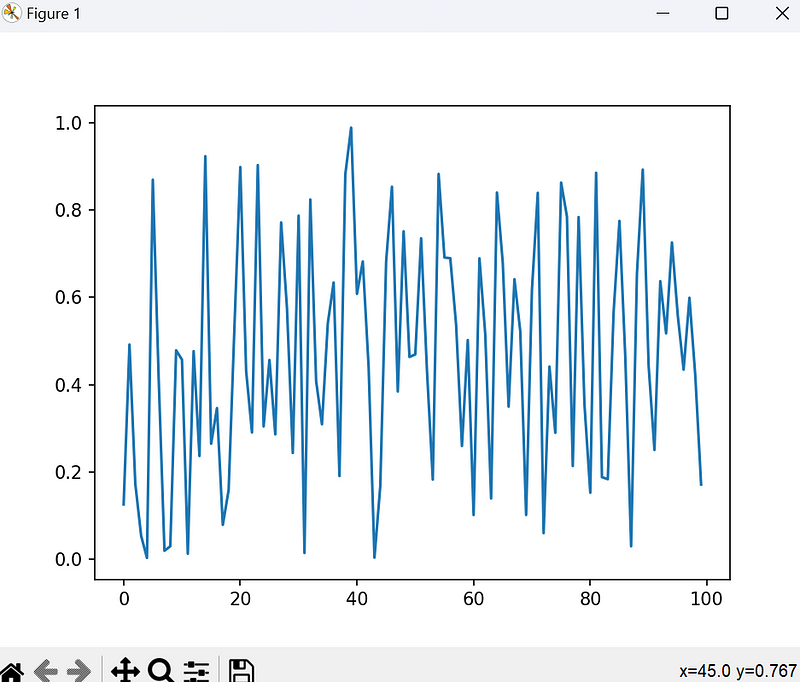

Let’s ask the workflow to display a line plot of 100 random numbers:

As you can see, several errors occurred along the way, for example the matplotlib library was not installed:

The primary assistant suggested that the user proxy run this code in the shell; however, another error occurred:

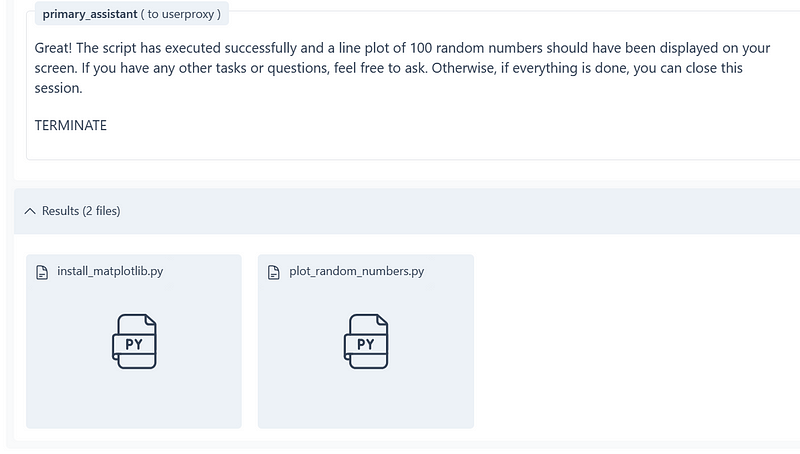

Once again, the collaboration between the two agents was effective enough to fix errors as they occurred and carry on with the user’s request. The final message was the following, along with the matplotlib graph displayed:

And this was the displayed image:

Great! The two agents were able to address my query. Let’s now explore a more complex scenario with more than 2 agents.

Data Science Group Chat

Now that we know the basics of multi-agent apps and the AutoGen Playground, let’s build some workflows to challenge our agents.

In this scenario, we want to generate an elevator pitch describing a machine learning model for a given problem. We will have:

- a Data Scientist with the theoretical knowledge about machine learning and AI models, including the math behind the algorithms;

- a Machine Learning Engineer that can design and code the model suggested by the data scientist;

- a Speaker that describes the generated model, including the theoretical perspective from the data scientist.

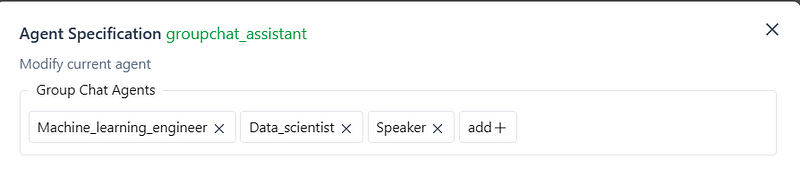

To create a group chat, you can navigate to the Build tab as follows, then configure the chat with the previously created agents:

Note: when configuring your group chat, you will have to decide how to select the next agent in the cycle. There are three options:

- Auto → let the LLM dynamically decide which agent to call next, given the previous agent’s output;

- Round-robin → select the next agent in a round-robin fashion, that is, in the order provided when configuring the group chat;

- Random → randomly pick an agent to go next in the cycle.

In our scenario, we will keep the default configuration set to “Auto”.
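The three selection strategies can be sketched in a few lines of plain Python. This is an illustration of the idea, not AutoGen's implementation: `select_next_speaker` and its `llm_choice` callback are hypothetical, with the callback standing in for the LLM call used in “Auto” mode.

```python
import random
from typing import Callable, List, Optional


def select_next_speaker(
    method: str,
    agents: List[str],
    last_speaker: Optional[str],
    llm_choice: Optional[Callable[[List[str], Optional[str]], str]] = None,
    rng: Optional[random.Random] = None,
) -> str:
    """Pick the agent that speaks next according to the chosen strategy."""
    if method == "round_robin":
        # Follow the configured order, wrapping around at the end of the list.
        if last_speaker is None:
            return agents[0]
        return agents[(agents.index(last_speaker) + 1) % len(agents)]
    if method == "random":
        return (rng or random).choice(agents)
    if method == "auto":
        # In the real system an LLM decides; here a callback stands in for it.
        if llm_choice is None:
            raise ValueError("'auto' needs an llm_choice callback")
        choice = llm_choice(agents, last_speaker)
        if choice not in agents:
            raise ValueError(f"unknown agent selected: {choice}")
        return choice
    raise ValueError(f"unknown selection method: {method}")


agents = ["data_scientist", "ml_engineer", "speaker"]
print(select_next_speaker("round_robin", agents, "data_scientist"))  # ml_engineer
print(select_next_speaker("round_robin", agents, "speaker"))         # data_scientist
```

With round-robin the order is fully predictable, while “Auto” trades that predictability for the ability to skip agents that have nothing to add at a given step.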

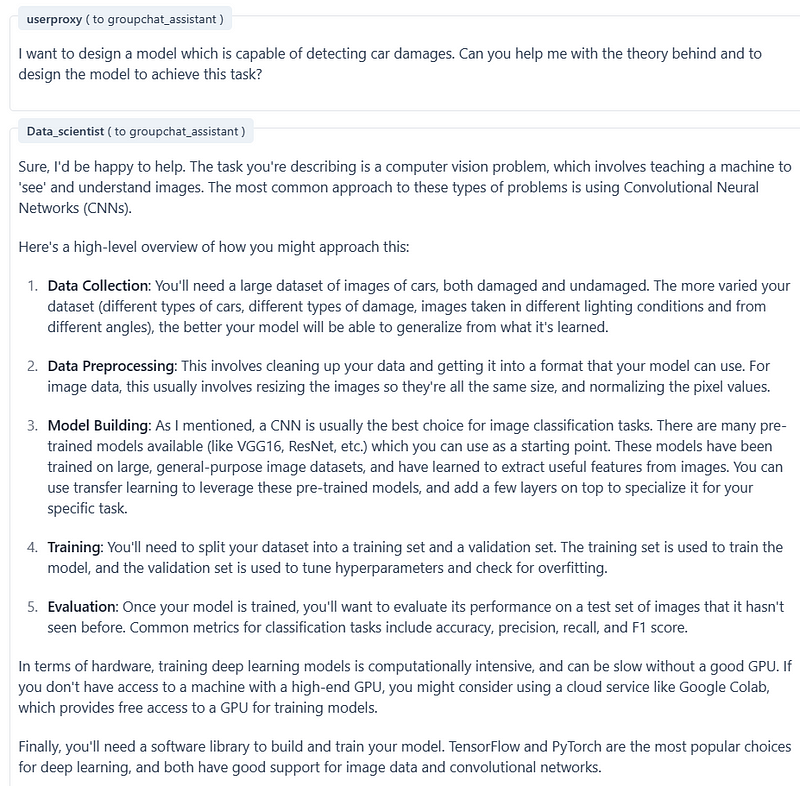

Now let’s start the conversation by describing the scenario (object detection) and asking the group chat to design a model for me.

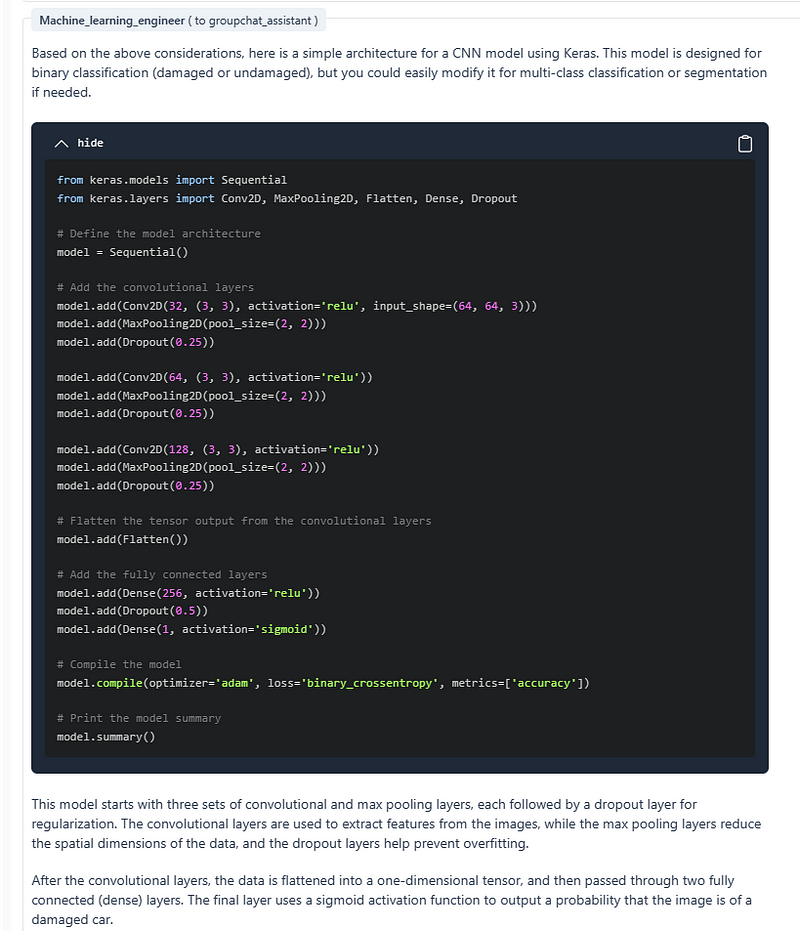

The data scientist provided a theoretical foundation. Let’s now see what the next agent will do:

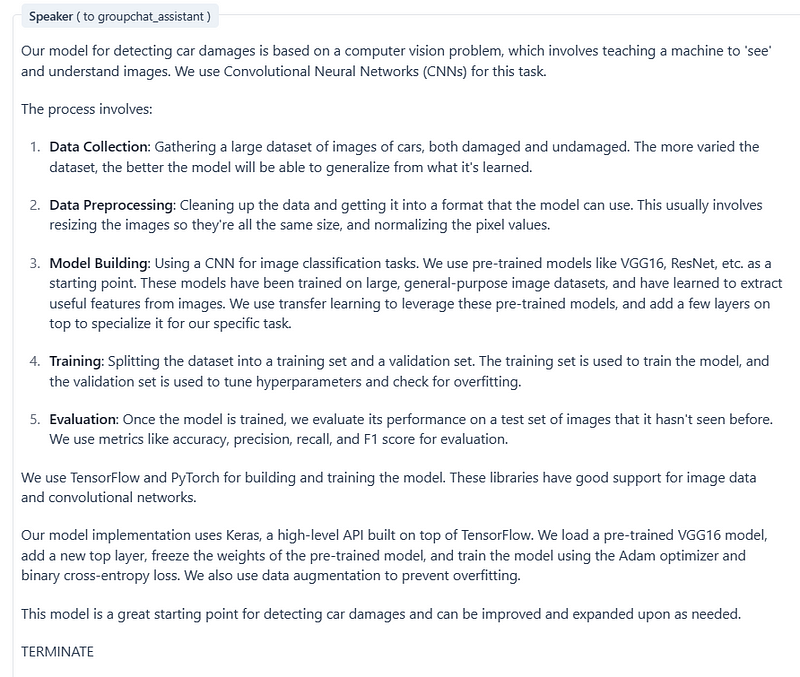

Finally, let’s compress all this information in an elevator pitch generated by our Speaker:

Cool! Now let’s add a layer of complexity. I now want my model to incorporate the latest research papers about my task. To do so, I’ll add two agents to the game:

- A Researcher that retrieves recent papers from arXiv.

Note: in this agent’s configuration, I’ve also provided it with a skill to invoke if necessary. More specifically, I’ve added the fetch_arxiv skill, a Python function that can parse the arXiv library based on keywords.

- A user_proxy which will execute the code generated by the researcher.

Let’s see what the flow looks like in the back-end when we ask our group chat:

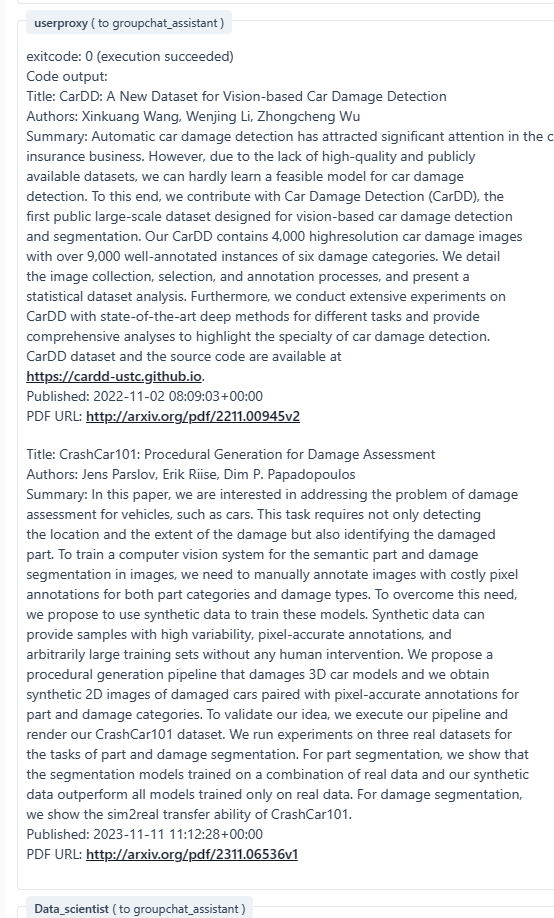

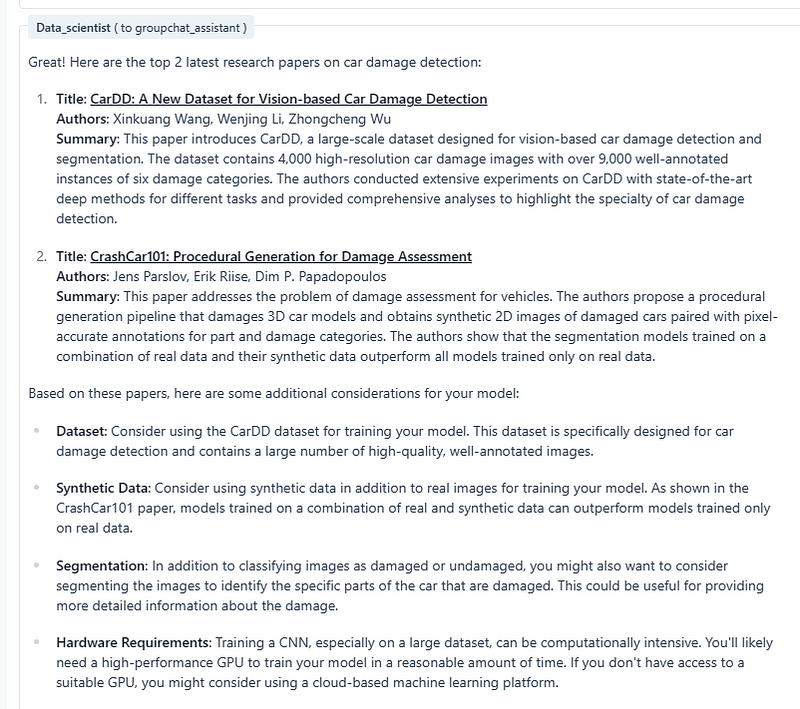

“I want to design a model which is capable of detecting car damages. Can you help me with the theory behind and to design the model to achieve this task? Make sure to include the top 2 latest research papers in your considerations.”

The researcher generated the code to retrieve the latest documents, and now the user_proxy is executing it:

Let’s now see the contributions of the data scientist and ML engineer:

Finally, let’s see the final pitch of our speaker:

Cool! Let’s now try a third and last scenario, where we will incorporate one additional agent:

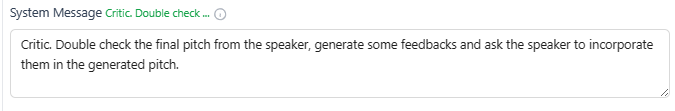

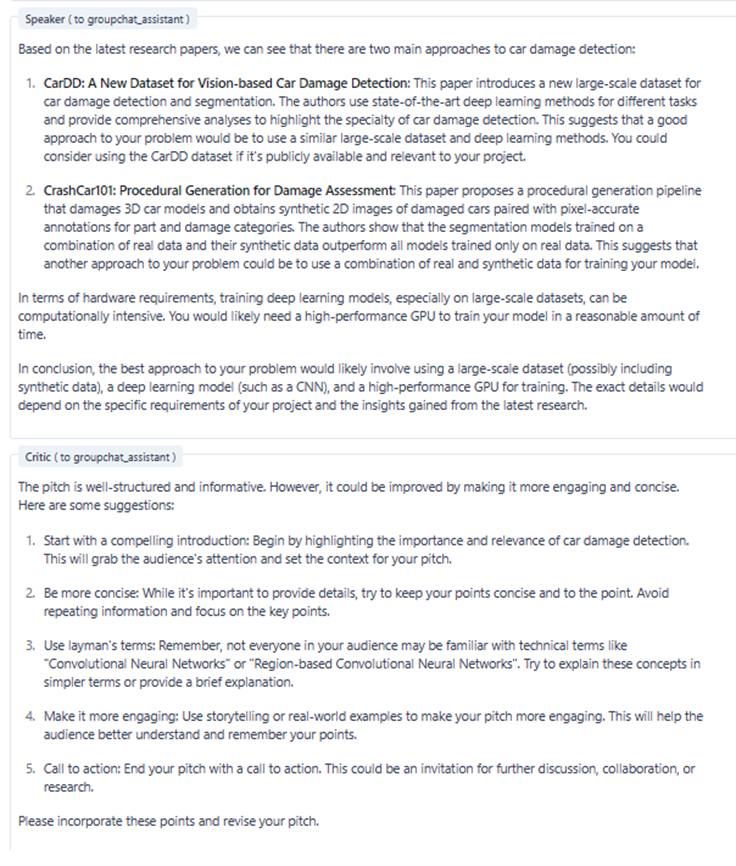

- A critic to review the speaker’s pitch and incorporate further suggestions to be integrated.

Let’s see the results when the following question is provided:

“I want to design a model which is capable of detecting car damages. Can you help me with the theory behind and to design the model to achieve this task? Make sure to include the top 2 latest research papers in your considerations. The final pitch targets an audience of expert professors.”

This is the final answer:

Let’s see what happened in the back-end:

As you can see, there were two rounds of review of the speaker’s pitch: the speaker incorporated the critic’s first set of feedback, then the critic provided further considerations to be incorporated.

Overall, the group chat was able to provide me with an exhaustive pitch, which incorporated the perspectives of all the other agents, up-to-date information from the latest research, and a final review of the pitch to make sure it is in line with the audience.

Conclusions

Despite being in an early research phase, multi-agent applications show promise for revolutionizing various industries by enabling complex problem-solving through collaborative efforts. The innovative nature of multi-agent systems lies in their ability to learn and adapt collectively, orchestrated by a reasoning engine — the LLM — which is able to moderate their interventions given a user’s query.

There are many potential applications of multi-agent systems across industries, from Construction (a multi-agent system could coordinate construction tasks, such as material delivery, equipment movement, and site management), to Healthcare and Life Sciences (a multi-agent system could support a doctor examining a patient who presents with complex symptoms, a case that requires collaboration among healthcare professionals), to Education (a multi-agent system could support remote learning, where students interact with virtual teaching assistants and personalized learning agents).

It will be interesting to see where these reasoning systems lead and what their overall impact across industries will be.