Generic Scripts For Deploying an Organization, Environments, and Applications

ACM.359 Biting the bullet and cleaning up my deployment scripts to work for any environment


In the last post I created three keys in my Sandbox Account: one each for logs, application data, and deployment tools.

I was going to write about using those keys and my plan was to delete my existing environment and start over. But then I remembered… networking. I set up a private network and had a lot of issues with VPC Endpoints along the way. I didn’t fully automate everything and I realized I could simplify my design a bit. I wrote about some options in this post.

So now I’m thinking I should just stop and fix my scripts for good and get it over with. I was going to save this for later but it seems like the time to get it done or I’ll just have more troubleshooting and complexity later.

I’m not going to implement everything in that networking post but I am going to make one change. I’m going to deploy one security group for all my VPC endpoints and one security group for all my resources that access the VPC endpoints. As I wrote about in that post, we can use endpoint policies to further restrict access to the appropriate resources. But to avoid insanity and the time it will likely take to troubleshoot if I do both at once, I’m only going to change my security groups in this post.
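Here is a rough sketch of that two-security-group layout, written as Python that builds a CloudFormation-style resource fragment. The logical IDs, the `VpcId` parameter name, and the use of TCP port 443 (assuming the endpoints are reached over HTTPS) are assumptions for illustration, not the contents of my actual templates.

```python
import json

HTTPS_PORT = 443  # assumption: the VPC endpoints are reached over HTTPS


def endpoint_security_groups(vpc_id_ref="VpcId"):
    """Return resources for one endpoint group, one client group, and the rules linking them."""

    def group(description):
        return {
            "Type": "AWS::EC2::SecurityGroup",
            "Properties": {
                "GroupDescription": description,
                "VpcId": {"Ref": vpc_id_ref},  # hypothetical template parameter
            },
        }

    def group_id(logical_id):
        return {"Fn::GetAtt": [logical_id, "GroupId"]}

    rule = {"IpProtocol": "tcp", "FromPort": HTTPS_PORT, "ToPort": HTTPS_PORT}
    return {
        # Hypothetical logical IDs, chosen for this sketch only.
        "EndpointSecurityGroup": group("Shared by all VPC endpoints"),
        "EndpointClientSecurityGroup": group("Resources that access the VPC endpoints"),
        "EndpointIngressFromClients": {
            "Type": "AWS::EC2::SecurityGroupIngress",
            "Properties": {
                **rule,
                "GroupId": group_id("EndpointSecurityGroup"),
                "SourceSecurityGroupId": group_id("EndpointClientSecurityGroup"),
            },
        },
        "ClientEgressToEndpoints": {
            "Type": "AWS::EC2::SecurityGroupEgress",
            "Properties": {
                **rule,
                "GroupId": group_id("EndpointClientSecurityGroup"),
                "DestinationSecurityGroupId": group_id("EndpointSecurityGroup"),
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps({"Resources": endpoint_security_groups()}, indent=2))
```

The sketch only models the pair of rules that connect the two groups. Anything attached to the client group can reach any endpoint, which is why endpoint policies are still the place to narrow access per resource.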

I’m going to fully automate things I added manually for testing purposes — users, roles, SSM parameters, and secrets.

I’m also going to remove hardcoded values and use my SSM parameters in names. You’ll see how that changes things as we progress.

Additionally, I am going to use all lowercase with no dashes for any names because, as I’ve figured out with further testing, that is the only approach that works across all AWS resources. I could use dashes in almost everything except container names, which also can’t mix uppercase and lowercase in Elastic Container Registry. I’m going to prefix all names with the environment name because some things can’t have a number at the start of the name for some unknown reason. Therefore, environment names cannot start with a number, but the rest of a name can.
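To make the naming rule concrete, here is a minimal sketch of a name builder that enforces it. The function name, the two regular expressions, and the idea of joining the environment name directly to a resource part are assumptions for illustration; my real scripts may assemble names differently.

```python
import re

# Assumption: a name is the environment name followed directly by a resource
# part, using only lowercase letters and digits (no dashes, no uppercase).
ENV_PATTERN = re.compile(r"[a-z][a-z0-9]*")  # must not start with a digit
PART_PATTERN = re.compile(r"[a-z0-9]+")      # may start with a digit


def resource_name(env, part):
    """Build '<env><part>' or raise ValueError if either piece breaks the rules."""
    if not ENV_PATTERN.fullmatch(env):
        raise ValueError(f"invalid environment name: {env!r}")
    if not PART_PATTERN.fullmatch(part):
        raise ValueError(f"invalid resource name part: {part!r}")
    return env + part
```

With this sketch, `resource_name("sandbox", "2ndsightlab")` returns `sandbox2ndsightlab`, while an environment name such as `1sandbox`, `Sandbox`, or `sand-box` is rejected before anything gets deployed.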

Here we go… I’m going to start my whole deployment methodology over from scratch. I was planning to do this later but now’s the time. I’m also going to create a new repo and check my code into CodeCommit first and then push it to my GitHub repo for this code (the reverse of what I did in my static website code).

Additionally, I’m going to fix any outputs that contain the ARN or ID of a resource so that each one is named with the name of the resource (less the environment and organization values) and nothing else.

The other thing I’m going to do is change my repository structure as follows.

All CloudFormation templates will be in a resources folder at the root.

/resources

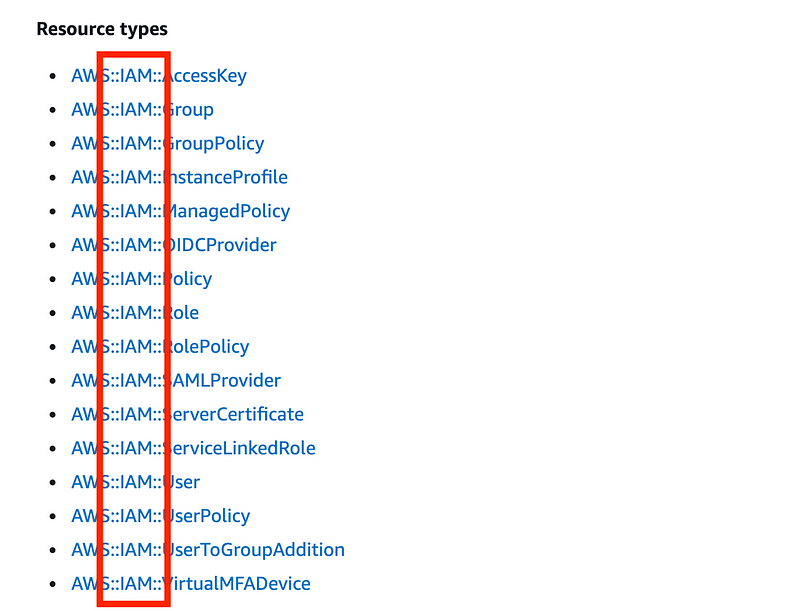

Below that I will have folders aligned with the CloudFormation resources listed here, less “Amazon” or “AWS”, which seems irrelevant and messes up the alphabetical listing. I wish AWS would remove that, and other long-time AWS users have expressed the same wish. #awswishlist.


I’m finding the use of “stacks” in my structure to just waste my time trying to get to the file I want to use.

In each resource folder I’ll have the functions script and a cfn folder with the templates related to that resource. Any related scripts will be in a scripts folder.

There will be a few exceptions where I don’t like the AWS naming convention in that list.

I will have an IAM folder instead of “Identity and Access Management” since I think most people will be looking for IAM and that is what it is called in the resource type names, such as AWS::IAM::Role.

I will have a separate folder for EC2 and VPC resources. These are really separate categories of resources and if you want to segregate this code into separate repositories it will be easier to do if the code is in separate folders. Subnets, route tables, VPC endpoints, and security groups will fall under VPC. I’ll generally follow the layout of the AWS UI for this separation though some things exist on both the EC2 and VPC UI.

Where I do diverge from the naming convention I will put a placeholder with a readme file that tells anyone looking for the resources where to find them.
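The folder naming described above can be sketched in a few lines. The override table, the function names, and the sample service names are assumptions for illustration (including the exact case of the folder names); the point is only to show how dropping the “Amazon” or “AWS” prefix fixes the alphabetical listing.

```python
# Assumption: folder names are service names with a leading "Amazon " or
# "AWS " removed, plus a small table of hand-picked exceptions.
PREFIXES = ("Amazon ", "AWS ")
OVERRIDES = {"Identity and Access Management": "IAM"}  # hypothetical table


def folder_for(service):
    """Return the folder name for a service name from the resource list."""
    for prefix in PREFIXES:
        if service.startswith(prefix):
            service = service[len(prefix):]
            break
    return OVERRIDES.get(service, service)


def layout(services):
    """Return the sorted, de-duplicated folder paths under /resources."""
    return sorted({f"/resources/{folder_for(s)}" for s in services})


if __name__ == "__main__":
    # Illustrative inputs only, not the full resource list.
    for path in layout(["AWS Lambda", "Amazon S3", "AWS Identity and Access Management"]):
        print(path)
```

Run as a script, the three sample services sort as `/resources/IAM`, `/resources/Lambda`, `/resources/S3` instead of being grouped by whether the name happens to begin with “Amazon” or “AWS”.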

Organizational Deployment Scripts

I have two deployment scripts currently and am going to add a couple more:

- An organization deployment script.

- An environment deployment script.

- An apps account deployment script.

- A static website deployment script.

There’s still more I need to do in the organization as a whole, but I’ll go ahead and get these scripts working.

I’m going to use my Bash container to run scripts. That container can be used on a local laptop or on an EC2 instance that requires MFA to run the script as explained in this proof of concept.

What’s an environment?

If you’ve ever worked as a software developer you’ve probably heard the term “development environment” or something to that effect. My environments are similar to that but I’d probably call them “deployment environments” instead. A deployment environment includes things like a CodeCommit repository, an Elastic Container Registry, and the definition of parameters specific to that environment used in naming conventions.

In some organizations, a single code repository is used across environments. I do things a bit differently. I have a separate code repository in each environment. You can more easily restrict who can access the repository for each environment. Code can be pushed from one environment to another and you can lock things down when not actively deploying. I already showed you how to push code from GitHub to CodeCommit using a very simple script. You could also perform integrity checks along the way and after the fact.
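One way to do the kind of integrity check mentioned above is to hash every file on both sides of a push and compare the results. This is a minimal sketch, assuming both repositories are checked out to local directories; the function names and the choice to skip the `.git` folder are my own assumptions here, not part of the push script from the earlier post.

```python
import hashlib
from pathlib import Path


def manifest(root, ignore=(".git",)):
    """Map each file's path (relative to root) to its SHA-256 hex digest."""
    root = Path(root)
    digests = {}
    for path in sorted(root.rglob("*")):
        rel = path.relative_to(root)
        # Skip directories and anything under an ignored folder such as .git.
        if path.is_file() and not any(part in ignore for part in rel.parts):
            digests[rel.as_posix()] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def differences(source, target):
    """Return the sorted relative paths that are missing or differ between two trees."""
    a, b = manifest(source), manifest(target)
    return sorted(p for p in a.keys() | b.keys() if a.get(p) != b.get(p))
```

An empty list from `differences(github_checkout, codecommit_checkout)` means the two working trees match file for file. Anything else is a list of paths to investigate before the code moves on to the next environment.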

I find git branches and merges to be complex and error prone. I’ve been the one to sort out a bad merge before. That’s fine to do in a development environment, but once you are pushing to production, let’s have one source role that pushes the code between repos to avoid conflicts, no? That’s my approach anyway.

So for each of my environments, I’m going to have a container repository and a code repository that I can lock down when not actively pushing or deploying things to those repositories. I can also allow different principals to access different repositories.

There will be one “administrative” or “deployment” account in each environment and one or more “application” accounts where the code gets pushed to deploy applications.

I also deploy separate accounts for each penetration test, for example, with a client-specific configuration. So this method of deploying accounts for applications can be used for different types of projects as well. An account used for penetration testing has a different configuration than one used for hosting public websites but I can create a deployment script for either one. I already have a script for new penetration test accounts, actually. But these changes will make those deployments even easier and faster to modify.

Removing the hardcoded values and creating reusable scripts

As I was building things out, I was hardcoding things here and there that were complicated to code at the moment. Now I want to make sure I have completely reusable scripts for any new website or environment — and that they still work with all the changes I’ve made.

I’m going to test this all from scratch — including creating new AWS Accounts. If you have been programming for a while, you know that deploying on top of existing resources is not a true test of your deployment scripts. You need to start with a blank slate to test scripts that start from scratch. On the flip side, if you are deploying onto a production environment, you need to test your deployment scripts on something that mirrors production, otherwise you’re going to miss things.

Reusable Components

I explained how you could use my code in your own scripts in a prior post by checking out my repository and calling the functions in your own code. That’s basically how my two scripts work.

I use my role switching code to deploy different things. The OrgRoot role deploys the accounts. Currently the SandboxAdmin role deploys everything else.

My script switches roles and calls common functions and that’s about it. It doesn’t implement any code itself.

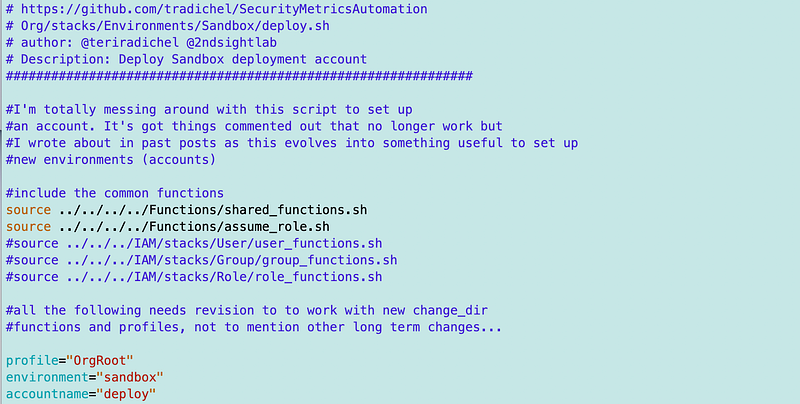
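The shape of such a script, one that only switches roles and calls shared functions, can be sketched like this. The `run_plan` name, the `assume_role` hook, and the step names in the usage note are hypothetical stand-ins for illustration; only the OrgRoot and SandboxAdmin role names come from my setup.

```python
def run_plan(plan, assume_role, functions):
    """Run (role, function_name) steps in order, switching roles only when the role changes.

    assume_role: hypothetical hook standing in for the role-switching code.
    functions:   mapping of step names to the shared functions being called.
    Returns a log of what was done, in order.
    """
    current_role = None
    log = []
    for role, name in plan:
        if role != current_role:
            assume_role(role)
            current_role = role
            log.append(f"assume {role}")
        functions[name]()  # the script itself implements nothing
        log.append(f"run {name}")
    return log
```

A plan such as `[("OrgRoot", "deploy_account"), ("SandboxAdmin", "deploy_parameters"), ("SandboxAdmin", "deploy_networking")]` assumes each role once and then runs the steps under it, which keeps the deployment script itself down to a list of roles and function names.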

Sandbox Environment Deployment Script

My Sandbox deployment script is a big mess right now. I have things I was building and testing but it will end up including the following:

- An environment deployment account.

- SSM Parameters with the environment-specific parameters I wrote about earlier used across scripts.

- Environment admin user.

- Environment admin role.

- Apps deployment user.

- Apps deployment role.

- Networking for deployments.

- An ECR repository.

- EC2 instance for the environment admin.

- A CodeCommit repository for my deployment code (what I’m writing about in this blog).

Coming after further testing:

- A container solution to pull from GitHub and push to AWS CodeCommit that requires a separate MFA code each time. (I have to see if the EC2 solution I did a proof of concept for in an earlier post works consistently.)

- A Lambda function that can trigger a push from CodeCommit to S3 that can be called from any repository.

- I need to revisit the Domains (DNS) account and see if I want to make any changes to that. I want to see if it makes sense to create an environment specific DNS account for testing DNS outside of the production DNS account. However, if you create a subdomain like dev.rainierrhododendrons.com, that requires some changes to the primary domain. But you might have separate DNS accounts for different domains. Still thinking about that.

Note that I am not going to create repositories for websites in my environment script. Those are specific to each website and we want to touch the environment deployment script and run it only once when we set up the new environment if possible.

Environment Apps or Project Account

- Create a new account.

- Add the SSM parameters defining the environment.

- Deploy the application environment networking.

- Add a cross-account role for app deployments.

Website Deployment Script

The web deployment script currently does the following but needs to be cleaned up to remove hardcoded variables and retested from scratch:

- Create a GitHub repository.

- Copy code from an existing website into the GitHub repository.

- Create an AWS CodeCommit repository.

- Create the S3 bucket for the website.

- Deploy a Trigger on the AWS CodeCommit repository to copy files to S3.

- Copy the code from GitHub to AWS CodeCommit (triggers push to S3).

- Create any required DNS records in the DNS account.

- Create any required DNS records in the apps account.

- Deploy a TLS certificate.

- Configure S3 to host a website.

- Configure CloudFront.

New:

- Create a repository for images.

- Pull the images into the image domain S3 bucket.

- All the web deployment steps above.

OK that’s the plan. In the spirit of Andy Jassy, “Giddyup!”


Teri Radichel | © 2nd Sight Lab 2023
