Fixing AWS GitHub Prefix List — GitHub API Changes?

ACM.273 Fixing the Prefix List because it’s not working anymore…

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

⚙️ Part of my series on Automating Cybersecurity Metrics. The Code.

🔒 Related Stories: Network Security | GitHub Security

💻 Free Content on Jobs in Cybersecurity | ✉️ Sign up for the Email List

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

In the last post I was distracted from what I really wanted to do by a hard-coded policy that I needed to make more generic before I could proceed.

This post is about the kind of thing that could make a person slightly cranky. You have your code working, and then a cloud product makes a change that breaks it.

I was reusing some code that worked before. Because it worked before and neither AWS nor I have changed anything, I presume Microsoft broke this with changes on their end. But also, while working through this, I figured out some ways to improve my own code.

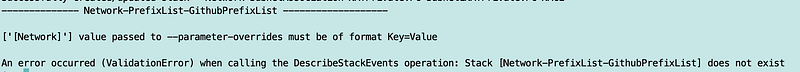

The post I *hope* to deliver next, now delayed twice, was supposed to be simple, using mostly code that worked before. But when I got to the GitHub prefix list I created and deployed previously, I kept getting errors.

I had a note in there that if the process failed, you should run it again, but re-running it twice didn’t work.

I started digging into the code further.

To isolate the problem, I tried running the function that creates the prefix list on its own:

```bash
deploy_github_prefix_list
```

This time, it ran. The Microsoft API worked. I use that term loosely. It executed this time and Microsoft returned some results. But my code didn’t produce the correct results, and further testing is proving that the GitHub API is unreliable.

I checked the stack output. Apparently GitHub increased the number of IP ranges again, past whatever the AWS limit is, which I probably knew at one point but have since forgotten. I haven’t changed anything on my end, so why would I get the error below with the same API call?

Previously I pulled only a subset of the IPs because of this issue, but something in the GitHub output changed and now it’s broken again.

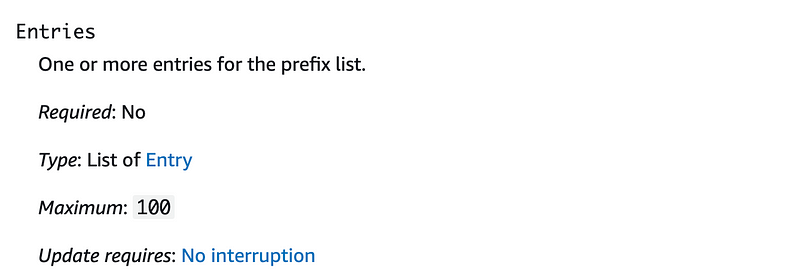

That CloudFormation error message could be better. It should tell you the maximum number of entries, which according to the documentation is 1,000.

You can resize a prefix list and modify the maximum number of entries for the prefix list up to 1000.

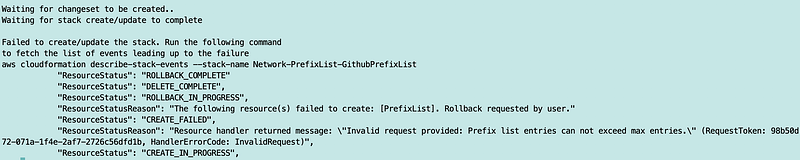

But am I really pulling back 1,000 IP addresses in this list?

Let’s find out.

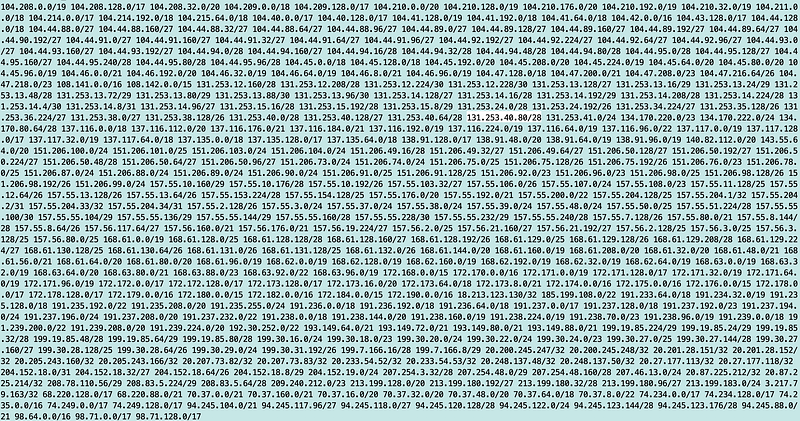

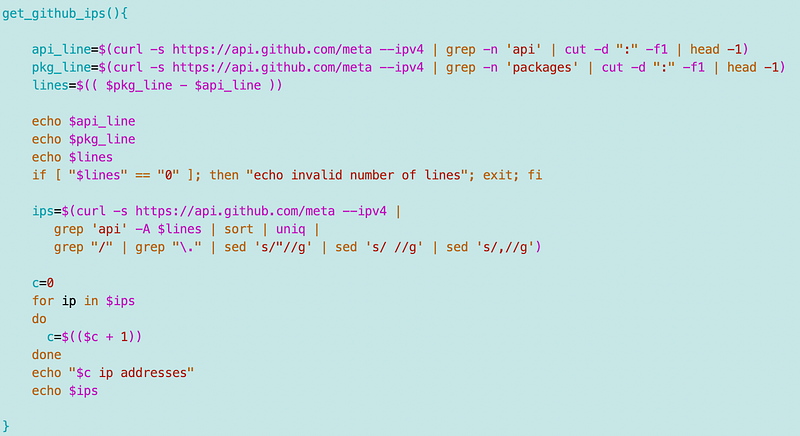

I wrote a little function to get and count the GitHub IPs:

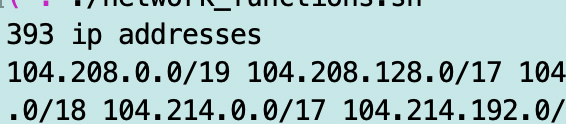
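The function in the screenshot isn’t reproduced as text, so here is a rough, hypothetical sketch of the same kind of count (not the original code). It reads the `/meta` JSON on standard input, extracts quoted values shaped like IPv4 CIDRs, and counts the unique ones. Because it de-duplicates ranges that appear under more than one heading, its total may not match the original function’s number exactly.

```bash
# Hypothetical helper (not the original function): count unique IPv4 CIDRs
# in GitHub's /meta JSON, read from standard input.
count_github_ipv4_cidrs() {
  # Match quoted values like "192.30.252.0/22". IPv6 ranges and domain
  # names do not match this pattern, so they are ignored.
  grep -oE '"[0-9]{1,3}(\.[0-9]{1,3}){3}/[0-9]{1,2}"' \
    | sort -u \
    | awk 'END { print NR }'   # number of unique lines (0 if none matched)
}
```

For example: `curl -s --ipv4 https://api.github.com/meta | count_github_ipv4_cidrs`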

There are “only” 393.

A CloudFormation bug? No. Oh great. CloudFormation only allows 100 entries. I probably wrote about this before but forgot.

But upon reviewing my prior post, I saw that the deployment didn’t work until I changed the maximum to 50 and deleted my prior stack. Hmm. I have no existing stacks in this account for this GitHub prefix list, so that’s not it.
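Since the error message doesn’t state the limit, a check before deploying can at least fail with a clearer message. This is a minimal sketch: `check_prefix_list_entries` is a hypothetical name, and the default of 100 is just the CloudFormation number mentioned above, passed as a parameter so you can check against whatever maximum you set on the prefix list instead (such as 50).

```bash
# Hypothetical pre-deployment check: fail with a readable message when the
# number of CIDRs exceeds the number of entries we plan to allow.
# Usage: check_prefix_list_entries <entry_count> [max_entries]
check_prefix_list_entries() {
  local count="$1"
  local max="${2:-100}"   # assumed default: the CloudFormation limit noted above

  if [ "$count" -gt "$max" ]; then
    echo "Prefix list needs $count entries but the limit is $max" >&2
    return 1
  fi
  echo "OK: $count of $max entries"
}
```

For example, `check_prefix_list_entries 393` prints an error to stderr and returns a non-zero status, which a deployment script can use to stop before calling CloudFormation.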

Microsoft. Why so many IPs?

The whole reason I have to filter this list in the first place is that there are 50 gajillion IP addresses.

I wish GitHub would fix this. I should be able to define which regions I want to use with GitHub. I should need only one or two CIDR blocks per region, or at the very most five, to push to or pull from GitHub. That’s it. No. I have 393 CIDR blocks to deal with. And that’s not including IPv6! Insanity.

GitHub, think about network security, please

When I look at this list of IPs it is unbelievable. Here’s why:

- The IP blocks are tiny and numerous for apparently no reason. Why so many small ranges? Allocate larger, contiguous CIDR blocks. I used to do this for Capital One. I understand the complications.

- If you can’t consolidate them, front all your tiny little ranges with something that has a reasonable number of CIDRs and routes to all your gajillion IP ranges. Use regional load balancers and reverse proxies or something. Create a smarter API gateway with a single block per region and a router behind it. Anything to reduce the number of IP blocks.

- Use contiguous IP blocks! This list is a whole bunch of non-contiguous tiny IP ranges that are difficult to manage on the Microsoft side. On the client side, it forces you to create hundreds of firewall rules to support the access you need, or, in the case of this post, multiple prefix lists.

- The API is not reliable. The only thing I can think of is that even though Microsoft claims they still support IPv4, they are somehow sending me to an IPv6 address I can’t reach. I could test this, but I can also just add the option below to my curl command to see if it alleviates the initial failures. But what if a DNS request sends me an IPv6 address? Hopefully curl can sort that out.

`--ipv4`

Testing the above and checking that DNS is not returning IPv6 did not resolve any problems or identify any issues related to IPv6.
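The curl option `--ipv4` (short form `-4`) tells curl to resolve host names to IPv4 addresses only. For the DNS side, one way to repeat the check is to ask for AAAA records several times and look for anything that looks like an IPv6 address. This is a minimal sketch assuming `dig` is installed; `has_ipv6_answer` and `check_github_ipv6` are hypothetical helper names.

```bash
# Hypothetical helper: succeed (exit 0) if any input line looks like an IPv6
# address (contains a colon), fail otherwise. Intended for `dig +short` output.
has_ipv6_answer() {
  grep -q ':'
}

# Hypothetical helper: query the AAAA records a few times and report each result.
check_github_ipv6() {
  local i
  for i in 1 2 3 4 5; do
    if dig +short AAAA api.github.com | has_ipv6_answer; then
      echo "attempt $i: IPv6 address returned"
    else
      echo "attempt $i: no IPv6 address"
    fi
  done
}
```

Running `check_github_ipv6` prints one line per query, which makes it easy to see whether an IPv6 answer ever shows up between flaky API calls.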

How can we fix our prefix list?

If we simply select a subset of IP ranges then we might end up trying to reach some IP that we can’t access via the firewall rule. That won’t work.

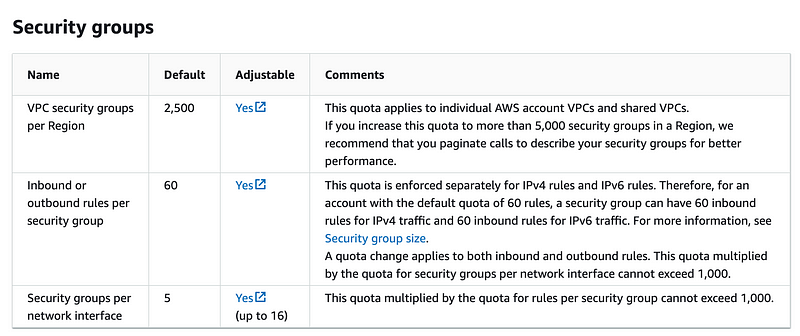

How many CIDRs can we add to the security groups attached to a single network interface? I seem to recall writing about that somewhere else, but the good news is that these quotas seem to have increased.

You can have up to 60 rules per security group now. You can have up to 5 security groups per network interface.

60 * 5 = 300

Really, Microsoft? 393 CIDR blocks for GitHub per the metadata and I’m not even capturing all of them in this query.

I was thinking I could just create multiple prefix lists and add them to my security group, but no, because the underlying entries count toward the quota, and as of now we need 393.
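The same arithmetic as a quick shell check. This is a sketch: `sg_capacity_check` is a hypothetical helper, and its defaults (60 rules per security group, 5 security groups per network interface) are simply the quotas quoted above, so pass different values if your account’s quotas differ.

```bash
# Hypothetical capacity check using the quotas quoted above as defaults.
# Usage: sg_capacity_check <entries_needed> [rules_per_sg] [sgs_per_eni]
sg_capacity_check() {
  local needed="$1"
  local rules_per_sg="${2:-60}"
  local sgs_per_eni="${3:-5}"
  local capacity=$(( rules_per_sg * sgs_per_eni ))

  echo "capacity=$capacity needed=$needed"
  # Per the text above, prefix list entries count toward the same rule quota,
  # so splitting CIDRs across several prefix lists does not change this test.
  [ "$needed" -le "$capacity" ]
}
```

`sg_capacity_check 393` prints `capacity=300 needed=393` and returns a non-zero status, which is the 60 * 5 = 300 problem in a form a script can act on.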

Is anyone else complaining to Microsoft, or does everyone just end up hosting their own GitHub server?

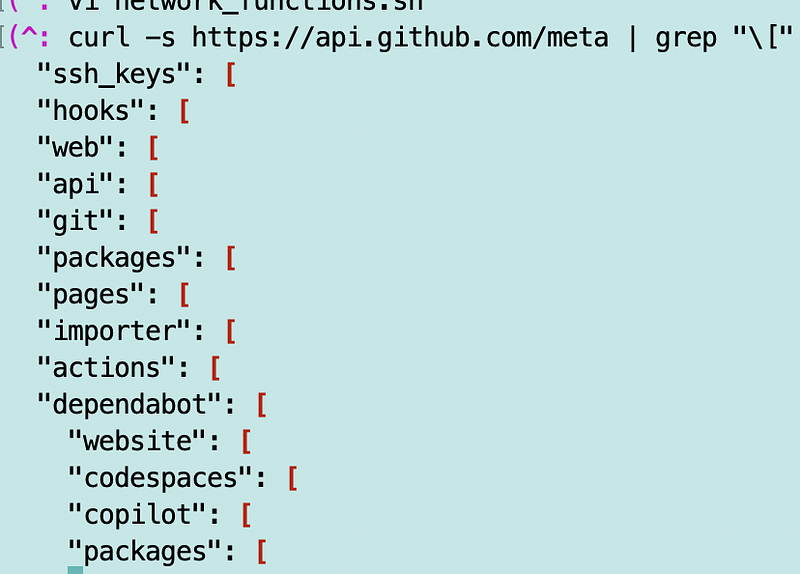

Let me revisit the IP list. Just like last time, I’m going to grep all the headings to see if something changed.

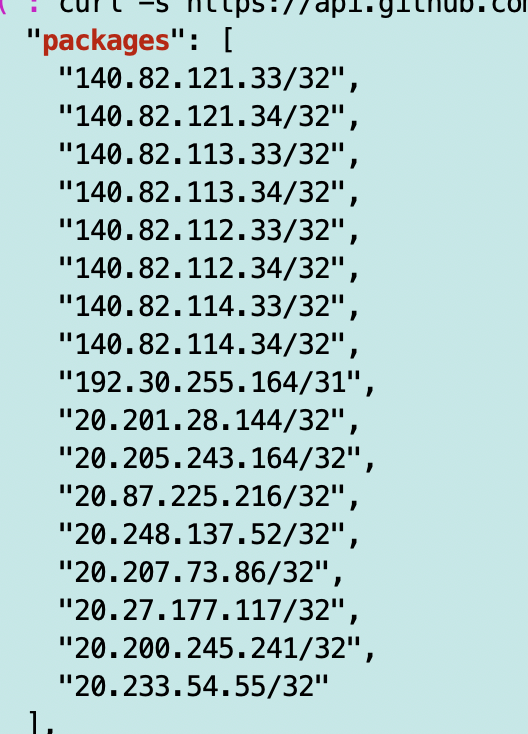
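For reference, here is one way to list the headings (top-level keys) with their line numbers using only grep and sed. This is a sketch, and `list_meta_headings` is a hypothetical name. It assumes the response is pretty-printed with top-level keys indented exactly two spaces, so keys nested deeper are skipped, and it will miss keys if that formatting changes.

```bash
# Hypothetical helper: print "<line number> <key>" for each top-level key of
# the /meta JSON read from standard input.
# Assumes pretty-printed JSON where top-level keys are indented two spaces.
list_meta_headings() {
  grep -nE '^  "[^"]+":' | sed -E 's/^([0-9]+):  "([^"]+)":.*/\1 \2/'
}
```

Usage: `curl -s --ipv4 https://api.github.com/meta | list_meta_headings`. A plain `grep -n '"packages"'` on the same output, by contrast, also matches a key of that name nested inside another object.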

Odd. Why does “packages” show up twice?

Looks like one is a set of IPs:

And one is a set of domains:

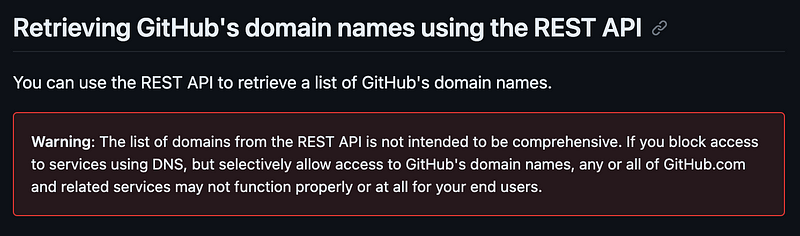

I’m not sure what the point of adding domain names is here, since prefix lists and security group rules don’t accept them.

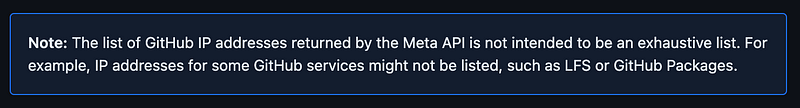

Now I’m reading that the IP address list might also be suspect. I don’t think it said this the last time I visited. Not very useful.

Well, the longest list is for “actions”, and I’ve already determined those are far too risky. This is just one more nail in the GitHub Actions coffin. A majority of those 393 IP blocks are for GitHub Actions.
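To see how the IPv4 CIDRs are distributed across headings, a per-heading count helps. This is a sketch: `count_cidrs_by_heading` is a hypothetical name, it counts only IPv4 CIDRs, and it assumes pretty-printed JSON in which top-level keys are indented exactly two spaces.

```bash
# Hypothetical helper: count IPv4 CIDRs under each top-level heading of the
# /meta JSON on standard input, largest count first.
# Assumes pretty-printed JSON where top-level keys are indented two spaces.
count_cidrs_by_heading() {
  awk '
    /^  "[^"]+":/ {
      # Remember the current top-level key, e.g. "actions": -> actions
      key = $1
      gsub(/[":]/, "", key)
    }
    /"[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+\/[0-9]+"/ { count[key]++ }
    END { for (k in count) print count[k], k }
  ' | sort -rn
}
```

Piping `curl -s --ipv4 https://api.github.com/meta` into it shows, heading by heading, how much of the total each service accounts for.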

I think when I initially created this list I was pondering GitHub Actions, but as I wrote before, that is out of the question now. I’m going to add this finding to my second post below.

I don’t think we need packages. That is likely for the GitHub package manager, which I don’t intend to use at this point.

Copilot is a security thing not needed at this point. Dependabot runs on GitHub and isn’t needed here.

Codespaces is an expensive online replacement for a free, open source text editor named Atom. Sad.

We don’t need Hooks. I don’t think we need the website for the Lambda function. Importer is the opposite of what I’m trying to do.

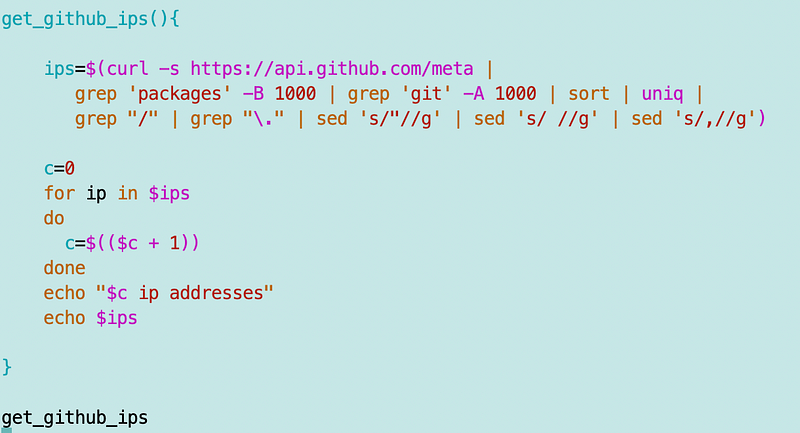

As far as I can tell, the only ones we need are the api and git IP addresses. Luckily, they are next to each other in the list, which simplifies what I am about to do.

The next name in the list is packages. So essentially I need all the IP addresses between “api” and “packages.”

I can get the line that the first instance of api appears on:

```bash
api_line=$(curl -s https://api.github.com/meta --ipv4 | grep -n api | cut -d ":" -f1 | head -1)
```

Then I can get the line that packages (the heading after git) appears on:

```bash
pkg_line=$(curl -s https://api.github.com/meta --ipv4 | grep -n packages | cut -d ":" -f1 | head -1)
```

I can calculate the number of lines I need:

```bash
lines=$(( pkg_line - api_line ))
```

Now I can grep that many lines after api. I will get some extraneous data from the second instance of packages, but it all gets filtered out when I filter for IP addresses. My test function looks like this:
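The test function itself isn’t reproduced here. Below is a hypothetical reconstruction of the same idea, not the original: it finds the first `api` and `packages` line numbers with the same `grep -n | cut | head -1` approach as the commands above, prints the lines in between with `sed -n`, and keeps only IPv4 CIDRs. To be testable offline it reads the JSON from standard input instead of calling curl, and it refuses to continue if the two headings are missing or out of order (the negative-number case).

```bash
# Hypothetical reconstruction (not the original test function): print the
# IPv4 CIDRs listed between the first "api" line and the first "packages"
# line of the /meta JSON read from standard input.
get_api_git_cidrs() {
  local json api_line pkg_line
  json=$(cat)

  api_line=$(printf '%s\n' "$json" | grep -n api | cut -d ":" -f1 | head -1)
  pkg_line=$(printf '%s\n' "$json" | grep -n packages | cut -d ":" -f1 | head -1)

  # Guard against a partial response: both headings must exist, in order.
  if [ -z "$api_line" ] || [ -z "$pkg_line" ] || [ "$pkg_line" -le "$api_line" ]; then
    echo "Could not find the api and packages headings in order" >&2
    return 1
  fi

  # Print only that range, keep quoted IPv4 CIDRs, then strip the quotes.
  printf '%s\n' "$json" \
    | sed -n "${api_line},${pkg_line}p" \
    | grep -oE '"[0-9]{1,3}(\.[0-9]{1,3}){3}/[0-9]{1,2}"' \
    | tr -d '"'
}
```

Usage: `curl -s --ipv4 https://api.github.com/meta | get_api_git_cidrs`. Matching the bare word `api`, as the commands above do, will also match any earlier line that happens to contain those letters; a pattern such as `'"api":'` would be stricter.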

And here’s a much more reasonable list of IP addresses — 28 (though it still seems excessive):

Note that while I was trying to run this, the API was flaking on and off repeatedly, so I had to add a check for 0 IP addresses. Also, sometimes the first number would come up but the second number would be 1, and I ended up with a negative number.
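Since the API sometimes returned nothing at all, one option is to retry the fetch a few times before giving up. This is a hypothetical sketch: `fetch_cidrs_with_retry` takes a number of attempts and a command to run, and treats output without at least one line that is exactly an IPv4 CIDR as a failure.

```bash
# Hypothetical retry wrapper: run a command up to <attempts> times until it
# prints at least one line that is an IPv4 CIDR, then print that output.
# Usage: fetch_cidrs_with_retry <attempts> <command> [args...]
fetch_cidrs_with_retry() {
  local attempts="$1"
  shift
  local i=1 out
  while [ "$i" -le "$attempts" ]; do
    out=$("$@")
    if printf '%s\n' "$out" | grep -qE '^[0-9]{1,3}(\.[0-9]{1,3}){3}/[0-9]{1,2}$'; then
      printf '%s\n' "$out"
      return 0
    fi
    echo "attempt $i of $attempts: no IP addresses returned" >&2
    i=$((i + 1))
    # Pause briefly before the next try (skipped after the last attempt).
    if [ "$i" -le "$attempts" ]; then
      sleep 2
    fi
  done
  return 1
}
```

For example, `fetch_cidrs_with_retry 5 my_fetch_function`, where `my_fetch_function` stands in for whatever function downloads the list and filters it down to CIDRs.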

If I dig the domain a bunch of times I never get back an IPv6 address. So there’s just something wrong with this API.

Anyway, it works when it works. Now I’m going to copy the relevant lines to the function that deploys the prefix list.

There was one other thing I had to fix — I had to set my parameter variable “p” to an empty string. It must have had some value in it from somewhere else that was messing up the stack creation. Bad me for not initializing my variables!
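A small illustration of how that can happen: in bash, variables are global unless declared with `local` inside a function, so a value left over from earlier in the same script leaks into any function that appends to it. The function names and parameter strings below are hypothetical (written in the style of the AWS CLI’s `ParameterKey=...,ParameterValue=...` shorthand), not the original deployment code.

```bash
# A global set earlier in the same script (simulating leftover state).
p="ParameterKey=OldParam,ParameterValue=stale"

# Hypothetical buggy version: appends to whatever is already in the global p.
build_params_buggy() {
  p="${p:+$p }ParameterKey=MaxEntries,ParameterValue=$1"
  echo "$p"
}

# Hypothetical fix: start from a known empty value that is local to the function.
build_params_fixed() {
  local p=""
  p="${p:+$p }ParameterKey=MaxEntries,ParameterValue=$1"
  echo "$p"
}
```

`build_params_buggy 50` carries the stale `OldParam` entry along, while `build_params_fixed 50` returns only the MaxEntries parameter.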

Anyway now my function works and my prefix list deploys again. Hope it continues to work because that is a pain. I should probably be parsing JSON instead but that also is a pain. So for now this is my solution.
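For comparison, here is a sketch of the JSON-parsing route, assuming `jq` is installed. It selects the `api` and `git` arrays by key, so it doesn’t depend on their position in the response or on line numbers, and it drops IPv6 ranges by excluding anything containing a colon. The function name is hypothetical.

```bash
# Hypothetical alternative: select the "api" and "git" lists by key with jq,
# keep only IPv4 CIDRs (IPv6 ranges contain ":"), and de-duplicate.
get_api_git_cidrs_jq() {
  jq -r '(.api + .git)[] | select(contains(":") | not)' | sort -u
}
```

Usage: `curl -s --ipv4 https://api.github.com/meta | get_api_git_cidrs_jq`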

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2023

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab

Need Help With Cybersecurity, Cloud, or Application Security?

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

🔒 Request a penetration test or security assessment

🔒 Schedule a consulting call

🔒 Cybersecurity Speaker for Presentation

Follow for more stories like this:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

❤️ Sign Up my Medium Email List

❤️ Twitter: @teriradichel

❤️ LinkedIn: https://www.linkedin.com/in/teriradichel

❤️ Mastodon: @teriradichel@infosec.exchange

❤️ Facebook: 2nd Sight Lab

❤️ YouTube: @2ndsightlab