Batch Compute Environment and Execution Roles and Policies

ACM.334 Trying to decipher what roles and policies we need from the AWS Batch documentation

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

⚙️ Check out my series on Automating Cybersecurity Metrics | Code.

🔒 Related Stories: IAM | Secure Code | Container Security | Batch

💻 Free Content on Jobs in Cybersecurity | ✉️ Sign up for the Email List

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

In the last post I analyzed some of the roles I need to create to use AWS Batch.

I thought I was going to deploy all the roles I need in this post. I started to do that. However, I also made some discoveries along the way. I need to take a closer look at what AWS Batch requires.

Required Roles, Revisited

I started to create the roles covered in the documentation but things got a bit confusing. The documentation mentions various roles and policies but at the time of this writing it does not make it clear where or how each policy or role is used.

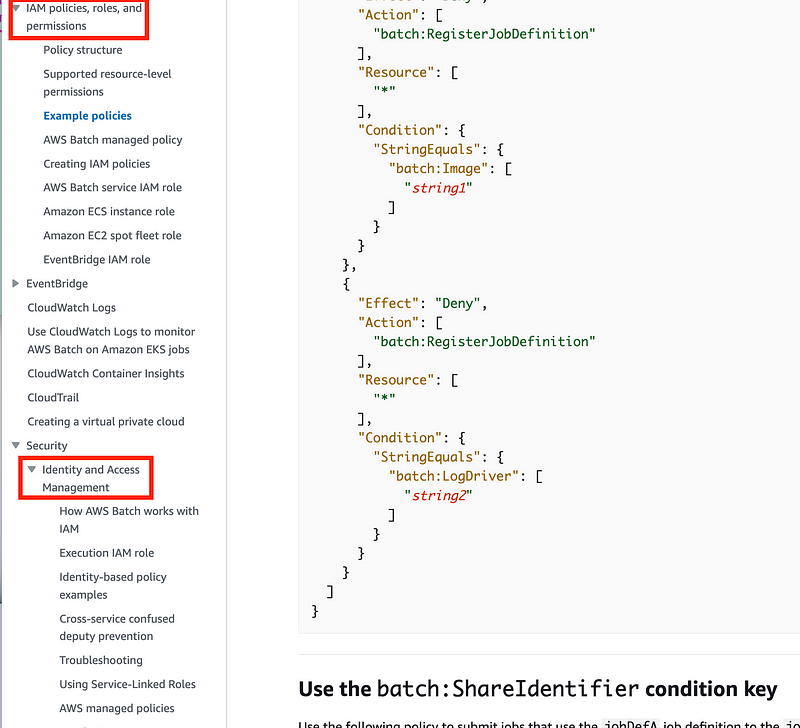

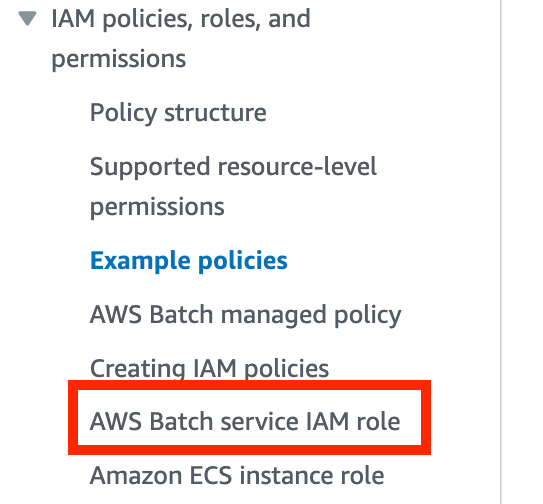

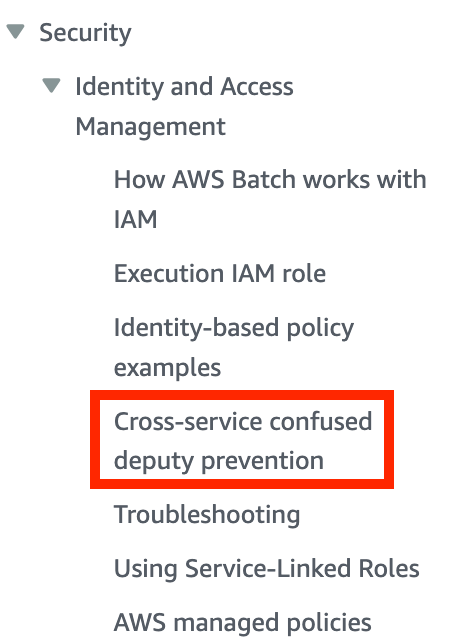

I realized while writing this post that part of the confusion is that AWS Batch has two separate sections on IAM policies in the documentation:

Somehow I landed on the bottom section and was scouring that for the necessary information and was confused. Also, why are these two separate sections?

If you should use the conditions in the Cross-service confused deputy prevention section, include that in the above IAM examples and explain it there. Also, which policies require those conditions? All of them? And by the way the sample Batch service role policy has conditions that don’t work. So that needs to be fixed. Perhaps those conditions get replaced with the conditions that prevent cross-service access? It’s not really clear on first read but I’m going to think through it below.

In order to try to determine exactly what is required, I created a few of the policies and tried to use them in the AWS console. That is when I realized I was missing something. Here’s a walk through of what I discovered followed by a summary at the end.

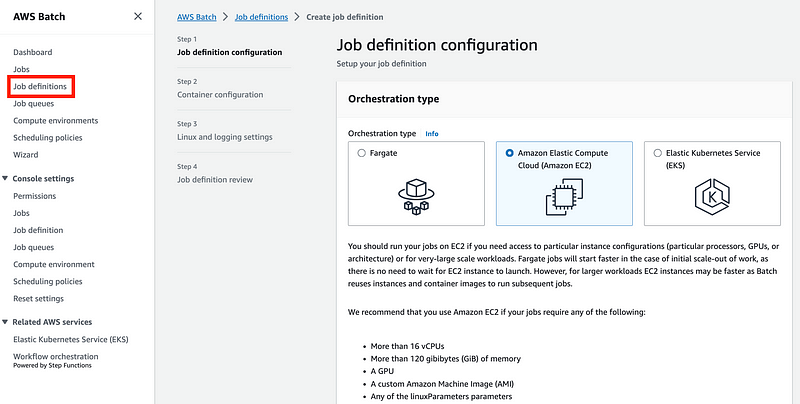

Compute Environment Options

Click Compute Environment on the Left.

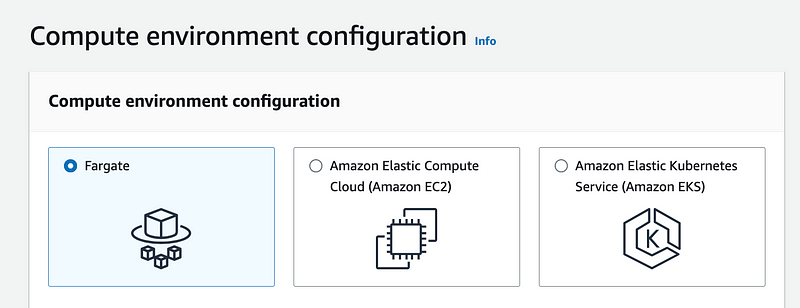

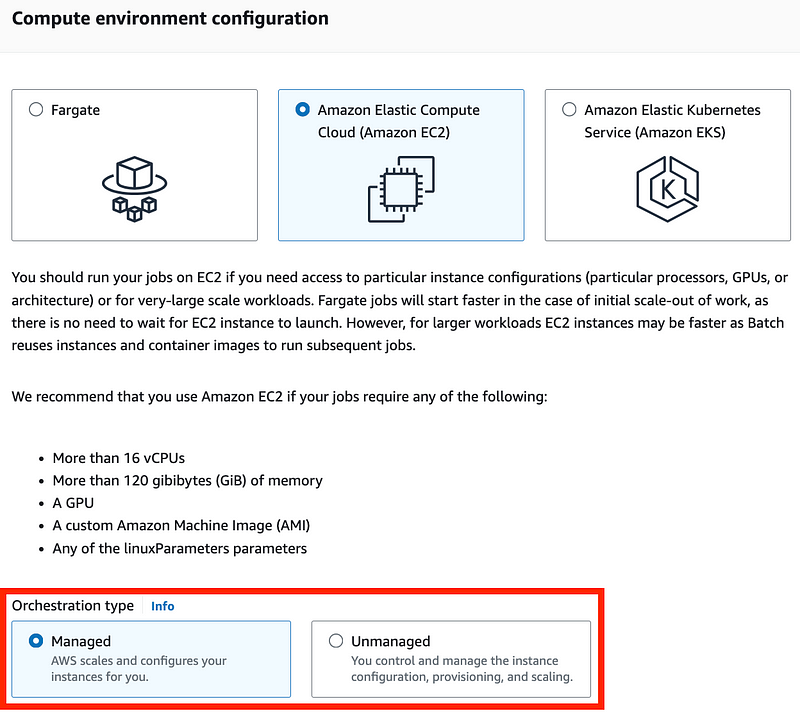

Note that we have three compute environment options.

Fargate: Upload your container and run it. It is not clear from the AWS

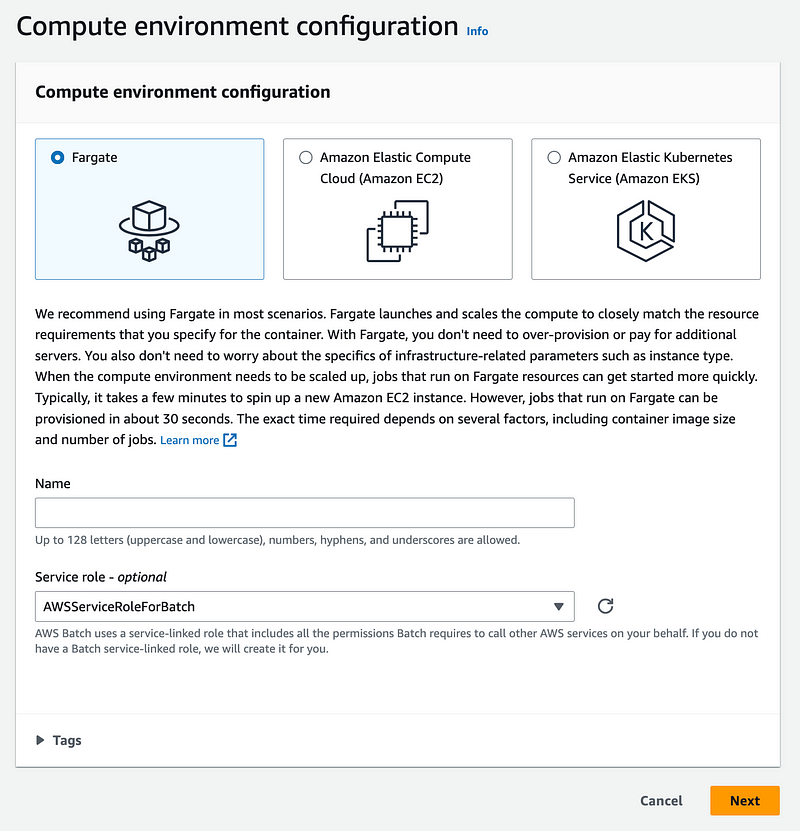

Pros: AWS recommends this option because it is faster and it is also likely simpler to deploy. I found this in the console, not the documentation:

We recommend using Fargate in most scenarios. Fargate launches and scales the compute to closely match the resource requirements that you specify for the container. With Fargate, you don’t need to over-provision or pay for additional servers. You also don’t need to worry about the specifics of infrastructure-related parameters such as instance type. When the compute environment needs to be scaled up, jobs that run on Fargate resources can get started more quickly. Typically, it takes a few minutes to spin up a new Amazon EC2 instance. However, jobs that run on Fargate can be provisioned in about 30 seconds. The exact time required depends on several factors, including container image size and number of jobs.

Cons: Batch documentation that you can encrypt ephemeral storage or the compute environment when using Fargate with your own customer-managed key. I also do not see that option when creating the compute environment. Some reports indicate that in general, Fargate costs more. I don’t know if that is true in Batch and it may vary by use case.

Apparently a number of people are blocked from using Fargate at their companies because they cannot encrypt the ephemeral data with a customer managed key. Hopefully AWS is working on this and we’ll hear an announcement soon.

https://github.com/aws/containers-roadmap/issues/915

Amazon EC2: Run your container on an instance you specify.

Pros: You can use your own AMI, encrypted with your customer managed key. You can be very specific about which instance types you use.

Cons: Looking at the possible instance types you cannot get the very smallest instance types. This may offset any savings compared to using Fargate — but there’s no way to know without testing both options. It may take longer to spin up an EC2 instance if you are running a job that needs to scale up. In my case, I don’t need anything to scale and I’m more worried about security.

Kubernetes: You cannot even look at that option unless you have a running EKS cluster. No thanks.

Steps to create a compute environment

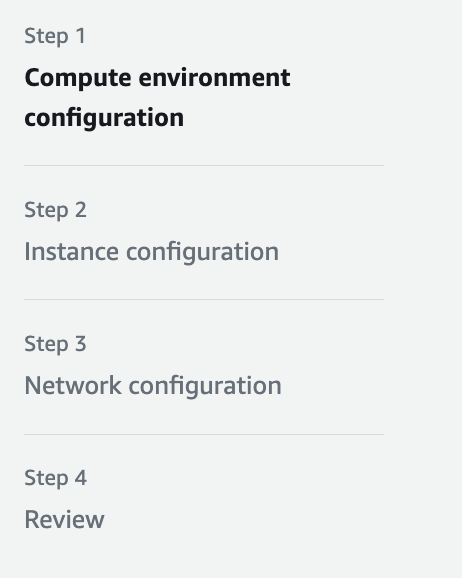

For both Fargate and EC2 there are 4 steps to configure a compute environment:

Compute environment general configuration

For both Fargate and EC2 the first step is similar. For both you will need to create a role name and choose a role.

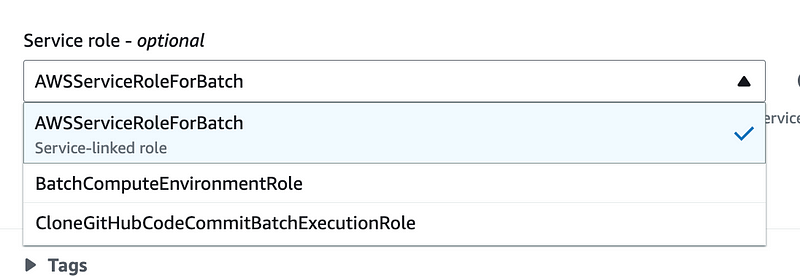

Now what is interesting is that on the Fargate configuration it says the Service role is optional, yet you are forced to pick one.

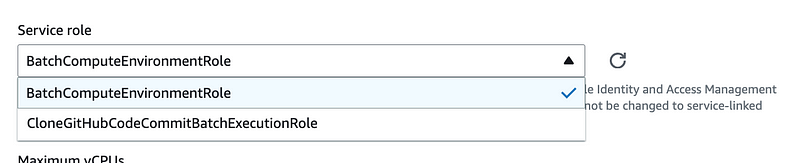

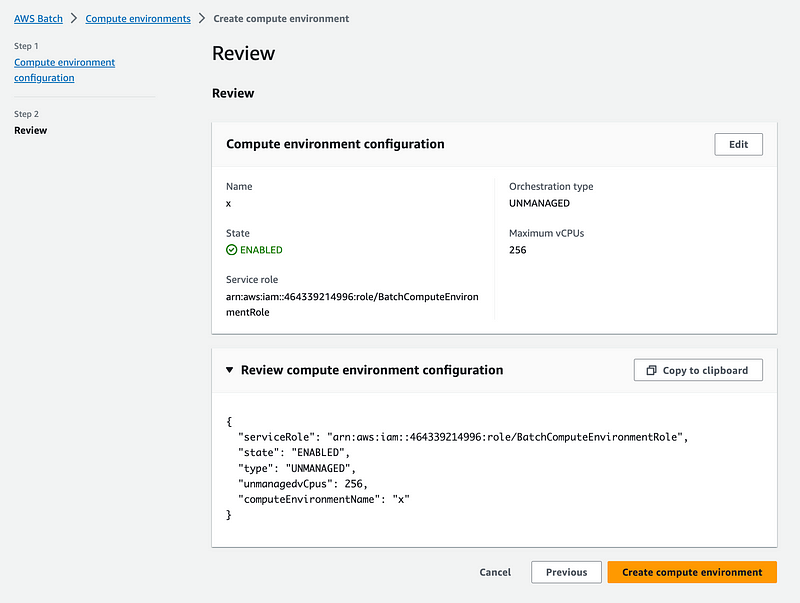

Any roles that have a trust policy that allows the AWS Batch Service to use the role will show up here. You can see that I followed the documentation to create the role and policy on the service linked role documentation page and called it BatchComputeEnvironmentRole and it shows up here.

But after I went through all this it was not clear that I was supposed to use that role and policy in this location so I went back to the documentation to validate I had done this correctly. I think I was reading only the bottom IAM section and then I found the top IAM section of the documentation.

The only link that sounds close is the link to the AWS Batch service IAM role.

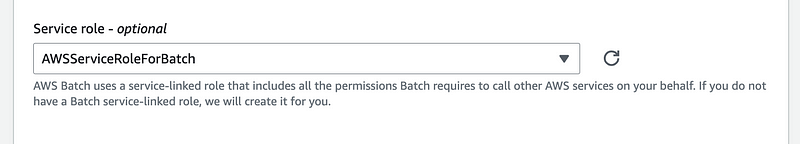

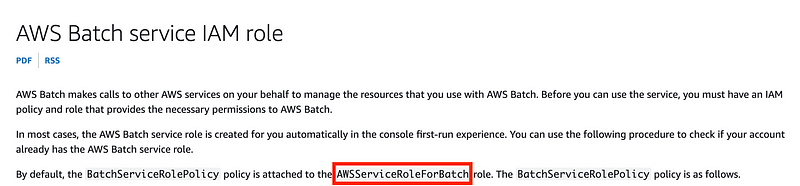

The default role name in the console is AWSServiceRoleForBatch.

In the documentation for the policy I used I see that role name.

So I did create and select the correct role, but to me a name that ties this role to the compute environment is clearer than “Batch Service Role.” Maybe I’ll also create a role with that specific default name to make sure that only the role I create is used, if possible.

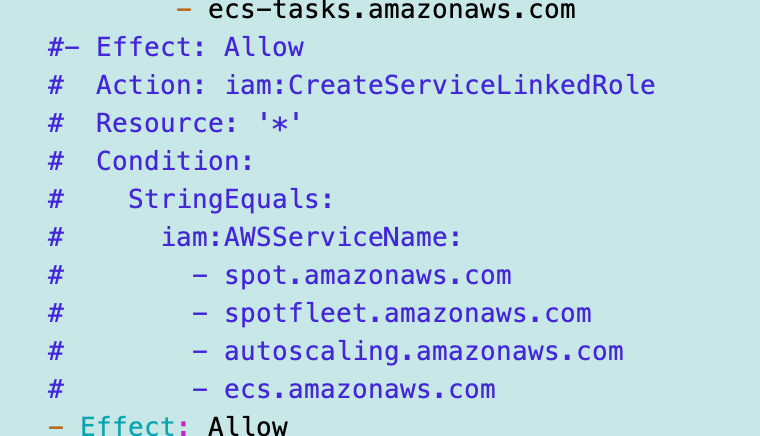

I did remove the Chinese endpoint and the ability to create new service linked roles from the policy and it deployed fine, but I don’t know if it will work. I haven’t run a batch job yet. This is the section I commented out.

IAM conditions and security best practices

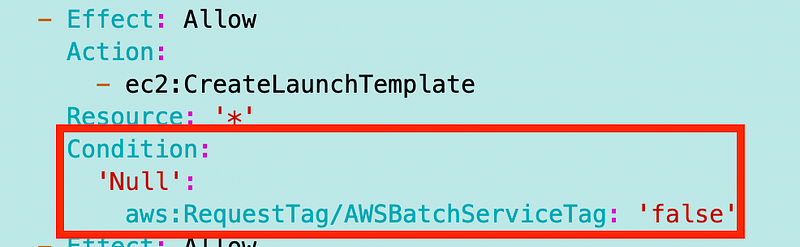

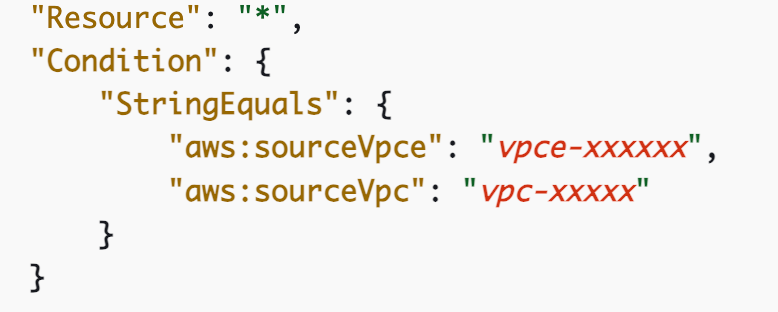

Also the conditions don’t work so I had to remove all instances of this condition:

Is this where I should be adding the cross-service confused deputy prevention condition?

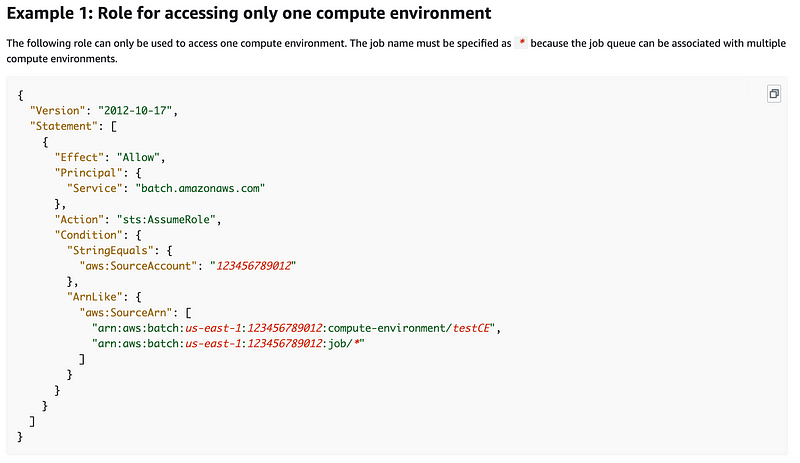

It appears this should be in any trust policy where a service can assume a role:

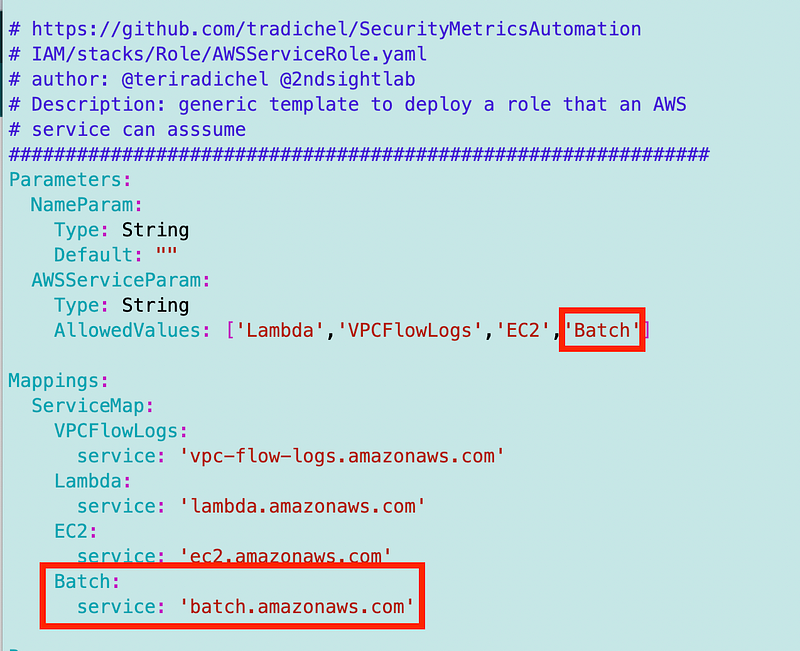

I’ll need to revisit that in my AWS service role and perhaps add it for any service, not just AWS Batch. Recall that I have a common role for deploying an AWS service. I simply had to modify it to work with AWS Batch as well. I’ll need to revisit the condition.
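To make the idea concrete, here is a minimal sketch of a trust policy that lets a single service assume a role only on behalf of resources in our own account. The `aws:SourceAccount` and `aws:SourceArn` condition keys are the ones AWS documents for confused deputy prevention. The function name, account ID, region, and the wildcard compute environment ARN are placeholders I made up for illustration, and I have not verified which ARN Batch actually sends in the request.

```python
import json


def service_trust_policy(service: str, account_id: str, source_arn: str) -> dict:
    """Build a role trust policy for one AWS service principal.

    The Condition block limits sts:AssumeRole to requests the service makes
    on behalf of a resource in our own account (confused deputy prevention).
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service},
                "Action": "sts:AssumeRole",
                "Condition": {
                    "StringEquals": {"aws:SourceAccount": account_id},
                    "ArnLike": {"aws:SourceArn": source_arn},
                },
            }
        ],
    }


# Placeholder account ID and region; the source ARN pattern is an assumption.
policy = service_trust_policy(
    "batch.amazonaws.com",
    "111122223333",
    "arn:aws:batch:us-east-1:111122223333:compute-environment/*",
)
print(json.dumps(policy, indent=2))
```

Because the service principal is a parameter, the same function could in principle back a common service role template, with the source ARN pattern changed per service.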

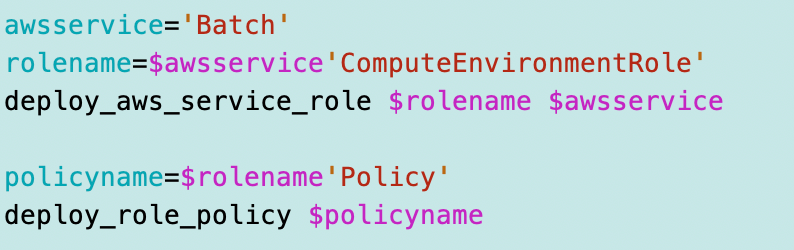

I used the same code basically to deploy the Batch service or compute environment role.

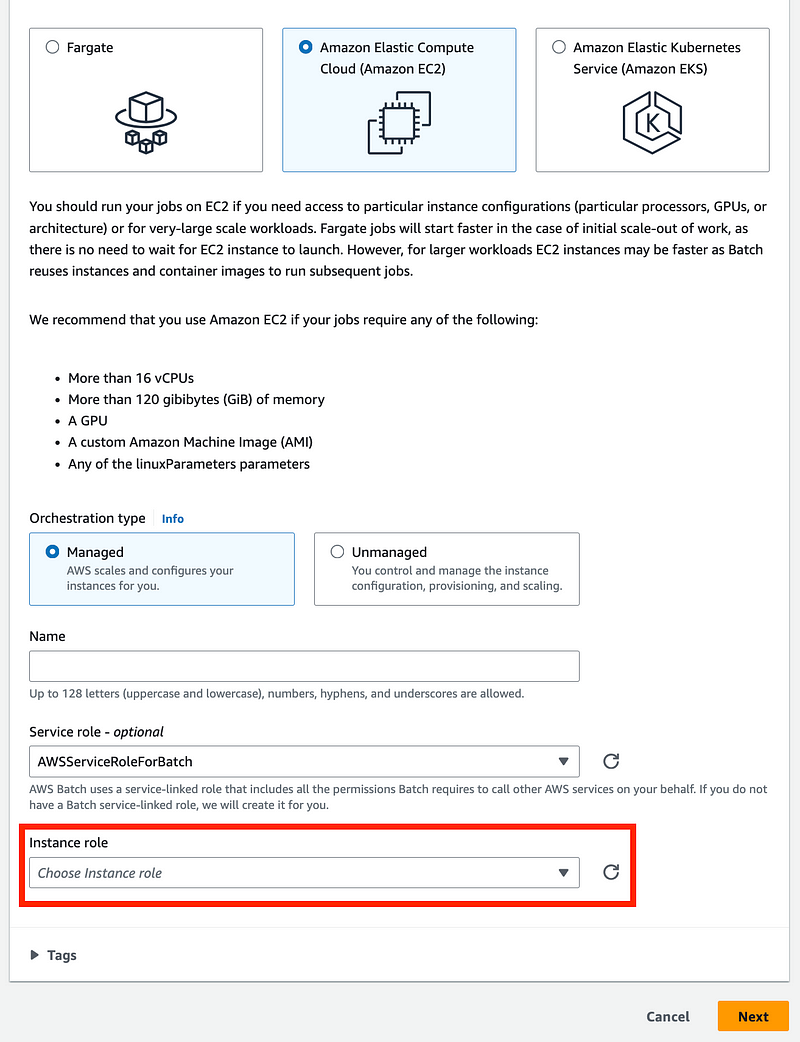

If you look at this same screen for the EC2 instance option it is pretty much the same, but you can only choose IAM roles you create:

EC2 Instance Role

With the EC2 instance option, you also have to create a role for your EC2 instance or let AWS create one for you. When you use the EC2 instance option, you are deploying your own EC2 instance, unlike when you use Fargate and let AWS handle the instance deployment.

OK, but what permissions does the instance need and how does that differ from what the AWS Compute Environment is doing?

I presume that what is called EC2 instance role in the console relates to ECS instance role in the documentation.

Why you need a role for your Batch EC2 Instances

When you use the EC2 instance option, it is going to deploy an ECS (Elastic Container Service) EC2 instance. ECS is a service from AWS that helps you manage containers running on EC2 instances.

ECS instances run an agent on the EC2 instance that manages the containers to do things like:

- Start a particular container from a particular image.

- I presume also — track the details of the container that allow it to be accessible on the network (IP address, etc.)

- Other container management operations.

That agent needs permissions. That’s why you need this Role and Policy if you are using the EC2 option of AWS Batch.

The documentation does not have the sample policy but I presume you can dig around and find it based on the role names and such in the documentation. I just let AWS create the role and policy so I could copy it to create my own and deploy it.
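As a rough reference, here is a sketch of what such an instance role could look like, expressed with the property names CloudFormation uses for `AWS::IAM::Role`. It assumes the role is trusted by `ec2.amazonaws.com` and attaches the AWS managed policy `AmazonEC2ContainerServiceforEC2Role`, which is what I understand the default ECS instance role to use. The role name is hypothetical, and copying the managed policy into your own customer managed policy, as I did, would replace the `ManagedPolicyArns` entry.

```python
import json

# AWS managed policy normally attached to ECS container instances.
ECS_INSTANCE_MANAGED_POLICY = (
    "arn:aws:iam::aws:policy/service-role/AmazonEC2ContainerServiceforEC2Role"
)


def ecs_instance_role_properties(role_name: str) -> dict:
    """Return AWS::IAM::Role style properties for a Batch EC2 instance role."""
    return {
        "RoleName": role_name,
        # The EC2 service assumes this role on behalf of the instance,
        # and the ECS agent on the instance uses the resulting credentials.
        "AssumeRolePolicyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Effect": "Allow",
                    "Principal": {"Service": "ec2.amazonaws.com"},
                    "Action": "sts:AssumeRole",
                }
            ],
        },
        "ManagedPolicyArns": [ECS_INSTANCE_MANAGED_POLICY],
    }


# "BatchEcsInstanceRole" is a made-up name for illustration.
role = ecs_instance_role_properties("BatchEcsInstanceRole")
print(json.dumps(role, indent=2))
```

Note that EC2 consumes the role through an instance profile, so a deployment would also need one that references this role.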

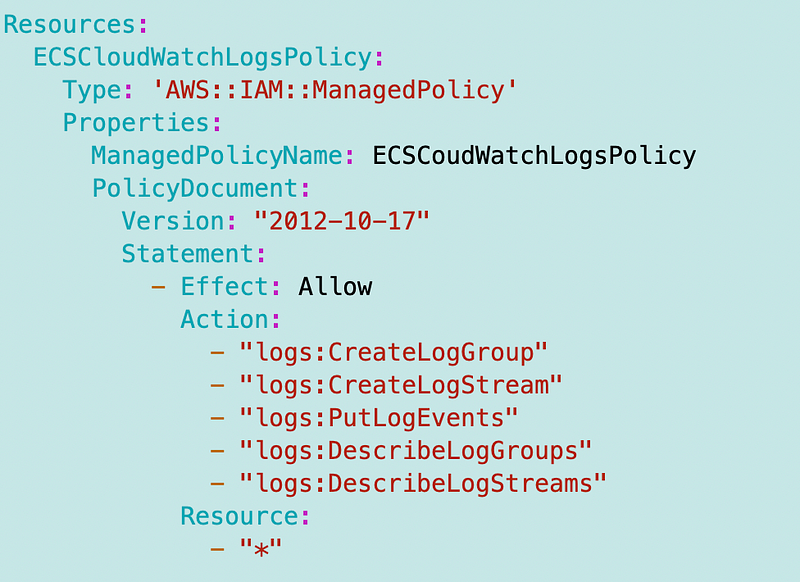

The other thing I noticed was that the documentation had a separate policy for logs. Initially I created it as a standalone policy because I wasn’t sure what to do with it, but it should probably be added to the EC2 instance policy.

As noted in the prior post I am creating the ECS log policy, but would like to restrict that to a specific log ARN once I understand the format. I don’t know exactly how this will be used yet based on what I glanced at in the console so I simply deployed the policy.
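Here is a sketch of what restricting the log policy to one log group might look like. It assumes the jobs write to the `/aws/batch/job` log group, which I believe is the Batch default, and it lists both the log group ARN and the `:*` form so that stream-level actions are covered. The function names, region, and account ID are placeholders, and the action list is trimmed to the two I expect the agent to need for writing logs, which is an assumption until I test it.

```python
import json


def log_group_arn(region: str, account_id: str, log_group: str) -> str:
    """Return the CloudWatch Logs ARN for a log group."""
    return f"arn:aws:logs:{region}:{account_id}:log-group:{log_group}"


def ecs_logs_policy(region: str, account_id: str, log_group: str) -> dict:
    """Allow creating streams and writing events in a single log group only."""
    group = log_group_arn(region, account_id, log_group)
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                # The ":*" form matches the log streams inside the group.
                "Resource": [group, f"{group}:*"],
            }
        ],
    }


# Placeholder region and account ID.
logs_policy = ecs_logs_policy("us-east-1", "111122223333", "/aws/batch/job")
print(json.dumps(logs_policy, indent=2))
```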

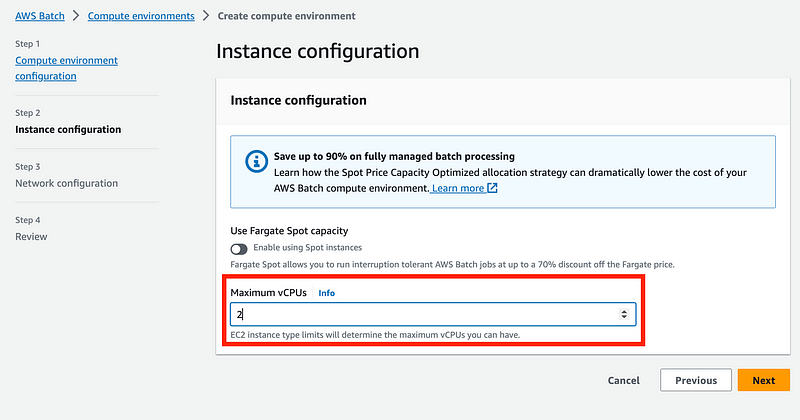

Instance Configuration

There are only two options for instance configuration with Fargate. What’s cool here is that it looks like you can use Spot capacity to save money. I’ll get to that in a minute. Also, you might want to set the max vCPUs to limit the instance types. Otherwise you might end up with a very expensive instance. I’m not sure how AWS manages all that; it would require additional testing. If you use an EC2 environment, you can be more specific.

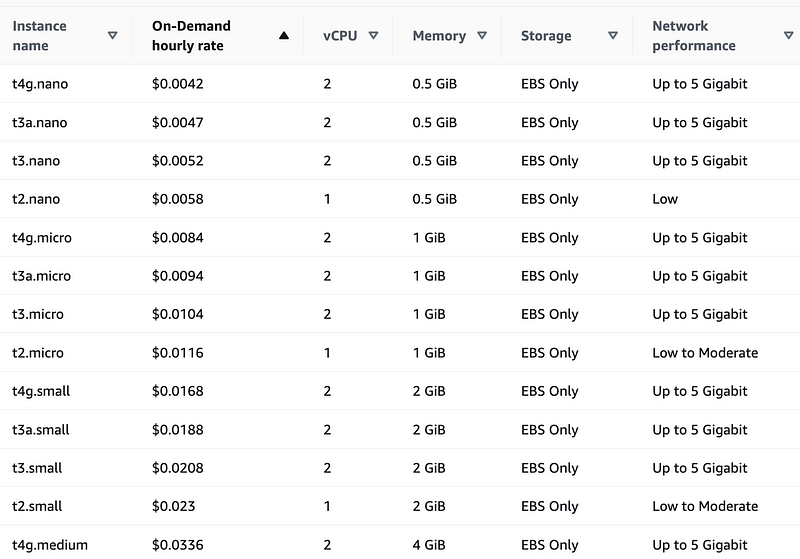

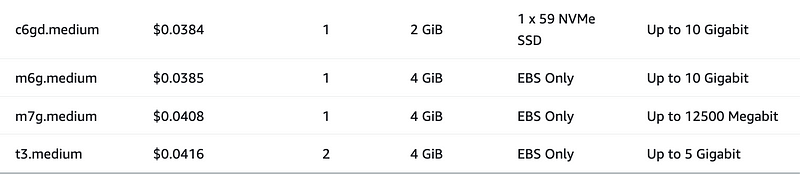

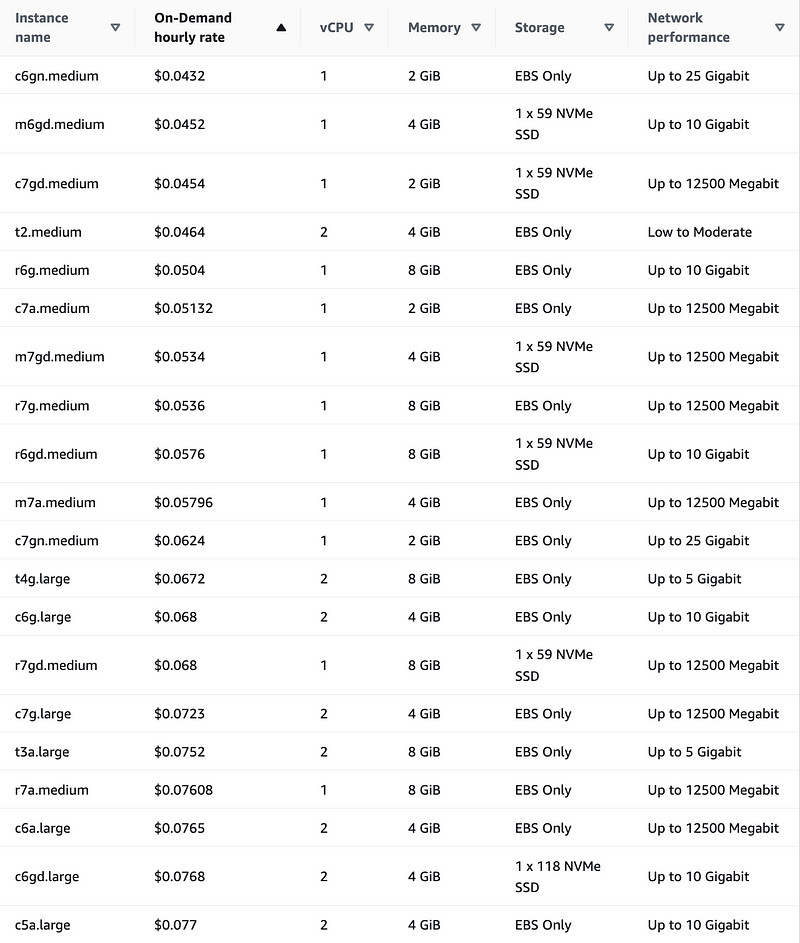

Why does this matter? Check the cost of EC2 instances. Sort by cost (on-demand hourly rate). Now I don’t know if the AWS managed options use the “t” type instances, but I didn’t see an option to select them with your own configuration, so skip all these:

The next most inexpensive options use 1 vCPU:

However, limiting to 1 vCPU doesn’t always equate to the lowest price. Some 2 vCPU instances are lower cost than some 1 vCPU instances.

If you really want to control this, choose the EC2 option.

On that note, the other difference on the first page if you choose EC2 is the option to choose Managed or Unmanaged.

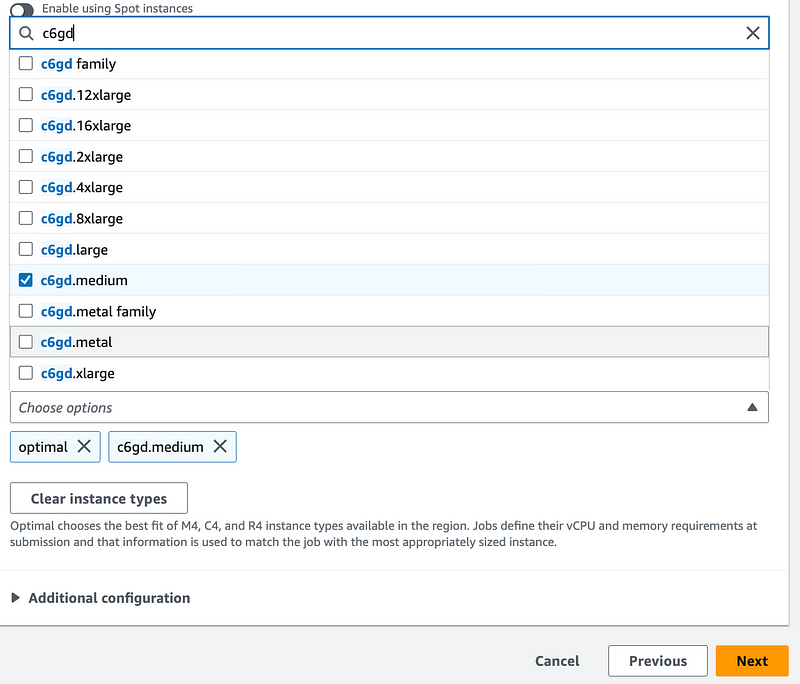

If you choose Managed, you can choose the EC2 instance type. So you can search for the lowest priced instance type from the list above which is the c6gd.medium instance type.

With the Unmanaged option, you simply give the environment a name, roles, and that’s it. You don’t select an EC2 instance. I guess that creates an ECS cluster for you that you manage, scale and size yourself.
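To show where the settings from the console end up, here is a sketch of the request body for a managed EC2 compute environment, using the field names from the Batch `CreateComputeEnvironment` API. All ARNs, subnet IDs, and security group IDs are placeholders, and the function name is my own. Capping `maxvCpus` and listing a single instance type is how I would try to keep the environment from scaling into expensive instances.

```python
import json


def managed_ec2_compute_environment(
    name: str,
    service_role_arn: str,
    instance_profile_arn: str,
    subnets: list,
    security_group_ids: list,
    instance_types: list,
    max_vcpus: int,
) -> dict:
    """Build a CreateComputeEnvironment request for a managed EC2 environment."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",  # Batch creates and scales the ECS cluster
        "state": "ENABLED",
        "serviceRole": service_role_arn,
        "computeResources": {
            "type": "EC2",
            "minvCpus": 0,  # scale to zero when no jobs are queued
            "maxvCpus": max_vcpus,
            "instanceTypes": instance_types,
            "instanceRole": instance_profile_arn,
            "subnets": subnets,
            "securityGroupIds": security_group_ids,
        },
    }


# Every identifier below is a placeholder.
request = managed_ec2_compute_environment(
    name="example-batch-ec2",
    service_role_arn="arn:aws:iam::111122223333:role/BatchComputeEnvironmentRole",
    instance_profile_arn="arn:aws:iam::111122223333:instance-profile/BatchEcsInstanceRole",
    subnets=["subnet-00000000"],
    security_group_ids=["sg-00000000"],
    instance_types=["c6gd.medium"],
    max_vcpus=2,
)
print(json.dumps(request, indent=2))
```

With boto3 installed, a dictionary like this could be passed as keyword arguments to the Batch client's `create_compute_environment` call. I have not run that against this exact configuration.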

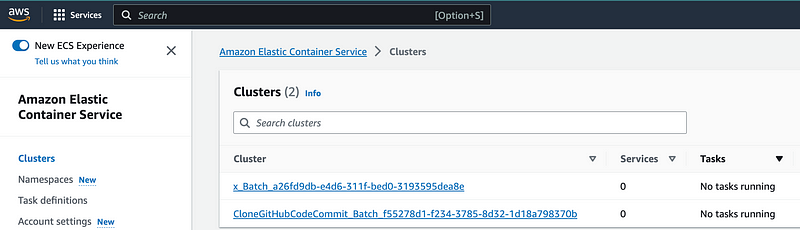

So this led me to an Aha! moment. The Batch compute environment is just a cluster that runs your batch containers. It’s either ECS (or Fargate that uses ECS under the hood) or Kubernetes. It would be simpler if the documentation described it that way. Batch schedules jobs to run with containers. It needs somewhere to run the containers and you can configure it yourself or let AWS configure it for you.

So I head over to the ECS page and I see two clusters for the two Batch compute environments I created. Maybe just call them clusters and provide a link to the related service from Batch to make that clearer.

Anyway. Got it. I’m going to let Batch run the ECS environment for now but I’m going to stick with EC2 for the moment. Maybe I’ll test Fargate out more later if I can figure out how to encrypt everything with a customer managed key. I also want to review the cost of all this versus simply executing a container on an EC2 instance versus whatever Batch is doing on top of that.

The other thing I noticed is that I could delete the underlying clusters without deleting the Batch Compute Environment. That could be confusing. The status of the compute environment turned to INVALID.

Additionally, I tried to delete a Batch compute environment after disabling it and it never got deleted even though it said it got the request.

I presume part of the cost of Batch is the underlying compute environment. What if you have a cluster running but you are not using it constantly? Are you paying for that, versus a Fargate container that only charges you when you deploy it? Something to consider and test.

There is no additional charge for AWS Batch. You pay for AWS resources (e.g. EC2 instances, AWS Lambda functions or AWS Fargate) you create to store and run your application. You can use your Reserved Instances, Savings Plan, EC2 Spot Instances, and Fargate with AWS Batch by specifying your compute-type requirements when setting up your AWS Batch compute environments. Discounts will be applied at billing time.

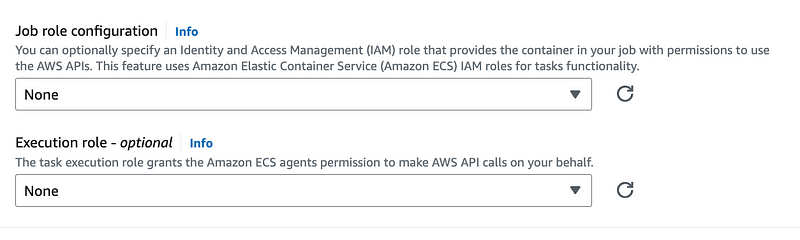

Sorting out job and execution roles

Somewhere, somehow we need to grant permissions to do the things we were doing in the Lambda function to the batch job. This is where I got totally confused. I’m using the Batch service so I presumed I would need to grant Batch permission to assume the role.

Here was my initial train of thought.

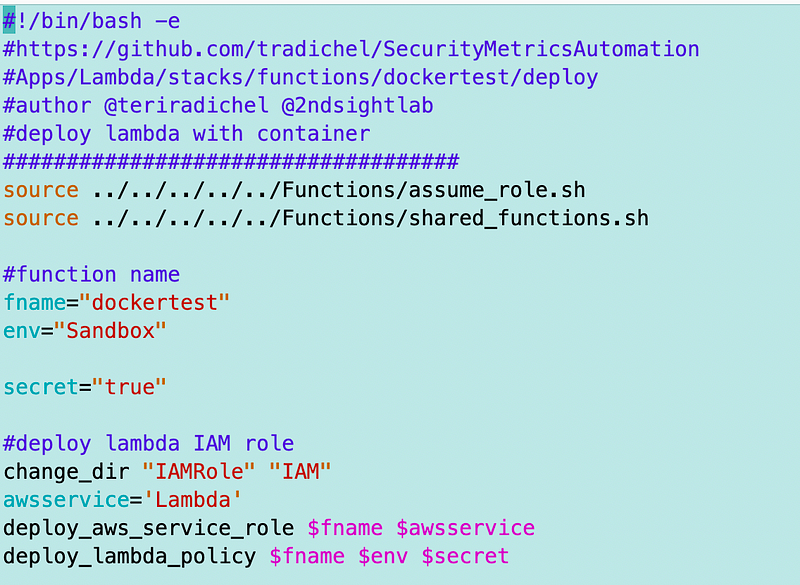

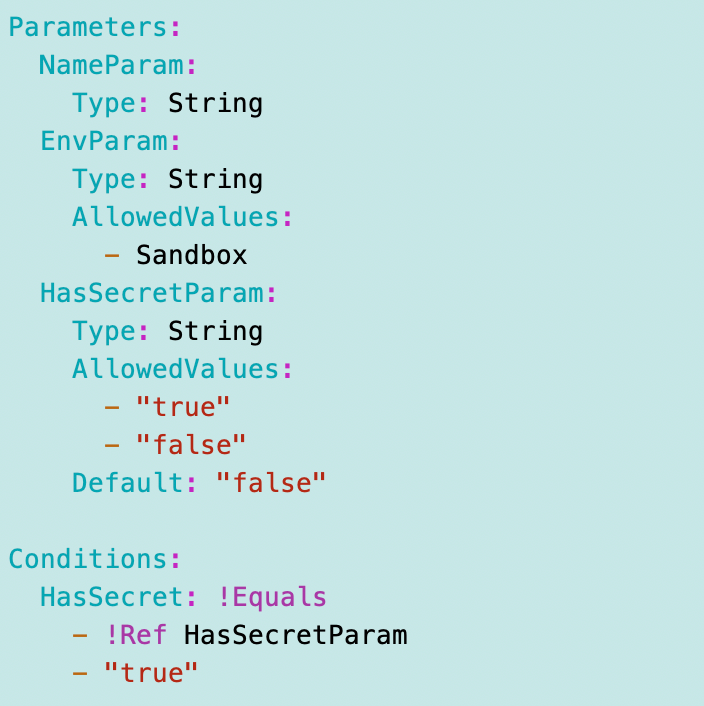

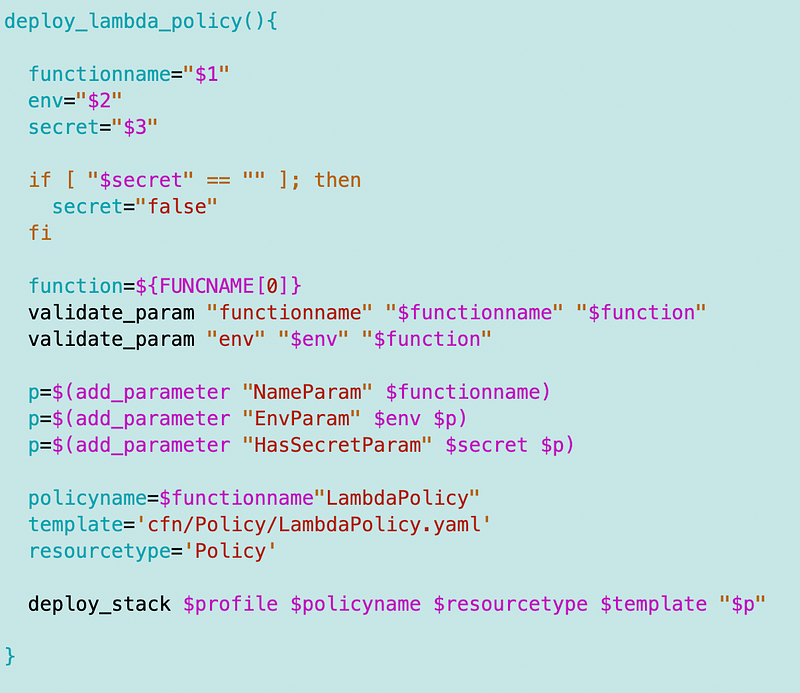

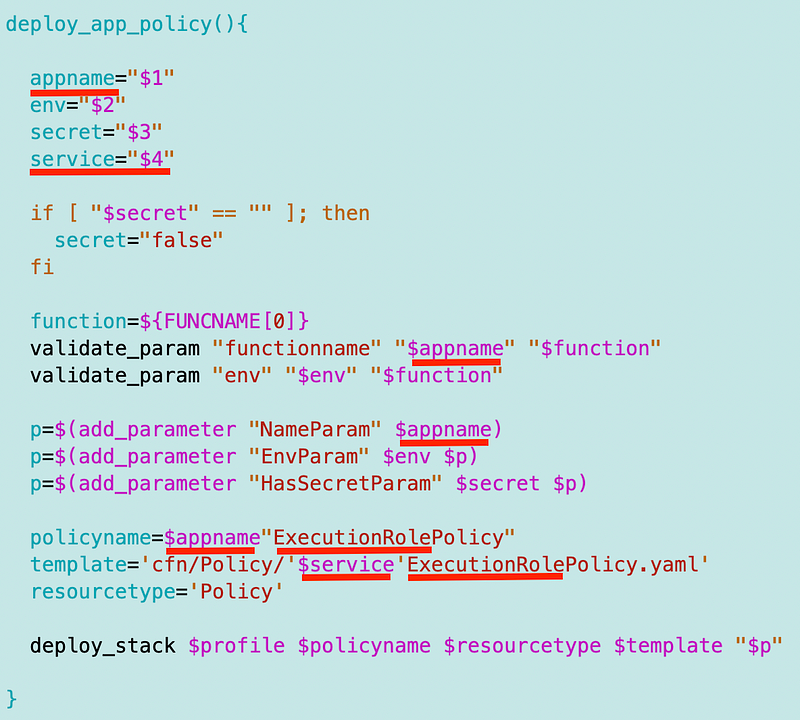

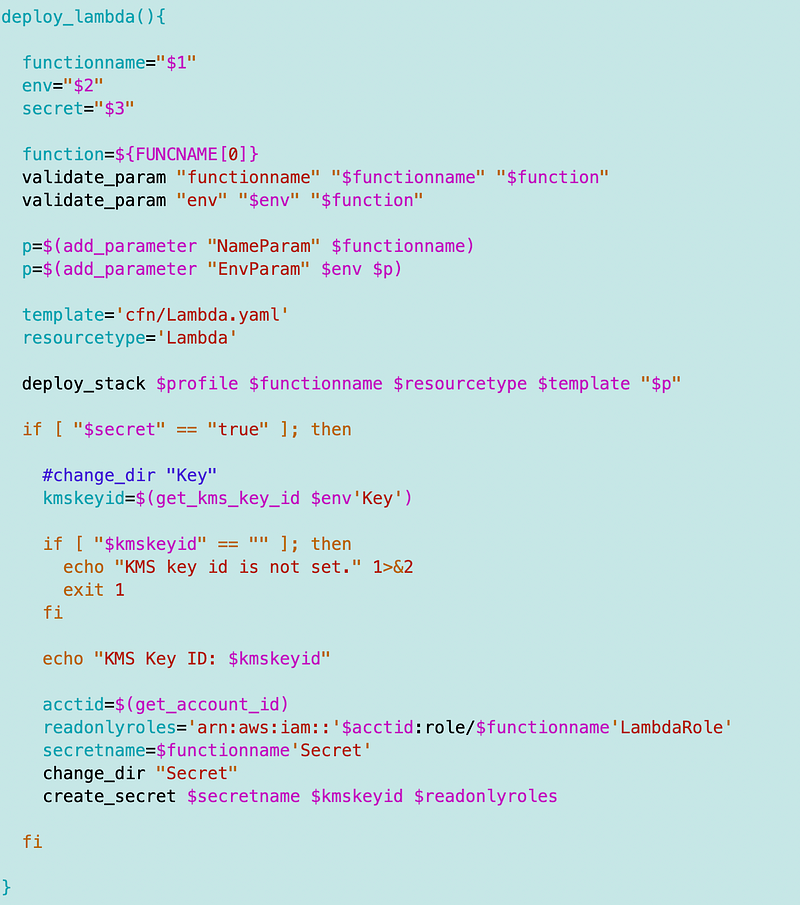

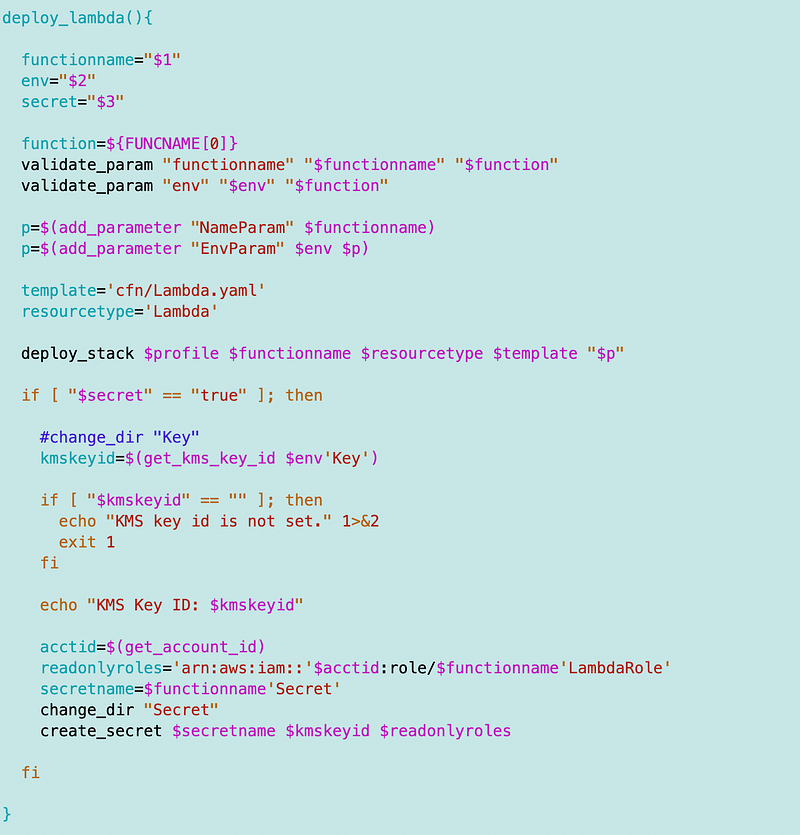

Recall that I’m deploying my Lambda execution role and function like this:

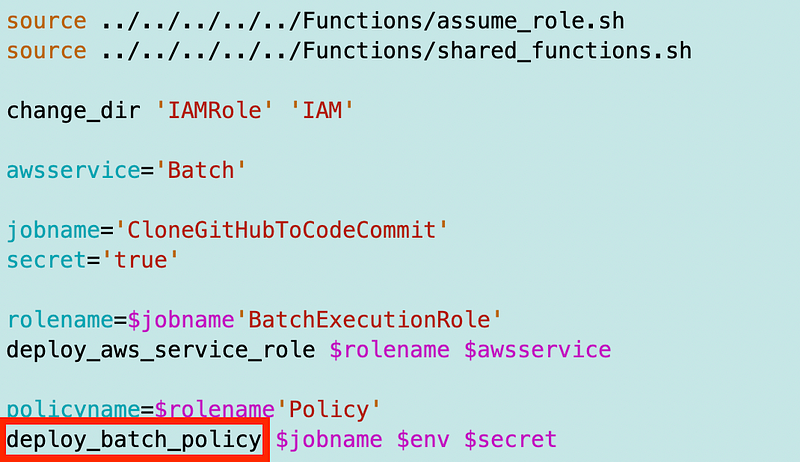

I can deploy the batch role pretty much the same way except that I’m going to need a deploy_batch_policy function instead of deploy_lambda_policy.

It could be that I could create a “deploy_app_policy” function, but I’m not sure yet if they are that similar.

I can reuse the AWS Service Role template again but this time we’ll name it [job_name]BatchExecutionRole.

For the Lambda policy, I pass in parameters indicating to which environment the Lambda function was deployed and whether or not it has a secret. These are all probably still applicable.

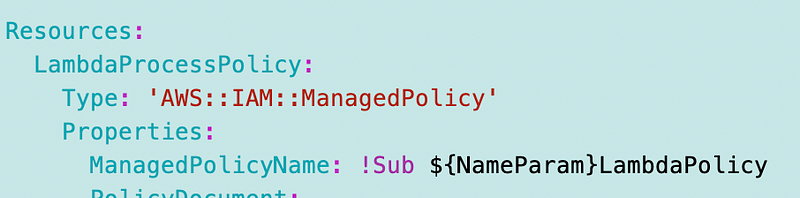

I used a common naming convention to set the policy name. We can change Lambda to Batch below, or we can make the service a parameter potentially, but we’ll need to see how much is common between the two functions.

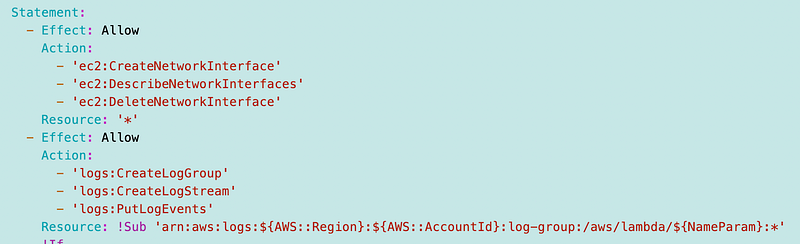

Lambda required the following in the execution role:

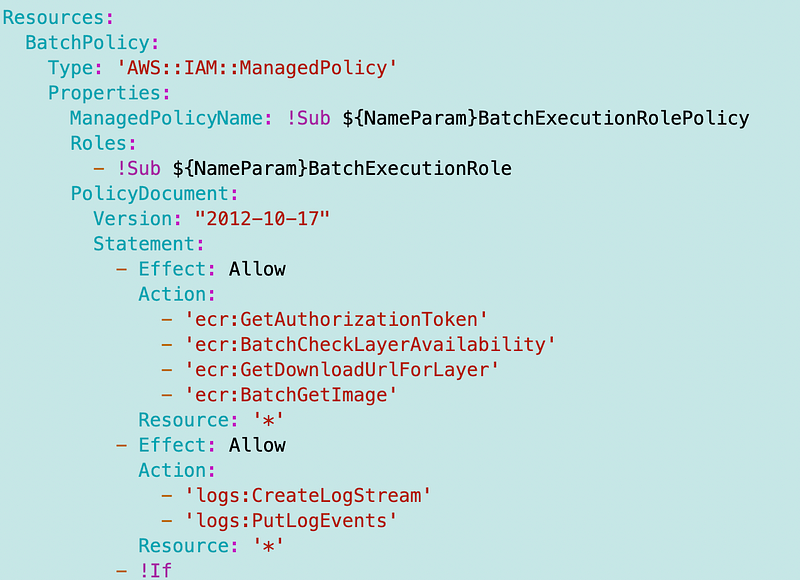

What does a Batch execution policy require?

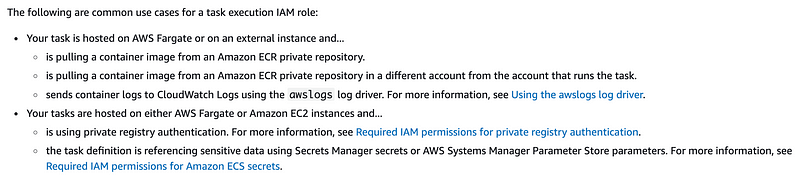

The documentation says Batch requires the following for “common use cases.” So are these required or not? That is not clear to me from reading the documentation. It’s also not clear if these permissions are required for the container, the EC2 instance, or what.

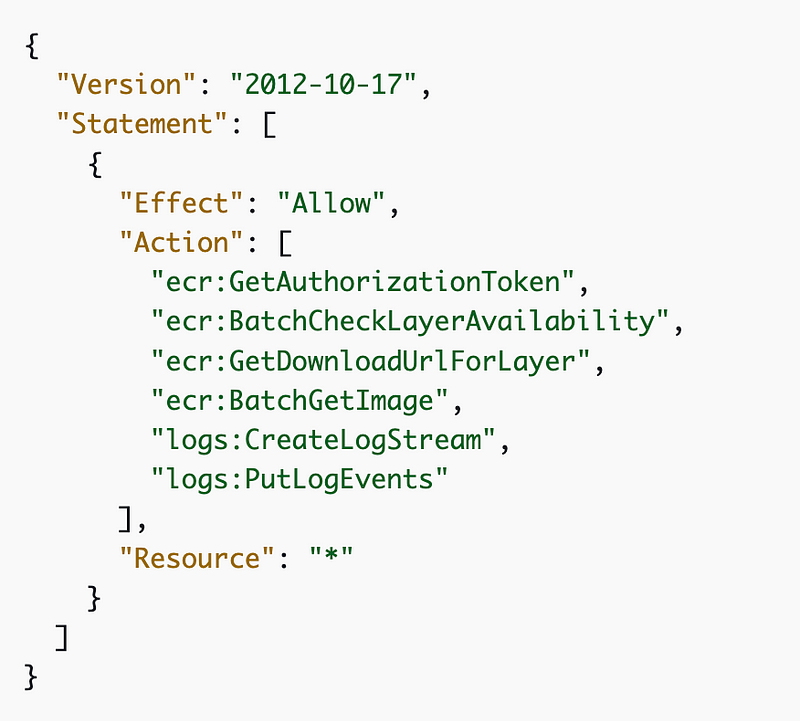

Why do I need ECR GetAuthorizationToken ?

The GetAuthorizationToken documentation says:

Retrieves an authorization token. An authorization token represents your IAM authentication credentials and can be used to access any Amazon ECR registry that your IAM principal has access to. The authorization token is valid for 12 hours.

It doesn’t seem like the job I’m running should have or need this permission. The service itself or whatever is deploying the containers needs that, not the actual job?
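Whichever role ends up needing these ECR permissions, one way to narrow them is to scope the image pull actions to a single repository. As far as I know, `ecr:GetAuthorizationToken` does not support resource-level permissions, so it has to stay on `"*"`, while the other three actions can be limited to a repository ARN. The sketch below assumes a private repository in the same account. The function name, repository name, region, and account ID are placeholders.

```python
import json


def ecr_pull_policy(region: str, account_id: str, repository: str) -> dict:
    """Allow pulling images from one ECR repository only."""
    repo_arn = f"arn:aws:ecr:{region}:{account_id}:repository/{repository}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Login token: cannot be restricted to a repository.
                "Sid": "EcrLogin",
                "Effect": "Allow",
                "Action": "ecr:GetAuthorizationToken",
                "Resource": "*",
            },
            {
                # Image pull actions: restricted to the one repository.
                "Sid": "PullOneRepository",
                "Effect": "Allow",
                "Action": [
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:GetDownloadUrlForLayer",
                    "ecr:BatchGetImage",
                ],
                "Resource": repo_arn,
            },
        ],
    }


# Placeholder region, account ID, and repository name.
pull_policy = ecr_pull_policy("us-east-1", "111122223333", "example-batch-job")
print(json.dumps(pull_policy, indent=2))
```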

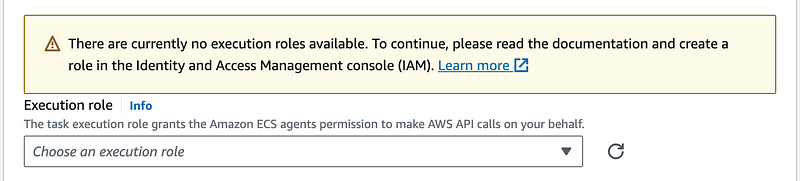

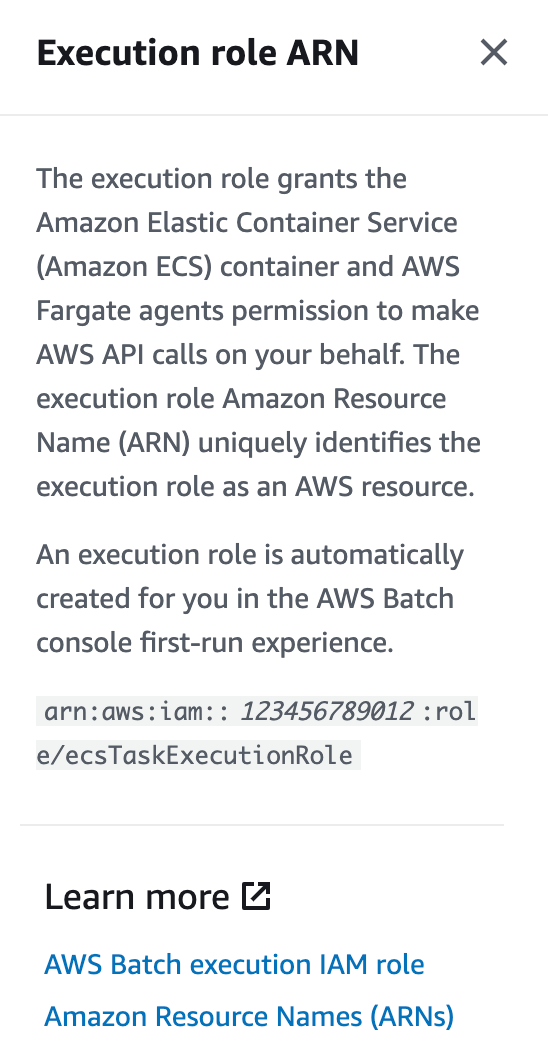

I had to look at the console to try to get clarification. Let’s click the “Info” link below.

So this role allows your Batch job to make API calls on your behalf. Which API calls? Only the ones we need (KMS and Secrets Manager actions) or all the actions specified above because Batch requires them plus whatever permission your job requires?

When you click on the Learn more link it takes you to the AWS Batch Execution role page which links to an ECS role that gets assigned to an EC2 instance. As I figured out below this doesn’t really make sense at all.

Initially I thought, well if you run a batch job with EC2, the role is assigned to the EC2 instance itself, not the container on the instance. That’s what it sounds like. So you’re running one container on one EC2 instance? That’s not very efficient. I get the same thing when I click Learn more for Fargate — it leads to the page talking about assigning an ECS role to an EC2 instance even though with Fargate you’re not running EC2 instances right? In that case, shouldn’t the documentation be talking about a role assigned to a container?

Well, I’m just going to test it out and look at the logs.

As mentioned, it feels like if we have to add the following permissions to our batch execution role then we are mixing up control plane and data plane permissions, but perhaps not if we lock down container retrieval to the specific container the job is allowed to run. The policy provided doesn’t do that.

"ecr:GetAuthorizationToken",

"ecr:BatchCheckLayerAvailability",

"ecr:GetDownloadUrlForLayer",

"ecr:BatchGetImage",

So I took my Lambda policy and I changed everywhere it said “Lambda” to “Batch”. Then I swapped out the Lambda specific roles for the Batch roles.

Next I took a look at deploy_lambda_policy and it really does look like we could just call this deploy_app_policy for both Lambda and Batch instead of having two separate functions.

I had to make a few changes and I will have to go back and modify my Lambda policy deployment:

- Pass in the appname instead of the Lambda function name.

- Change all references to Lambda function name to appname.

- Pass in the service (Lambda or Batch at the moment)

- Change the template name to dynamically add the service name.

- Add ExecutionRole to the policy name.
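The naming part of those changes can be sketched as follows. My actual deployment code is a shell script that is not reproduced here, so treat this Python version purely as an illustration: the function names and the exact name formats are assumptions based on the `[job_name]BatchExecutionRole` convention mentioned earlier.

```python
# Services the shared deploy function is meant to handle for now.
SUPPORTED_SERVICES = ("Lambda", "Batch")


def execution_role_name(appname: str, service: str) -> str:
    """Return the execution role name for an app on a given service."""
    if not appname:
        raise ValueError("appname must not be empty")
    if service not in SUPPORTED_SERVICES:
        raise ValueError(f"unsupported service: {service}")
    return f"{appname}{service}ExecutionRole"


def execution_policy_name(appname: str, service: str) -> str:
    """Return the policy name that pairs with the execution role."""
    return f"{execution_role_name(appname, service)}Policy"


# "MyJob" is a placeholder app name.
print(execution_role_name("MyJob", "Batch"))    # MyJobBatchExecutionRole
print(execution_policy_name("MyJob", "Lambda"))  # MyJobLambdaExecutionRolePolicy
```

Making the service a validated parameter keeps one code path for both Lambda and Batch and fails early on a typo in the service name.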

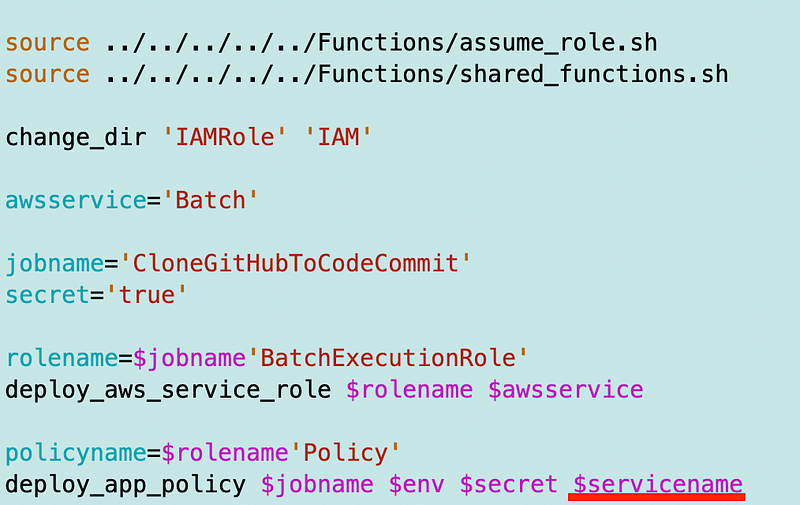

Then I just add $servicename when I call the script.

Now recall that I’m deploying a secret when I deploy the Lambda function if specified:

I haven’t deployed a secret yet for my batch job so that’s throwing an error.

Recall that I was deploying my secret with my Lambda function.

I pulled the secret code out of the above to deploy a secret for now so I could deploy the role.

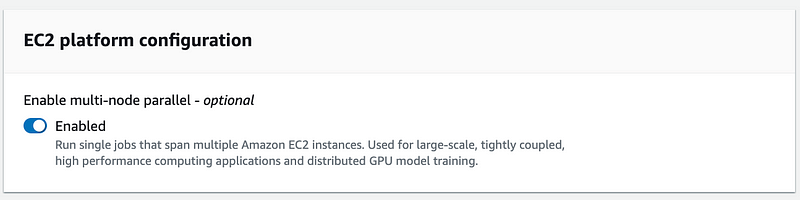

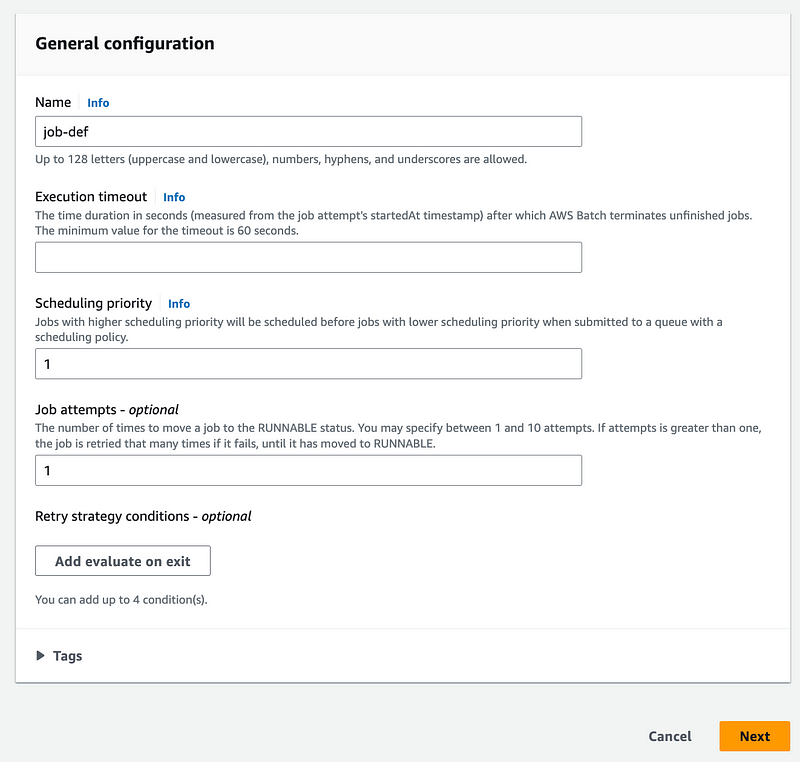

Then I tried to create a job definition using the EC2 option.

I disabled multi-node because that sounds like an expense I don’t need.

I added a name and clicked next.

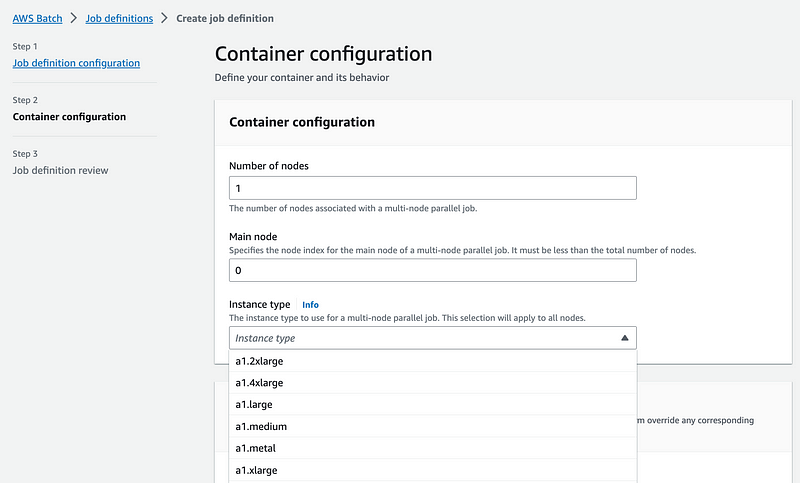

Here again I’m choosing an instance type. Why? Isn’t that defined by the compute environment? Well, I was reading something about single node batch definitions. Does this mean you don’t need to create a compute environment if you’re only using a single node? That’s not clear either.

But anyway.

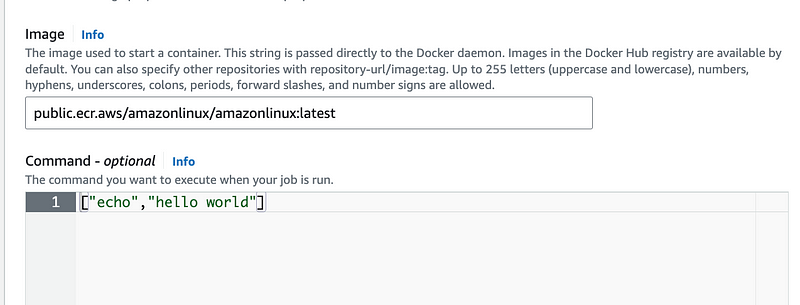

Next you can choose an image and pass in a command.

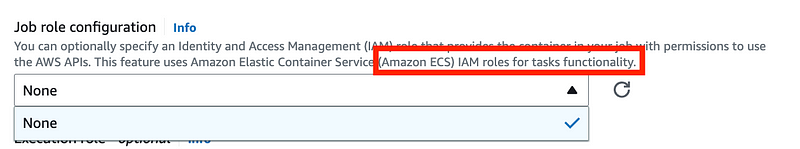

Here’s where I find my next mistake. The job execution is not performed by the Batch service, it’s performed by ECS tasks. My roles that can be assumed by AWS Batch do not appear here. So I’m looking for an ECS task role explanation in the Batch documentation and I cannot find one. Is this just referring to the ECS instance role? But that doesn’t appear here either and doesn’t make sense.

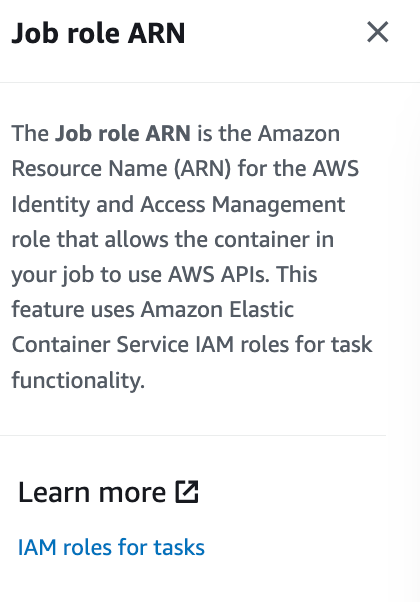

The Info link doesn’t say much more.

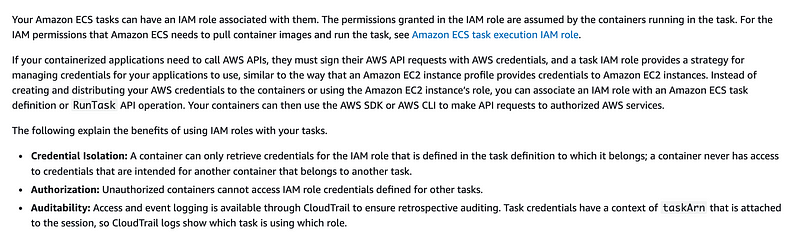

Let’s try the IAM Roles for tasks link.

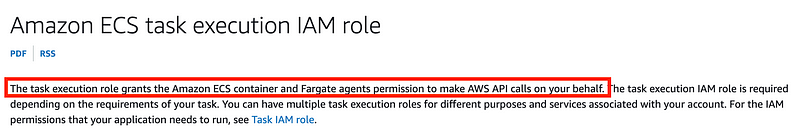

Honestly that wasn’t that helpful. It links to a page explaining how to fill out this particular form. I search for roles and find yet another link to Amazon ECS task execution IAM roles.

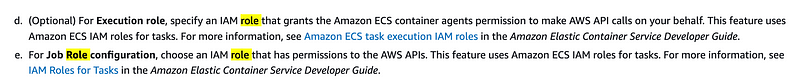

Now here’s another confusing point. Notice the names of the two roles in the documentation. They are in the reverse order of what appears on the screen in the Batch console. Initially I had these two backwards in my mind as a result.

The execution role (the second role on the screen) is used by the ECS agent on the EC2 instance to start a container from a specific image.

The job role is used by the container to run whatever job you are trying to run.

The documentation and the UI are in a different order which of course I didn’t notice initially.
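To keep the two roles straight, it helps to see where each one goes in a job definition. This sketch uses the `containerProperties` field names from the Batch `RegisterJobDefinition` API: `executionRoleArn` is the role the ECS agent uses to pull the image and start the container, and `jobRoleArn` is the role the code inside the container runs with. The image URI, command, role ARNs, and resource values are placeholders, and the function name is my own.

```python
import json


def container_job_definition(
    name: str,
    image: str,
    command: list,
    execution_role_arn: str,
    job_role_arn: str,
) -> dict:
    """Build a RegisterJobDefinition request for a single-container job."""
    return {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": {
            "image": image,
            "command": command,
            # Used by the ECS agent: pull the image, start the container.
            "executionRoleArn": execution_role_arn,
            # Used by the job itself: whatever AWS calls the code makes.
            "jobRoleArn": job_role_arn,
            "resourceRequirements": [
                {"type": "VCPU", "value": "1"},
                {"type": "MEMORY", "value": "2048"},
            ],
        },
    }


# Every identifier below is a placeholder.
job_def = container_job_definition(
    name="example-job",
    image="111122223333.dkr.ecr.us-east-1.amazonaws.com/example-batch-job:latest",
    command=["./run.sh"],
    execution_role_arn="arn:aws:iam::111122223333:role/ExampleBatchExecutionRole",
    job_role_arn="arn:aws:iam::111122223333:role/ExampleBatchJobRole",
)
print(json.dumps(job_def, indent=2))
```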

ECS Agent Execution Role

Let’s take a look at the execution role. And by the way I need to rename things now so I can align with the AWS terminology to make things easier to understand.

This sounds more promising than the Batch documentation in terms of creating a role that will work at that point of the configuration.

As mentioned above, the agent needs permission to get the container from a private registry. It also appears that a task may need permission to access secrets (yes it does in our case.) I presume the permissions for AWS CodeCommit would go here as well.

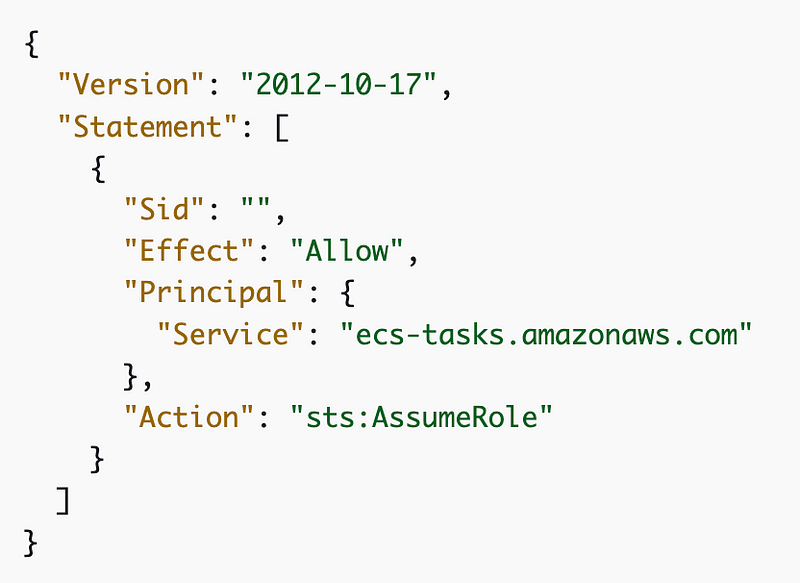

We need to allow ecs-tasks to assume the role. I presume we could add the confused deputy restriction here as well somehow. We’d need to see what ARNs are included in the request.

We can also limit access to VPC Endpoints when using Fargate. I don’t know why the documentation here references Fargate specifically.

Job role

The job role, finally, is the role that our container uses to do whatever it needs to do. So I’m not sure we actually need to give our execution role in the last section access to AWS Secrets Manager. It will need KMS to pull the encrypted container from the private registry. We’ll give this job role the Secrets Manager and KMS access to decrypt the credentials to assume the role via MFA I was testing in Lambda.

The job role needs a trust policy that allows ecs-tasks.amazonaws.com to assume the role and should use the conditions shown below to limit the confused-deputy attack.
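Putting the job role together, here is a sketch of both halves: a trust policy for `ecs-tasks.amazonaws.com` with the confused deputy conditions, and a permissions policy limited to one secret and one KMS key. The `aws:SourceArn` pattern follows the form used in the ECS documentation, but I have not yet confirmed which ARN is present when Batch launches the task, so that condition is an assumption to verify. The function names and all ARNs are placeholders.

```python
import json


def job_role_trust_policy(region: str, account_id: str) -> dict:
    """Let ECS tasks in our own account and region assume the job role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ecs-tasks.amazonaws.com"},
                "Action": "sts:AssumeRole",
                "Condition": {
                    "ArnLike": {
                        "aws:SourceArn": f"arn:aws:ecs:{region}:{account_id}:*"
                    },
                    "StringEquals": {"aws:SourceAccount": account_id},
                },
            }
        ],
    }


def job_role_permissions(secret_arn: str, kms_key_arn: str) -> dict:
    """Allow the job to read one secret and decrypt with one KMS key."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadJobSecret",
                "Effect": "Allow",
                "Action": "secretsmanager:GetSecretValue",
                "Resource": secret_arn,
            },
            {
                "Sid": "DecryptJobSecret",
                "Effect": "Allow",
                "Action": "kms:Decrypt",
                "Resource": kms_key_arn,
            },
        ],
    }


# Placeholder region, account ID, secret ARN, and key ARN.
trust = job_role_trust_policy("us-east-1", "111122223333")
permissions = job_role_permissions(
    "arn:aws:secretsmanager:us-east-1:111122223333:secret:example-secret",
    "arn:aws:kms:us-east-1:111122223333:key/00000000-0000-0000-0000-000000000000",
)
print(json.dumps(trust, indent=2))
print(json.dumps(permissions, indent=2))
```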

Here’s the documentation we need for the job role:

And there are a whole bunch of issues related to job execution roles that I’m not going to get into in this post:

But at least now we know (I think) which roles we need to use AWS Batch.

Spot instance role

By the way, there’s also a role if you want to use Spot instances, but I’m not addressing that here either as we’ve covered enough ground in this post.

EventBridge Role

There’s also a role if you want to integrate with Amazon EventBridge. I’m not using that just yet.

MFA User and Role

Not covered in the AWS Batch documentation are the user I am creating with credentials that can be used with MFA and the role that requires MFA to assume. That was what I was testing on Lambda and am now trying to test on AWS Batch. Those will be essentially the same as what we had for AWS Lambda but renamed to align with the batch job I’m creating.

Networking

The networking options for the Batch service are pretty straightforward. I used all the networking I already created for Lambda in this post and the ones following it, where I added additional VPC endpoints for the services I’m using. The posts include the CloudFormation code required to deploy the networking.

Why Batch?

So why would you want to use AWS Batch instead of just running a container on ECS or even a single EC2 instance with a container?

- Typically batch jobs require scheduling. Batch helps with that.

- You can also trigger various jobs to start after another one completes. There’s information about state and the step functions in the Batch documentation.

- If your job is written to be re-runnable it can automatically retry your job.

- You can trigger a batch job in response to events with EventBridge.
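Two of those features, job dependencies and automatic retries, show up directly in the request used to submit a job. The sketch below builds a request with the `dependsOn` and `retryStrategy` fields from the Batch `SubmitJob` API. The function name, job names, queue name, and job ID are placeholders, and the check on `attempts` reflects my understanding that Batch accepts between 1 and 10 attempts.

```python
import json


def submit_job_request(
    job_name: str,
    job_queue: str,
    job_definition: str,
    depends_on_job_ids: tuple = (),
    attempts: int = 1,
) -> dict:
    """Build a SubmitJob request with optional dependencies and retries."""
    if not 1 <= attempts <= 10:
        raise ValueError("attempts must be between 1 and 10")
    request = {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        # Batch re-runs a failed job until it has made this many attempts.
        "retryStrategy": {"attempts": attempts},
    }
    if depends_on_job_ids:
        # This job waits until every listed job has completed.
        request["dependsOn"] = [{"jobId": job_id} for job_id in depends_on_job_ids]
    return request


# Placeholder names and job ID.
second_step = submit_job_request(
    job_name="example-step-2",
    job_queue="example-queue",
    job_definition="example-job",
    depends_on_job_ids=("00000000-0000-0000-0000-000000000000",),
    attempts=3,
)
print(json.dumps(second_step, indent=2))
```

Retries only make sense if the job is safe to run more than once, which is why re-runnable jobs matter here.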

Well, deciphering this documentation took a couple of days. Next, I’ll try to write the code to deploy all these roles and maybe test them out manually to start.

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2023

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab

Need Help With Cybersecurity, Cloud, or Application Security?

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

🔒 Request a penetration test or security assessment

🔒 Schedule a consulting call

🔒 Cybersecurity Speaker for Presentation

Follow for more stories like this:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

❤️ Sign Up my Medium Email List

❤️ Twitter: @teriradichel

❤️ LinkedIn: https://www.linkedin.com/in/teriradichel

❤️ Mastodon: @teriradichel@infosec.exchange

❤️ Facebook: 2nd Sight Lab

❤️ YouTube: @2ndsightlab