Analyzing IAM Roles and Policies for MFA to Run AWS Batch

ACM.333 Converting my Lambda roles to AWS Batch roles, plus the other roles and policies required to run AWS Batch

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

⚙️ Check out my series on Automating Cybersecurity Metrics | Code.

🔒 Related Stories: IAM | Secure Code | Container Security | Batch

💻 Free Content on Jobs in Cybersecurity | ✉️ Sign up for the Email List

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

In the last post, I rebuilt the container I was using for Lambda so it runs in AWS Batch. Instead of relying on the Lambda Runtime Interface Emulator locally, I can just run the container. I also simplified my code structure a bit so everything comes from a jobs folder.

In this post I’m going to analyze the roles and policies I need to run AWS Batch, because there’s quite a lot of ground to cover. I’ll create them in an upcoming post. I’ll look at converting the role and user I created for Lambda and creating a new secret for my batch job, while reusing my GitSecrets secret to access GitHub.

In addition, we need to understand the permissions the AWS Batch service has in our account — and I’m not too keen on some of the things in the default service linked role policy.

I’m going to try to reuse the same concept of leveraging MFA for a batch job that I was using with Lambda. That means I need the following:

- The batch job execution role required by AWS Batch.

- A user with credentials that can assume a role only with MFA.

- The assumed role that can leverage GitHub secrets.

AWS Batch also requires some additional, separate roles, and these vary depending on how you use AWS Batch.

You can get an overview of the roles and policies needed for AWS Batch here:

Deciding which compute environment to use for AWS Batch

Before we create our roles we need to decide which type of compute environment we are going to use for our AWS Batch job.

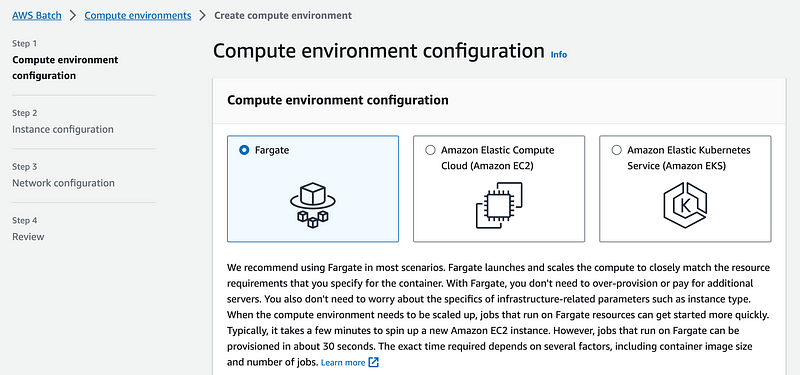

There are different ways to run AWS Batch: on EC2 instances, on Fargate, or on EKS (Amazon’s managed Kubernetes service).

What if we use Fargate? That might be interesting, but an initial analysis suggests Fargate is more expensive in many cases:

I’m going to stick with EC2 for now and let someone else figure out Fargate. There are also more security layers to consider with Fargate, since AWS manages the underlying hosts. Still, it’s appealing if you don’t want to worry about spinning up EC2 instances and you can keep it secure.

Update: On further research, EC2 may not work for MFA jobs the way I have currently designed them. AWS says it takes a few minutes for an EC2 instance to spin up, and the MFA code may expire before the job starts. Fargate might avoid that delay, but there may be other options.

Typically, it takes a few minutes to spin up a new Amazon EC2 instance. However, jobs that run on Fargate can be provisioned in about 30 seconds. The exact time required depends on several factors, including container image size and number of jobs.

That said, it’s not clear to me how ephemeral storage encryption works when using Fargate with AWS Batch. I would need to research this a bit more.

I want to try leveraging EC2 spot instances eventually. That might not work for certain jobs where I am trying to require MFA, because there is a limited window for providing the MFA code to assume a role. That’s something I’ll need to figure out as I go.

I also don’t want to use the monolith that is Kubernetes unless there is a serious need for it. I don’t understand how Kubernetes became the de facto standard over ECS (Elastic Container Service). I think it was mostly hype, but maybe I’m missing something. I just know that helping customers with Kubernetes security is much more complicated.

The push for Kubernetes at Google was performance and compute optimization, not security. That is clear from the initial design. At least there are options for network security now, which did not exist in the beginning. I’d guess ECS is just as performant, if not more so, but do your own testing to confirm that.

AWS IAM Access Advisor

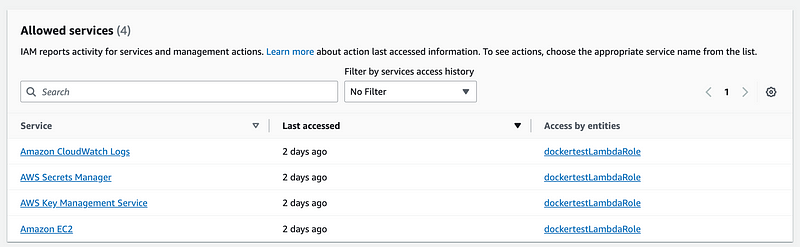

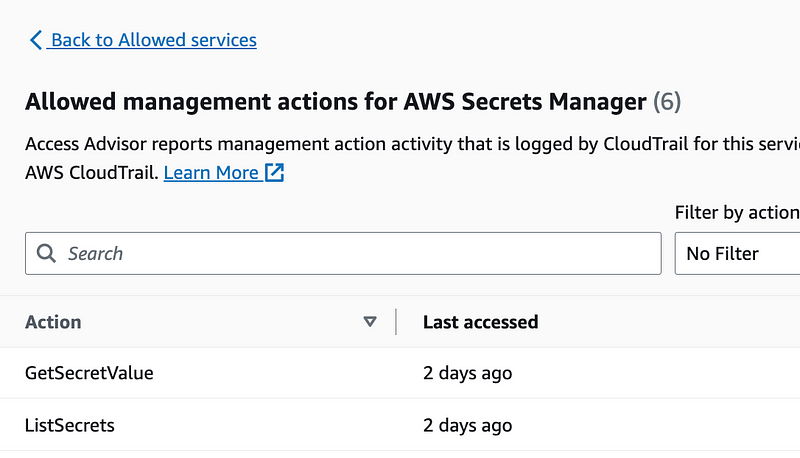

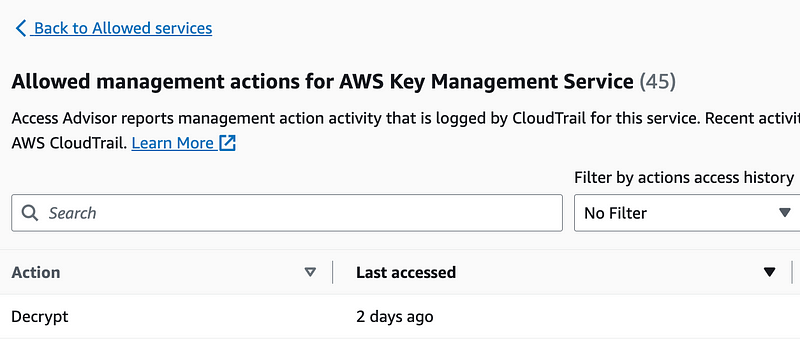

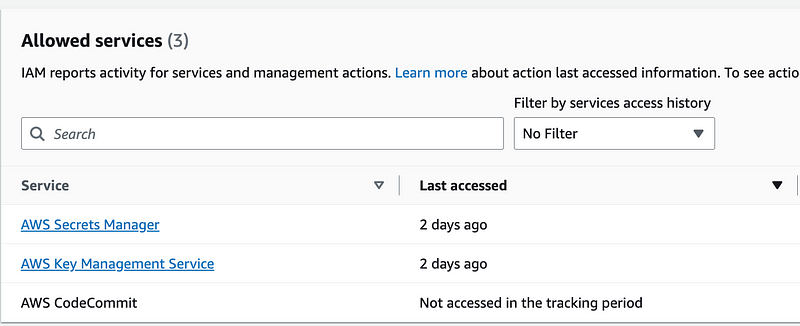

Before converting my existing roles and policies, I can use IAM Access Advisor (the Access Advisor tab on the policy details screen) to see exactly which services and actions my roles and user used. That way I can limit my new policies to exactly what is required; in other words, zero trust policies. As I walk through each policy I created, you can see how I use what was actually accessed to refine the roles.
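As a rough sketch of that refinement step, the Python below takes a response shaped like the IAM GetServiceLastAccessedDetails API output (the data behind the Access Advisor tab) and separates services that were used from ones that were never touched. The sample data and the `split_by_usage` helper are hypothetical; in practice you would fetch the response with an SDK after starting a job with GenerateServiceLastAccessedDetails on the role or policy ARN.

```python
from typing import Any


def split_by_usage(details: dict[str, Any]) -> tuple[list[str], list[str]]:
    """Split services into (used, unused) namespaces.

    Assumes `details` follows the GetServiceLastAccessedDetails response
    shape, where LastAuthenticated is omitted for services that were never
    accessed during the tracking period.
    """
    used: list[str] = []
    unused: list[str] = []
    for service in details.get("ServicesLastAccessed", []):
        namespace = service["ServiceNamespace"]
        if service.get("LastAuthenticated") is None:
            unused.append(namespace)
        else:
            used.append(namespace)
    return sorted(used), sorted(unused)


# Hypothetical sample data, not real output from my account.
sample = {
    "JobStatus": "COMPLETED",
    "ServicesLastAccessed": [
        {"ServiceNamespace": "secretsmanager",
         "LastAuthenticated": "2023-05-01T12:00:00Z",
         "TotalAuthenticatedEntities": 1},
        {"ServiceNamespace": "kms",
         "LastAuthenticated": "2023-05-01T12:00:00Z",
         "TotalAuthenticatedEntities": 1},
        {"ServiceNamespace": "codecommit", "TotalAuthenticatedEntities": 0},
    ],
}

used, unused = split_by_usage(sample)
print("used:", used)
print("unused (candidates to drop):", unused)
```

Services in the unused list are candidates to remove from the new batch policies, though a service that was simply not exercised during the tracking period may still be needed later.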

Batch Job Execution Role

First I take a look at dockertestLambdaPolicy.

This is the policy the container running in AWS Batch will use, but I’ll rename it to batchtestBatchPolicy.

Here are the services accessed:

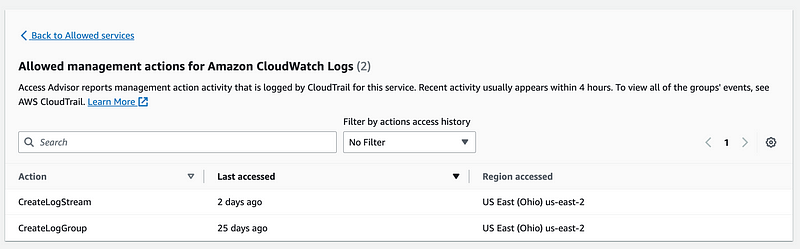

The CloudWatch permissions were specific to Lambda and not used by my code, so we’ll have to replace them with whatever the Batch execution role requires.

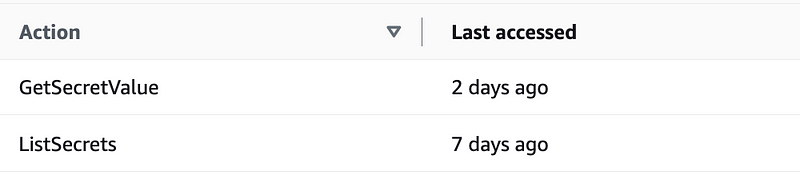

For AWS Secrets Manager we need:

Actually, I was testing with ListSecrets so we probably don’t even need that.

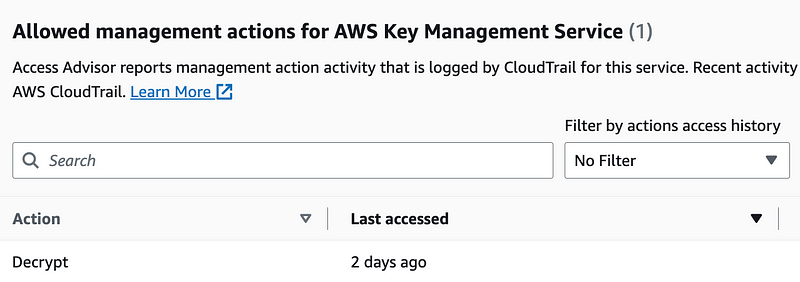

For KMS we only need Decrypt.
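Here is a minimal sketch of the resulting job policy, assuming `secretsmanager:GetSecretValue` on one secret is the only Secrets Manager action the job needs, plus `kms:Decrypt` on the key that encrypts it. The `kms:ViaService` condition limits the key to requests made through Secrets Manager. The account ID, region, and key ID are placeholders, not values from my account.

```python
import json

# Placeholder values: substitute your own account, region, secret, and key.
ACCOUNT_ID = "111122223333"
REGION = "us-east-2"
SECRET_NAME = "batchtestSecret"
KMS_KEY_ID = "1234abcd-12ab-34cd-56ef-1234567890ab"


def job_secret_policy(account: str, region: str, secret: str, key_id: str) -> dict:
    """Build a least-privilege policy for reading a single secret."""
    # Secrets Manager appends a random six-character suffix to secret ARNs;
    # each "?" matches exactly one character of that suffix.
    secret_arn = f"arn:aws:secretsmanager:{region}:{account}:secret:{secret}-??????"
    key_arn = f"arn:aws:kms:{region}:{account}:key/{key_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "secretsmanager:GetSecretValue",
                "Resource": secret_arn,
            },
            {
                "Effect": "Allow",
                "Action": "kms:Decrypt",
                "Resource": key_arn,
                # Only allow decryption when the request comes through Secrets Manager.
                "Condition": {
                    "StringEquals": {
                        "kms:ViaService": f"secretsmanager.{region}.amazonaws.com"
                    }
                },
            },
        ],
    }


policy = job_secret_policy(ACCOUNT_ID, REGION, SECRET_NAME, KMS_KEY_ID)
print(json.dumps(policy, indent=2))
```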

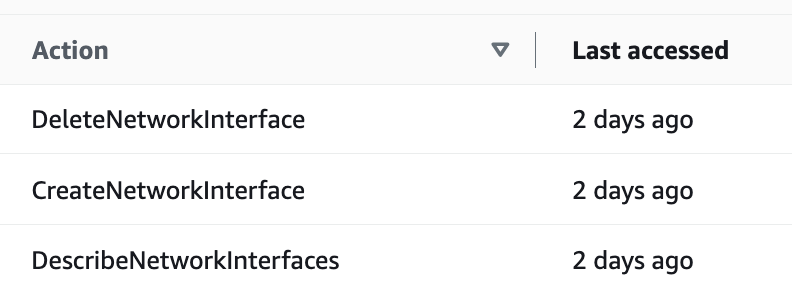

The Lambda execution role used these EC2 permissions. They are not specific to my code, so we’ll need to swap them for whatever the AWS Batch execution role requires.

Let’s see what the requirements are for the AWS Batch Execution role.

Besides whatever permissions we need to grant the batch job itself, the execution role needs the following permissions:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ecr:GetAuthorizationToken",
        "ecr:BatchCheckLayerAvailability",
        "ecr:GetDownloadUrlForLayer",
        "ecr:BatchGetImage",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": "*"
    }
  ]
}
```

The role needs the following trust policy since we are using the ECS option (and this would also be required for Fargate, which runs on ECS):

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "",
      "Effect": "Allow",
      "Principal": {
        "Service": "ecs-tasks.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

Amazon calls this the ecsTaskExecutionRole, but we’re going to name it batchtestExecutionRole, and it will have access to the batchtestSecret.

Preventing the Cross-Service Confused Deputy Problem

I explain the confused deputy problem in other posts; see the IAM link at the top.

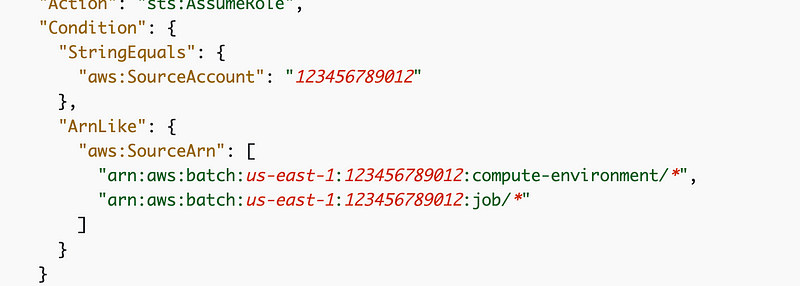

To prevent cross-service confused deputy issues, we can add a condition like this to the batch execution role policy.
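For illustration, here is a sketch of the execution role’s trust policy with confused-deputy conditions added, modeled on the `aws:SourceAccount` and `aws:SourceArn` pattern AWS documents for ECS task roles. Whether AWS Batch jobs populate these keys the same way is an assumption I would still need to test, and the account ID and region are placeholders.

```python
import json

# Placeholders: replace with your own account ID and region.
ACCOUNT_ID = "111122223333"
REGION = "us-east-2"


def execution_role_trust_policy(account: str, region: str) -> dict:
    """Trust policy letting ECS tasks assume the role, scoped to one account."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ecs-tasks.amazonaws.com"},
                "Action": "sts:AssumeRole",
                "Condition": {
                    # Reject requests made on behalf of another account's resources.
                    "StringEquals": {"aws:SourceAccount": account},
                    # Only ECS resources in this account and region qualify.
                    "ArnLike": {"aws:SourceArn": f"arn:aws:ecs:{region}:{account}:*"},
                },
            }
        ],
    }


print(json.dumps(execution_role_trust_policy(ACCOUNT_ID, REGION), indent=2))
```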

Batch Job Assume Role

Next I take a look at dockertestAssumeRole which is going to become batchtestAssumeRole.

Secrets Manager requires the same permissions for a specific secret. Note that we had some issues with the policy that accessed a specific ARN. We’ll try that again with AWS Batch and see if we have better luck.

KMS only requires decrypt, same as above.

We never got AWS CodeCommit working because we couldn’t assume the role, so we moved on. We can take a look at this again after we get everything working.

User Policy

Next I tried to look at my user policy in IAM Access Advisor, but I had already deleted that user, so I can’t see it now. The policy only needs to allow sts:AssumeRole on the batchtestAssumeRole.
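Here is a sketch of both halves of that design, assuming the user and role live in the same account: an identity policy on the user that only allows `sts:AssumeRole` on batchtestAssumeRole, and a trust policy on the role that only accepts the request when MFA was used. The account ID and the user name `batchtestUser` are hypothetical placeholders.

```python
import json

ACCOUNT_ID = "111122223333"   # placeholder account ID
USER_NAME = "batchtestUser"   # hypothetical user name
ROLE_NAME = "batchtestAssumeRole"

ROLE_ARN = f"arn:aws:iam::{ACCOUNT_ID}:role/{ROLE_NAME}"
USER_ARN = f"arn:aws:iam::{ACCOUNT_ID}:user/{USER_NAME}"

# Identity policy for the user: it may assume exactly one role.
user_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "sts:AssumeRole", "Resource": ROLE_ARN}
    ],
}

# Trust policy on the role: only that user, and only with MFA.
role_trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"AWS": USER_ARN},
            "Action": "sts:AssumeRole",
            "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
        }
    ],
}

print(json.dumps(user_policy, indent=2))
print(json.dumps(role_trust_policy, indent=2))
```

With this trust policy, the AssumeRole call must include the MFA device serial number and the current code (the SerialNumber and TokenCode parameters), or the condition fails.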

CloudWatch on AWS Batch

Since we are using the EC2 option for running AWS Batch, we also want logging from the EC2 instance, not just from the container. Here are the instructions for that.

Create a policy that allows the ECS host to log to CloudWatch:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents",
        "logs:DescribeLogStreams"
      ],
      "Resource": [
        "arn:aws:logs:*:*:*"
      ]
    }
  ]
}
```

I personally would like to make the logs ARN more specific. We’ll take a look at that after we have some logs.
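One way to narrow `arn:aws:logs:*:*:*` once the log group names are known is to build the ARN from the region, account, and log group name. The group name `/aws/batch/batchtest` below is a hypothetical example, not something AWS creates for you, and the account ID and region are placeholders.

```python
import json

# Placeholders; the log group name is hypothetical.
ACCOUNT_ID = "111122223333"
REGION = "us-east-2"
LOG_GROUP = "/aws/batch/batchtest"


def log_group_arns(region: str, account: str, group: str) -> list[str]:
    """Return ARNs covering one log group and every stream inside it."""
    base = f"arn:aws:logs:{region}:{account}:log-group:{group}"
    # The bare ARN matches the group itself; ":*" also matches its log streams.
    return [base, f"{base}:*"]


host_logging_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "logs:CreateLogGroup",
                "logs:CreateLogStream",
                "logs:PutLogEvents",
                "logs:DescribeLogStreams",
            ],
            "Resource": log_group_arns(REGION, ACCOUNT_ID, LOG_GROUP),
        }
    ],
}

print(json.dumps(host_logging_policy, indent=2))
```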

Once you have that policy, it needs to be attached to whatever role the ECS EC2 instances are using. From the documentation:

You can create an Amazon EC2 launch template that includes CloudWatch monitoring.

I explained what launch templates are in a prior post.

Service Linked Roles for AWS Batch

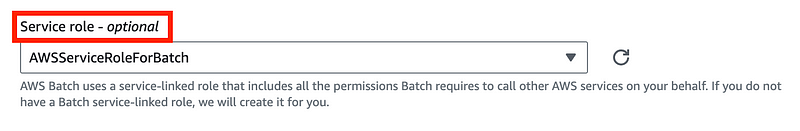

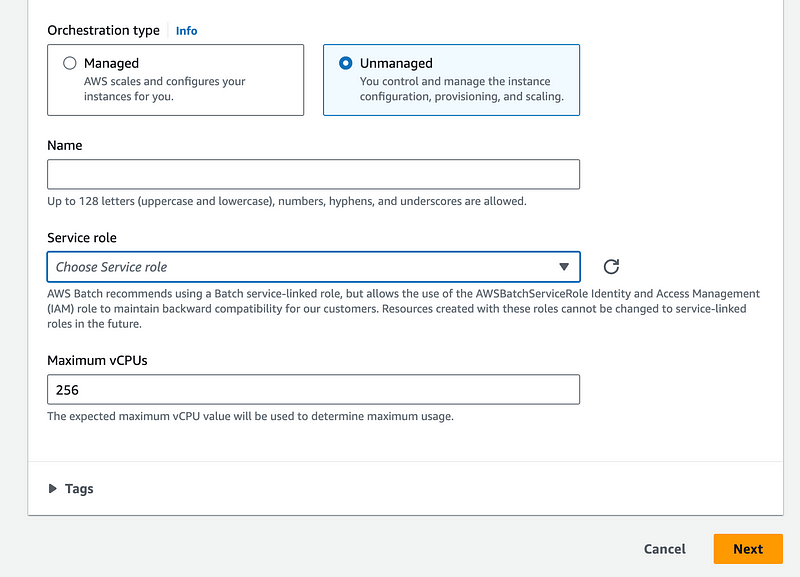

AWS Batch can use a service-linked role. Initially I did not realize it was optional, until I took a look at the AWS Console:

Note that this role is the role used to manage your compute environment. There is a separate role assigned to your batch job. This was not exactly clear to me from the documentation. I had to look at the console.

Although that says optional, you can’t get past that page with Fargate without choosing a role. Note that it says AWS will create the role if one does not exist. I did notice that with Fargate, once I created a role that Batch can assume, the console allowed me to choose it.

With EC2 it looks like you have an option of managed or unmanaged. With unmanaged you choose a role.
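Since several roles are in play, the sketch below lists the service principal each one in this design would trust: the compute environment service role trusted by AWS Batch, the ECS instance role trusted by EC2 (attached through an instance profile), and the job execution role trusted by ECS tasks. The names other than batchtestExecutionRole are hypothetical, and the MFA-protected batchtestAssumeRole is left out because it trusts a user rather than a service.

```python
import json

# Role name -> (service principal the role must trust, what the role is for).
# Names other than batchtestExecutionRole are hypothetical.
ROLES = {
    "batchtestServiceRole": ("batch.amazonaws.com", "manages the compute environment"),
    "batchtestInstanceRole": ("ec2.amazonaws.com", "ECS container instances (via instance profile)"),
    "batchtestExecutionRole": ("ecs-tasks.amazonaws.com", "pulls the image and writes job logs"),
}


def trust_policy(service_principal: str) -> dict:
    """Minimal trust policy allowing one AWS service to assume a role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


for name, (principal, purpose) in ROLES.items():
    print(f"{name}: {purpose}")
    print(json.dumps(trust_policy(principal), indent=2))
```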

From the documentation:

A service-linked role makes setting up AWS Batch easier because you don’t have to manually add the necessary permissions. AWS Batch defines the permissions of its service-linked roles, and unless defined otherwise, only AWS Batch can assume its roles. The defined permissions include the trust policy and the permissions policy, and that permissions policy cannot be attached to any other IAM entity.

Recall that Service Linked Roles are not restricted by your Service Control Policies.

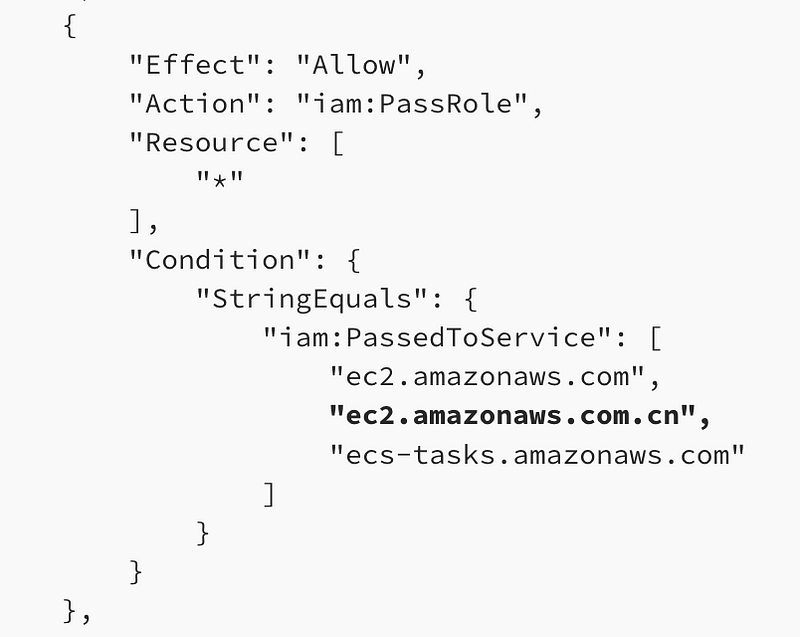

The service linked role for AWS Batch has quite a lot of permissions. I don’t particularly like this one:

I warned about the risk associated with IAM PassRole in terms of privilege escalation in a prior post. See the IAM link at the top.

I also don’t like the fact that the service-linked role can create other service-linked roles.

None of these roles are subject to your AWS Organizations Service Control Policies (kind of ironically, given the name).

You can’t modify a service linked role, but you can create it before the service does. You can delete a service linked role for AWS Batch — but you must clean up the resources for your service-linked role before you can manually delete it.

In any case, I’m not going to use this option. I’m going to create my own role and policy, if possible. The documentation is incredibly confusing. I had to look at the console to try to figure out what is going on with this role.
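If I do create my own role, one obvious tightening is to scope `iam:PassRole` to the specific roles Batch needs to hand to EC2 and ECS, instead of `"Resource": "*"`. The sketch below assumes hypothetical role names and a placeholder account ID. EC2 instances receive a role through an instance profile, but PassRole is still evaluated against the role ARN inside it.

```python
import json

ACCOUNT_ID = "111122223333"  # placeholder
# Hypothetical roles this custom Batch service role would be allowed to pass,
# mapped to the only service each one may be passed to.
PASSABLE_ROLES = {
    "batchtestInstanceRole": "ec2.amazonaws.com",
    "batchtestExecutionRole": "ecs-tasks.amazonaws.com",
}


def pass_role_statements(account: str, roles: dict[str, str]) -> list[dict]:
    """One PassRole statement per role, each limited to its intended service."""
    return [
        {
            "Effect": "Allow",
            "Action": "iam:PassRole",
            "Resource": f"arn:aws:iam::{account}:role/{role}",
            "Condition": {"StringEquals": {"iam:PassedToService": service}},
        }
        for role, service in roles.items()
    ]


print(json.dumps(pass_role_statements(ACCOUNT_ID, PASSABLE_ROLES), indent=2))
```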

If you choose to create your own role and policy, you will need to monitor AWS updates at the bottom of this page. AWS may add new functionality which your batch jobs will require to work properly in the future.
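A minimal way to notice when AWS changes its policy is to compare the actions in AWS’s latest version against the actions in your own copy. The sketch below assumes you already have both policy documents as JSON (for example, saved from the console or the IAM API). It only compares Allow actions; it does not expand wildcards like `ecs:*` or compare resources and conditions.

```python
import json


def actions(policy: dict) -> set[str]:
    """Collect every Allow action in a policy document (no wildcard expansion)."""
    found: set[str] = set()
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be an object
        statements = [statements]
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        action = statement.get("Action", [])
        found.update([action] if isinstance(action, str) else action)
    return found


def missing_actions(aws_policy: dict, my_policy: dict) -> list[str]:
    """Actions AWS allows that my custom policy does not."""
    return sorted(actions(aws_policy) - actions(my_policy))


# Hypothetical excerpts, not the full AWS policy.
aws_version = json.loads("""{"Version": "2012-10-17", "Statement": [
  {"Effect": "Allow", "Action": ["ecs:ListTasks", "ecs:ListAccountSettings"], "Resource": "*"},
  {"Effect": "Allow", "Action": "iam:GetRole", "Resource": "*"}]}""")
my_version = json.loads("""{"Version": "2012-10-17", "Statement": [
  {"Effect": "Allow", "Action": ["ecs:ListTasks"], "Resource": "*"}]}""")

print(missing_actions(aws_version, my_version))
```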

Here’s the full policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ec2:DescribeAccountAttributes",
        "ec2:DescribeInstances",
        "ec2:DescribeInstanceStatus",
        "ec2:DescribeInstanceAttribute",
        "ec2:DescribeSubnets",
        "ec2:DescribeSecurityGroups",
        "ec2:DescribeKeyPairs",
        "ec2:DescribeImages",
        "ec2:DescribeImageAttribute",
        "ec2:DescribeSpotInstanceRequests",
        "ec2:DescribeSpotFleetInstances",
        "ec2:DescribeSpotFleetRequests",
        "ec2:DescribeSpotPriceHistory",
        "ec2:DescribeVpcClassicLink",
        "ec2:DescribeLaunchTemplateVersions",
        "ec2:RequestSpotFleet",
        "autoscaling:DescribeAccountLimits",
        "autoscaling:DescribeAutoScalingGroups",
        "autoscaling:DescribeLaunchConfigurations",
        "autoscaling:DescribeAutoScalingInstances",
        "eks:DescribeCluster",
        "ecs:DescribeClusters",
        "ecs:DescribeContainerInstances",
        "ecs:DescribeTaskDefinition",
        "ecs:DescribeTasks",
        "ecs:ListClusters",
        "ecs:ListContainerInstances",
        "ecs:ListTaskDefinitionFamilies",
        "ecs:ListTaskDefinitions",
        "ecs:ListTasks",
        "ecs:DeregisterTaskDefinition",
        "ecs:TagResource",
        "ecs:ListAccountSettings",
        "logs:DescribeLogGroups",
        "iam:GetInstanceProfile",
        "iam:GetRole"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream"
      ],
      "Resource": "arn:aws:logs:*:*:log-group:/aws/batch/job*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "logs:PutLogEvents"
      ],
      "Resource": "arn:aws:logs:*:*:log-group:/aws/batch/job*:log-stream:*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:CreateOrUpdateTags"
      ],
      "Resource": "*",
      "Condition": {
        "Null": {
          "aws:RequestTag/AWSBatchServiceTag": "false"
        }
      }
    },
    {
      "Effect": "Allow",
      "Action": "iam:PassRole",
      "Resource": [
        "*"
      ],
      "Condition": {
        "StringEquals": {
          "iam:PassedToService": [
            "ec2.amazonaws.com",
            "ec2.amazonaws.com.cn",
            "ecs-tasks.amazonaws.com"
          ]
        }
      }
    },
    {
      "Effect": "Allow",
      "Action": "iam:CreateServiceLinkedRole",
      "Resource": "*",
      "Condition": {
        "StringEquals": {
          "iam:AWSServiceName": [
            "spot.amazonaws.com",
            "spotfleet.amazonaws.com",
            "autoscaling.amazonaws.com",
            "ecs.amazonaws.com"
          ]
        }
      }
    },
    {
      "Effect": "Allow",
      "Action": [
        "ec2:CreateLaunchTemplate"
      ],
      "Resource": "*",
      "Condition": {
        "Null": {
          "aws:RequestTag/AWSBatchServiceTag": "false"
        }
      }
    },
    {
      "Effect": "Allow",
      "Action": [
        "ec2:TerminateInstances",
        "ec2:CancelSpotFleetRequests",
        "ec2:ModifySpotFleetRequest",
        "ec2:DeleteLaunchTemplate"
      ],
      "Resource": "*",
      "Condition": {
        "Null": {
          "aws:ResourceTag/AWSBatchServiceTag": "false"
        }
      }
    },
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:CreateLaunchConfiguration",
        "autoscaling:DeleteLaunchConfiguration"
      ],
      "Resource": "arn:aws:autoscaling:*:*:launchConfiguration:*:launchConfigurationName/AWSBatch*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "autoscaling:CreateAutoScalingGroup",
        "autoscaling:UpdateAutoScalingGroup",
        "autoscaling:SetDesiredCapacity",
        "autoscaling:DeleteAutoScalingGroup",
        "autoscaling:SuspendProcesses",
        "autoscaling:PutNotificationConfiguration",
        "autoscaling:TerminateInstanceInAutoScalingGroup"
      ],
      "Resource": "arn:aws:autoscaling:*:*:autoScalingGroup:*:autoScalingGroupName/AWSBatch*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "ecs:DeleteCluster",
        "ecs:DeregisterContainerInstance",
        "ecs:RunTask",
        "ecs:StartTask",
        "ecs:StopTask"
      ],
      "Resource": "arn:aws:ecs:*:*:cluster/AWSBatch*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "ecs:RunTask",
        "ecs:StartTask",
        "ecs:StopTask"
      ],
      "Resource": "arn:aws:ecs:*:*:task-definition/*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "ecs:StopTask"
      ],
      "Resource": "arn:aws:ecs:*:*:task/*/*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "ecs:CreateCluster",
        "ecs:RegisterTaskDefinition"
      ],
      "Resource": "*",
      "Condition": {
        "Null": {
          "aws:RequestTag/AWSBatchServiceTag": "false"
        }
      }
    },
    {
      "Effect": "Allow",
      "Action": "ec2:RunInstances",
      "Resource": [
        "arn:aws:ec2:*::image/*",
        "arn:aws:ec2:*::snapshot/*",
        "arn:aws:ec2:*:*:subnet/*",
        "arn:aws:ec2:*:*:network-interface/*",
        "arn:aws:ec2:*:*:security-group/*",
        "arn:aws:ec2:*:*:volume/*",
        "arn:aws:ec2:*:*:key-pair/*",
        "arn:aws:ec2:*:*:launch-template/*",
        "arn:aws:ec2:*:*:placement-group/*",
        "arn:aws:ec2:*:*:capacity-reservation/*",
        "arn:aws:ec2:*:*:elastic-gpu/*",
        "arn:aws:elastic-inference:*:*:elastic-inference-accelerator/*",
        "arn:aws:resource-groups:*:*:group/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": "ec2:RunInstances",
      "Resource": "arn:aws:ec2:*:*:instance/*",
      "Condition": {
        "Null": {
          "aws:RequestTag/AWSBatchServiceTag": "false"
        }
      }
    },
    {
      "Effect": "Allow",
      "Action": [
        "ec2:CreateTags"
      ],
      "Resource": [
        "*"
      ],
      "Condition": {
        "StringEquals": {
          "ec2:CreateAction": [
            "RunInstances",
            "CreateLaunchTemplate",
            "RequestSpotFleet"
          ]
        }
      }
    }
  ]
}
```

Now that I understand what roles and policies the AWS Batch service requires, I can create them in the next post. I’ve already got a lot of code to automate this that we can leverage. We mostly need to customize the policies.

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2023

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab

Need Help With Cybersecurity, Cloud, or Application Security?

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

🔒 Request a penetration test or security assessment

🔒 Schedule a consulting call

🔒 Cybersecurity Speaker for Presentation

Follow for more stories like this:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

❤️ Sign Up my Medium Email List

❤️ Twitter: @teriradichel

❤️ LinkedIn: https://www.linkedin.com/in/teriradichel

❤️ Mastodon: @teriradichel@infosec.exchange

❤️ Facebook: 2nd Sight Lab

❤️ YouTube: @2ndsightlab