Why Golang Fasthttp opts for Slice over Map for Headers, Cookies, and Query Parameters

Fasthttp is a high-performance Golang HTTP framework designed with numerous optimizations to enhance performance. One notable design choice is the preference for using Slice over Map, especially when managing HTTP headers.

Why is that?

This article will dissect, from simple to complex reasons, why Fasthttp opts for Slice over Map and explain through code examples the high-performance rationale behind this choice.

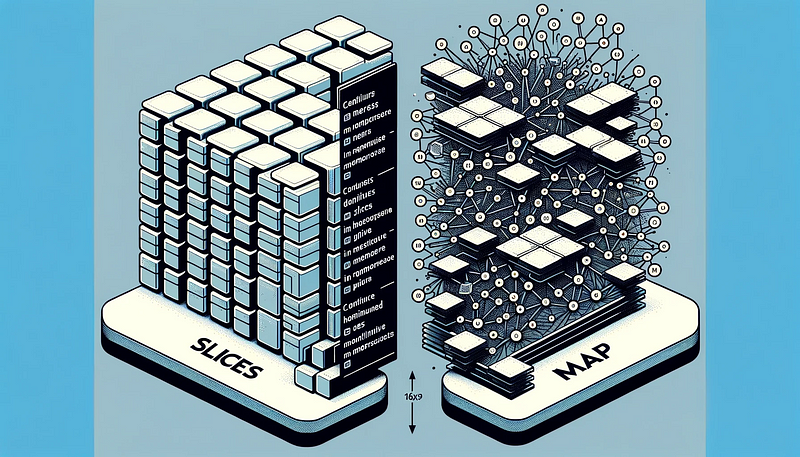

Slice vs Map: Basic Concepts

Before diving deep, it’s crucial to understand the fundamental concepts and performance characteristics of Slice and Map in Go:

Slice: A slice is a dynamic, flexible view of an array. Its underlying structure is an array, meaning its elements are stored contiguously in memory.

Map: A map is an unordered collection of key-value pairs implemented via a hash table. It offers quick lookup, addition, and deletion operations, but its performance isn’t always stable.

Memory Allocation and Performance

In high-performance scenarios, memory allocation and garbage collection are key performance factors. Fasthttp has optimized these aspects:

Efficiency of Slice Memory

Slice, with their elements stored contiguously, offers fast access speed and efficient CPU cache utilization. Moreover, Slice can reuse existing arrays by reslicing, reducing memory allocation and garbage collection pressure.

Memory Overhead of Map

In contrast, Map has a larger memory overhead. The scattered storage of keys and values in memory leads to lower CPU cache efficiency. Furthermore, maps’ growth often involves rehashing and reallocating memory, potentially bottlenecking performance-sensitive applications.

Fasthttp’s SliceMap

Fasthttp employs a custom sliceMap structure for storing key-value pairs, instead of a standard map. Here’s a simplified version of sliceMap and its Add method:

type kv struct {

key []byte

value []byte

}

type sliceMap []kv

func (sm *sliceMap) Add(k, v []byte) {

kvs := *sm

if cap(kvs) > len(kvs) {

kvs = kvs[:len(kvs)+1]

} else {

kvs = append(kvs, kv{})

}

kv := &kvs[len(kvs)-1]

kv.key = append(kv.key[:0], k...)

kv.value = append(kv.value[:0], v...)

*sm = kvs

}In this design, sliceMap enhances performance by:

Reducing Memory Allocation

By operating on an existing slice, sliceMap reuses memory as much as possible. When the capacity is sufficient, it extends the slice by reslicing kvs = kvs[:len(kvs)+1], avoiding additional memory allocation.

Reducing Garbage Collection Pressure

As the elements of a slice are stored contiguously, they can be more efficiently processed by the garbage collector, reducing garbage collection overhead. Furthermore, since memory is reused, the frequency of garbage collection is also significantly reduced.

Deeper Reasons for Performance Optimization

Fasthttp’s choice of sliceMap over Map is not solely based on memory and performance considerations but also deeper reasons:

Characteristics of HTTP Headers, query parameters, cookies

In HTTP request processing, the number of headers, query parameters, or cookies is usually small. This means even with linear search, the efficiency of lookup operations wouldn’t become a performance bottleneck.

In contrast, while hash Map theoretically offers near O(1) lookup efficiency, their practical use involves overhead and complexity:

- Firstly, hash computation in HashMap requires time.

- Secondly, hash collisions require additional handling, potentially involving linked list traversals or rehashing.

These factors might negate the theoretical lookup efficiency advantage of hash Map when the number of elements is small, making Slice a superior choice.

CPU Cache Prefetching: pre-load neighboring elements into the cache

The contiguous memory layout of Slice aligns with CPU cache operation principles, which load adjacent data at once. This continuity allows the CPU to pre-load neighboring elements into the cache after accessing a slice element, speeding up subsequent accesses. Therefore, sequential slice access results in a high cache hit rate, reducing main memory access frequency and enhancing performance.

Conclusion

Fasthttp’s design choices reflect a deep understanding and meticulous optimization of performance details. By opting for Slice over Map, Fasthttp has optimized aspects such as memory allocation, garbage collection, and CPU cache utilization, providing a solid foundation for high-performance HTTP applications. This design is not just a technical choice but a profound insight into practical application scenarios and performance requirements.