The Corporate Governance Failure at the Heart of Sam Altman’s Ouster from OpenAI

In this piece I examine both the flawed corporate structure of OpenAI and the neglect of governance evolution during a year of immense change. Together, these led to the chaos that is still unfolding around the firing of Sam Altman. I conclude that alternative governance structures as such aren’t the issue, as some may readily surmise. Rather, the way OpenAI’s particular structure was set up and managed left it vulnerable to calamity. Other alternative structures, such as the one instituted by Anthropic, were more thoughtfully put together, better harmonize the interests of multiple stakeholders, and should be more resilient to slow divergences of goals or sudden organizational shocks.

On Friday, November 17, 2023, CEO and co-founder Sam Altman was unceremoniously fired from OpenAI by four members of the company’s nonprofit board. As of Monday, November 20, 2023, three days after he was fired, it seems Sam Altman will be joining Microsoft as CEO of a new AI division that he will help create. Mr. Altman will be joined at Microsoft by former OpenAI board chair and President Greg Brockman, who quit last Friday within hours of Mr. Altman’s ouster and his own demotion from the board. Other senior employees have since resigned with the intent of joining the new Microsoft division, and many more likely will follow. Reportedly, at least 650 of OpenAI’s 770 employees have signed a petition demanding that the company take Mr. Altman back and get rid of its board. In addition to employee shock and outrage, OpenAI’s investors were very upset about Mr. Altman’s sudden firing, which came without advance notice to any of them and seemingly without substantive provocation. The company was on the verge of another financing round that would have entailed a secondary sale of employee shares, valuing OpenAI at $87 billion, triple its current valuation. That financing appears to be in jeopardy (certainly the valuation can’t stand given the destabilization introduced to the company), which harms the interests of both employees and previous investors.

After scrambling over the weekend to repair the damage done by the firing and bring Mr. Altman back, the board instead preserved their own roles (for the time being) and quickly demoted interim CEO Mira Murati (OpenAI’s CTO, designated as interim just Friday) in favor of Emmett Shear, former CEO of Twitch. In the wee hours of Monday morning, Ilya Sutskever, co-founder and Chief Scientist of OpenAI and one of the board members who orchestrated Mr. Altman’s ouster, expressed remorse over what has transpired: “I deeply regret my participation in the board’s actions. I never intended to harm OpenAI.” The fate of OpenAI hangs in the balance. The company has been harmed, deeply, and it remains to be seen how it will recover. Regardless of what prompted the board to see fit to remove Mr. Altman in the first instance, surely no one intended the chaos and uncertainty brought about by both the ouster and the manner in which it was undertaken. This is all a grand fiasco. Of the many things to be said (and still to be discovered) about the Shakespearean drama unfolding before our eyes, it is safe to say now that it is an unmitigated failure of corporate stewardship.

What transpired with OpenAI represents a huge and unmistakable failure of corporate governance. This is the case whether or not Altman returns after his firing (who knows, he still might). How he was terminated in the first instance, and the chaos that followed, provide all the evidence of failure that we need. Governance offers an interesting and perhaps the most probative lens on what went wrong at OpenAI. The data points from the last few days are susceptible to multiple readings. Even those who agree there was a failure of governance may attribute it to different causes. Some commentators will be happy that the will of the board prevailed; they will see that as a win for board governance (see, e.g., here, arguing that Altman’s quick return to OpenAI based on employee and investor pressure after his firing would have been a failure). Others may blame the alternative governance structure of the company and contend that anything too creative or exotic that leaves key shareholders out of oversight and decision making is flawed.

Basic Corporate Governance

For-profit companies typically have boards of directors. Venture-backed start-up boards typically include one or two company executives, as well as representatives from the VC firms that contributed the most capital. It is healthy to have other outside, or independent, directors, who can bring fresh perspectives on governance and management matters without being overly swayed by self-interest. Corporate board members have fiduciary obligations to the company, typically to promote positive business performance, ensure legal and regulatory compliance and maximize shareholder returns.

Nonprofit company boards operate in much the same way. There are independent board directors, perhaps a representative from one or more of the biggest philanthropic backers of the organization and some executive staff. The big difference is that nonprofits don’t exist to maximize shareholder value or profit. They typically are formed with a charitable or social benefit goal as their primary mission.

Neither for-profit nor nonprofit boards have specific fiduciary obligations to their organization’s employees or outside stakeholders (such as customers or business partners) other than generally maximizing business performance. Most healthy organizations nonetheless take care to attend to diverse stakeholder interests, knowing that going beyond what fiduciary duties or the law require tends to support business performance, whether that means maximizing shareholder value or fulfilling a charitable or social benefit goal.

Corporate Governance at OpenAI

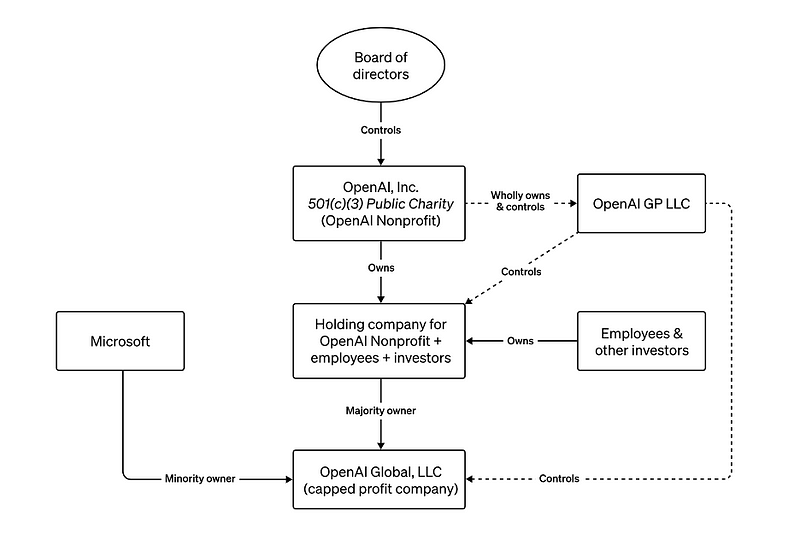

OpenAI was founded as a nonprofit in late 2015. The non-profit form was chosen “with the goal of building safe and beneficial artificial general intelligence for the benefit of humanity.” As framed by the company, OpenAI “believed a 501(c)(3) would be the most effective vehicle to direct the development of safe and broadly beneficial AGI while remaining unencumbered by profit incentives.” However, after a few years in operation, the company realized that the capital needed to fuel its ambitions would not be obtainable via a nonprofit entity only. As OpenAI explains,

“It became increasingly clear that donations alone would not scale with the cost of computational power and talent required to push core research forward, jeopardizing our mission. So we devised a structure to preserve our Nonprofit’s core mission, governance, and oversight while enabling us to raise the capital for our mission”

In anticipation of receiving a $1 billion initial investment from Microsoft in 2019, OpenAI under Mr. Altman’s stewardship created for-profit subsidiaries under the original nonprofit. These for-profit entities would legally be able to accept outside investment and provide equity interests to OpenAI employees. The key for-profit subsidiary included a “capped profit” mechanism, whereby investors could achieve at most a 100x return on their investments and all excess above that would be returned to the nonprofit for use towards its broader mission.
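To make the arithmetic of the cap concrete, here is a minimal sketch of how a gross return might be split under a simple reading of the mechanism described above. The function name and the dollar figures are hypothetical, and the sketch assumes a single flat 100x cap; the actual terms of OpenAI's investor agreements are not public in full and may differ.

```python
def split_capped_return(investment, gross_return, cap_multiple=100.0):
    """Split a gross return between an investor and the nonprofit.

    Illustrative assumption: the investor keeps returns up to
    cap_multiple times the amount invested, and everything above
    that cap flows to the nonprofit.
    """
    if investment < 0 or gross_return < 0:
        raise ValueError("amounts must be non-negative")
    cap = investment * cap_multiple            # most the investor can receive
    investor_share = min(gross_return, cap)    # investor is paid up to the cap
    nonprofit_share = gross_return - investor_share  # excess goes to the nonprofit
    return investor_share, nonprofit_share


# Hypothetical figures, in millions of dollars.
investor, nonprofit = split_capped_return(investment=10, gross_return=1500)
print(investor, nonprofit)
```

In this hypothetical, a $10 million investment is capped at $1 billion, so $500 million of a $1.5 billion gross return would go to the nonprofit. Any return below the cap goes entirely to the investor.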

This approach was not without controversy. It prompted senior defections from the company, leading, for instance, to the founding of Anthropic in 2021 by Dario and Daniela Amodei. Apparently, some tensions remained from the decisions to accept outside funding, and these intensified in 2023 once ChatGPT became a breakout success, with Microsoft and other investors pouring many billions into the company. Worth noting is that Ilya Sutskever did acknowledge and seemed sanguine about the new structure in 2019 when it was created. He stated at that time, “In order to build AGI you need to have billions of dollars of investment … We created this structure in order to raise this capital while staying aligned with our mission.”

Governance & Judgment Failures

As outlined, OpenAI’s governance structure is a retrofit. The failure of governance that it experienced in the lead-up to November 17 is due in part to that structure. But in larger part it is due to how the structure was neglected while the company quickly changed.

→ Letting the nonprofit board membership languish following defections in early 2023. In addition to the six members that it had immediately before Mr. Altman’s firing and Mr. Brockman’s demotion on November 17, 2023, the OpenAI board previously counted as members Reid Hoffman, an experienced Silicon Valley company operator and investor, Will Hurd, a former Texas Congressman, and Shivon Zilis, a director of Elon Musk’s Neuralink. These three stepped down as board members in the spring of 2023 owing to various conflicts. Inadequate effort was made to replace them or to supplement the board with additional expertise and experience, as might befit a company that had become worth tens of billions of dollars seemingly overnight. Mr. Altman may have had a lot of trust in the three outside directors, and certainly in Mr. Brockman. But trust alone is sometimes inadequate to preserve prudent, transparent decision making and good judgment. It is entirely unclear whether Adam D’Angelo, Helen Toner, Tasha McCauley and Ilya Sutskever had the depth and breadth of experience to guide OpenAI in keeping with its nonprofit mission, much less to balance that mission with a broader set of stakeholder interests in a multibillion dollar, globally prominent enterprise. It’s clear they exercised poor judgment in how they went about terminating Mr. Altman. Everyone would have been better served by a bigger board, with more maturity and diverse expertise on matters concerning AGI and its prospective social impact, as well as on harmonizing these interests with those of investors and other stakeholders.

→ Inadequate review processes for the CEO. As of this writing, I do not have access to OpenAI, Inc.’s bylaws or other governing documents. However, we know that the decision to fire Mr. Altman arose from a majority vote (four of the six directors). CEOs serve at the pleasure of their boards and can be removed based on a majority decision. However, disagreements over the strategic direction of a company typically should not result in termination of an otherwise high-performing CEO absent malfeasance or a persisting impasse deemed detrimental to the business. Prudent practice typically involves investigation, documentation and articulation of objective evidence of deficits or misdeeds. The OpenAI board’s stated reason for terminating Mr. Altman is the vague characterization that he was not “consistently candid” with the Board, “hindering its ability to exercise its responsibilities.” However, subsequent revelations indicate that he was not engaged in any malfeasance and that there was “no specific disagreement about safety.” Without knowing more, the decision to oust Mr. Altman seems based on personality conflicts or personal disagreements that plausibly should or could have been subject to dispute resolution procedures or consultation with other interested stakeholders. Governing documents don’t always provide for such procedures, but good governance often involves following such procedures voluntarily where a CEO has neither engaged in wrongdoing nor underperformed. The nonprofit board had no seats for investors or even an advisory committee of investors. On the one hand, this may have avoided any corrupting influences. On the other, it deprived the board of the wisdom that “smart money” can often bring to the table, particularly in situations involving difficult strategic decisions or disagreements having material impact on the company.

→ The board overstepped an overly narrow mission focus. The mission of OpenAI is “to ensure that artificial general intelligence benefits all of humanity.” In that regard, safety for AGI is listed as a paramount objective. AGI, of course, remains an aspirational goal for OpenAI. Per Mr. Altman in early November, the company’s current LLM products are not the way to get to AGI or superintelligence. As such, the commercial success of products like ChatGPT and GPT-4 does not implicate AGI or AGI-related safety. There are plenty of immediate policy and user safety issues to be concerned about based on the AI products currently available to the public. But these are not of the existential risk or more severe public safety risk variety associated with AGI or superintelligent AI. Nor was there a promise to permit Microsoft or others access to potentially dangerous technology. For instance, since at least June 2023, the company had made clear that:

“While our partnership with Microsoft includes a multibillion dollar investment, OpenAI remains an entirely independent company governed by the OpenAI Nonprofit. Microsoft has no board seat and no control. And … AGI is explicitly carved out of all commercial and IP licensing agreements.”

Whatever concerns the OpenAI board may have held about the commercial direction of the company under Mr. Altman’s leadership, these did not immediately implicate their mandate under the stated mission of the non-profit. Moreover, Mr. Sutskever himself had assumed control over AGI and Superintelligence research at OpenAI, particularly the goal of developing and managing those hoped-for achievements safely.

The overall point here is that once OpenAI changed overnight and brought the world along with it, it should have been abundantly clear that more was needed to harmonize its various motives and interests. The business of the company was burgeoning around Generative AI, and that, in and of itself, was not the mission focus of the nonprofit running the company. Either the nonprofit’s mission needed to be expanded, or more decision making and leadership over its broader pursuits needed to be devolved to subsidiary entities operating its Generative AI business.

→ Failing to anticipate obvious ramifications. The ultimate governance failure was the failure by the board to anticipate the extreme disruption to OpenAI that their actions would introduce. All of the failures described above may be a function of a more basic lack of leadership savvy, experience and expertise. Even so, the kind of fallout that there has been since Friday should have been expected. Since the public launch of ChatGPT one year ago, Sam Altman has become a leading figure in the global AI movement. He has functioned as a credible, articulate ambassador for both the amazing potential of AI and its risks. Moreover, he has been a capable steward of financing and product development at the company, beloved by investors, employees and Silicon Valley leadership more broadly. For many, Sam Altman is not only the face and voice of OpenAI, but he IS the company. In such situations, it is incumbent on a board to lay sufficient groundwork for an ouster. Having a coherent succession plan, preparing a sophisticated messaging plan and connecting confidentially with key stakeholders in advance would all be critical. None of these measures appears to have been undertaken at all, or at least not with sufficient forethought and planning. It is as yet unclear whether the board had sound counsel, not only about the technical viability of terminating Mr. Altman, but about the propriety of doing so under the circumstances in which the termination unfolded.

Alternative Structures with Aligned Incentives

The commercial success of ChatGPT, a public beta research project, caught everyone off guard. It created a commercial opportunity zone no one expected and no one had planned for. No one could have, because the runaway success of ChatGPT and its industry-transforming impact had no precedent in technology history. The nonprofit needed to evolve but, instead, lost board members to conflicts and failed to replace or supplement them with additional experience or skill sets appropriate to what OpenAI was becoming in 2023. Because it did not evolve, trust broke down, unsound judgment was exercised by the remaining board majority and chaos ensued. Mr. Altman does share in the responsibility for not attending to the very governance deficiencies that arose to undermine him, though, perhaps, his trusting nature is generally a virtue. Even the well-intended can be overcome by structures that don’t adequately align incentives, contain proper safeguards or sufficiently define decision making procedures. Many may be tempted to impugn all alternative structures as a result. I do not believe this is warranted.

A non-profit was not the best vehicle for OpenAI’s ambitions in 2015, and certainly not in 2019, when it first revisited its corporate structure. Since 2013, the state of Delaware has provided for public benefit corporations (PBCs). This corporate form recognizes that a company may have goals beyond profit maximization and stakeholders beyond its shareholders to whom it wishes to be accountable. Importantly, PBCs can embrace both the pursuit of profit and social benefit goals in a cohesive corporate structure. A PBC’s board can include investors, and decision making at the board level must take into account the interests of all identified stakeholders at all times.

This is the form of corporation that Anthropic elected to create, following its founders’ disillusionment with OpenAI’s approach. In addition to a PBC, Anthropic also formed a long term benefit trust (LTBT). By design, the LTBT is a rotating body whose members serve year-long terms. The LTBT is “purpose” driven, and its members are meant to hold the broader mission for socially beneficial uses of AI in mind within the scope of their authority and decision making. They can select the vast majority of the PBC’s board members, but do not themselves sit on the Anthropic board. As a novel corporate structure, the Anthropic LTBT + PBC builds in some design flexibility, acknowledging that some fine tuning may be desirable along the way. This kind of structure has yet to be tested, either by a crisis or by litigation. However, based on its contours we can readily see how it would be more resilient to the kind of crisis faced by OpenAI. Had OpenAI had a structure more along these lines, not only might a termination event not have been reached, but any such event would likely have been handled much more responsibly than what we have seen over the past several days.

Copyright © 2023 Duane R. Valz. Published here under a Creative Commons Attribution-NonCommercial 4.0 International License

The author works in the field of machine learning/artificial intelligence. The views expressed herein are his own and do not reflect any positions or perspectives of current or former employers.
