Step-by-Step Guide to Running the Latest LLM, Meta Llama 3, on Apple Silicon Macs (M1, M2 or M3)

Are you looking for the easiest way to run the latest Meta Llama 3 on your Apple Silicon Mac? Then you are in the right place! In this guide, I’ll show you how to run this powerful language model locally, using your own machine’s resources for privacy and offline availability.

I’ll keep it simple: just the steps to get it running on your own computer, including a nice ChatGPT-like web UI.

What You Need: Ollama

Ollama is a tool that makes it straightforward to run large language models such as Llama 3, Mistral, and Gemma locally, including on macOS. You can start by downloading Ollama from https://ollama.com. For more details about what Ollama offers, check their GitHub repository: ollama/ollama.

Once it is installed, open a new terminal session and run the command below to confirm that Ollama is set up and ready:

ollama -v
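This guide needs Ollama now and Docker later, so it can help to confirm that both commands are on your PATH before going further. Below is a minimal bash sketch. The function name `check_prereqs` is hypothetical and is not part of Ollama or Docker.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which of the given commands are on PATH.
# Returns 0 only if every command was found.
check_prereqs() {
  local missing=0 cmd
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "ok: $cmd"
    else
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_prereqs ollama docker && ollama -v` prints an `ok:` line for each tool and then the Ollama version. If either tool is still missing, it names that tool instead.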

Choosing Your Model

Depending on your Mac’s resources, you can run the basic Meta Llama 3 8B or the larger Meta Llama 3 70B. Keep in mind that you need enough memory to run these models locally. I tested Meta Llama 3 70B on an M1 Max with 64 GB of RAM, and performance was pretty good.
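If you are unsure which size to pick, the sketch below reads the installed memory with macOS’s `sysctl -n hw.memsize` (reported in bytes) and suggests a tag. The 64 GB default cut-off is an assumption based on the M1 Max test above, not an official requirement. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: suggest a Llama 3 tag from installed memory.
# Assumption: the 64 GB default cut-off mirrors the M1 Max test above;
# it is a rule of thumb, not an official Ollama or Meta requirement.

# Installed RAM in whole GiB (macOS reports hw.memsize in bytes).
mac_mem_gb() {
  echo $(( $(sysctl -n hw.memsize) / 1024 / 1024 / 1024 ))
}

# Print the tag to pass to `ollama run` for the given RAM size in GB.
suggest_llama3_tag() {
  local mem_gb="$1" threshold="${2:-64}"
  if [ "$mem_gb" -ge "$threshold" ]; then
    echo "llama3:70b"
  else
    echo "llama3:8b"
  fi
}
```

On a Mac you could then run `ollama run "$(suggest_llama3_tag "$(mac_mem_gb)")"`. Pass a second argument to move the cut-off if your own testing suggests a different threshold.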

To run Meta Llama 3 8B (a 4.7 GB download), run the command below:

ollama run llama3:8b

Or, for Meta Llama 3 70B (a 40 GB download), run the command below:

ollama run llama3:70b
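`ollama run` downloads a model the first time you use it. If you prefer to keep the long download separate from the chat session, you can run `ollama pull` first, and only when `ollama list` does not already show the model. This bash sketch assumes the usual `ollama list` layout: a header row, then one model per line with its name (such as `llama3:8b`) in the first column. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers. Assumes `ollama list` prints a header row and then one
# model per line with its name (e.g. llama3:8b) in the first column.

# Succeed if the model named in $1 appears in `ollama list` output on stdin.
model_in_list() {
  awk -v m="$1" 'NR > 1 && $1 == m { found = 1 } END { exit !found }'
}

# Pull the model only if it is missing, then open an interactive chat with it.
ensure_and_run() {
  local model="${1:-llama3:8b}"
  if ! ollama list | model_in_list "$model"; then
    ollama pull "$model" || return 1
  fi
  ollama run "$model"
}
```

For example, `ensure_and_run llama3:70b` pulls the 70B model only if it is not already present and then opens the interactive chat.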

The first run downloads the model, which can take some time depending on your internet speed.
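As a rough estimate, download time ≈ size in GB × 8,000 megabits per GB ÷ connection speed in Mbps. The helper below does that arithmetic. It assumes decimal gigabytes and a steady connection, so treat the result as a ballpark only. `download_minutes` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rough download time in minutes.
# minutes = size_GB * 8000 (megabits per decimal GB) / speed_Mbps / 60
# Assumes decimal gigabytes and a steady connection speed.
download_minutes() {
  local size_gb="$1" speed_mbps="$2"
  awk -v gb="$size_gb" -v mbps="$speed_mbps" \
    'BEGIN { printf "%.0f\n", gb * 8000 / mbps / 60 }'
}
```

At 100 Mbps, `download_minutes 4.7 100` prints `6`, so the 8B model takes about 6 minutes. `download_minutes 40 100` prints `53`, so the 70B model takes roughly 53 minutes.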

Setting Up the User Interface

After the steps above, the model is available locally and ready to use from a UI. Let’s set up the UI and start interacting.

To set up the UI, we will use Open WebUI (https://openwebui.com/).

You will need Docker installed and running (for example, Docker Desktop for Mac) to run Open WebUI easily. Then start it with:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
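Once a container named `open-webui` exists, re-running the `docker run` command above fails, because container names must be unique. The bash sketch below starts the existing container in that case. Otherwise it runs the same command with each flag annotated. The function name and the optional host-port argument are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start Open WebUI, reusing the container if it exists.
# Assumes Docker is installed and running. The optional first argument is the
# host port (default 3000); it only takes effect when the container is created.
start_open_webui() {
  local host_port="${1:-3000}"

  # A second `docker run --name open-webui` would fail with a name conflict,
  # so start the existing container instead.
  if docker ps -a --format '{{.Names}}' | grep -qx open-webui; then
    docker start open-webui
    return
  fi

  local -a args
  args=(
    -d                                            # run in the background
    -p "${host_port}:8080"                        # host port -> Open WebUI's port 8080 in the container
    --add-host=host.docker.internal:host-gateway  # map host.docker.internal to the Mac, where Ollama runs
    -v open-webui:/app/backend/data               # named volume that keeps Open WebUI's data across restarts
    --name open-webui                             # fixed name so the container can be found later
    --restart always                              # restart automatically if the container stops
  )
  docker run "${args[@]}" ghcr.io/open-webui/open-webui:main
}
```

The port argument only matters the first time, when the container is created. An existing container keeps its original port mapping.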

Now open http://localhost:3000/ in your web browser.
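The container may need a few seconds before the page loads. This sketch polls the URL with `curl` until it answers and then lets you open it with macOS’s `open` command. The default budget of 30 attempts, 2 seconds apart, and the function name are arbitrary assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until it answers, up to N attempts 2 s apart.
# The default of 30 attempts is an arbitrary assumption.
wait_for_http() {
  local url="$1" attempts="${2:-30}" i=1
  while [ "$i" -le "$attempts" ]; do
    # -f fails on HTTP error codes; output is discarded, only the exit status matters.
    if curl -fsS --max-time 2 "$url" >/dev/null 2>&1; then
      return 0
    fi
    # Sleep between attempts, but not after the last one.
    if [ "$i" -lt "$attempts" ]; then
      sleep 2
    fi
    i=$((i + 1))
  done
  return 1
}
```

For example: `wait_for_http http://localhost:3000/ && open http://localhost:3000/`.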

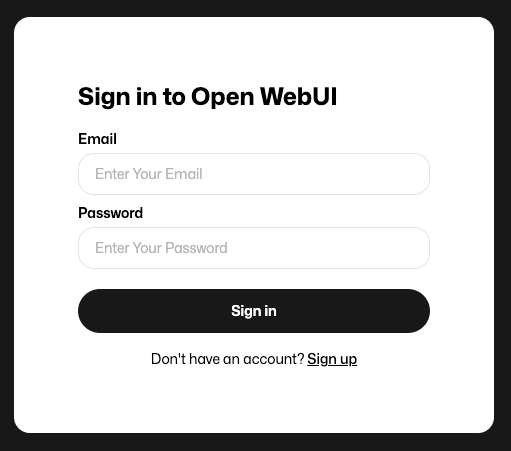

You will see this screen:

You can click Sign up and create an account. Don’t worry this will be stored in your local only and not going to the internet.

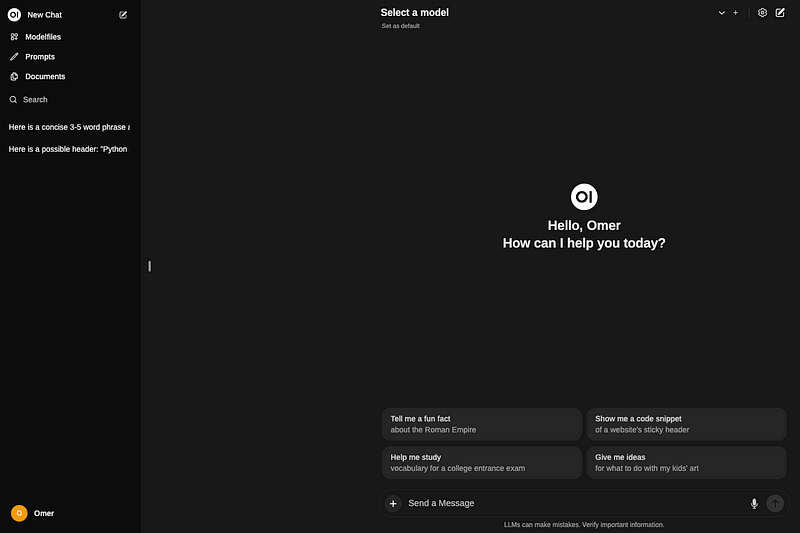

When you login, you will see a familiar screen (Like ChatGPT) below.

You can now select a model from above and start testing LLM models.

With this setup, you are not limited to Meta Llama 3. You can easily use pretty much any other open-source LLM, such as Mistral or Gemma, by pulling it with Ollama.
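Open WebUI talks to Ollama through Ollama’s local HTTP API (port 11434 by default), and you can call the same API yourself for any pulled model. The sketch below sends one prompt to the `/api/generate` endpoint with streaming turned off. The helper names are hypothetical. `json_escape` only handles backslashes, double quotes, newlines and tabs, which is enough for plain-text prompts but is not a full JSON encoder.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for Ollama's HTTP API. Assumes the Ollama server is
# listening on its default port 11434 and the model has already been pulled.

# Minimal JSON string escaping: backslash, double quote, newline and tab only.
json_escape() {
  local s="$1"
  s=${s//\\/\\\\}    # \  -> \\
  s=${s//\"/\\\"}    # "  -> \"
  s=${s//$'\n'/\\n}  # newline -> \n
  s=${s//$'\t'/\\t}  # tab -> \t
  printf '%s' "$s"
}

# JSON body for a single, non-streaming /api/generate request.
generate_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Send one prompt to one model and print the raw JSON reply.
ask_model() {
  curl -fsS http://localhost:11434/api/generate \
    -H 'Content-Type: application/json' \
    -d "$(generate_payload "$1" "$2")"
}
```

For example, after `ollama pull mistral`, run `ask_model mistral 'Why is the sky blue?'`. The reply is JSON whose `response` field holds the generated text.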

Conclusion

With the setup complete, your Apple Silicon Mac is now a powerful hub for running not just Meta Llama 3 but a wide range of open-source large language models, within the limits of its memory. I hope you found this guide helpful! Feel free to share your experiences or ask questions in the comments below. Stay tuned for more tips on leveraging big data and AI tools directly from your local machine!
