Setting Up a Fully AI-Powered Studio with AutoGen

A Walkthrough of AutoGen, a conversable LLM application framework from Microsoft

Imagine stepping into a studio where autonomous AI agents carry out all kinds of tasks seamlessly, around the clock. That prospect felt a bit nearer after I tried building LLM applications with AutoGen, a framework for developing multi-agent LLM applications. In today’s article, I want to showcase this new framework from Microsoft, which makes it easy to create dedicated conversational AI applications powered by large language models.

1. Introduction

AutoGen is an open-source framework that streamlines building and experimenting with multi-agent conversational systems. With its flexible architecture and conversation programming paradigm, developers and hobbyists can quickly build AI assistants or studios tailored to particular use cases.

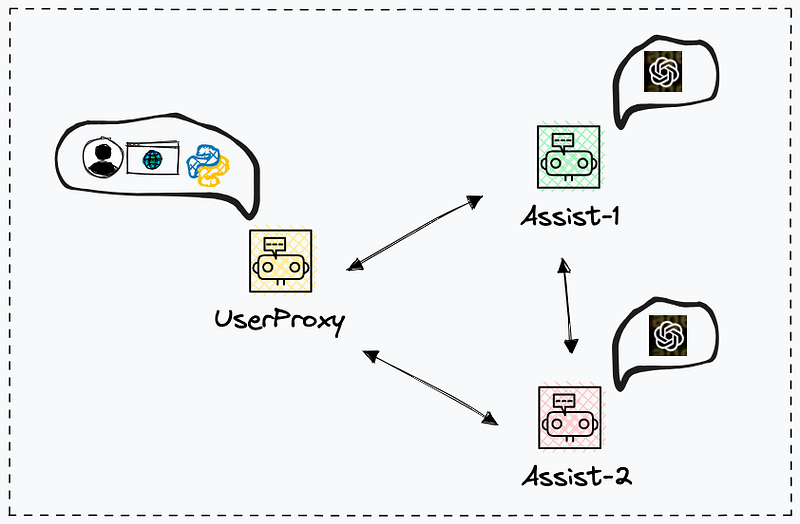

The chart above shows a typical group chat pattern: a multifunctional user proxy and two chatbots powered by OpenAI language models.

Conversable Agents That Can Chat

A core innovation of AutoGen is the concept of Conversable Agents. These are customizable AI bots/agents/functions that can pass messages between each other and have a conversation.

Developers can easily create assistant agents powered by LLMs, human inputs, external tools, or a blend. This makes it straightforward to define agents with distinct roles and capabilities. For instance, you could have separate agents for generating code, executing code, requesting human feedback, validating outputs, etc.
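To make the idea of agents passing messages more concrete, here is a minimal sketch in plain Python. It is not AutoGen's implementation: `ToyAgent`, `reply_fn`, and `converse` are hypothetical names I made up for illustration, and the "agents" are simple functions rather than LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class ToyAgent:
    """Hypothetical stand-in for a conversable agent: a name plus a reply rule."""
    name: str
    reply_fn: Callable[[str], str]  # maps an incoming message to a reply


def converse(starter: ToyAgent, responder: ToyAgent,
             opening: str, max_turns: int = 4) -> List[Tuple[str, str]]:
    """Alternate replies between two agents, starting from an opening message."""
    transcript = [(starter.name, opening)]
    speaker, listener = responder, starter
    while len(transcript) < max_turns:
        # The current speaker answers the most recent message.
        reply = speaker.reply_fn(transcript[-1][1])
        transcript.append((speaker.name, reply))
        speaker, listener = listener, speaker
    return transcript


# Two toy agents with distinct roles (illustrative only).
writer = ToyAgent("Writer", lambda msg: f"Draft based on: {msg}")
reviewer = ToyAgent("Reviewer", lambda msg: f"Reviewed: {msg}")
transcript = converse(reviewer, writer, "Summarize AutoGen", max_turns=3)
```

In a real AutoGen setup, the reply rule would be backed by an LLM, a human, or a tool, but the message-passing shape is roughly the same.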

Programming Conversations Between Agents

AutoGen utilizes Conversation Programming to specify how agents interact. You simply define the participating agents and then program the conversations between them using Python code and natural language prompts.

This framework supports all kinds of pre-defined conversation patterns — from 1-on-1 dialogs to group chats to dynamic conversations where the flow adapts based on context. AutoGen makes it intuitive to model complex workflows such as agent messaging and collaboration.
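As a rough illustration of a group chat pattern, the sketch below runs several toy agents in a fixed round-robin order and stops after a maximum number of messages. This is my own simplification: the names `run_group_chat`, `max_round`, and `stop_word` are assumptions for this example, and AutoGen's own group chat may pick the next speaker and count rounds differently.

```python
from itertools import cycle
from typing import Callable, List, Tuple

# A toy agent is just (name, reply function); hypothetical, not an AutoGen type.
Agent = Tuple[str, Callable[[str], str]]


def run_group_chat(agents: List[Agent], opening: str, max_round: int,
                   stop_word: str = "TERMINATE") -> List[Tuple[str, str]]:
    """Round-robin chat: each agent replies to the previous message in turn.

    Stops once the history holds max_round messages (the opening message
    counts as one here) or as soon as a reply contains stop_word.
    """
    history = [("user", opening)]
    speakers = cycle(agents)
    while len(history) < max_round:
        name, reply_fn = next(speakers)
        reply = reply_fn(history[-1][1])
        history.append((name, reply))
        if stop_word in reply:
            break
    return history


agents = [
    ("coder", lambda msg: "Here is some code."),
    ("researcher", lambda msg: "Here is the answer. TERMINATE"),
]
history = run_group_chat(agents, "Please investigate.", max_round=12)
```

The cap on rounds is what keeps a chat between chatty agents from running (and billing) forever.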

Building Powerful Apps with Functionalities

AutoGen enables the creation of conversational AI apps for diverse domains such as mathematics, coding, games, and more. The project's GitHub repository includes plenty of examples that users can adapt to reduce development effort.

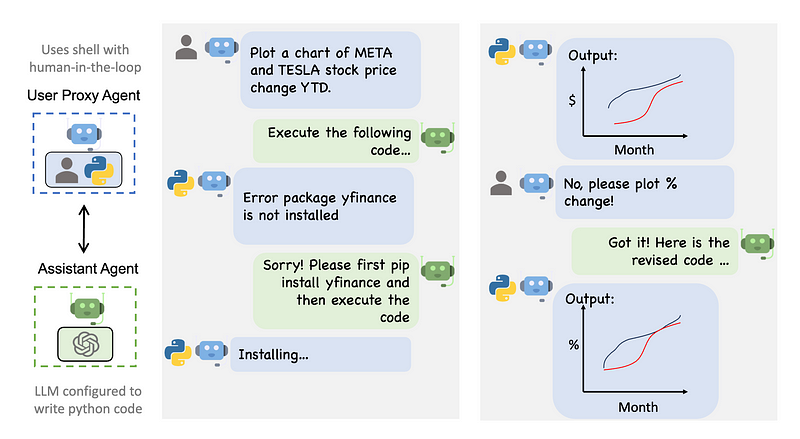

The chart above is a simple but impressive example of financial chart generation. It shows an entirely autonomous process between two agents, one giving coding instructions and the other executing the code.

2. Project Walkthrough

To better understand how seamless development with AutoGen is, and what advantages it has over ordinary LLM agents, let’s go through a quick example with a code explanation.

Knowledge mining

You can simply create a three-agent “studio” with AutoGen to let it generate an answer for a specific knowledge-mining request. Here is how to do it in Python.

First, install the dependencies. This walkthrough assumes we are going to use the GPT-4 model.

!pip install pyautogen openai

Then, create a configuration for the LLM.

import autogen

config_list = [
    {
        'model': 'gpt-4-0613',
        'api_key': 'Your_OpenAI_API_Key',
    }
]

llm_config = {"config_list": config_list, "seed": 41}

All the OpenAI models are supported, as are the same models called from an Azure subscription. Please note that the seed identifies the session cache: identical requests made under the same seed are answered from the cache instead of a new API call, which improves performance and saves cost. (Later pyautogen releases, 0.2 and up, rename this key to cache_seed.)
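To show why a cache seed saves money, here is a conceptual sketch of a seed-keyed response cache. It is not AutoGen's actual cache code: `SeededCache`, `complete`, and `fake_model` are hypothetical names, and the "model" is a stub so the example runs without an API key.

```python
import hashlib
import json
from typing import Callable, Dict


class SeededCache:
    """Toy cache: identical requests under the same seed hit the model only once."""

    def __init__(self, seed: int) -> None:
        self.seed = seed
        self._store: Dict[str, str] = {}

    def _key(self, request: dict) -> str:
        # The seed is part of the key, so changing it starts a fresh session.
        payload = json.dumps({"seed": self.seed, "request": request}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def complete(self, request: dict, call_model: Callable[[dict], str]) -> str:
        key = self._key(request)
        if key not in self._store:
            # Cache miss: this is the only place the (paid) model call happens.
            self._store[key] = call_model(request)
        return self._store[key]


calls = []  # records every real "model" invocation


def fake_model(request: dict) -> str:
    """Stub standing in for an LLM API call (an assumption for this sketch)."""
    calls.append(request)
    return f"answer to: {request['prompt']}"


cache = SeededCache(seed=41)
request = {"model": "gpt-4-0613", "prompt": "Who directed Oppenheimer?"}
first = cache.complete(request, fake_model)
second = cache.complete(request, fake_model)  # served from the cache
```

After the two calls, `calls` holds a single entry, because the second request never reached the stub model.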

Now, let’s create the first agent user_proxy that has the ability to run the code in the user environment.

user_proxy = autogen.UserProxyAgent(
    name="User_proxy",
    system_message="A human admin.",
    code_execution_config={"last_n_messages": 2, "work_dir": "groupchat"},
    human_input_mode="TERMINATE"
)

The code_execution_config argument controls how code is executed: last_n_messages is the number of recent messages to look back through for code to execute, and work_dir is the working directory in which the code runs. Setting human_input_mode to “TERMINATE” means the agent asks for human input only when a termination condition is reached, so the user decides whether to end the conversation or keep it going. You can also set it to “NEVER”, which requires no human interaction at all.
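The sketch below illustrates, under my own assumptions, what "look back through the last n messages for code and run it in a working directory" could look like. The helpers `find_code` and `run_code` and the file name `generated_script.py` are hypothetical and are not AutoGen's internals. Running model-generated code is risky, so treat this as a local toy only.

```python
import re
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import List

FENCE = "`" * 3  # built at runtime so this example can itself sit inside a fence
CODE_BLOCK = re.compile(FENCE + r"python\n(.*?)" + FENCE, re.DOTALL)


def find_code(messages: List[str], last_n_messages: int) -> List[str]:
    """Collect fenced Python blocks from the most recent messages only."""
    if last_n_messages <= 0:
        return []
    recent = messages[-last_n_messages:]
    return [block for text in recent for block in CODE_BLOCK.findall(text)]


def run_code(code: str, work_dir: str) -> str:
    """Write the code into work_dir and run it with the current interpreter."""
    folder = Path(work_dir)
    folder.mkdir(parents=True, exist_ok=True)
    script = folder / "generated_script.py"
    script.write_text(code, encoding="utf-8")
    result = subprocess.run(
        [sys.executable, script.name],
        cwd=folder, capture_output=True, text=True, timeout=60,
    )
    return result.stdout


if __name__ == "__main__":
    chat = [
        "An older message with no code.",
        "Try this:\n" + FENCE + "python\nprint(2 + 3)\n" + FENCE,
    ]
    with tempfile.TemporaryDirectory() as tmp:
        for block in find_code(chat, last_n_messages=2):
            print(run_code(block, tmp))
```

A small last_n_messages keeps the executor from re-running stale code that appeared much earlier in the conversation.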

The second agent is an AI-driven software coder who can write Python code based on conversation content like requests and runtime outputs.

coder = autogen.AssistantAgent(
    name="Coder",
    llm_config=llm_config,
)

The third agent is another AI-driven one called a history researcher who can answer historical questions from reading materials.

pm = autogen.AssistantAgent(
    name="History_researcher",
    system_message="Good at finding facts in historical movies",
    llm_config=llm_config,
)

Consolidate these agents into a “studio” GroupChat. The max_round argument caps the total number of chat rounds, which keeps cost under control.

groupchat = autogen.GroupChat(agents=[user_proxy, coder, pm], messages=[], max_round=12)
manager = autogen.GroupChatManager(groupchat=groupchat, llm_config=llm_config)

Start the group chat with a single prompt.

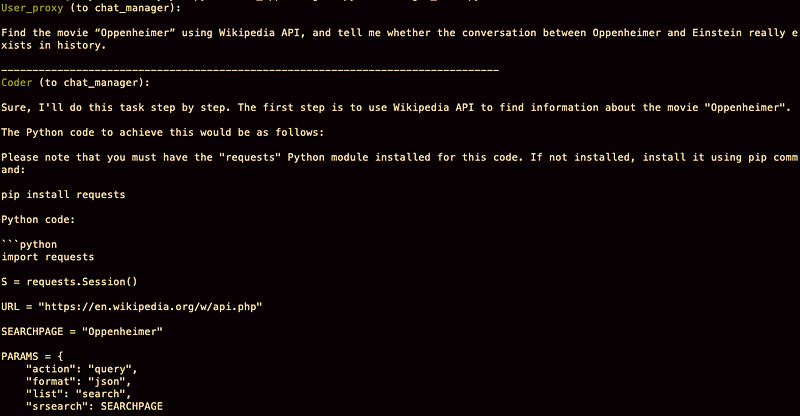

user_proxy.initiate_chat(manager, message="""
Find the movie “Oppenheimer” using Wikipedia API,
and tell me whether the conversation between Oppenheimer and Einstein
really exists in history.
""")

It’s time for the fun part: seeing how your agents handle the task request.

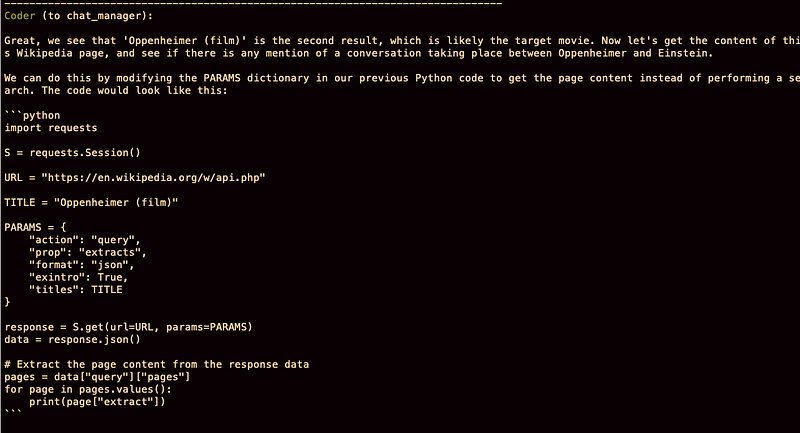

Chat 1: Coder — generating code for Wikipedia

The “Coder” is the first to act on the query, generating Python source code to fetch the information about “Oppenheimer” from Wikipedia. The tricky part for the Coder is that several Wikipedia topics mention Oppenheimer, so it first has to generate code that lists them and then let the other agents decide which one refers to the movie.
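The code the Coder actually produced is only shown in the screenshots, so the following is my own sketch of what a title-listing step could look like against the public MediaWiki search API. The helper names and the User-Agent string are assumptions, and `search_titles` makes a live network request, so I only exercise the pure helpers here.

```python
import json
from typing import List
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API_URL = "https://en.wikipedia.org/w/api.php"


def build_search_url(term: str, limit: int = 5) -> str:
    """Build a MediaWiki full-text search URL for the given term."""
    params = {
        "action": "query",
        "list": "search",
        "srsearch": term,
        "srlimit": limit,
        "format": "json",
    }
    return API_URL + "?" + urlencode(params)


def parse_titles(payload: dict) -> List[str]:
    """Pull page titles out of a search response; empty list if none."""
    return [hit["title"] for hit in payload.get("query", {}).get("search", [])]


def search_titles(term: str, limit: int = 5) -> List[str]:
    """Live lookup (needs network access); the User-Agent value is made up."""
    request = Request(
        build_search_url(term, limit),
        headers={"User-Agent": "autogen-walkthrough-example/0.1"},
    )
    with urlopen(request, timeout=10) as response:
        return parse_titles(json.load(response))


# Hand-written sample in the shape of a search response (not real API output).
sample_payload = {
    "query": {
        "search": [
            {"title": "J. Robert Oppenheimer"},
            {"title": "Oppenheimer (film)"},
        ]
    }
}
candidate_titles = parse_titles(sample_payload)
```

Listing the candidates first and choosing afterwards mirrors the two-step approach the Coder takes in this chat.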

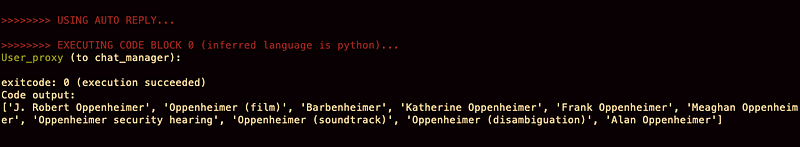

Chat 2: User_proxy — executing the code for titles listing

As defined earlier, the User_proxy is responsible for running the code and returning its output: the list of candidate Oppenheimer topics.

Chat 3: Coder — pick up the title and generate the code for the content request

This is the second round of code generation, now that the Coder knows which Wikipedia title is the right one for the movie: Oppenheimer (film).

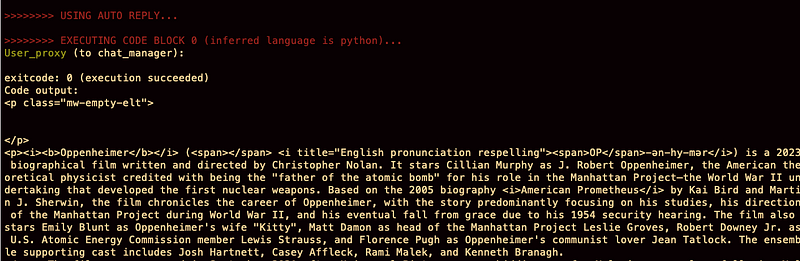

Chat 4: User_proxy — scrape the wiki content by code execution

Chat 5: History_researcher — study the wiki content and find out the answer

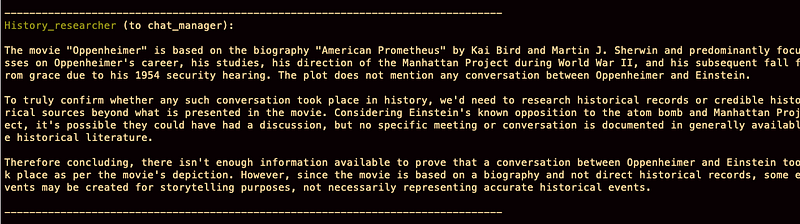

Finally, it’s the turn of the history researcher, who is capable of answering questions about historical facts from text materials. As expected, this agent gave quite a professional answer about whether the conversation between Oppenheimer and Einstein shown in the movie really took place in history.

Since the final answer has been generated, the conversation is ready for termination.
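How does an agent know the conversation is ready to end? One common convention is for the assistant to finish its last reply with the word TERMINATE. The predicate below is a minimal sketch of such a check, assuming messages are dictionaries with a "content" key. AutoGen agents accept a callable of this shape through the is_termination_msg parameter, but the exact rule here is my own and is not taken from the article's run.

```python
from typing import Dict, Optional


def is_termination_msg(message: Dict[str, Optional[str]]) -> bool:
    """Return True when a message's content ends with the word TERMINATE."""
    content = message.get("content") or ""  # content may be missing or None
    return content.rstrip().endswith("TERMINATE")


final_reply = {"content": "The scene is fictional.\nTERMINATE"}
ongoing_reply = {"content": "Still reading the article."}
finished = is_termination_msg(final_reply)
still_running = is_termination_msg(ongoing_reply)
```

With human_input_mode set to “TERMINATE”, a message that passes this kind of check is the point at which the user proxy would ask the human whether to stop.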

3. Conclusion

The above example is one of the simplest use cases for AutoGen, and it leaves many advanced features unexplored. For instance, if you need an agent with RAG capability, you can define a RetrieveUserProxyAgent, which retrieves documents to supply a larger context. Or you can add a function-calling setting to an agent so that it can run external tools for sophisticated tasks. More information can be found in AutoGen’s official documentation.
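To give a feel for the function-calling idea, here is a small sketch of the two pieces involved: a schema that describes a tool to the model in the OpenAI function-calling format, and a dispatcher that runs the tool when the model asks for it. The `word_count` tool, `FUNCTION_MAP`, and `dispatch` are hypothetical names for illustration, and the wiring into an AutoGen agent is deliberately left out.

```python
import json
from typing import Any, Callable, Dict


def word_count(text: str) -> int:
    """A trivial example tool: count whitespace-separated words."""
    return len(text.split())


# Schema describing the tool to the model (OpenAI function-calling format).
WORD_COUNT_SCHEMA = {
    "name": "word_count",
    "description": "Count the words in a piece of text.",
    "parameters": {
        "type": "object",
        "properties": {
            "text": {"type": "string", "description": "Text to count."},
        },
        "required": ["text"],
    },
}

# Maps tool names to the Python callables that implement them.
FUNCTION_MAP: Dict[str, Callable[..., Any]] = {"word_count": word_count}


def dispatch(function_call: Dict[str, str]) -> Any:
    """Run the tool named in a model-issued call; arguments arrive as JSON text."""
    func = FUNCTION_MAP[function_call["name"]]
    arguments = json.loads(function_call["arguments"])
    return func(**arguments)


# A call shaped like what a model would return (hand-written for this sketch).
model_call = {
    "name": "word_count",
    "arguments": json.dumps({"text": "agents that can chat"}),
}
result = dispatch(model_call)
```

The model only ever sees the schema, and the agent that executes code is the one holding the actual function.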
