LangChain — Prompt Template

What is a Prompt

A “prompt” is a carefully crafted input that is given to a language model to elicit a specific desired response. Prompts are essentially instructions or questions designed to guide the model’s output in a certain direction. They play a crucial role in interacting with models like GPT (Generative Pre-trained Transformer) and other AI language systems.

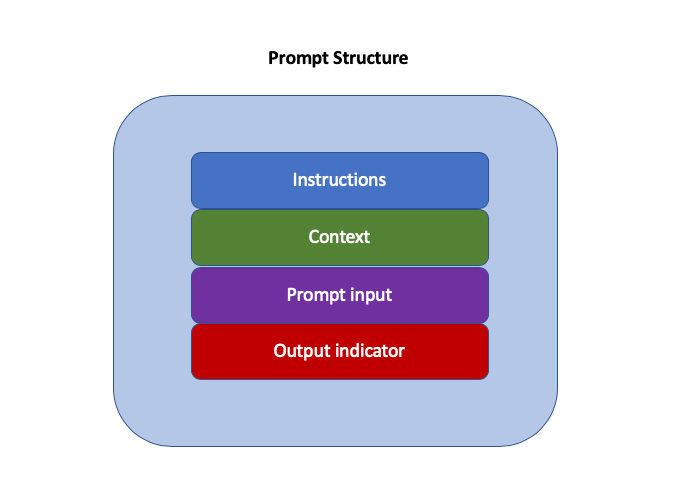

A basic prompt structure looks like:

- Instructions: Tells the model what the overall task is and how to approach it, for example how to use the external information provided, how to handle queries, and how to structure the output. This is usually the most constant part of a prompt template. A common pattern is to tell the model “You are a helpful XX assistant,” which encourages it to take its role more seriously.

- Context: It acts as an additional source of knowledge for the model. This information can be manually inserted into the prompt, retrieved through vector database searches, or brought in through other methods (like calling APIs, using calculators, etc.). A common application is to pass knowledge obtained from a vector database search to the model as context.

- Prompt Input: The specific question or task for the model to address. It could be merged into the “Instructions” section, but keeping it as a separate component makes the structure clearer and the template easier to reuse. It is typically passed to the prompt template as a variable before the model is invoked, producing a concrete prompt.

- Output Indicator: Marks the beginning of the text to be generated, much like writing “Solution:” on a school math test before your answer. When generating Python code, for example, the word “import” can signal the model to start writing Python (most Python scripts begin with imports). This part is often unnecessary when chatting with ChatGPT, but LangChain agents commonly use “Thought:” as a lead-in that prompts the model to start writing out its reasoning.
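To make the four components concrete, here is a small, dependency-free sketch that stitches them into a single prompt string. The helper `build_prompt`, its parameter names, and the flower-shop data are hypothetical illustrations, not part of LangChain; it only shows one plausible ordering of instructions, context, prompt input, and output indicator.

```python
# A minimal sketch: assemble a prompt from the four components described above.
# `build_prompt` is a hypothetical helper for illustration, not a LangChain API.

def build_prompt(instructions: str, context: str, prompt_input: str,
                 output_indicator: str) -> str:
    """Join the components in order, separated by blank lines."""
    sections = [
        instructions,               # usually constant across calls
        f"Context:\n{context}",     # e.g. text retrieved from a vector database
        f"Question: {prompt_input}",  # the variable, per-request part
        output_indicator,           # marks where the model's answer should begin
    ]
    return "\n\n".join(sections)


prompt = build_prompt(
    instructions="You are a helpful flower-shop assistant. Answer using only the context.",
    context="Roses cost $5 each. Tulips cost $3 each.",
    prompt_input="How much do two roses cost?",
    output_indicator="Answer:",
)
print(prompt)
```

Ending the string with the output indicator leaves the model positioned to continue directly with its answer.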

Types of Prompt Templates in LangChain

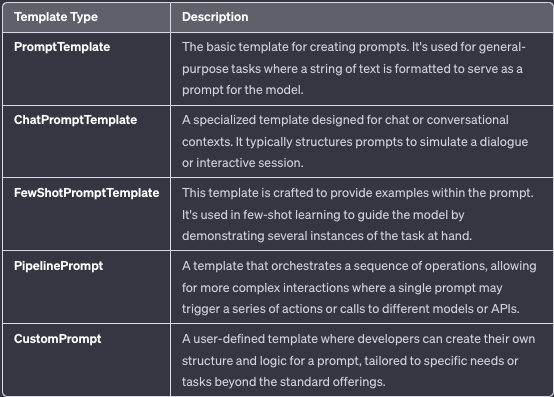

LangChain offers two fundamental kinds of prompt templates, string templates (StringPromptTemplate) and chat templates (BaseChatPromptTemplate), and builds its more specialized prompt templates on top of them.

You can import these templates in LangChain as follows:

```python
# Import paths from the classic `langchain` package
# (newer releases expose most of these classes from `langchain_core.prompts`).
from langchain.prompts.prompt import PromptTemplate
from langchain.prompts import FewShotPromptTemplate
from langchain.prompts.pipeline import PipelinePromptTemplate
from langchain.prompts import ChatPromptTemplate
from langchain.prompts import (
    ChatMessagePromptTemplate,
    SystemMessagePromptTemplate,
    AIMessagePromptTemplate,
    HumanMessagePromptTemplate,
)
```

PromptTemplate

A simple example:

```python
from langchain import prompts

template = """\
You are a business consulting advisor.
Can you suggest a good name for an e-commerce company that sells {product}?
"""

prompt = prompts.PromptTemplate.from_template(template)
print(prompt.format(product="toy"))
```

This program builds a prompt that asks the model to suggest a company name, tailored to whatever product the user specifies.

A convenient feature of LangChain templates is that the from_template method automatically extracts variable names (such as product) from the template string, so you don’t need to declare them explicitly. As a result, product becomes a keyword argument of the format method.
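The extraction idea can be reproduced with the standard library’s `string.Formatter().parse`, which splits an f-string-style template into literal text and field names. This is a sketch that only approximates what `from_template` does for f-string templates; the helper name `extract_variables` is hypothetical.

```python
from string import Formatter


def extract_variables(template: str) -> list[str]:
    """Return the unique placeholder names in an f-string-style template, in order."""
    names: list[str] = []
    for _literal, field_name, _spec, _conversion in Formatter().parse(template):
        # field_name is None for trailing literal text that has no placeholder
        if field_name is not None and field_name not in names:
            names.append(field_name)
    return names


template = (
    "You are a business consulting advisor.\n"
    "Can you suggest a good name for an e-commerce company that sells {product}?"
)
variables = extract_variables(template)
print(variables)
# Each extracted name can then be supplied as a keyword argument.
print(template.format(product="toy"))
```

Because the names come straight from the template, adding a new `{placeholder}` to the string is enough to make it a required argument.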

You can also specify input_variables manually through the constructor of the prompt template class, as in the following example:

```python
from langchain.prompts import PromptTemplate

prompt = PromptTemplate(
    input_variables=["product", "market"],
    template="You are a business consulting advisor. For a company focusing on selling {product} in the {market} market, what name would you recommend?",
)

print(prompt.format(product="flowers", market="middle"))
```

ChatPromptTemplate

For chat models such as OpenAI’s ChatGPT, LangChain also provides a set of templates, each corresponding to a specific message role. The following code shows the message roles used by OpenAI’s chat models:

```python
import openai

# Legacy interface: requires openai<1.0.
# In openai>=1.0 the equivalent call is OpenAI().chat.completions.create(...).
openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": "You are an informed sports assistant."},
        {"role": "user", "content": "Tell me about the 2022 FIFA World Cup."},
        {"role": "assistant", "content": "The 2022 FIFA World Cup is scheduled to be held in Qatar."},
        {"role": "user", "content": "What teams are considered favorites?"},
    ],
)
```

OpenAI specifies the following format for messages sent to gpt-3.5-turbo and GPT-4:

Messages must be an array of message objects, each with a role (system, user, or assistant) and content. The conversation can be as short as a single message or involve multiple exchanges.

Typically, a conversation is formatted with a system message first, followed by alternating user and assistant messages.

System messages help set the behavior of the assistant. For instance, you can modify the assistant’s personality or give specific instructions about how it should behave throughout the conversation. Note, however, that the system message is optional; without one, the model is likely to behave much as it would with a generic message such as “You are a helpful assistant.”

User messages provide requests or comments for the assistant to respond to. Assistant messages store previous assistant responses but can also be written by you to provide examples of the desired behavior.
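Since the messages format is just a list of dictionaries, it can be built and checked without any SDK. The sketch below is hypothetical (`make_messages` is not an OpenAI or LangChain function) and enforces the typical system-then-alternating-turns layout strictly, which is stricter than the API itself necessarily requires. It only constructs the data; it does not call any model.

```python
from typing import Optional

VALID_TURN_ROLES = {"user", "assistant"}


def make_messages(turns: list[tuple[str, str]],
                  system: Optional[str] = None) -> list[dict[str, str]]:
    """Build an OpenAI-style messages list from (role, content) pairs.

    An optional system message goes first; the remaining turns must alternate
    user/assistant, starting with user (a stricter check than the API requires).
    """
    messages: list[dict[str, str]] = []
    if system is not None:
        messages.append({"role": "system", "content": system})
    expected = "user"
    for role, content in turns:
        if role not in VALID_TURN_ROLES:
            raise ValueError(f"unexpected role: {role!r}")
        if role != expected:
            raise ValueError(f"expected a {expected!r} message, got {role!r}")
        messages.append({"role": role, "content": content})
        # Flip the expected role for the next turn.
        expected = "assistant" if role == "user" else "user"
    return messages


messages = make_messages(
    system="You are an informed sports assistant.",
    turns=[
        ("user", "Tell me about the 2022 FIFA World Cup."),
        ("assistant", "The 2022 FIFA World Cup was held in Qatar."),
        ("user", "Which teams were considered favorites?"),
    ],
)
for message in messages:
    print(f'{message["role"]}: {message["content"]}')
```

The assistant turn here is written by hand, which is exactly how you can supply an example of the desired behavior.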

LangChain’s ChatPromptTemplate family is designed around these same roles. For example:

```python
# Import the chat message template classes
from langchain.prompts import (
    ChatPromptTemplate,
    SystemMessagePromptTemplate,
    HumanMessagePromptTemplate,
)

# Build the templates
template = "You are a creative marketing expert, tasked with brainstorming advertising strategies for {product} companies."
system_message_prompt = SystemMessagePromptTemplate.from_template(template)

human_template = "Our focus is on {product_detail}, targeting the youth market."
human_message_prompt = HumanMessagePromptTemplate.from_template(human_template)

prompt_template = ChatPromptTemplate.from_messages([system_message_prompt, human_message_prompt])

# Format the prompt into a list of messages
prompt = prompt_template.format_prompt(product="tech gadgets", product_detail="eco-friendly smart watches").to_messages()

# Call the chat model with the messages to generate a result
from langchain.chat_models import ChatOpenAI

chat = ChatOpenAI()
result = chat(prompt)
print(result)
```

Outputs:

```
content='xxxxxx' additional_kwargs={} example=False
```

FewShotPromptTemplate

Few-shot learning is a crucial concept in prompt engineering. It corresponds to the second guideline in OpenAI’s prompt engineering recommendations: provide the model with references or examples.

The terms Few-Shot (a few examples), One-Shot (a single example), and Zero-Shot (no examples) come from machine learning. The ability of models to learn new concepts or categories from very few or even no examples is valuable for many real-world problems, because collecting large amounts of labeled data is often infeasible.

Let’s create some sample inputs:

```python
samples = [
    {
        "toy_type": "Building Blocks",
        "occasion": "Learning",
        "ad_copy": "Building blocks, perfect for sparking creativity, are the ideal choice for educational play."
    },
    {
        "toy_type": "Teddy Bear",
        "occasion": "Comfort",
        "ad_copy": "Teddy bears, a cuddly companion, offer comfort and friendship to children."
    },
    {
        "toy_type": "Lego sports car",
        "occasion": "Birthday",
        "ad_copy": "Lego sport cars bring thrilling adventures to playtime, perfect for a birthday gift."
    },
    {
        "toy_type": "Puzzle",
        "occasion": "Brain Teasing",
        "ad_copy": "Puzzles challenge the mind and offer hours of problem-solving fun for all ages."
    }
]
```

Based on these samples, let’s create a template:

```python
from langchain.prompts.prompt import PromptTemplate

template = "Toy Type: {toy_type}\nOccasion: {occasion}\nAd Copy: {ad_copy}"
prompt_sample = PromptTemplate(input_variables=["toy_type", "occasion", "ad_copy"],
                               template=template)
print(prompt_sample.format(**samples[0]))
```

In this step, we created a PromptTemplate object, which generates prompts from a template string and a set of input variables. Here, the input variables are “toy_type”, “occasion”, and “ad_copy”.

The template is a string in which variable names enclosed in curly braces are replaced by the corresponding values. This turns the dictionary-based examples into usable LangChain prompts: for instance, the code above fills the template with the data from samples[0] to produce a complete prompt.
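Because PromptTemplate’s default template format uses the same curly-brace placeholders as Python’s built-in `str.format`, the substitution step can be reproduced without LangChain. This self-contained sketch repeats one sample only to show what the formatted string looks like; it is not LangChain’s implementation.

```python
# Plain-Python equivalent of prompt_sample.format(**samples[0]).
template = "Toy Type: {toy_type}\nOccasion: {occasion}\nAd Copy: {ad_copy}"

sample = {
    "toy_type": "Building Blocks",
    "occasion": "Learning",
    "ad_copy": "Building blocks, perfect for sparking creativity, are the ideal choice for educational play.",
}

# Each {name} in the template is replaced by sample[name].
formatted = template.format(**sample)
print(formatted)
```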

Create FewShotPromptTemplate

Next, using the prompt_sample created in the previous step and all the examples in the samples list, we create a FewShotPromptTemplate object that generates a more complex prompt.

```python
# Create a FewShotPromptTemplate object
from langchain.prompts.few_shot import FewShotPromptTemplate

prompt = FewShotPromptTemplate(
    examples=samples,
    example_prompt=prompt_sample,
    suffix="Toy Type: {toy_type}\nOccasion: {occasion}",
    input_variables=["toy_type", "occasion"]
)

print(prompt.format(toy_type="Miniature Train", occasion="Playtime"))
```

Output:

```
Toy Type: Building Blocks
Occasion: Learning
Ad Copy: Building blocks, perfect for sparking creativity, are the ideal choice for educational play.

Toy Type: Teddy Bear
Occasion: Comfort
Ad Copy: Teddy bears, a cuddly companion, offer comfort and friendship to children.

Toy Type: Lego sports car
Occasion: Birthday
Ad Copy: Lego sport cars bring thrilling adventures to playtime, perfect for a birthday gift.

Toy Type: Puzzle
Occasion: Brain Teasing
Ad Copy: Puzzles challenge the mind and offer hours of problem-solving fun for all ages.

Toy Type: Miniature Train
Occasion: Playtime
```

As you can see, FewShotPromptTemplate is a more elaborate prompt template that combines multiple examples with a final prompt, and the examples guide the model toward producing output in the same form. Here, the resulting prompt ends with the toy type “Miniature Train” and the occasion “Playtime”, leaving the ad copy for the model to write.
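Conceptually, the few-shot prompt above is each example rendered with the example template, joined by a separator, with the filled-in suffix appended. The sketch below reproduces that assembly in plain Python, assuming a blank-line separator (`"\n\n"`, consistent with the output shown) and an optional prefix; `format_few_shot` is a hypothetical helper, not LangChain’s code, and uses only two of the samples for brevity.

```python
def format_few_shot(examples, example_template, suffix,
                    separator="\n\n", prefix="", **inputs):
    """Render each example, then append the suffix filled with the user inputs."""
    pieces = [prefix]
    pieces += [example_template.format(**example) for example in examples]
    pieces.append(suffix.format(**inputs))
    # Drop empty pieces (e.g. an empty prefix) so no stray separators appear.
    return separator.join(piece for piece in pieces if piece)


example_template = "Toy Type: {toy_type}\nOccasion: {occasion}\nAd Copy: {ad_copy}"
examples = [
    {"toy_type": "Teddy Bear", "occasion": "Comfort",
     "ad_copy": "Teddy bears, a cuddly companion, offer comfort and friendship to children."},
    {"toy_type": "Puzzle", "occasion": "Brain Teasing",
     "ad_copy": "Puzzles challenge the mind and offer hours of problem-solving fun for all ages."},
]

prompt = format_few_shot(
    examples,
    example_template,
    suffix="Toy Type: {toy_type}\nOccasion: {occasion}",
    toy_type="Miniature Train",
    occasion="Playtime",
)
print(prompt)
```

Because the suffix stops right after “Occasion:”, the model’s most natural continuation is an “Ad Copy:” line in the style of the examples.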

Use LLM

Finally, we pass the formatted prompt to the model to obtain ad copy generated from it:

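```python
from langchain.llms import OpenAI

# text-davinci-003 has been retired by OpenAI;
# gpt-3.5-turbo-instruct is the recommended completions-style replacement.
model = OpenAI(model_name="gpt-3.5-turbo-instruct")
result = model(prompt.format(toy_type="Miniature Train", occasion="Playtime"))
print(result)
```

Use Example Selector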

If we have a large number of examples, sending all of them to the model with every request is impractical and inefficient. Including too many tokens in each request also adds unnecessary cost, since OpenAI charges by the number of tokens used.

LangChain provides example selectors for choosing the most relevant examples. (The SemanticSimilarityExampleSelector used here compares vector embeddings, so a vector database must be installed; here I use Chroma.)

```python
from langchain.prompts.example_selector import SemanticSimilarityExampleSelector
from langchain.vectorstores import Chroma
from langchain.embeddings import OpenAIEmbeddings

# Initialize the example selector; k=1 keeps only the single most similar example
example_selector = SemanticSimilarityExampleSelector.from_examples(
    samples,
    OpenAIEmbeddings(),
    Chroma,
    k=1
)

# Create a FewShotPromptTemplate object that uses the example selector
prompt = FewShotPromptTemplate(
    example_selector=example_selector,
    example_prompt=prompt_sample,
    suffix="Toy Type: {toy_type}\nOccasion: {occasion}",
    input_variables=["toy_type", "occasion"]
)

print(prompt.format(toy_type="Toy Car", occasion="Birthday"))
```

In this revised code, LangChain’s SemanticSimilarityExampleSelector picks the examples from samples that are most relevant to the input. A FewShotPromptTemplate is then built around this selector, with the same example template, suffix, and input variables as before. Finally, the prompt is formatted with the toy type “Toy Car” and the occasion “Birthday” and printed.
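To show the selection idea without an embedding model or a vector store, the sketch below swaps OpenAI embeddings and Chroma for a simple bag-of-words cosine similarity. This is a deliberately crude stand-in: it only rewards shared words, not shared meaning, whereas SemanticSimilarityExampleSelector compares embedding vectors. The helpers `select_examples`, `_vectorize`, and `_cosine` are hypothetical, and the samples are shortened to the two fields being compared.

```python
import math
import re
from collections import Counter


def _vectorize(text: str) -> Counter:
    """Lower-cased word counts: a crude stand-in for an embedding vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_examples(examples: list[dict], query: dict, k: int = 1,
                    keys: tuple[str, ...] = ("toy_type", "occasion")) -> list[dict]:
    """Return the k examples whose `keys` fields are most similar to the query."""
    query_vec = _vectorize(" ".join(query[key] for key in keys))
    scored = [
        (_cosine(query_vec, _vectorize(" ".join(ex[key] for key in keys))), i)
        for i, ex in enumerate(examples)
    ]
    # Highest similarity first; ties keep the original order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [examples[i] for _score, i in scored[:k]]


toy_samples = [
    {"toy_type": "Building Blocks", "occasion": "Learning"},
    {"toy_type": "Teddy Bear", "occasion": "Comfort"},
    {"toy_type": "Lego sports car", "occasion": "Birthday"},
    {"toy_type": "Puzzle", "occasion": "Brain Teasing"},
]
selected = select_examples(toy_samples, {"toy_type": "Toy Car", "occasion": "Birthday"}, k=1)
print(selected)  # [{'toy_type': 'Lego sports car', 'occasion': 'Birthday'}]
```

With k=1, only the closest example would be placed in the few-shot prompt, which keeps the request short as the number of stored examples grows.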