Exploring AI Vision: How to Use OpenAI’s Tools for Image and Video Analysis

Harnessing the Power of Sight: Innovative Applications with OpenAI’s Vision API

Introduction

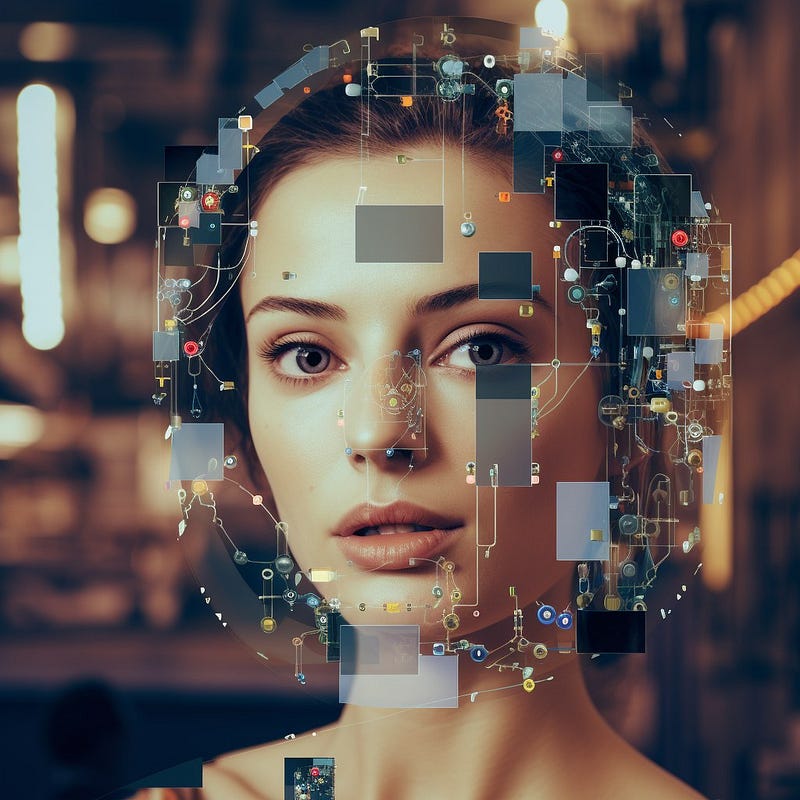

The rise of artificial intelligence has been nothing short of revolutionary, offering us the ability to see the world through the lens of advanced computational models. The OpenAI Vision API stands at the forefront of this revolution, providing developers with a powerful tool for image and video analysis. This article takes a hands-on approach to explore the capabilities of the OpenAI Vision API through a series of experiments and practical examples. By leveraging this API, we can transform the way we interact with visual data, making our applications not just see but also understand the visual world around us.

Exploring the OpenAI Vision API Through Roboflow’s Repository

The repository at roboflow/awesome-openai-vision-api-experiments serves as a treasure trove for anyone eager to delve into the world of visual AI. It contains a collection of examples demonstrating how to use the OpenAI Vision API to analyze images, video files, and live webcam streams. Here, we'll dissect these examples, provide best practices, and show you how to implement the code effectively.

Installation

    # create and activate virtual environment
    python3 -m venv venv
    source venv/bin/activate

    # install dependencies
    pip install -r requirements.txt

Inference on Images

Performing inference on static images is often the first step toward understanding the capabilities of any vision API. Let’s start by loading an image and sending it to the OpenAI Vision API for analysis.

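    import base64
    import os

    from openai import OpenAI

    # Assuming you've set your API key in your environment variables
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

    def analyze_image(image_path, prompt="Describe this image."):
        # Read the raw bytes and base64-encode them for a data URL
        with open(image_path, "rb") as image_file:
            image_b64 = base64.b64encode(image_file.read()).decode("utf-8")

        response = client.chat.completions.create(
            model="gpt-4o",  # assumption: substitute any vision-capable chat model
            messages=[{
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    # The MIME type below assumes a JPEG file
                    {"type": "image_url",
                     "image_url": {"url": f"data:image/jpeg;base64,{image_b64}"}},
                ],
            }],
        )
        return response.choices[0].message.content

    # Example usage
    image_analysis = analyze_image("path/to/your/image.jpg")
    print(image_analysis)

The function analyze_image reads the image bytes, base64-encodes them, and sends them to the API as a data URL inside a chat message, returning the model’s text reply. It’s essential to open the file in binary mode: reading an image as text would corrupt the bytes before they are ever encoded.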
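One way to pass a local image to a vision-capable chat model is as a base64 data URL, and the MIME type in that URL should match the actual file. The following is a minimal sketch of that preparation step using only the standard library. The helper names image_bytes_to_data_url and build_vision_message are hypothetical, chosen for illustration, and the message layout assumes the text-plus-image_url content format used by OpenAI's chat completions endpoint.

```python
import base64
import mimetypes


def image_bytes_to_data_url(image_bytes: bytes, filename: str) -> str:
    """Build a base64 data URL, guessing the MIME type from the file name."""
    mime_type, _ = mimetypes.guess_type(filename)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"Cannot infer an image MIME type from {filename!r}")
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_vision_message(prompt: str, data_url: str) -> dict:
    """Return one user message holding a text part and an image part."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }
```

Keeping payload construction separate from the network call makes it easy to unit-test the encoding without spending API credits.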

Inference on Video Files

The process for video files is similar to that for images, with the added complexity of handling many frames. To analyze a video, we extract frames and run inference on each one.

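    import base64

    import cv2
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def analyze_frame(frame, prompt="Describe this frame."):
        # Convert the raw frame to JPEG, then base64-encode it for a data URL
        ok, buffer = cv2.imencode(".jpg", frame)
        if not ok:
            raise ValueError("Could not encode frame as JPEG")
        frame_b64 = base64.b64encode(buffer.tobytes()).decode("utf-8")
        response = client.chat.completions.create(
            model="gpt-4o",  # assumption: substitute any vision-capable chat model
            messages=[{"role": "user", "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url",
                 "image_url": {"url": f"data:image/jpeg;base64,{frame_b64}"}},
            ]}],
        )
        return response.choices[0].message.content

    def analyze_video(video_path):
        cap = cv2.VideoCapture(video_path)
        frames_results = []
        try:
            while True:
                ret, frame = cap.read()
                if not ret:
                    break
                frames_results.append(analyze_frame(frame))
        finally:
            # Release the file handle even if a request fails
            cap.release()
        return frames_results

    # Example usage
    video_analysis = analyze_video("path/to/your/video.mp4")
    for frame_analysis in video_analysis:
        print(frame_analysis)

Here, we use OpenCV to read the video file, extract frames, and encode each one as JPEG before sending it to the API. This conversion is necessary because OpenCV yields frames as raw pixel arrays, while the API expects an encoded image such as JPEG or PNG. Note that this loop sends one request per frame, so anything longer than a short clip will be slow and costly unless you sample frames.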
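Sending every frame of a video is rarely necessary, since neighbouring frames are nearly identical. A rough sketch of one alternative is to pick frames at a fixed time interval. The function name sample_frame_indices and its parameters are hypothetical, and the approach assumes the frame count and frame rate are known up front.

```python
def sample_frame_indices(total_frames: int, fps: float, every_seconds: float) -> list[int]:
    """Return indices of frames spaced roughly `every_seconds` apart."""
    if total_frames <= 0:
        return []
    if fps <= 0 or every_seconds <= 0:
        raise ValueError("fps and every_seconds must be positive")
    # Number of frames to skip between samples; never less than one
    step = max(1, round(fps * every_seconds))
    return list(range(0, total_frames, step))
```

With OpenCV, the two inputs can usually be read from the capture object via cap.get(cv2.CAP_PROP_FRAME_COUNT) and cap.get(cv2.CAP_PROP_FPS), although some containers report them unreliably. Inside the read loop, a frame counter checked against the returned indices decides which frames are sent.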

Inference on Webcam Streams

To perform real-time analysis on webcam streams, we follow a similar method to video files but with a continuous stream of frames.

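    import base64

    import cv2
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def analyze_webcam_stream(prompt="Describe this frame."):
        cap = cv2.VideoCapture(0)  # 0 is typically the default camera
        try:
            while True:
                ret, frame = cap.read()
                if not ret:
                    break
                # Convert the raw frame to JPEG, then base64-encode it for a data URL
                ok, buffer = cv2.imencode(".jpg", frame)
                if not ok:
                    continue  # skip frames that fail to encode
                frame_b64 = base64.b64encode(buffer.tobytes()).decode("utf-8")
                response = client.chat.completions.create(
                    model="gpt-4o",  # assumption: substitute any vision-capable chat model
                    messages=[{"role": "user", "content": [
                        {"type": "text", "text": prompt},
                        {"type": "image_url",
                         "image_url": {"url": f"data:image/jpeg;base64,{frame_b64}"}},
                    ]}],
                )
                print(response.choices[0].message.content)
                # Add here the logic to display the frame or the analysis results
        finally:
            cap.release()

    # Example usage
    analyze_webcam_stream()

In this scenario, we capture each frame from the webcam, process it, and print the analysis as it arrives. Because each API call blocks until the model replies, the loop runs at the pace of the API rather than the camera. It’s important to manage resources carefully: the try/finally block ensures the capture device is released even if the loop is interrupted, for example with Ctrl+C.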
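A webcam delivers frames far faster than it is sensible to send them to a remote API. One simple way to cap the request rate is a small throttle that lets a frame through only after a minimum interval has elapsed. This is an illustrative sketch; the Throttle class is hypothetical and not part of OpenCV or the OpenAI SDK.

```python
import time


class Throttle:
    """Allow an action at most once every `min_interval` seconds."""

    def __init__(self, min_interval: float, clock=time.monotonic):
        self.min_interval = min_interval
        self._clock = clock  # injectable so the class can be tested without waiting
        self._last = None    # time of the last allowed action

    def ready(self) -> bool:
        """Return True, and record the time, if enough time has passed."""
        now = self._clock()
        if self._last is None or now - self._last >= self.min_interval:
            self._last = now
            return True
        return False
```

In a capture loop, creating throttle = Throttle(2.0) once and wrapping the API call in if throttle.ready(): would send at most one frame every two seconds, while the loop keeps reading frames so the camera buffer stays fresh.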

Best Practices

When working with the OpenAI Vision API, there are several best practices you should follow:

- Rate Limiting: Be mindful of the API’s rate limits to avoid service interruptions.

- Error Handling: Implement robust error handling to deal with network issues or invalid inputs.

- Data Privacy: Ensure that you handle sensitive data according to privacy regulations and guidelines.

- Resource Management: When dealing with video and webcam streams, manage the system’s resources to prevent memory leaks and ensure the application’s responsiveness.
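The rate limiting and error handling points can be combined in a retry helper with exponential backoff. The sketch below is one possible shape, not a prescribed pattern. The name call_with_retries and its parameters are hypothetical, and which exceptions are worth retrying depends on the client library in use (with the OpenAI Python SDK, candidates might include openai.RateLimitError and openai.APIConnectionError).

```python
import random
import time


def call_with_retries(func, max_attempts=4, base_delay=1.0,
                      retry_on=(Exception,), sleep=time.sleep):
    """Call func(); on a listed exception, wait and retry with backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Delay doubles each attempt (base, 2*base, 4*base, ...) plus up to 10% jitter
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
```

A call such as call_with_retries(lambda: analyze_image("photo.jpg"), retry_on=(ConnectionError,)) would retry only on the listed exception types and let everything else, such as an invalid file path, fail immediately.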

Conclusion

The OpenAI Vision API is a gateway to unearthing the immense potential that lies within visual data. By tapping into this API, we arm our applications with the perception needed to interpret and interact with the world in groundbreaking ways. Through the examples provided, we’ve seen how to harness the API’s power for static images, video files, and real-time webcam streams. The key lies in understanding the nuances of each medium — whether it’s the singular focus of a still image, the dynamic nature of video frames, or the immediacy of live video feed.

When integrating the OpenAI Vision API into your projects, remember that experimentation is as crucial as implementation. Testing various scenarios, different types of visual content, and diverse environments will give you a richer understanding of the API’s capabilities and limitations.

Moreover, the field of AI is in constant flux, with new models and techniques emerging regularly. Keeping up with OpenAI’s latest releases, including changes to model names and SDK interfaces, will ensure that your applications remain at the cutting edge of technology.

The OpenAI Vision API is more than just a tool; it is a canvas for innovation. By combining the examples and best practices outlined here with your creativity and expertise, you can build solutions that not only see but also comprehend and act upon the visual world. Whether you’re building the next generation of interactive entertainment, revolutionizing the field of security with AI-powered surveillance, or crafting an AI assistant that understands the world as we do, the potential is limitless. The Vision API is your stepping stone to a future where AI and human vision converge, creating a landscape ripe for innovation and discovery.