CVEs: Security Bugs That Bite

As part of my Cybersecurity for Executives online book, I listed 20 questions to ask your security team. The first question was: "How many of our systems are running out-of-date software with known security flaws (otherwise known as CVEs)?" What is a CVE, where do CVEs come from, and why do they matter?

Do you know what CVE-2017-5638 is? It was the root cause of the Equifax breach. Attackers used this CVE to gain access to Equifax systems and expose the personal data of 143 million Americans. They exploited a known, published flaw in a specific piece of software to get onto an Equifax server. From that server, they moved to other computers in the company until they were ultimately able to access and steal data. The flaw had been published online two months earlier. If the company had acted when the vulnerability was published, it could have avoided this data breach.
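
To make "known and published flaw" concrete, here is a minimal Python sketch that checks a Struts version string against the affected ranges given in the public description of CVE-2017-5638 (Struts 2.3.x before 2.3.32 and 2.5.x before 2.5.10.1). The function names and the simple dotted-version parsing are illustrative assumptions. A real inventory would come from a scanner or software inventory, and version strings with suffixes such as "-SNAPSHOT" are not handled here.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Turn a dotted version like '2.3.31' into a comparable tuple (2, 3, 31)."""
    return tuple(int(part) for part in version.split("."))


# Affected ranges as stated in the CVE-2017-5638 description:
# Struts 2.3.x before 2.3.32 and 2.5.x before 2.5.10.1.
# Each entry is (release branch, first fixed version on that branch).
AFFECTED_BRANCHES = [
    ((2, 3), (2, 3, 32)),
    ((2, 5), (2, 5, 10, 1)),
]


def is_vulnerable_to_cve_2017_5638(version: str) -> bool:
    """Return True if the version falls in one of the affected ranges."""
    v = parse_version(version)
    for branch, first_fixed in AFFECTED_BRANCHES:
        # Tuple comparison: (2, 5, 10) < (2, 5, 10, 1) is True, as intended.
        if v[: len(branch)] == branch and v < first_fixed:
            return True
    return False


for installed in ["2.3.31", "2.3.32", "2.5.10", "2.5.10.1", "2.5.12"]:
    print(installed, is_vulnerable_to_cve_2017_5638(installed))
# 2.3.31 True
# 2.3.32 False
# 2.5.10 True
# 2.5.10.1 False
# 2.5.12 False
```

The check itself is trivial. The hard part for most organizations is knowing which versions are actually installed on every server.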

Here is one more example. CVE-2017-0144 is the underlying mechanism that facilitated the WannaCry ransomware outbreak, which shut down large companies and hospitals in 2017. Malware used this CVE to get onto systems. It then encrypted all the files, making them inaccessible, and demanded a ransom in bitcoin to get them back. This ransomware spreads from computer to computer, and from organization to organization, automatically. And it is still going! In August 2018, TSMC, the chipmaker that manufactures iPhone chips, said ransomware halted production for an entire day. WannaCry first shut down hospitals in the UK in 2017, yet Malwarebytes reports that, as of April 30, 2019, it is still one of the top five causes of data breaches in healthcare.

What is a CVE?

CVE stands for Common Vulnerabilities and Exposures. Each CVE identifies a publicly known security flaw in software or hardware that could be used to harm an organization's or an individual's computer systems. As researchers and vendors discover new vulnerabilities, they are added to the list of known CVEs so organizations can take action to protect themselves. Vulnerability databases typically assign each CVE a severity rating, such as a Common Vulnerability Scoring System (CVSS) score, that indicates how critical it is. Executives should ensure their organizations fix security vulnerabilities as soon as possible, starting with the most critical.
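
"Starting with the most critical" can be made mechanical. The sketch below assumes findings carry a CVSS v3.x base score (0.0 to 10.0). It maps each score to the standard CVSS v3.x qualitative ratings (None, Low, Medium, High, Critical) and sorts the highest first. The `Finding` class, the hosts, and the scores are made up for illustration. Real findings would come from a vulnerability scanner.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    finding_id: str    # hypothetical identifier, for illustration only
    host: str
    cvss_score: float  # CVSS v3.x base score, 0.0 to 10.0


def severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Order findings so the most critical ones are fixed first."""
    return sorted(findings, key=lambda f: f.cvss_score, reverse=True)


# Made-up findings for illustration.
findings = [
    Finding("EXAMPLE-1", "hr-laptop-07", 5.3),
    Finding("EXAMPLE-2", "web-01", 9.8),
    Finding("EXAMPLE-3", "file-server", 8.1),
]

for f in prioritize(findings):
    print(f.finding_id, f.host, f.cvss_score, severity(f.cvss_score))
# EXAMPLE-2 web-01 9.8 Critical
# EXAMPLE-3 file-server 8.1 High
# EXAMPLE-1 hr-laptop-07 5.3 Medium
```

Sorting by score alone is a simplification. Many teams also weigh whether a system is exposed to the internet and whether an exploit is known to be in use.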

In 1999, MITRE Corporation launched the CVE List to help organizations track flaws, primarily in software, though some hardware-related flaws have been included as well. The US National Institute of Standards and Technology (NIST) also publishes known CVEs in its National Vulnerability Database (NVD). Another site, CVE Details, sometimes has additional information. You can sign up for a newsletter from US-CERT to learn about new CVEs. Because this list of known hardware and software flaws is public, organizations can track problems that could affect their systems and do something about them.
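
Every entry on the CVE List has an identifier made of "CVE-", a four-digit year, and a sequence number of four or more digits, for example CVE-2017-5638. IDs copied from web pages and documents sometimes pick up typographic dashes, which break exact-match searches. Here is a minimal sketch of a normalizer. The function name is hypothetical, and it only repairs en and em dashes, case, and surrounding whitespace.

```python
import re

# CVE-<four-digit year>-<sequence number of four or more digits>
CVE_ID = re.compile(r"CVE-(\d{4})-(\d{4,})")


def normalize_cve_id(text: str) -> str:
    """Return a canonical CVE ID, fixing case and en/em dashes; raise if invalid."""
    cleaned = text.strip().upper().replace("\u2013", "-").replace("\u2014", "-")
    if CVE_ID.fullmatch(cleaned) is None:
        raise ValueError(f"not a CVE ID: {text!r}")
    return cleaned


print(normalize_cve_id("cve-2017\u20135638"))  # CVE-2017-5638
```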

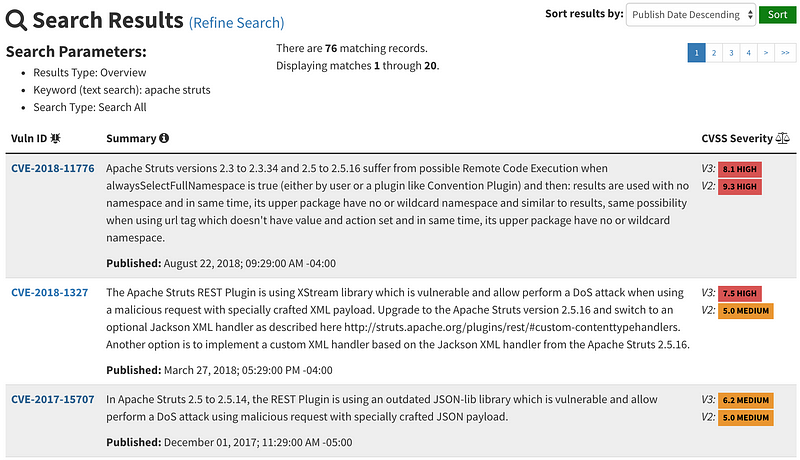

In the Equifax breach, the vulnerable software was Apache Struts. The NIST website and others let you search for vulnerabilities in a particular piece of software. This search shows CVEs in Apache Struts.
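
The same kind of search can be scripted. NIST's National Vulnerability Database offers a public CVE API (version 2.0) that accepts a `keywordSearch` parameter and returns JSON with a `vulnerabilities` list, where each item's `cve.id` holds the identifier. The sketch below relies only on those fields. The helper names are hypothetical, and only the first page of results is fetched.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import urlopen

NVD_CVE_API = "https://services.nvd.nist.gov/rest/json/cves/2.0"


def build_search_url(keyword: str, results_per_page: int = 20) -> str:
    """Build an NVD CVE API 2.0 keyword-search URL (spaces encoded as %20)."""
    params = {"keywordSearch": keyword, "resultsPerPage": results_per_page}
    return f"{NVD_CVE_API}?{urlencode(params, quote_via=quote)}"


def cve_ids(response: dict) -> list[str]:
    """Pull the CVE identifiers out of an NVD API 2.0 JSON response."""
    return [item["cve"]["id"] for item in response.get("vulnerabilities", [])]


def search_nvd(keyword: str) -> list[str]:
    """Fetch the first page of matching CVE IDs (makes a network request)."""
    with urlopen(build_search_url(keyword), timeout=30) as resp:
        return cve_ids(json.load(resp))
```

Calling `search_nvd("Apache Struts")` would return IDs whose descriptions mention both words. NVD applies stricter rate limits to requests made without an API key, so a script that runs regularly should request one.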

How are new vulnerabilities discovered?

Security flaws are sometimes exposed when security researchers test software or hardware. They intentionally attack systems using a variety of techniques, including looking for logic flaws in the code: faulty programming that did not consider every action an end user could take on the system. Researchers may also use a technique called fuzzing, in which they intentionally pass massive amounts of malformed data into every place the system accepts input. If the software does not correctly validate all inputs, the system could crash. A crash the researcher can reproduce may indicate a security flaw they can exploit to access the system or its data, or even to take complete control of the system.
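
Here is a toy version of fuzzing. The sketch feeds random strings to a deliberately fragile, hypothetical parser (`parse_record`) and records every input that makes it raise an unhandled exception. In Python a "crash" is just an exception. In memory-unsafe languages such as C, a crash can signal memory corruption, which is what makes it potentially exploitable. Real fuzzers such as AFL or libFuzzer are far smarter about choosing inputs, so this is only an illustration of the idea.

```python
import random
import string
from collections.abc import Callable


def parse_record(data: str) -> dict:
    """Hypothetical, deliberately fragile parser for 'name:age' records.

    It assumes every input has exactly one colon and a numeric age,
    which is exactly the kind of unchecked assumption fuzzing uncovers.
    """
    name, age = data.split(":")
    return {"name": name, "age": int(age)}


def fuzz(target: Callable[[str], object], iterations: int = 1000,
         seed: int = 0) -> list[tuple[str, str]]:
    """Feed random strings to target and collect (input, exception name) pairs."""
    rng = random.Random(seed)  # fixed seed so every crash can be reproduced
    alphabet = string.ascii_letters + string.digits + ":-"
    crashes = []
    for _ in range(iterations):
        data = "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 12)))
        try:
            target(data)
        except Exception as exc:  # any unhandled exception counts as a crash here
            crashes.append((data, type(exc).__name__))
    return crashes


crashes = fuzz(parse_record)
print(f"{len(crashes)} crashing inputs, for example {crashes[:3]}")
```

Each recorded input is a lead for the researcher. The next step is to work out why it fails and whether the failure can be abused.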

Security researchers may also examine malware that has infected systems and use a variety of techniques to reverse engineer how it works. Sometimes malware obfuscates itself to be sneaky, meaning the attacker makes it harder for anti-malware tools to recognize it and for security researchers to figure out what it is doing. Attackers do this using tools called packers or by converting readable text in the code into formats that are not human readable. Hackers may also hide attacks or data in manipulated network traffic. Some types of malware run only in a system's memory, without writing files to the machine.
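
Here is a toy illustration of turning text into a form that is "not human readable." The sketch XORs a string with a single-byte key and then Base64-encodes the result, so a tool searching for the original text no longer finds it. Once an analyst identifies the scheme and key, reversing it takes two steps. Real malware uses many different schemes. The key `0x5A` and the placeholder URL on the reserved example.com domain are assumptions for illustration.

```python
import base64


def xor_bytes(data: bytes, key: int) -> bytes:
    """XOR every byte with a single-byte key; applying it twice restores the input."""
    return bytes(b ^ key for b in data)


def obfuscate(text: str, key: int) -> str:
    """What an attacker might do: XOR the text, then Base64-encode it."""
    return base64.b64encode(xor_bytes(text.encode(), key)).decode("ascii")


def deobfuscate(blob: str, key: int) -> str:
    """What an analyst does once the scheme and key are known: reverse each step."""
    return xor_bytes(base64.b64decode(blob), key).decode()


secret = "http://example.com/payload"  # harmless placeholder URL
blob = obfuscate(secret, key=0x5A)
print(blob)                     # unreadable text; a search for "example.com" will not match it
print(deobfuscate(blob, 0x5A))  # http://example.com/payload
```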

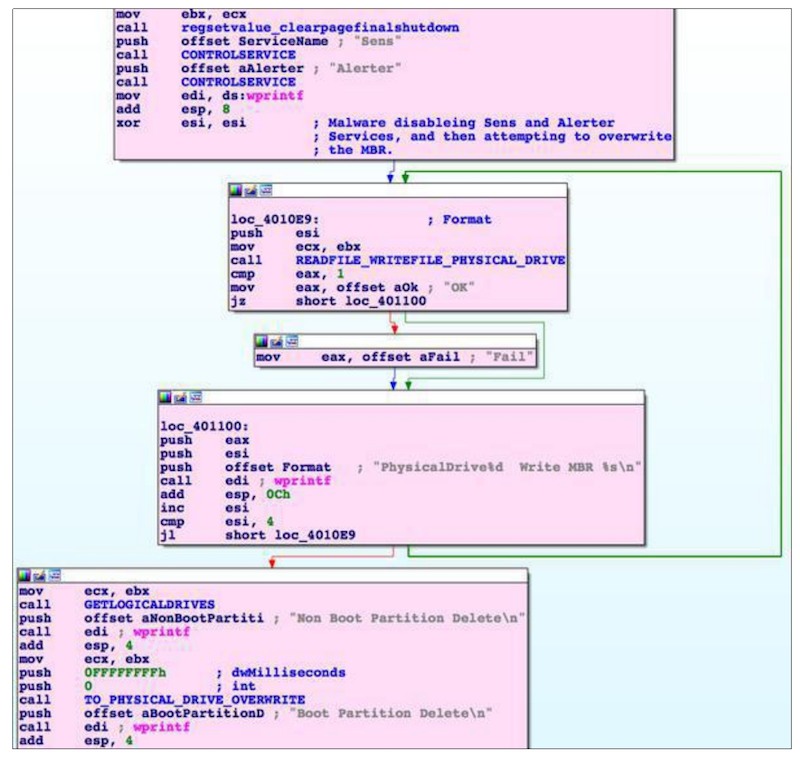

Researchers often need to open and run malware in tools called disassemblers and debuggers, such as IDA or Ghidra. Because the full source code is usually not available, these tools let the researcher see the low-level code, called assembly language, that interacts with the hardware. Researchers may also examine the underlying structure of PDF files, Word documents, or web pages to find malicious executable code that attackers have injected into them. Studying the network activity generated by malware is another useful way to learn what it is doing. These are just a few examples of how security researchers figure out how malware got onto a machine and what it is doing.
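
Looking at a PDF's structure can start with something as simple as counting suspicious keywords, in the spirit of Didier Stevens' pdfid tool. The minimal sketch below counts PDF name tokens such as `/JavaScript`, `/OpenAction`, and `/Launch` in a file's raw bytes. The keyword list is illustrative, not exhaustive. Content hidden inside compressed streams or in hex-escaped names (for example `/J#61vaScript`) will slip past this naive scan.

```python
import re
from collections import Counter

# PDF name objects that often appear in malicious documents (illustrative list).
SUSPICIOUS_NAMES = [b"JavaScript", b"JS", b"OpenAction", b"AA", b"Launch", b"EmbeddedFile"]

# Match "/Name" only when no further letters or digits follow,
# so "/JS" is not also counted inside a longer name like "/JSomething".
NAME_PATTERN = re.compile(
    rb"/(" + b"|".join(re.escape(n) for n in SUSPICIOUS_NAMES) + rb")(?![A-Za-z0-9])"
)


def scan_pdf_bytes(data: bytes) -> Counter:
    """Count suspicious PDF name tokens in raw file bytes."""
    return Counter(m.group(1).decode("ascii") for m in NAME_PATTERN.finditer(data))


# A tiny made-up fragment: the catalog runs JavaScript when the file opens.
sample = (b"1 0 obj << /Type /Catalog /OpenAction 2 0 R >> endobj\n"
          b"2 0 obj << /S /JavaScript /JS (app.alert('hi')) >> endobj")
print(scan_pdf_bytes(sample))
```

To scan a real file, pass its bytes, for example `scan_pdf_bytes(Path("suspect.pdf").read_bytes())` with `pathlib.Path` and a hypothetical file name. A hit is a reason to look closer, not proof of malware, because legitimate PDFs also use some of these features.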

In some cases, vendors discover vulnerabilities internally or through customers and report the vulnerability themselves. If it is a critical issue, they will generally also release a fix at the same time as the public disclosure.

Responsible disclosure

After researchers find a vulnerability, they may report their findings. Hopefully, they will disclose the information in a manner that will not harm others, meaning they don't just put it in a blog post and tell the world the minute they discover it! Doing so might alert malicious actors to the flaw, who could then use it against innocent victims. Researchers should first contact the vendor to let them know about the problem and give the vendor a chance to fix it. This process is known as responsible disclosure. If flaws are published before the vendor can fix them, attackers may be able to break into systems in the meantime.

Unfortunately, some researchers try to report vulnerabilities to vendors, and vendors do not fix the flaws in a timely manner, or they may not fix them at all. In some cases, vendors ignore security researchers, either intentionally or because they do not have a good process for dealing with these reports. In other cases, they may even threaten to sue the researcher or take other adversarial action. This reaction causes some security researchers to be apprehensive about telling vendors when they find flaws in products.

What motivates security researchers?

Security researchers may have many different motivations for finding and exposing CVEs. First, a security researcher might be genuinely interested in fixing security problems to make the world a better place. As crazy as that sounds, some people actually care about keeping the world safe. Unfortunately, we also all need to make a living to pay our bills and eat, so at some point, a security researcher is generally hoping to make some income from the time they put into security research and finding vulnerabilities.

A security researcher may be hunting for vulnerabilities as part of his or her job on a research team for a security vendor that creates software to block malware and protect systems. When the researcher uncovers a vulnerability, the company adds new features to its products to prevent malware from leveraging the flaw to access systems. Other companies publish security information to demonstrate security knowledge and share information. Examples of company teams that publish security research include Google's Project Zero and Cisco's Talos. I worked on a security team for a vendor, and it was one of my favorite jobs.

In some cases, a researcher may be interested in gaining some notoriety for finding the flaw. Researchers often speak at hacker and security conferences like DEF CON or BSides, where they demonstrate how they broke into systems or software. Many of these researchers are also pentesters (short for penetration testers), who are hired by companies to try to break into systems. More on that in a future blog post. By proving they were able to exploit systems, they may gain some fame, or perhaps win more business by showing their prowess at hacking into systems.

Another reason researchers look for flaws is to ask the company for money in return for reporting the vulnerability. Researchers may reach out directly to companies asking for money when they find programming errors they can use to break into systems, though, as noted, this could have repercussions. Sometimes they go through a third party to sell their findings. The researcher may not know who is buying the exploit, or for what purpose, which could have negative consequences. Some companies run bug bounty programs, either directly, like Google's Vulnerability Reward Program, or through platforms such as HackerOne or Bugcrowd, as a way to let security researchers attack systems with permission and report flaws.

Malicious Motivations

Of course, vulnerabilities are often used to create malware for harmful purposes. The line between security researchers and hackers who exploit systems without permission is sometimes blurry, because the work tends to be very similar. Consider one of the most well-known hacker tools: Metasploit. HD Moore created this tool for pentesters hired by companies to test the security of their systems. Since then, Metasploit has also been used for illegal activities.

Hackers may operate alone or as part of an organized crime group that uses exploits to break into systems and make money. FireEye has a report on one group called FIN7. There are many others around the world, tracked by companies and governments trying to understand and limit their harmful activities.

A security researcher may also be working for a foreign government. Depending on where you live, the word "foreign" has a different meaning. A security researcher in one country may be considered a hacker in another. Countries have already attacked each other using malware. In some cases, these efforts are initially secret, but eventually they tend to be exposed. A fascinating read, Countdown to Zero Day: Stuxnet and the Launch of the World's First Digital Weapon, outlines how the US used such an attack to sabotage Iranian nuclear facilities when they were operating outside of an agreement to prevent the proliferation of nuclear material. According to the New York Times, President Obama facilitated this use of cyber force. Note that Iran is now considered one of the top cyber threats to the US.

Countries are now employing cyber armies. Sometimes these cyber attacks seem invisible, but at other times they affect people's lives in a more significant way. Recently, Israel bombed a building in Gaza alleged to be the base of a Hamas hacking group. News organizations reported that Russia was the source of a form of ransomware named NotPetya, which was used to take down systems not only in Ukraine but throughout the world, similar to WannaCry. The US government indicted Chinese hackers as a result of some of this cyber activity.

Zero Day Malware

A vulnerability that is unknown to the vendor, so that the vendor has had zero days to fix it, is called a zero-day. Bad actors do not always disclose the vulnerabilities they find, because they want to use them to create malware for some nefarious purpose, typically something big. They use these vulnerabilities sparingly and only in extreme cases, because once the public knows about one, it may not work again: the people who create the software should fix it once it becomes public. An attacker is not going to waste a zero-day, because obtaining one is time-consuming and expensive.

That zero-day malware might allow the attacker to get into a system with proprietary information that they can sell to a competitor. In the case of a government, the idea may be that the government wants to use the malware to defend itself. Although zero-day malware sounds exciting, it is not the cause of most data breaches. Some companies claim that a data breach was caused by zero-day malware when, most likely, it wasn't. In many cases, attackers don't even need one. Systems have so many other, easier-to-exploit problems that attackers don't need to "burn a zero-day," as the security industry likes to call it. They can use much more basic and well-known exploits; in other words, CVEs!

So, back to my original question: how many CVEs exist in the systems running in your organization?

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2019

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab