Containers 101 and Why Use Them?

ACM.277 The basics of containers like Docker containers

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

⚙️ Check out my series on Automating Cybersecurity Metrics | Code.

🔒 Related Stories: Container Security | Application Security | Cybersecurity

💻 Free Content on Jobs in Cybersecurity | ✉️ Sign up for the Email List

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

In the last post I wrote about creating a VPC with a NAT and a VPC Endpoint for AWS CodeCommit (or any AWS service request that you want to keep off the Internet). A Lambda function needs to access GitHub from a private network via the NAT.

To test this network, we are going to create a Lambda function. However, I plan to run pretty much all the code I write in a container.

What’s a container?

A container is essentially a way to package up and run some code. You define your container in a container image. Then you can create one or more containers based on that container image.

What’s a container image?

Think of a container image as a template for a container. It is not the actual container that you create from the template. The image is built from a file written in a specific format, and that file defines the characteristics a container will have when it runs. You build an image from that file and all the components that need to go into the image.

The container engine uses the image to run containers based on that template. To run Docker containers, you install Docker (the Docker engine). You write a file in the required format, use the Docker engine to build an image from it, and then use the engine to run containers from that image.

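When you create a Docker image, you write something called a Dockerfile.

A Dockerfile is a text file of instructions. Common ones include:

- FROM: the image to start from.
- WORKDIR: the working directory.
- COPY: files to add to the image.
- RUN: commands to execute while building.
- ENV: environment variables.
- EXPOSE: the port the application listens on.
- CMD or ENTRYPOINT: what to run when a container starts.

You use a Dockerfile to build an image.

You can also build images on top of other images, called base images. A best practice for Docker security and for reducing image size is to use a multi-stage build. In a multi-stage build, earlier stages contain compilers and build tools. The final stage copies in only the artifacts the application needs to run, so those build tools never ship in the final image.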
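To make the multi-stage idea concrete, here is a minimal sketch that prints a two-stage Dockerfile for a small Python application. The application file (app.py), the requirements.txt file, and the Python version are hypothetical placeholders. The sketch also assumes your dependencies don't need extra system libraries at runtime that the smaller -slim image lacks.

```python
def render_multistage_dockerfile(python_version: str = "3.12",
                                 app_file: str = "app.py") -> str:
    """Return the text of a two-stage Dockerfile for a small Python app.

    Stage 1 ("builder") uses the full python image, which includes build
    tools, to install dependencies from requirements.txt into /install.
    Stage 2 starts from the smaller -slim image and copies in only the
    installed packages and the application file.
    """
    lines = [
        # Stage 1: the full image includes compilers and build tools.
        f"FROM python:{python_version} AS builder",
        "WORKDIR /app",
        "COPY requirements.txt .",
        "RUN pip install --no-cache-dir --prefix=/install -r requirements.txt",
        "",
        # Stage 2: the slim image is the one that actually ships.
        f"FROM python:{python_version}-slim",
        "WORKDIR /app",
        "COPY --from=builder /install /usr/local",
        f"COPY {app_file} .",
        "USER nobody",
        f'CMD ["python", "{app_file}"]',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the Dockerfile; redirect the output to a file named Dockerfile to use it.
    print(render_multistage_dockerfile(), end="")
```

You could save the output as Dockerfile and build it with docker build -t my-app . (my-app is a hypothetical tag). The compilers from the first stage never end up in the image you run, and the USER nobody line keeps the application from running as root.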

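You use an image to run a container.

Example command:

docker run --publish 8000:8000 node-docker

This starts a container from the node-docker image and publishes port 8000 on the host to port 8000 inside the container. Requests to port 8000 on the host then reach the application running in the container.

Container registries or repositories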

Developers build and store container images in repositories. Container registries store container images, not the containers themselves. When a developer wants to run a container, often they pull an image from a container registry. They might run a container based on that image, or build on top of that image to create a new image with additional software and configuration changes.
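When you pull an image, the image name tells the container engine which registry and repository to fetch it from. Here is a small sketch that splits an image reference into registry, repository, tag, and digest. It follows a simplified version of Docker's naming conventions:

- A first component containing "." or ":" (or "localhost") is a registry host.
- Otherwise the image comes from Docker Hub.
- Single-name images live under library/.
- The tag defaults to latest.

The ECR-style name with account ID 123456789012 used below is a hypothetical example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ImageRef:
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split 'registry/repo:tag@sha256:...' into parts (simplified rules)."""
    digest = None
    if "@" in ref:
        ref, digest = ref.split("@", 1)

    # The first path component is a registry host only if it looks like one.
    first, sep, rest = ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref  # Docker Hub is the default

    # A tag follows the last ':' that appears after the last '/'.
    tag = None
    colon = remainder.rfind(":")
    if colon > remainder.rfind("/"):
        remainder, tag = remainder[:colon], remainder[colon + 1:]

    # Official Docker Hub images live under the 'library' namespace.
    if registry == "docker.io" and "/" not in remainder:
        remainder = f"library/{remainder}"
    # With no tag and no digest, engines assume the 'latest' tag.
    if tag is None and digest is None:
        tag = "latest"
    return ImageRef(registry, remainder, tag, digest)


if __name__ == "__main__":
    for name in ["python:3.12-slim",
                 "123456789012.dkr.ecr.us-east-1.amazonaws.com/my-app:v1"]:
        print(parse_image_ref(name))
```

This is why docker pull python:3.12-slim goes to Docker Hub, while an image name that starts with an ECR hostname goes to that private registry.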

Amazon has a container registry service called Elastic Container Registry (ECR) which I’ll be using in another post.

Amazon ECR has features such as encryption of images at rest, access control, image scanning for vulnerabilities, and lifecycle policies that automatically clean up old images.
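As an example of the lifecycle feature, here is a minimal sketch that builds an ECR lifecycle policy document expiring untagged images some number of days after they were pushed. The 14-day default is an arbitrary assumption. Each rule pairs a selection (which images) with an action ("expire" removes them).

```python
import json


def untagged_expiry_policy(days: int = 14) -> str:
    """Return ECR lifecycle policy JSON that expires old untagged images."""
    if days < 1:
        raise ValueError("days must be at least 1")
    policy = {
        "rules": [
            {
                "rulePriority": 1,
                "description": f"Expire untagged images older than {days} days",
                # Which images the rule applies to.
                "selection": {
                    "tagStatus": "untagged",
                    "countType": "sinceImagePushed",
                    "countUnit": "days",
                    "countNumber": days,
                },
                # What to do with the selected images.
                "action": {"type": "expire"},
            }
        ]
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    print(untagged_expiry_policy())
```

You could save the output as policy.json and apply it with aws ecr put-lifecycle-policy --repository-name my-app --lifecycle-policy-text file://policy.json (my-app is a hypothetical repository name).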

Public versus private container registries

A public container registry is a place where developers can upload images and anyone can freely download images that someone else created and uploaded. There are many examples of images containing malware being uploaded to public registries, so be careful which images you use from them.

Organizations that want to ensure the software they run is secure will maintain a private container registry. This is a registry that only authorized individuals within the organization can update. Organizations often have secure processes for interacting with private registries, which maintain code and configuration integrity. They may also use network restrictions that limit access to authorized IP ranges. In addition, some cloud services offer security scanning for private registries to help detect vulnerabilities or malware in images.
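A private registry only helps if deployments actually use it. Here is a minimal sketch of a pre-deployment check, for example something a CI pipeline might run, that flags two kinds of image references:

- Images that don't come from an approved private registry.
- Images that aren't pinned by digest. Digest pinning is a common additional control, because a tag such as latest can later be moved to point at a different image.

The allowlisted hostname with account ID 123456789012 is hypothetical.

```python
# Hypothetical allowlist: replace with your own private registry host(s).
ALLOWED_REGISTRIES = frozenset({"123456789012.dkr.ecr.us-east-1.amazonaws.com"})


def registry_of(ref: str) -> str:
    """Return the registry host an image reference points to (simplified)."""
    name = ref.split("@", 1)[0]
    first = name.split("/", 1)[0]
    if "/" in name and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"  # no registry host given: Docker Hub is the default


def check_image(ref: str, allowed: frozenset = ALLOWED_REGISTRIES) -> list[str]:
    """Return a list of policy problems for an image reference (empty means OK)."""
    problems = []
    registry = registry_of(ref)
    if registry not in allowed:
        problems.append(f"registry {registry} is not approved")
    # Pinning by digest means the exact image that was scanned is the one that runs.
    _, _, digest = ref.partition("@")
    if not digest.startswith("sha256:"):
        problems.append("image is not pinned by a sha256 digest")
    return problems


if __name__ == "__main__":
    for image in ["python:3.12-slim",
                  "123456789012.dkr.ecr.us-east-1.amazonaws.com/my-app@sha256:" + "a" * 64]:
        print(image, "->", check_image(image) or "OK")
```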

What’s the difference between an application running in a container versus an application that is not running in a container?

An application that is not running in a container gets the libraries it uses from files stored on the host, and those files are shared by all the other applications on the host.

This causes problems when you need to upgrade a library shared by many applications. For example, I showed you how to deal with different versions of Python in this post.

I might want to run the command “python”, but one application needs Python 3.10 and another needs Python 3.14. How do you deal with that? If you put the code in containers, you can put Python 3.10 in the container for one application and Python 3.14 in the container for the other. When the applications run in containers, each one references its own Python installation, so the two don't conflict. You can also upgrade the version of Python for one application without affecting the other.

You may also have applications that could be affected by precedence issues, such as which python executable appears first on the PATH. In that case you want to isolate them and enforce the use of a particular version of Python.
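Because each container carries its own interpreter, a container built from python:3.10-slim and one built from python:3.14-slim can run side by side on the same host. Here is a minimal sketch of a startup check an application could run inside its container to fail fast if it isn't running on the version it was built for. The EXPECTED_PYTHON environment variable is a hypothetical name you might set with ENV in the Dockerfile.

```python
import os
import sys


def require_python(expected: str) -> None:
    """Raise RuntimeError unless this interpreter matches MAJOR.MINOR in `expected`."""
    major, minor = (int(part) for part in expected.split(".")[:2])
    actual = sys.version_info[:2]
    if actual != (major, minor):
        raise RuntimeError(
            f"expected Python {major}.{minor}, running {actual[0]}.{actual[1]}"
        )


if __name__ == "__main__":
    # EXPECTED_PYTHON is hypothetical, e.g. set with: ENV EXPECTED_PYTHON=3.10
    expected = os.environ.get("EXPECTED_PYTHON")
    if expected:
        require_python(expected)
    print("Running on Python", sys.version.split()[0])
```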

How is a container different from a virtual machine?

A container is different from a virtual machine in that multiple containers running on the same machine share the host operating system's kernel. Each virtual machine, by contrast, runs a complete operating system, including its own kernel, on virtual hardware provided by a hypervisor. Virtual machines don't share operating system components with each other.

That means virtual machines are heavier to operate, take longer to load, and generally have a larger attack surface. On the other hand, virtual machines can be more isolated since they don’t share operating system components.
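One quick way to see the shared kernel for yourself is to ask which kernel a process is running on. Here is a minimal sketch. The file name kernel.py in the usage note below is a hypothetical choice.

```python
import platform


def kernel_info() -> str:
    """Describe the kernel this process is running on, such as 'Linux 6.x.y'.

    A container does not boot its own kernel, so inside a Linux container this
    reports the host's kernel. A virtual machine boots its own guest kernel,
    so inside a VM this reports the guest's kernel instead.
    """
    return f"{platform.system()} {platform.release()}"


if __name__ == "__main__":
    print(kernel_info())
```

To try it, run the script on a Linux host. Then run it inside a container, for example with docker run --rm -v "$PWD":/src python:3.12-slim python /src/kernel.py. Both should print the same kernel release. On Docker Desktop for macOS or Windows, containers run inside a lightweight Linux VM, so you'll see that VM's kernel rather than your desktop's.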

Often single processes will run in containers to carry out a single task.

On the other hand, a virtual machine will often be used to run an entire application or multiple applications. I use virtual machines for my penetration testing environment. Virtual machines are often used to reverse engineer malware, which keeps the malware from infecting the primary operating system. At one place I worked, we used virtual machines as development environments because the virtual machine had all the necessary software installed for any new developer. If something happened to your development environment, it could be restored from a backup.

What’s the difference between a microservice and a container?

You may have heard the term microservices. Generally, each microservice that carries out a specific function within an application runs in its own container. However, a microservice and a container are not one and the same. A microservice is a single, independently deployable service within an application, and an entire application is made up of many microservices that work together. A microservice can run in a container, but it doesn't have to.

A container may run a microservice, but it can also run an entire application. For example, I was having problems using Metasploit, a security testing tool, in a particular environment. I ended up building it from GitHub source in a container to avoid dependency conflicts with other things on my system. Running it in a container also let me limit how much Metasploit could interact with my system.

It would not be typical to install multiple applications into a single container. If you find yourself doing that, ask yourself whether the applications should be in separate containers, or whether you need a virtual machine instead.

What types of containers exist?

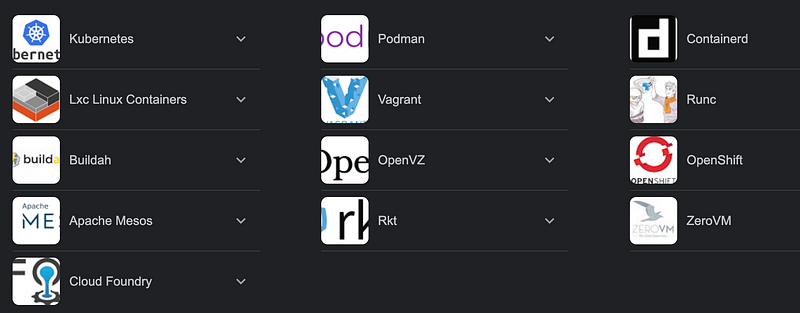

You may have heard of Docker containers, but containers existed as a Linux construct, built on kernel features such as namespaces and control groups (cgroups), before Docker existed. Though most people still use Docker, some alternatives exist. The different types of containers refer more to the underlying container engines and runtimes that run the containers.

If you search for Docker alternatives in Google this is what comes up:

What’s the difference between a container engine and a container runtime?

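The container engine is the whole platform that provides tools to build images and manage containers. Docker, for example, includes a number of tools and features related to building, sharing, and running containers.

The container runtime is either a part of the container engine or a standalone component. It works with the operating system kernel to create and start containers, setting up their isolation (namespaces), resource limits (cgroups), and filesystem. For example, Docker hands containers off to containerd, which uses the runc runtime.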

How do I run an application using multiple containers?

You can run commands to execute a single container. Often, however, organizations use a container orchestration system to run multiple containers associated with an application.

What is a container orchestration system?

A container orchestration system helps run and manage all the containers used to run an entire application. When a container stops working, the orchestration system terminates it and starts a replacement. In some cases traffic is load-balanced across multiple containers so that no single container is overloaded. Containers may also be scaled up or down based on demand.
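Orchestrators such as Kubernetes work by repeatedly comparing a desired state, such as "three healthy replicas", with the actual state and acting on the difference. This is often called a reconcile loop. Here is a toy Python model of that idea. It is not any orchestrator's API; the class and method names are hypothetical, and health is just a flag.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Container:
    id: str
    healthy: bool = True


@dataclass
class Orchestrator:
    """Toy model of an orchestrator's reconcile loop (hypothetical, not a real API)."""
    desired_replicas: int
    running: list[Container] = field(default_factory=list)
    _ids: itertools.count = field(default_factory=lambda: itertools.count(1), repr=False)

    def start_container(self) -> Container:
        container = Container(id=f"c{next(self._ids)}")
        self.running.append(container)
        return container

    def reconcile(self) -> None:
        # 1. Terminate containers that failed their health check.
        self.running = [c for c in self.running if c.healthy]
        # 2. Scale up: start replacements until the desired count is reached.
        while len(self.running) < self.desired_replicas:
            self.start_container()
        # 3. Scale down: stop extra containers if demand dropped.
        del self.running[self.desired_replicas:]


if __name__ == "__main__":
    orch = Orchestrator(desired_replicas=3)
    orch.reconcile()
    orch.running[1].healthy = False   # simulate a container that stops working
    orch.reconcile()
    print([c.id for c in orch.running])
```

A real orchestrator runs this comparison continuously against live health checks and metrics, but the shape of the logic is the same: observe, compare, correct.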

Applications typically send traffic to domain names that route the traffic to a particular container. As containers come up and go down, those domain names must keep pointing to valid container IP addresses. If a container is no longer accessible, the container orchestration system ensures the domain name no longer points to that container. If a new container comes up, the rest of the application needs to be able to reach it, so the DNS records are adjusted accordingly.
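The service discovery side can be sketched the same way. Here is a toy registry standing in for the DNS records an orchestrator keeps up to date. Containers are registered when they come up and removed when they go away, and callers get addresses in round-robin order so traffic is spread across live containers. The service name and the 10.0.1.x addresses are hypothetical.

```python
class ServiceRegistry:
    """Toy service discovery: maps a service name to live container addresses."""

    def __init__(self) -> None:
        self._endpoints: dict[str, list[str]] = {}
        self._counters: dict[str, int] = {}

    def register(self, service: str, address: str) -> None:
        # Called when a new container for the service comes up.
        self._endpoints.setdefault(service, []).append(address)

    def deregister(self, service: str, address: str) -> None:
        # Called when a container stops or fails, so no traffic is sent to it.
        addresses = self._endpoints.get(service, [])
        if address in addresses:
            addresses.remove(address)

    def resolve(self, service: str) -> list[str]:
        return list(self._endpoints.get(service, []))

    def next_endpoint(self, service: str) -> str:
        # Round-robin across whichever containers are currently registered.
        addresses = self._endpoints.get(service)
        if not addresses:
            raise LookupError(f"no live containers for {service}")
        i = self._counters.get(service, 0)
        self._counters[service] = i + 1
        return addresses[i % len(addresses)]


if __name__ == "__main__":
    reg = ServiceRegistry()
    reg.register("api", "10.0.1.10")
    reg.register("api", "10.0.1.11")
    print([reg.next_endpoint("api") for _ in range(4)])
    reg.deregister("api", "10.0.1.10")
    print(reg.resolve("api"))
```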

Container orchestration systems do a lot more than that to manage containers for an application. They can be complicated to set up and operate.

One of the most popular container orchestration systems is Kubernetes.

Docker Swarm is another container orchestration system.

AWS has another container orchestration system called Elastic Container Service.

Focusing on containers only with AWS Lambda, Fargate, or Batch

Instead of managing Kubernetes or some other complex container management system along with all the underlying hosts, you can run Docker containers in AWS Lambda, AWS Fargate, or AWS Batch. With those services, you can quickly spin up a running container, and AWS does much of the heavy lifting for you.

Use AWS Lambda when you have a short-lived process (a single Lambda invocation can run for at most 15 minutes).

Use AWS Fargate when you need longer-running containers for applications.

Use AWS Batch for non-interactive batch jobs that process data.

Run a Docker container in a Lambda function

You can write code and drop it into a Lambda function, or you can put your code in a container and add it to a Lambda function.

Why would I want to use a container? If I build a container, I should be able to test it standalone, outside of Lambda, to some degree, and then test it within Lambda. I can also standardize how I write and deploy code, whether that code is deployed to Lambda, AWS Batch, Fargate, Kubernetes, or Elastic Container Service (ECS).
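Here is a minimal sketch of what that can look like, assuming a Python function in a file named app.py. Both the file name and the greeting logic are hypothetical. With AWS's Lambda base image for Python, the Dockerfile would start FROM public.ecr.aws/lambda/python:3.12, copy app.py into ${LAMBDA_TASK_ROOT}, and set CMD ["app.handler"] so Lambda knows which function to call. Because the handler is a plain function, you can also test it standalone.

```python
import json


def handler(event, context):
    """Entry point Lambda calls for each invocation.

    'event' is the invocation payload, already parsed from JSON;
    'context' carries runtime information and is unused in this sketch.
    """
    name = event.get("name", "world") if isinstance(event, dict) else "world"
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Standalone test outside Lambda: call the handler directly.
    print(handler({"name": "container"}, None))
```

The AWS base images include the Lambda Runtime Interface Emulator, so you can also test the container itself before deploying. Run the image with docker run -p 9000:8080 my-lambda (a hypothetical tag). Then POST a JSON event to http://localhost:9000/2015-03-31/functions/function/invocations.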

What security concerns do we have for containers?

I wrote about some of the threats you should consider when running containers here. I will try to cover these in more detail as time goes on.

In the next post I’ll create a simple container that clones a GitHub repository.

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2024

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity for Executives in the Age of Cloud

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab

Need Help With Cybersecurity, Cloud, or Application Security?

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

🔒 Request a penetration test or security assessment

🔒 Schedule a consulting call

🔒 Cybersecurity Speaker for Presentation

Follow for more stories like this:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

❤️ Medium: Teri Radichel

❤️ Sign Up For Email

❤️ Twitter: @teriradichel


❤️ Facebook: 2nd Sight Lab

❤️ YouTube: @2ndsightlab