Code Llama 2 Paper Review. Code Llama: Open Foundation Models for Code.

We release Code Llama, a family of large language models for code based on Llama 2 providing state-of-the-art performance among open models, infilling capabilities, support for large input contexts, and zero-shot instruction following ability for programming tasks. We provide multiple flavors to cover a wide range of applications: foundation models (Code Llama), Python specializations (Code Llama — Python), and instruction-following models (Code Llama — Instruct) with 7B, 13B and 34B parameters each. All models are trained on sequences of 16k tokens and show improvements on inputs with up to 100k tokens. 7B and 13B Code Llama and Code Llama — Instruct variants support infilling based on surrounding content. Code Llama reaches state-of-the-art performance among open models on several code benchmarks, with scores of up to 53% and 55% on HumanEval and MBPP, respectively. Notably, Code Llama — Python 7B outperforms Llama 2 70B on HumanEval and MBPP, and all our models outperform every other publicly available model on MultiPL-E. We release Code Llama under a permissive license that allows for both research and commercial use.

Okay, first things first, why do you need Code Llama 2 ( or equivalents, which will probably show up on the market probably reasonably soon?) Answer: to speed up software development. There is a lot to the art of software engineering ( as a tribute to Bob, please refer to Code Complete for details) . Now, think about your recent interaction with ANY system — be it signing up for university classes, paying tuition, scheduling an online appointment for your kid’s doctor, buying an airplane ticket — whatever it is — you used computers to give them your data and your money and ( hopefully) got something back. The majority of tech is doing just that — collecting the data and ( maybe) giving something back. There are, of course, other applications, like launching spaceships, digging into the earth, analyzing oceans’ water and weather, and so forth. Again, data input -> data output. The “service” or “vertical” or “domain” does not really matter. It will start to matter when cyber mechanics are joined with the data in — data out, and that’s when we will start to see rapid changes in our environments and put traffic cones on self-driving cars .

Now, smart people who will build those systems will be aided with tools like Code Llama 2. Therefore, let’s review how the theory behind it works, what it can and cannot do, and ( if the installation will finally finish, maybe even build our own #evilai for entertaining purposes. )

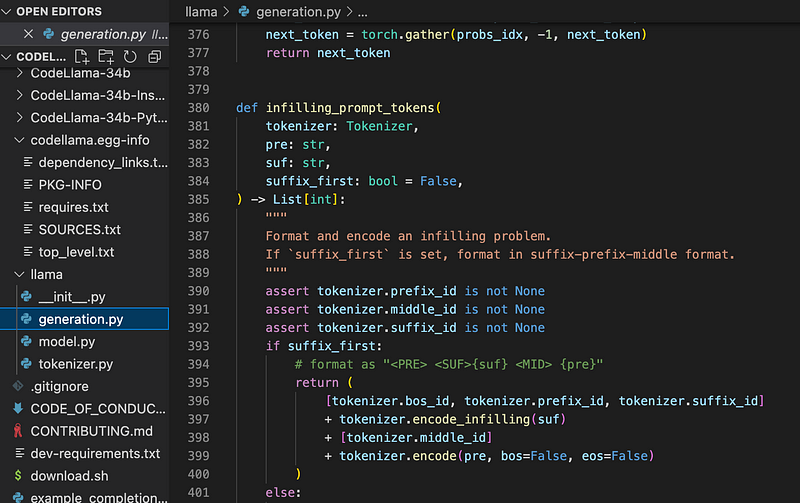

Use case #1. Infilling. What is it and why do you need it? It speeds up develiopment by autofiling ( guessing) what is next. You have seen it in google search, visual studio intellisense ( sirca 2006) , and the famous “duck” on your phone.

Code infilling is the task of predicting the missing part of a program given a surrounding context. Applications include code completion at the cursor’s position in code IDEs, type inference and generation of in-code documentation (e.g., docstrings).

Usefulness: it depends. I am sure there is a Infilling Product Manager’s powerpoint somewhere at Meta where they specified by how much each individual developer productivity has increased to validate the existence of the feature. How will it work when the AI will code the code? Does AI programer need code infilling feature? The philosophical discussion of robots job vs human job to follow…

Use Case #2. Long context fine tunining. I was trying to think of how to explain to an averge humans what long context is and why it is important. If you go search on google right now about it, you will drawn in tech jargon. So, robots to the rescue, here is what ChatGPT has to say about long context :

Alright, imagine you’re building a tower with your colorful blocks. Each block is like a word in a story. Now, some stories are short, like “The cat sat on the mat.” That’s easy to remember, right? It’s like a small tower of blocks.

But what if the story is really, really long, like a fairy tale with a prince, a dragon, and a treasure? That’s like a super tall tower of blocks! It’s harder to remember everything that happens in the story, just like it’s harder to see all the blocks in a tall tower at once.

In computer land, we have smart helpers called “machines” that read stories and answer questions about them. These machines are good at reading short stories but can get confused with long fairy tales. They might forget about the dragon by the time they read about the treasure!

So, people who are like toy-makers for machines are trying to make them better at remembering long stories. They use special tricks so the machine can think about the dragon and the treasure at the same time, just like you can see all the blocks if you step back from your tall tower.

And that’s what “long context” means. It’s like a really long story or a super tall tower of blocks that the machine is trying to understand all at once! 🐉🏰📚

Usefulness: it depends. One of the ( future) capabilities of AI I am personally fascinated by is the ability to “see” everything everywhere all at once. The question is — can a human help machine in fine tuning long context? Will we need a special field of mental health practitioners who help software developers working on “long context” to adjust and integrate to human society?

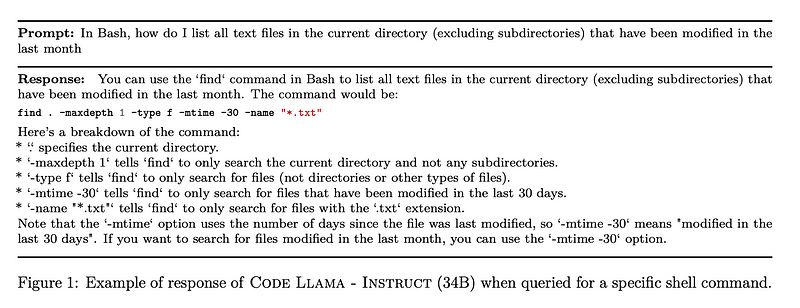

Use case #3. Instruction fine tuning. Why is it usefull — it is nice to have an assistant who tells you step by step what to do, exlains how it is done and produces ( hopefully) valid outcome. There is a lot of potenial in this one in computer science education, training, and such. If “sympathy” functions are added, you basically can get your favorite teacher in a digital form who will patiently train you on whatever and help with whatever ( well, since this is code llama, this will be your coding tutor).

The paper also goes into a discussion of #evilai writing bad code by being a bad actor. However, they state that there is plenty of malicious codes available on the dark web, so…I personally see toxicity, bias, and truthfulness as applied to generating the source code itself as a bit contradictory. The machine will write the code to create itself, but if it is building itself in the image of its creator, then the creator needs to take a hard, long look at its own toxicity, bias, and truthfulness. The machine does not know what “good” or “bad” is. In fact, how would you even explain to it what “malicous” code it?

In summary, Meta engineers created themselves a cheat code to pass coding interviews. Not sure what and how Leetcodewill battle against it.

If you are a CEO, CTO, engineering manager, etc — should you fire your human developers and replace them with AI developers to write your code base? NO. This technology is not yet capable of replacing humans, it aids humans in development, it certainly helps to pass irrelevant coding interviews full of obscure tricks no one will ever use in a real working environment. Of course, if your KPI is how many developers failed your interviews because of the random trick questions, this tech might help. If you are actually aiming to make money with technology, you still need humans.