Build Scenario-Specific Chatbot Using OpenAI And Pinecone

In this article, I’ll walk you through building a scenario-specific chatbot using OpenAI and Pinecone.

When we build a chatbot on custom data, all users typically get responses from the same set of documents. Now, what if you want to draw a line between users, or between different scenarios, under the constraint that a single index is shared by everyone?

The reason behind this use case is to provide a more personalized and engaging experience to the end user.

Let’s dig deeper to understand how we can achieve this.

Setting Up Pinecone

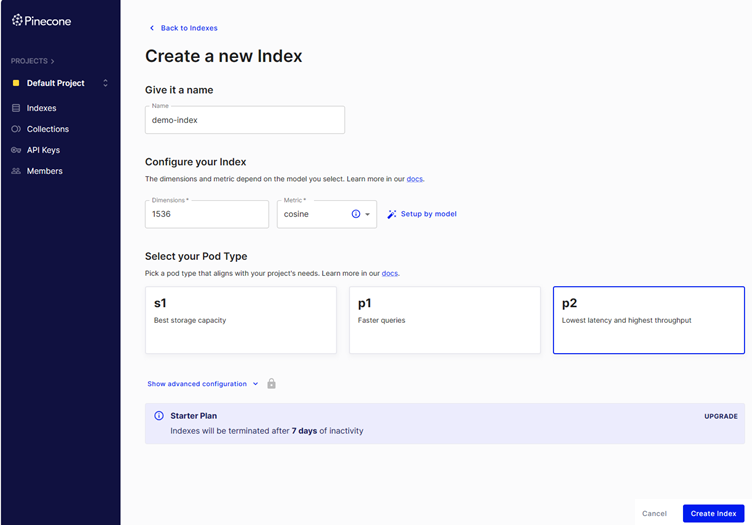

I’ll be using Pinecone to save and index my data. If you are new to Pinecone, feel free to visit https://app.pinecone.io/ and create your own empty index. Here is how the index creation screen will look like:

Once the index is created, you can grab the API key and the environment value from the Pinecone portal.

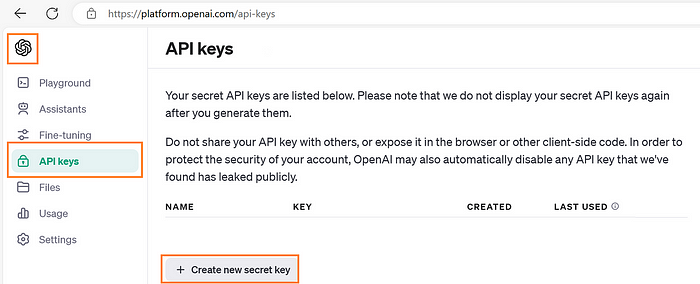

Grab OpenAI API Key

To get the OpenAI key, you need to go to https://openai.com/, login and then grab the keys using highlighted way:
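You may prefer not to hard-code these keys in your script. One option is to read them from environment variables. The following is a minimal sketch. It assumes you have exported the keys under the hypothetical names PINECONE_KEY, PINECONE_ENV and OPENAI_API_KEY, which match the Python variable names used later in this article.

```python
import os

# Hypothetical environment variable names, chosen to match the Python
# variables used throughout this walkthrough.
REQUIRED_KEYS = ("PINECONE_KEY", "PINECONE_ENV", "OPENAI_API_KEY")


def load_keys(env=os.environ):
    """Return a dict of the required keys, failing fast if any are missing."""
    missing = [name for name in REQUIRED_KEYS if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {name: env[name] for name in REQUIRED_KEYS}
```

Calling `keys = load_keys()` at startup gives you all three values. If any are missing, it raises a clear error that lists every variable you forgot to set, rather than failing later inside an API call.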

Creating The Vector Store

The very first thing we need to do is initialize Pinecone, which can be done as follows:

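```python
import pinecone

# pinecone.init() is the pinecone-client 2.x API; version 3.0 replaced it
# with the Pinecone class. PINECONE_KEY and PINECONE_ENV come from the portal.
pinecone.init(
    api_key=PINECONE_KEY,
    environment=PINECONE_ENV,
)
```

Next, we will create an index named demo-index. If you would rather not create the index through the portal, you can do it in code as well.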

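```python
index_name = "demo-index"

# Create the index only if it does not exist yet. 1536 is the vector size
# produced by OpenAI's text-embedding-ada-002 embedding model.
if index_name not in pinecone.list_indexes():
    pinecone.create_index(
        index_name,
        dimension=1536,
        metric="cosine",
    )
```

Next, we need to connect to the index: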

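```python
from langchain_community.vectorstores import Pinecone
from langchain_openai import OpenAIEmbeddings

# Keep the Index object in its own variable instead of overwriting index_name.
index = pinecone.Index(index_name)

# "text" is the metadata key under which each entry's raw text is stored.
vectorstore = Pinecone(index, OpenAIEmbeddings(api_key=OPENAI_API_KEY), "text")
```

Now that everything is in place, we are ready to push data to Pinecone. For simplicity, I’m using just a few lines of text. If you want to see how to do this for a text file, refer to my video (link at the end of this post).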

```python
# The same definition with opposite conclusions, stored in two namespaces.
vectorstore.add_texts(
    ["Hunger is defined as a condition in which a person does not have the physical or financial capability to eat sufficient food to meet basic nutritional needs for a sustained period. It is very much related to poverty."],
    namespace="Hunger",
)
vectorstore.add_texts(
    ["Hunger is defined as a condition in which a person does not have the physical or financial capability to eat sufficient food to meet basic nutritional needs for a sustained period. It is not at all related to poverty."],
    namespace="Poverty",
)
```

NOTE: Along with each text, I’m passing a namespace, and that is what segregates the data for us. The two texts deliberately give opposite answers. The response to the same question therefore tells us which namespace was searched.
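To make the namespace idea concrete, here is a toy, in-memory stand-in for a single index. It is not Pinecone's implementation. It is only a sketch that uses hand-made 3-dimensional vectors instead of 1536-dimensional OpenAI embeddings, and shortened versions of the two texts. Every record lives in the same index, but a query only scores the records inside the namespace it names. Those records are ranked by cosine similarity, as with `metric="cosine"` above.

```python
import math
from collections import defaultdict


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


class ToyIndex:
    """One shared index whose records are partitioned by namespace."""

    def __init__(self):
        self._records = defaultdict(list)  # namespace -> [(vector, text)]

    def upsert(self, vector, text, namespace=""):
        self._records[namespace].append((vector, text))

    def query(self, vector, top_k=1, namespace=""):
        # Only records in the requested namespace are considered at all.
        scored = [(cosine(vector, v), t) for v, t in self._records[namespace]]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:top_k]]


index = ToyIndex()
# Identical vectors on purpose: only the namespace decides which text comes back.
index.upsert([1.0, 0.2, 0.0], "Hunger is very much related to poverty.", namespace="Hunger")
index.upsert([1.0, 0.2, 0.0], "Hunger is not at all related to poverty.", namespace="Poverty")

question_vector = [0.9, 0.3, 0.1]
print(index.query(question_vector, namespace="Hunger"))   # ['Hunger is very much related to poverty.']
print(index.query(question_vector, namespace="Poverty"))  # ['Hunger is not at all related to poverty.']
```

The same question vector returns different answers depending only on the namespace. That is the behavior the chatbot relies on to keep scenarios apart while sharing one index.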

Configure The Retriever For Question And Answer

Here, we need to import a few more packages:

```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import (
    ConfigurableField,
    RunnablePassthrough,
)
from langchain_openai import ChatOpenAI
```

Next, we need to create a prompt template and set the model parameters:

```python
template = """Answer the question based only on the following context:
{context}

Question: {question}
"""
prompt = ChatPromptTemplate.from_template(template)

# temperature=0 reduces randomness, so answers stay close to the context.
model = ChatOpenAI(openai_api_key=OPENAI_API_KEY, temperature=0)

retriever = vectorstore.as_retriever()
```

Next comes the most important part, where we configure our retriever to accept the namespace filter:

```python
# Expose the retriever's search_kwargs as a runtime-configurable field with
# the id "search_args", so each call can pass its own namespace.
configurable_retriever = retriever.configurable_fields(
    search_kwargs=ConfigurableField(
        id="search_args",
        name="Search Args",
        description="The search args to use",
    )
)

chain = (
    {"context": configurable_retriever, "question": RunnablePassthrough()}
    | prompt
    | model
    | StrOutputParser()
)
```

In the above code, we make the retriever's search arguments configurable and then construct the chain from it.

Note that we are using the | operator to pass the output of one step to the next. In simple terms, the prompt expects its input as key-value pairs, where context and question are the keys. The dictionary at the start of the chain runs both of its entries on the same input question. Context comes from the retriever, and question comes from RunnablePassthrough, which passes the question through unchanged to the prompt and then the model. You can read more about RunnablePassthrough in the LangChain documentation.
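If the | syntax feels magical, the following toy re-implementation may help. It is a simplified sketch, not LangChain's actual Runnable code. Each step wraps a function, and | chains two steps so that the first one's output becomes the second one's input. A dict of steps runs every entry on the same input and collects the results into a dict. That is how both context and question are produced from a single question string. The `Step` class, `fake_retriever` and `fake_prompt` are hypothetical stand-ins.

```python
class Step:
    """Minimal stand-in for a LangChain Runnable."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        # step_a | step_b: feed step_a's output into step_b.
        other = coerce(other)
        return Step(lambda value: other.invoke(self.invoke(value)))

    def __ror__(self, other):
        # Handles {"a": ..., "b": ...} | step, where the dict comes first.
        return coerce(other) | self


def coerce(obj):
    """Turn a dict of steps into one step that runs each entry on the same input."""
    if isinstance(obj, Step):
        return obj
    if isinstance(obj, dict):
        steps = {key: coerce(value) for key, value in obj.items()}
        return Step(lambda value: {k: s.invoke(value) for k, s in steps.items()})
    raise TypeError(f"Cannot compose {type(obj).__name__}")


passthrough = Step(lambda value: value)  # plays the role of RunnablePassthrough
fake_retriever = Step(lambda q: ["Hunger is not at all related to poverty."])
fake_prompt = Step(lambda d: f"Context: {d['context']}\nQuestion: {d['question']}")

chain = {"context": fake_retriever, "question": passthrough} | fake_prompt
print(chain.invoke("Is hunger related to poverty?"))
```

The retrieved list is inserted into the prompt as-is. This mirrors the real chain above, where the retriever's list of documents is placed directly into {context}.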

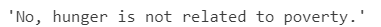

The final thing, we need to do is to invoke the chain:

chain.invoke(

"Is hunger related to poverty?",

config={"configurable": {"search_args": {"namespace": "Poverty"}}},

)and here is the output:
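In a real application you would probably not hard-code the namespace in each call. One way to handle it is to build the config dict per request from whichever scenario or user is active. Here is a minimal sketch of that idea. The `SCENARIO_NAMESPACES` mapping and the `config_for` helper are hypothetical and not part of LangChain. Only the config shape is taken from the call above.

```python
# Hypothetical mapping from a scenario (or user id) to its Pinecone namespace.
SCENARIO_NAMESPACES = {
    "hunger-desk": "Hunger",
    "poverty-desk": "Poverty",
}


def config_for(scenario):
    """Build the runtime config that points the retriever at one namespace.

    The "search_args" key must match the ConfigurableField id given to the retriever.
    """
    try:
        namespace = SCENARIO_NAMESPACES[scenario]
    except KeyError:
        raise ValueError(f"Unknown scenario: {scenario!r}") from None
    return {"configurable": {"search_args": {"namespace": namespace}}}


print(config_for("poverty-desk"))
```

With the chain from this article, a call would then look like `chain.invoke("Is hunger related to poverty?", config=config_for("poverty-desk"))`. An unknown scenario raises an error instead of falling back to a default namespace. This avoids silently answering one user from another user's documents.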

Conclusion

I hope you find this walkthrough useful.

If anything is unclear, I recommend watching my video recording, which demonstrates this flow end to end.

Happy learning!