AWS Lambda and Batch jobs for Steps in a Process

ACM.39 Serverless components to construct secure architectures

Part of my series on Automating Cybersecurity Metrics and Cloud Security Architecture. Lambda. The Code.


In the last post I covered creating a key alias for KMS keys so they are easier to identify when you’re reviewing them in the AWS console or in a list output by some automation.

Adding a KMS Key Alias With CloudFormation: ACM.38 Giving our KMS key a user-friendly name

In this post we’ll step back to a higher level and think through our batch job architecture.

Breaking it down

I’ve always liked to break systems down into pieces and make sure each piece can run independently of the others where possible. I like to have separate, testable components for different functions and a clean separation of concerns.

We’ll definitely be using that approach in the architecture I’m building now, to the extreme. AWS serverless components should make this much, much easier than running our own Kubernetes deployment or other complicated infrastructure. At the same time, we can still lock down networking, IAM permissions, and encryption, maybe even better than if we tried to run our own infrastructure.

Is serverless secure?

We may lose a bit of control with serverless technologies, but your legal team should review the contract to ensure the cloud provider is held responsible for securing its parts of the system and for any related data breach or security incident costs. Your security team should review the security information the cloud provider publishes to ensure it is sufficient, and perform security assessments as far as the access the provider offers allows. Never assume an environment is secure just because it belongs to a big company (think SolarWinds).

These assessments need to continue over time as things change. AWS recently got a new CEO, for example, and with that could come different decisions about security and how systems get rolled out. Policies enforced in the past may change. (I have no insight into this, nor am I saying that they have changed.)

One thing I have noticed over time is that one of my favorite white papers on AWS security processes has been archived. It was one of the resources that convinced me AWS took security seriously. It’s now hard to find the kind of concrete answers about how the fundamental aspects of security are currently implemented that were published in the past; the security documentation seems more scattered. I wonder about the integrity of the underlying architecture, given all the disparate moving parts and the new developers and architects at the company who might not understand the fundamentals on which the platform was originally built.

For my purposes, I run a small company, have reviewed the AWS documentation over time, and continue to test the platform as I’m doing now. I also test and teach security for other cloud providers (GCP and Azure). I don’t have as much leverage as large companies do, but for my purposes, and based on the research I’ve done, AWS seems reasonably secure. I find issues here and there, but recent experiences with another cloud provider concerned me much more. Hopefully that provider is improving, since it is also under new leadership. New leadership can go either way, but I suspect the new leadership there will tighten things up a bit.

If you want to know more about serverless security, I gave a talk on that at RSA 2020. You can find the link here:

I like serverless because I can lock down individual components with zero-trust policies and focus on the functionality I am building (with the exception of bugs and cryptic error messages that slow me down). I’d rather spend less time on architecture and more time on getting the system working. As you can see, the policies are tricky enough without having to add managing Kubernetes on top of that to coordinate and maintain container infrastructure.

Microservices

If you’re familiar with microservices, you know they are used to break a larger system apart into smaller pieces. Some of my students have mistakenly equated microservices with containers, but they are not one and the same. Microservices are often implemented with containers, but the point of microservices is splitting a larger system into smaller components. Sam Newman wrote one of my favorite books on the topic, Building Microservices, if you want to dive deeper.

People like to debate the definitions of terms, but for my purposes, I want to break my system into separate tasks. I want an attacker to need access to more than one component to authenticate, kick off a batch job, or access sensitive data. We’ll see how successful I am as we move along.

AWS Lambda

AWS Lambda functions came about after I read that book on microservices, but they are an offshoot of that movement. Under the hood they run in isolated, lightweight execution environments (AWS built its Firecracker microVMs for this), but they function differently from a container running a long-lived service. A Lambda function typically responds to an event, executes long enough to complete a task, and then stops.

Lambda has some limitations, most notably a maximum execution time of 15 minutes per invocation, that make it less than ideal for long-running processes. But it is great for code that responds to an event and executes quickly.
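To make the event-driven model concrete, here is a minimal Python handler. Lambda calls the configured handler function with an `event` and a `context` object; the `{"items": [...]}` event shape and the summing task are hypothetical stand-ins for real work.

```python
import json


def lambda_handler(event, context):
    """Respond to one event, do one small task, and return.

    The event shape ({"items": [...]}) is a hypothetical example.
    """
    items = event.get("items", [])
    # The single, quick unit of work this function exists for.
    total = sum(int(item) for item in items)
    # Once the handler returns, Lambda freezes the execution environment
    # until the next event (or eventually discards it).
    return {"statusCode": 200, "body": json.dumps({"count": len(items), "total": total})}


if __name__ == "__main__":
    # Local usage example; in Lambda the runtime supplies event and context.
    print(lambda_handler({"items": [1, 2, 3]}, None))
```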

We could run a batch job in a Lambda function, but it would have to be a short-lived process, or a restartable process completed across multiple Lambda invocations.
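One way to make a Lambda-based job restartable is to checkpoint progress and return a cursor that the next invocation resumes from. The sketch below uses the Lambda context method `get_remaining_time_in_millis()` to stop before the timeout; the event fields, the 10-second safety margin, `process_item`, and the `FakeContext` test stand-in are all assumptions. In practice you would likely keep the items and cursor in durable storage such as S3 or DynamoDB rather than passing them in the event.

```python
SAFETY_MARGIN_MS = 10_000  # assumed buffer so the handler can return cleanly


def process_item(item):
    # Hypothetical per-item work; replace with the real step.
    return item * 2


def lambda_handler(event, context):
    """Process items from event["cursor"] until time runs low.

    Returns the next cursor so a follow-up invocation can resume.
    """
    items = event["items"]
    cursor = event.get("cursor", 0)
    results = []
    while cursor < len(items):
        # Stop early rather than being killed mid-item by the timeout.
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break
        results.append(process_item(items[cursor]))
        cursor += 1
    return {"cursor": cursor, "done": cursor >= len(items), "results": results}


class FakeContext:
    """Local stand-in for the Lambda context: each check costs cost_ms."""

    def __init__(self, budget_ms, cost_ms):
        self.remaining = budget_ms
        self.cost = cost_ms

    def get_remaining_time_in_millis(self):
        remaining = self.remaining
        self.remaining -= self.cost
        return remaining


if __name__ == "__main__":
    first = lambda_handler({"items": [1, 2, 3, 4, 5, 6]}, FakeContext(13_000, 1_000))
    print(first)
    # A second invocation picks up where the first one stopped.
    second = lambda_handler({"items": [1, 2, 3, 4, 5, 6], "cursor": first["cursor"]},
                            FakeContext(13_000, 1_000))
    print(second)
```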

AWS Batch

AWS Batch is similar to AWS Lambda in that it can execute a process, but it supports longer-running processes. A batch job runs until the process is complete rather than being cut off by an arbitrary time or memory limit. Of course, there are always limits:

Batch jobs are often processes that run on a schedule without human interaction, though they can also be triggered by an event or started manually. One of the benefits of AWS Batch is the ability to use Spot Instances to process data, and I want to see whether that can save some money compared to how I currently process some data. That’s still to be determined.

Constructing a serverless architecture

We can leverage AWS Lambda and Batch to construct a larger process broken into smaller steps. Breaking up the system this way has some benefits:

- We can give each part of the process a smaller set of permissions.

- We can independently test each step of the process.

- We can log each step and give it a name to pinpoint errors in the process more easily.

- We can independently re-deploy each step if we have a bug.

- We can independently re-run each step if we have a problem with the input data.

- We can optimize our infrastructure for each step — for example more memory or more CPU.
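Here is a toy sketch, in plain Python rather than AWS services, of the benefits listed above: each step has a name used for logging, can be re-run on its own, and records the actions it would need. The `Step` class, `run_pipeline` function, and the S3 action names are illustrative assumptions, not part of any AWS API; in AWS, each step would be a separate Lambda function or Batch job whose own IAM role actually enforces those permissions.

```python
import logging
from dataclasses import dataclass, field
from typing import Callable

logging.basicConfig(level=logging.INFO, format="%(name)s: %(message)s")


@dataclass
class Step:
    """One independently testable, re-runnable step (hypothetical structure)."""
    name: str
    run: Callable[[dict], dict]
    # Actions this step would need. They are only logged here; in AWS the
    # step's own IAM role would enforce them.
    allowed_actions: set = field(default_factory=set)


def run_pipeline(steps, data, start_at=None):
    """Run steps in order, or re-run starting from the step named start_at."""
    started = start_at is None
    for step in steps:
        if not started and step.name != start_at:
            continue  # skip steps before the requested starting point
        started = True
        log = logging.getLogger(step.name)  # named logs pinpoint failing steps
        log.info("start, allowed actions: %s", sorted(step.allowed_actions))
        data = step.run(data)
        log.info("done")
    return data


steps = [
    Step("fetch", lambda d: {**d, "raw": [3, 1, 2]}, {"s3:GetObject"}),
    Step("sort", lambda d: {**d, "sorted": sorted(d["raw"])}),
    Step("store", lambda d: {**d, "stored": True}, {"s3:PutObject"}),
]

if __name__ == "__main__":
    print(run_pipeline(steps, {}))
    # Re-run from "sort" with corrected input data, skipping "fetch".
    print(run_pipeline(steps, {"raw": [9, 8]}, start_at="sort"))
```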

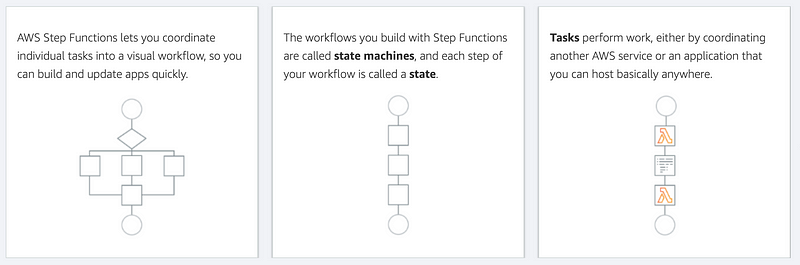

In fact, AWS offers a service built around this concept of constructing a system in steps: AWS Step Functions, which can orchestrate Lambda functions and other AWS services.

The core point from the AWS Step Functions documentation:

The workflows you build with Step Functions are called state machines, and each step of your workflow is called a state.
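For illustration, here is a small state machine definition in Amazon States Language, built as a Python dictionary so it can be serialized to JSON. The two Lambda function ARNs and state names are placeholders, and `state_order` is a hypothetical helper that only handles simple linear workflows; it just makes the "each step is a state" idea visible.

```python
import json

# Hypothetical Lambda function ARNs (111122223333 is a placeholder account).
VALIDATE_ARN = "arn:aws:lambda:us-east-1:111122223333:function:validate-input"
PROCESS_ARN = "arn:aws:lambda:us-east-1:111122223333:function:process-data"

# A two-state workflow in Amazon States Language. Each "Task" state is one
# step; "Next" names the following state and "End" marks the last one.
state_machine = {
    "Comment": "Sketch of a two-step process",
    "StartAt": "ValidateInput",
    "States": {
        "ValidateInput": {"Type": "Task", "Resource": VALIDATE_ARN, "Next": "ProcessData"},
        "ProcessData": {"Type": "Task", "Resource": PROCESS_ARN, "End": True},
    },
}


def state_order(definition):
    """Follow StartAt/Next links to list states in order (linear workflows only)."""
    order = []
    name = definition["StartAt"]
    while name is not None:
        order.append(name)
        state = definition["States"][name]
        name = None if state.get("End") else state.get("Next")
    return order


if __name__ == "__main__":
    print(json.dumps(state_machine, indent=2))  # the JSON definition
    print(state_order(state_machine))
```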

However, looking at the CloudFormation template, it already seems I won’t be using this for my initial use case. The AWS::StepFunctions::StateMachine resource takes a single RoleArn for the state machine as a whole. Lambda functions invoked by individual states still run under their own execution roles, but that one state machine role needs permission to invoke every step, and that doesn’t meet my requirements. One of my reasons for using distinct components is so that I can apply different permissions to different components.

We can also combine Lambda and AWS Batch with other AWS services so that our architecture can be triggered by humans, a schedule, or events. I was planning to build this part of the system with AWS Lambda triggering AWS Batch, and I just discovered this blog post, which may help as we implement the design.
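As a sketch of the Lambda-triggers-Batch idea, a handler could call `submit_job` on a boto3 AWS Batch client. The job queue and job definition names are placeholders, the `job_name` and `input` event fields are hypothetical, and passing input through a container environment variable is just one option. The client is injectable so the handler can be tested without AWS; in Lambda it falls back to a boto3 client that uses the function’s execution role.

```python
# Hypothetical resource names; in practice these would come from your own stacks.
JOB_QUEUE = "example-job-queue"
JOB_DEFINITION = "example-job-definition"


def lambda_handler(event, context, batch_client=None):
    """Submit an AWS Batch job in response to an event."""
    if batch_client is None:
        import boto3  # bundled with the Lambda Python runtime
        batch_client = boto3.client("batch")  # uses the function's execution role
    response = batch_client.submit_job(
        jobName=event.get("job_name", "example-job"),
        jobQueue=JOB_QUEUE,
        jobDefinition=JOB_DEFINITION,
        # Hand the event's input to the container as an environment variable.
        containerOverrides={
            "environment": [{"name": "JOB_INPUT", "value": str(event.get("input", ""))}]
        },
    )
    # submit_job returns the new job's ID (along with its name and ARN).
    return {"jobId": response["jobId"]}
```

Keeping the submit step in its own function means its role only needs permission to submit jobs to that queue, not to read the data the job processes.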

Security Through Separation of Concerns

If we break our system up into small components, we can give each component just enough permission to do what it needs to do. That way, if a particular component is compromised, its blast radius and potential damage will hopefully be limited.

As already demonstrated in prior posts, we can:

- Require MFA credentials to assume roles.

- Place restrictions on who can assume which roles.

- Give limited permission to each role.

- Use a separate role and policy for each job.

- Encrypt data with a specific KMS key for a specific process.

- Limit who can encrypt or decrypt a value related to a process.

- Limit who can create credentials for a process.
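For reference, here is roughly what two of those controls can look like as IAM policy documents, written as Python dictionaries so they can be serialized with `json.dumps`. It’s a sketch under assumptions: the user, account ID, and key ARN are placeholders, and a real job role would likely need more than `kms:Decrypt`. The trust policy uses the `aws:MultiFactorAuthPresent` condition key to require MFA when assuming the role; the permissions policy limits decryption to one process’s KMS key.

```python
import json

# Hypothetical identifiers for illustration only.
ACCOUNT_ID = "111122223333"
ADMIN_USER_ARN = f"arn:aws:iam::{ACCOUNT_ID}:user/batch-job-admin"
JOB_KEY_ARN = f"arn:aws:kms:us-east-1:{ACCOUNT_ID}:key/EXAMPLE-KEY-ID"

# Trust policy: only one named user may assume the role, and only with MFA.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"AWS": ADMIN_USER_ARN},
        "Action": "sts:AssumeRole",
        "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
    }],
}

# Permissions policy: this job's role may only decrypt with this job's key.
permissions_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": ["kms:Decrypt"],
        "Resource": JOB_KEY_ARN,
    }],
}

if __name__ == "__main__":
    print(json.dumps(trust_policy, indent=2))
    print(json.dumps(permissions_policy, indent=2))
```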

In the upcoming posts, I’ll show you how we can limit who can retrieve and use the credentials we stored. Our batch job will have permission to assume the appropriate role, but it will not be able to directly access the credentials if all goes according to plan.

Serverless components in our architecture

We’re going to use a series of Lambda functions to handle authentication for our batch jobs. The Lambda functions will make it easier to interact with a user to retrieve an MFA code. Then we can instantiate a session and kick off a batch job. Hopefully!
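A rough sketch of those authentication steps, using boto3’s `sts.assume_role` call with its `SerialNumber` and `TokenCode` parameters for MFA. Everything else is an assumption: the role and MFA device ARNs are placeholders, the function names are hypothetical, and how the MFA code reaches the function is left open. Clients are injectable so the logic can be exercised without AWS. Note that this sketch hands credentials back to the caller, while the plan described above aims to keep the batch job from touching credentials directly, so treat it as an illustration of the API calls rather than the final flow.

```python
# Hypothetical ARNs; 111122223333 is a placeholder account ID.
JOB_ROLE_ARN = "arn:aws:iam::111122223333:role/example-batch-job-role"
MFA_SERIAL = "arn:aws:iam::111122223333:mfa/example-user"


def start_session_with_mfa(mfa_code, sts_client=None):
    """Exchange a user's MFA code for temporary credentials for the job role."""
    # STS expects a six-digit code; reject anything else before calling AWS.
    if len(mfa_code) != 6 or not mfa_code.isdigit():
        raise ValueError("MFA code must be six digits")
    if sts_client is None:
        import boto3  # deferred so the functions can be tested without boto3
        sts_client = boto3.client("sts")
    response = sts_client.assume_role(
        RoleArn=JOB_ROLE_ARN,
        RoleSessionName="batch-job-session",
        SerialNumber=MFA_SERIAL,
        TokenCode=mfa_code,
    )
    creds = response["Credentials"]
    # Keys match the keyword arguments boto3.client() accepts.
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }


def batch_client_for(session_creds, client_factory=None):
    """Create an AWS Batch client that uses the temporary credentials."""
    if client_factory is None:
        import boto3
        client_factory = boto3.client
    return client_factory("batch", **session_creds)
```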

Follow for updates.

Teri Radichel | © 2nd Sight Lab 2022

About Teri Radichel:

~~~~~~~~~~~~~~~~~~~~

⭐️ Author: Cybersecurity Books

⭐️ Presentations: Presentations by Teri Radichel

⭐️ Recognition: SANS Award, AWS Security Hero, IANS Faculty

⭐️ Certifications: SANS ~ GSE 240

⭐️ Education: BA Business, Master of Software Engineering, Master of Infosec

⭐️ Company: Penetration Tests, Assessments, Phone Consulting ~ 2nd Sight Lab

Need Help With Cybersecurity, Cloud, or Application Security?

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

🔒 Request a penetration test or security assessment

🔒 Schedule a consulting call

🔒 Cybersecurity Speaker for Presentation

Follow for more stories like this:

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

❤️ Sign up for my Medium email list

❤️ Twitter: @teriradichel

❤️ LinkedIn: https://www.linkedin.com/in/teriradichel

❤️ Mastodon: @teriradichel@infosec.exchange

❤️ Facebook: 2nd Sight Lab

❤️ YouTube: @2ndsightlab